7 Digital soil mapping

7.1 Introduction

7.1.1 Purpose

We present here the methodology for the digital soil mapping based on the soil values from the spectra prediction.

7.1.2 Covariates

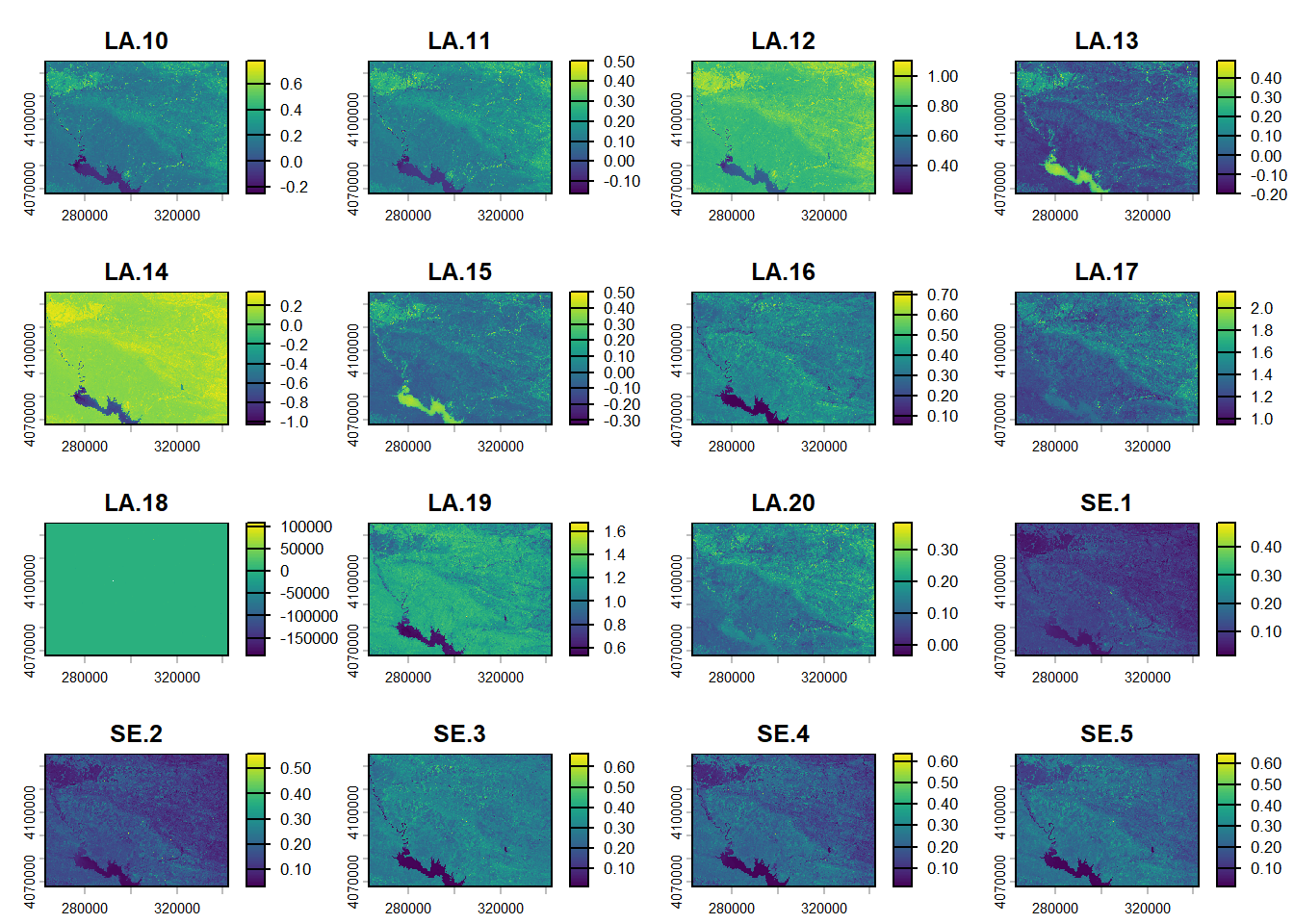

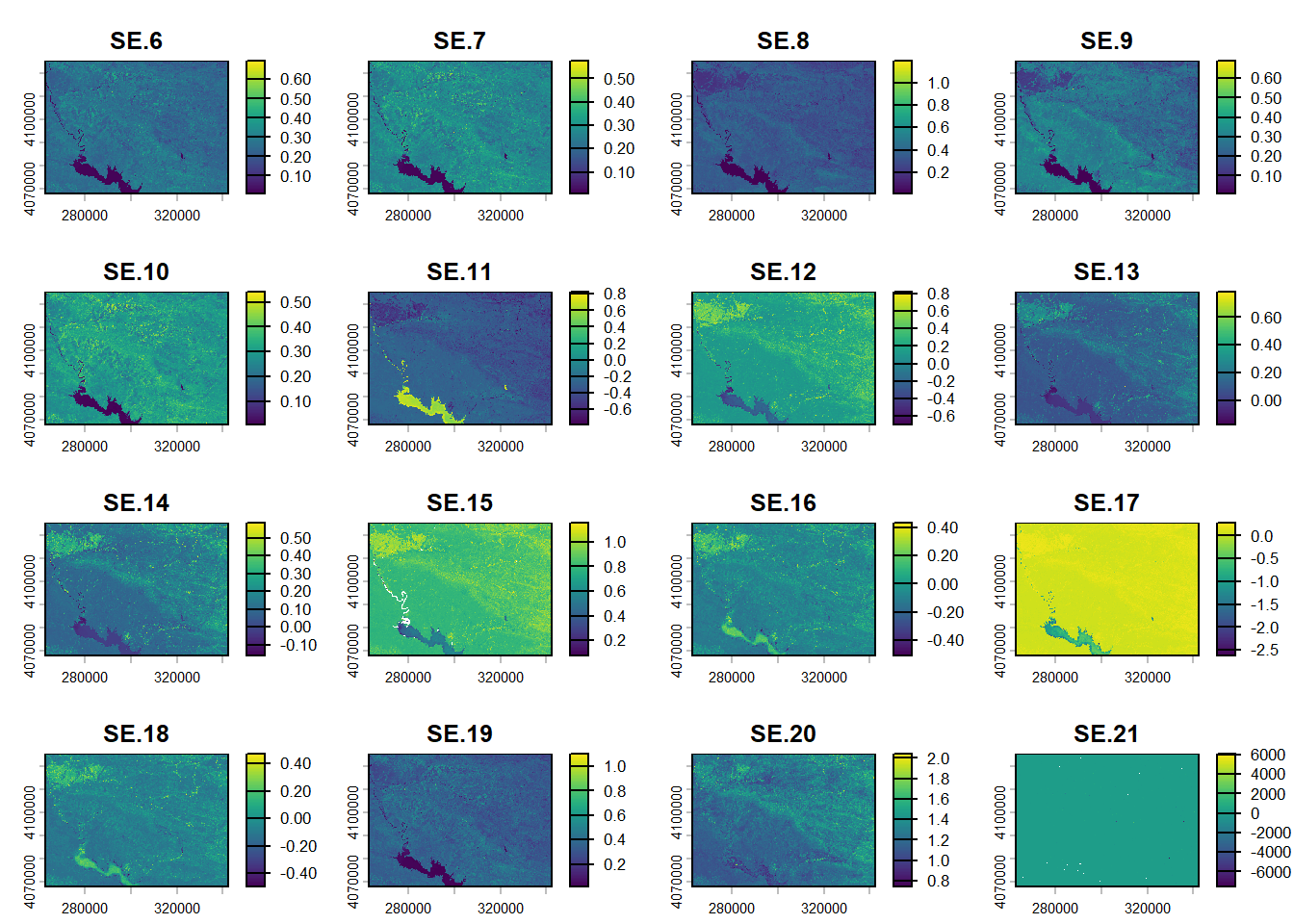

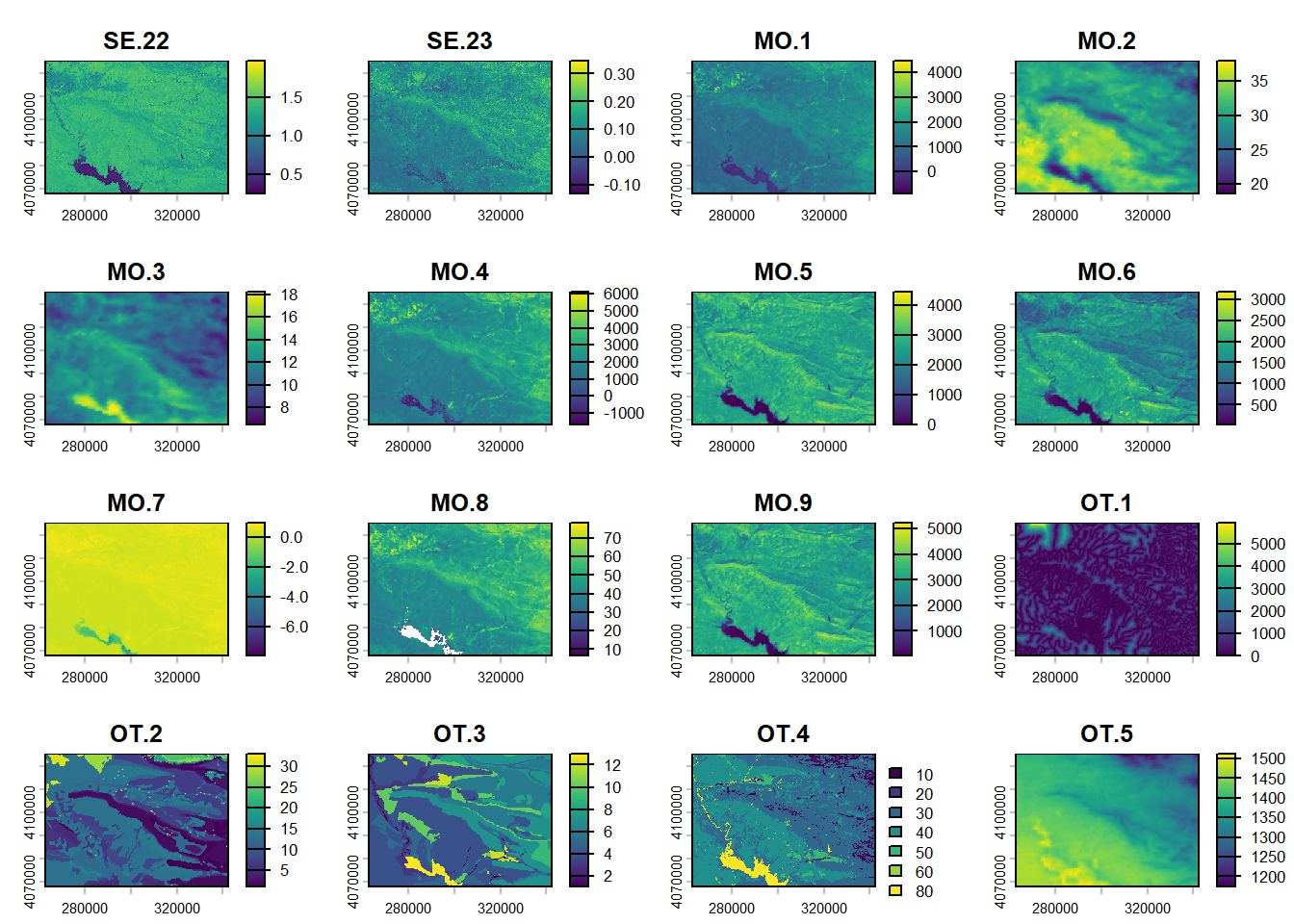

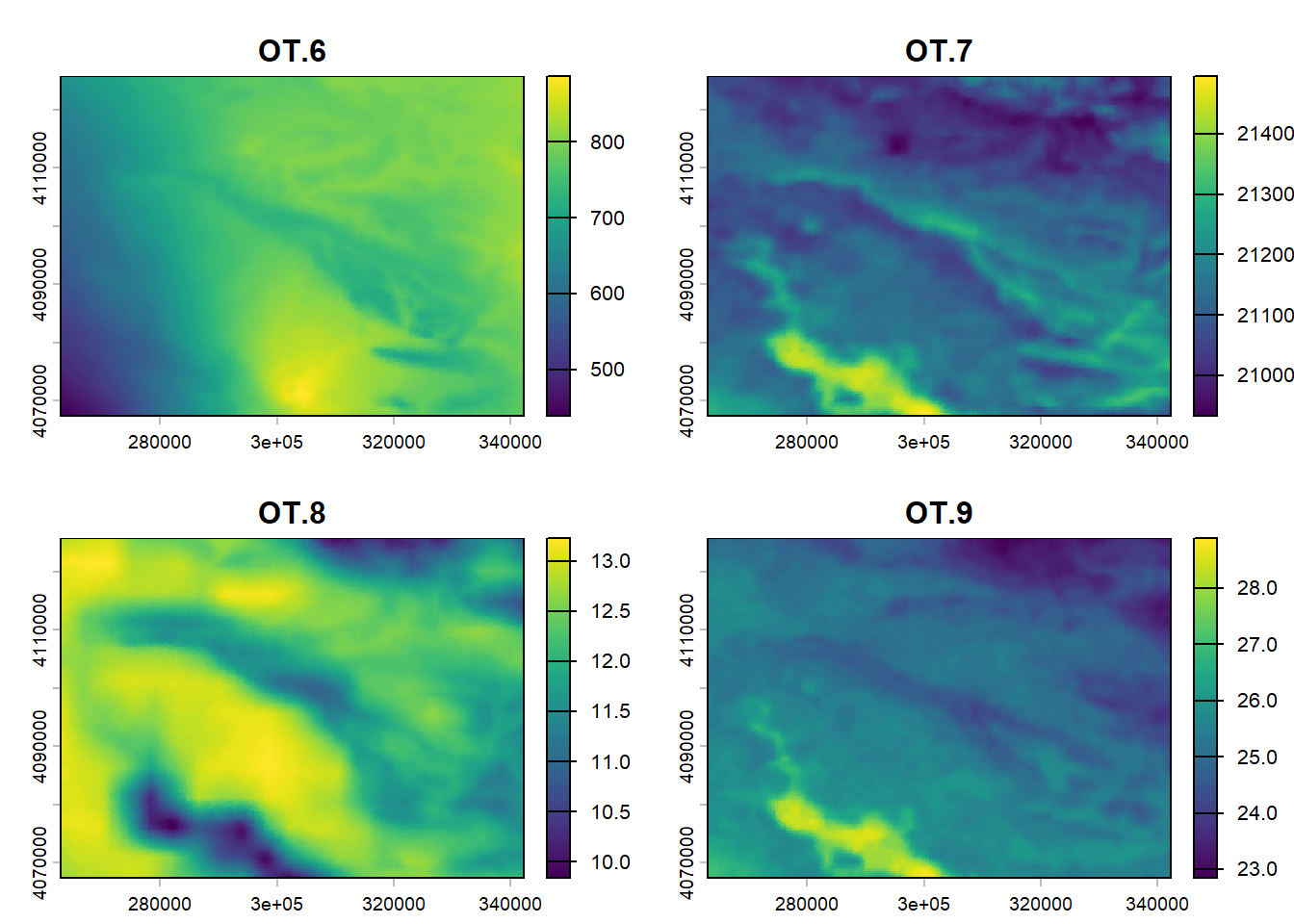

We used a set of 85 covariates mainly based on Zolfaghari Nia et al. (2022). All the data listed below are available freely online excepted for the digitized maps (geology, geohydrology and geomorphology).

| Name/ID | Original resolution (m) | Type/Unit |

|---|---|---|

| Landsat 8 Blue/LA.1 | 30 | 0.45 - 0.51 µm |

| Landsat 8 Green/LA.2 | 30 | 0.53 - 0.59 µm |

| Landsat 8 NDVI/LA.3 | 30 | - |

| Landsat 8 NDWI/LA.4 | 30 | - |

| Landsat 8 NIR/LA.5 | 30 | 0.85 - 0.88 µm |

| Landsat 8 Panchromatic/LA.6 | 15 | 0.52 - 0.90 µm |

| Landsat 8 Red/LA.7 | 30 | 0.64 - 0.67 µm |

| Landsat 8 SWIR1/LA.8 | 30 | 1.57 - 1.65 µm |

| Landsat 8 SWIR2/LA.9 | 30 | 2.11 - 2.29 µm |

| Landsat 8 EVI/LA.10 | 30 | - |

| Landsat 8 SAVI/LA.11 | 30 | - |

| Landsat 8 NDMI/LA.12 | 30 | - |

| Landsat 8 CORSI/LA.13 | 30 | - |

| Landsat 8 Brightness index/LA.14 | 30 | - |

| Landsat 8 Clay index/LA.15 | 30 | - |

| Landsat 8 Salinity index/LA.16 | 30 | - |

| Landsat 8 Carbonate index/LA.17 | 30 | - |

| Landsat 8 Gypsum index/LA.18 | 30 | - |

| MODIS EVI/MO.1 | 300 | - |

| MODIS LST day/MO.2 | 1000 | °C |

| MODIS LST night/MO.2 | 1000 | °C |

| MODIS NDVI/MO.4 | 300 | - |

| MODIS NIR/MO.5 | 300 | 0.841 - 0.876 µm |

| MODIS Red/MO.6 | 300 | 0.62 - 0.67 µm |

| MODIS SAVI/MO.7 | 300 | Meters |

| MODIS Brightness index/MO.8 | 300 | 35 classes |

| Distance rivers/OT.1 | 30 | 17 classes |

| Geology/OT.2 | 1 : 300 000 | 11 classes |

| Geomorphology/OT.3 | 1 : 300 000 | mm |

| Landuses/OT.4 | 10 | mm |

| PET sum/OT.5 | 750 | Kj m\(^{-2}\) |

| Prec. sum/OT.6 | 1000 | °C |

| SRAD sum/OT.7 | 1000 | m s\(^{-1}\) |

| Diff Max. Min. Temp./OT.8 | 1000 | 0.492 - 0.496 µm |

| Wind sum/OT.9 | 1000 | 0.559 - 0.560 µm |

| Sentinel 2 Blue/SE.1 | 10 | - |

| Sentinel 2 Green/SE.2 | 10 | - |

| Sentinel 2 NDVI/SE.3 | 20 | 0.833 - 0.835 µm |

| Sentinel 2 NDWI/SE.4 | 20 | 0.665 - 0.664 µm |

| Sentinel 2 NIR/SE.5 | 10 | 0.738 - 0.739 µm |

| Sentinel 2 Red/SE.6 | 10 | 0.739 - 0.740 µm |

| Sentinel 2 RedEdge1/SE.7 | 20 | 0.779 - 0.782 µm |

| Sentinel 2 RedEdge2/SE.8 | 20 | 1.610 - 1.613 µm |

| Sentinel 2 RedEdge3/SE.9 | 20 | 2.185 - 2.202 µm |

| Sentinel 2 SWIR1/SE.10 | 20 | 0.943 - 0.945 µm |

| Sentinel 2 SWIR2/SE.11 | 20 | - |

| Sentinel 2 water vapor/SE.12 | 90 | - |

| Sentinel 2 EVI/SE.13 | 20 | - |

| Sentinel 2 TVI/SE.14 | 20 | - |

| Sentinel 2 SAVI/SE.15 | 20 | - |

| Sentinel 2 LSWI/SE.16 | 20 | - |

| Sentinel 2 Clay index/SE.17 | 20 | - |

| Sentinel 2 Brightness index/SE.18 | 20 | - |

| Sentinel 2 Salinity index/SE.19 | 20 | - |

| Sentinel 2 Carbonate index/SE.20 | 20 | Radians |

| Sentinel 2 Gypsum index/SE.21 | 20 | - |

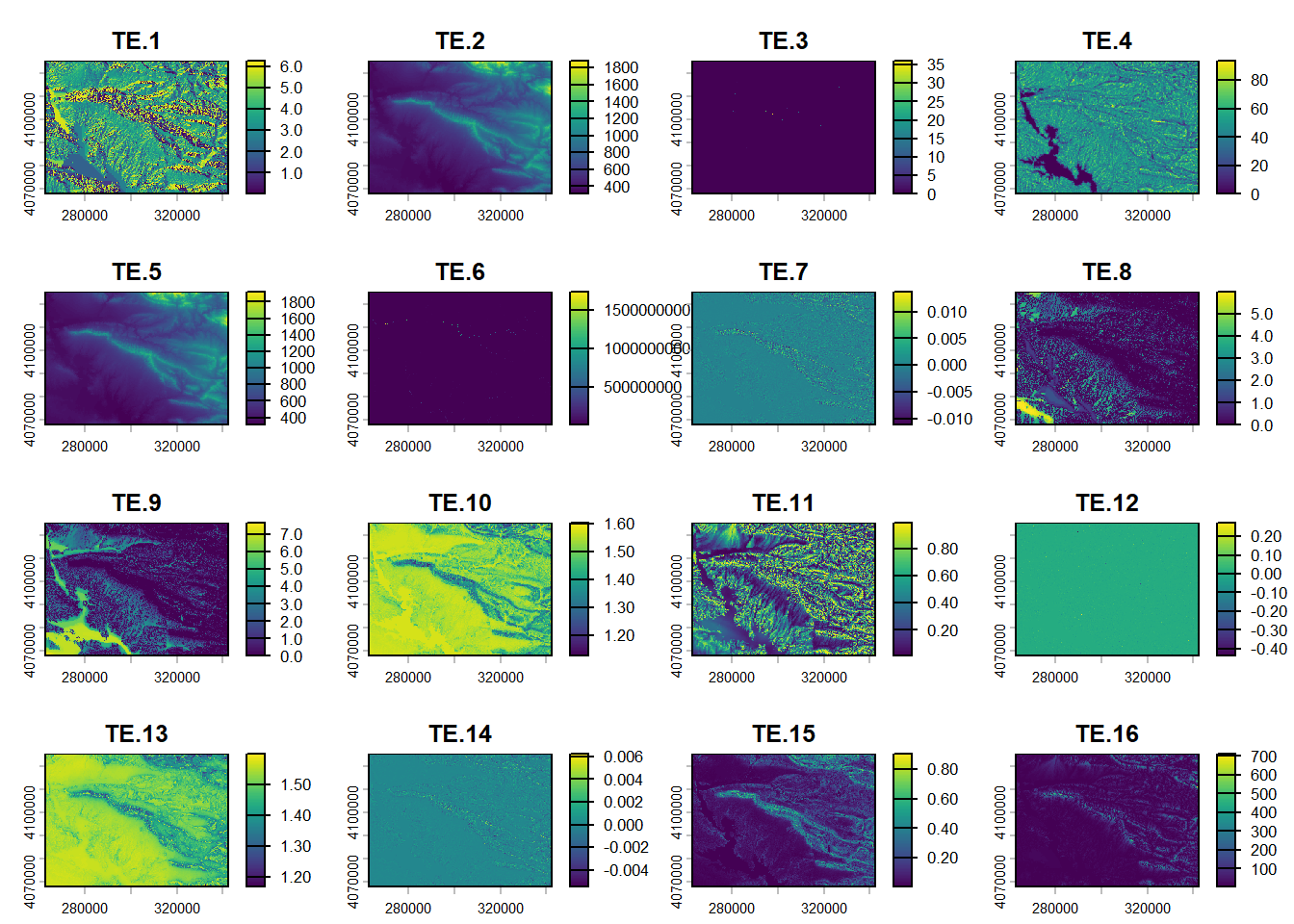

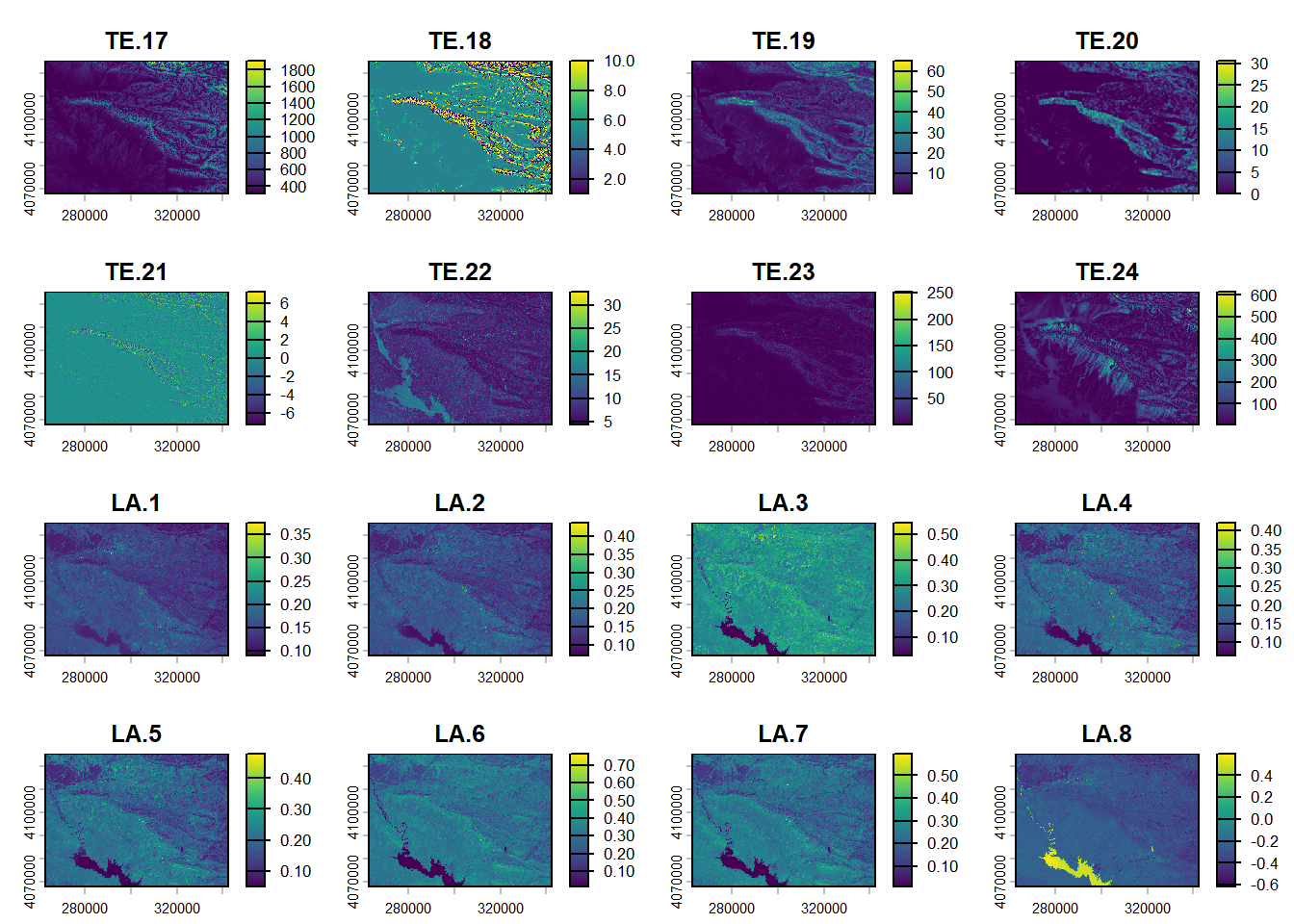

| Aspect/TE.1 | 30 | - |

| Channel network base level/TE.2 | 30 | - |

| Channel network distance/TE.3 | 30 | Meters |

| Connexity/TE.4 | 30 | - |

| DEM/TE.5 | 30 | - |

| Flow accumaltion/TE.6 | 30 | - |

| General curvature/TE.7 | 30 | - |

| MrRTF/TE.8 | 30 | Radians |

| MrVBF/TE.9 | 30 | - |

| Negative openness/TE.10 | 30 | - |

| Normalized height/TE.11 | 30 | Radians |

| Plan curvature/TE.12 | 30 | - |

| Positive openness/TE.13 | 30 | - |

| Profile curvature/TE.14 | 30 | Radians |

| Slope height/TE.15 | 30 | - |

| Slope/TE.16 | 30 | - |

| Standardized height/TE.17 | 30 | - |

| Surface landform/TE.18 | 30 | - |

| Terrain ruggedness index/TE.19 | 30 | - |

| Terrain texture/TE.20 | 30 | - |

| TPI/TE.21 | 30 | - |

| TWI/TE.22 | 30 | - |

| Total catchment area/TE.23 | 30 | 0.45 - 0.51 µm |

| Valley depth/TE.24 | 30 | 0.53 - 0.59 µm |

We create an Python API to access the Google Earth Engine plateforme. You can read to the ‘ipynb’ file for more info.

import ee

import geemap

import rasterio

from rasterio.features import shapes

import geopandas as gpd

from shapely.geometry import mapping

from shapely.geometry import shape

import os

from pydrive.auth import GoogleAuth

from pydrive.drive import GoogleDrive

ee.Authenticate()

ee.Initialize()

# Local file

raster_path = r".\data\Large_grid.tif"

with rasterio.open(raster_path) as src:

image = src.read(1)

mask = image > 0

results = (

{'properties': {'value': v}, 'geometry': s}

for i, (s, v) in enumerate(

shapes(image, mask=mask, transform=src.transform))

)

# Convert in GeoDataFrame

geoms = list(results)

gdf = gpd.GeoDataFrame.from_features(geoms)

# Create a polygon and gee object

aoi_poly = gdf.union_all()

aoi = ee.Geometry(mapping(aoi_poly))

Map = geemap.Map()

Map.centerObject(aoi, 8)

Map.addLayer(aoi, {}, "AOI")

Map

def maskS2clouds(image):

qa = image.select('QA60')

cloudBitMask = 1 << 10

cirrusBitMask = 1 << 11

mask = qa.bitwiseAnd(cloudBitMask).eq(0).And(

qa.bitwiseAnd(cirrusBitMask).eq(0))

return image.updateMask(mask).divide(10000)

dataset = (

ee.ImageCollection("COPERNICUS/S2_SR_HARMONIZED")

.filterDate("2021-01-01", "2022-01-31") # period

.filter(ee.Filter.lt("CLOUDY_PIXEL_PERCENTAGE", 20)) # cloud < 20%

.map(maskS2clouds)

)

# Median

S2_composite = dataset.median().clip(aoi)

def maskL8clouds(image):

qa = image.select('QA_PIXEL')

cloudBit = 1 << 3

cloudShadowBit = 1 << 4

# Cirrus have not been considered as it provides only black cells

# cirrusConfMask = 1 << 14

mask = (qa.bitwiseAnd(cloudBit).eq(0)

.And(qa.bitwiseAnd(cloudShadowBit).eq(0)))

return image.updateMask(mask)

dataset = (

ee.ImageCollection("LANDSAT/LC08/C02/T1_TOA")

.filterDate("2021-01-01", "2022-01-01") # period

.map(maskL8clouds)

)

# Median

L8_composite = dataset.median().clip(aoi)

modisLST = ee.ImageCollection("MODIS/061/MOD11A2")

modisLSTFiltered = modisLST.filterDate("2021-01-01", "2022-01-31").filterBounds(aoi)

modisrefl = ee.ImageCollection('MODIS/061/MOD09Q1')

modisreflFiltered = modisrefl.filterDate("2021-01-01", "2022-01-31").filterBounds(aoi)

modisvege = ee.ImageCollection("MODIS/061/MOD13Q1")

modisvegeFiltered = modisvege.filterDate("2021-01-01", "2022-01-31").filterBounds(aoi)

def kelvin_to_celsius(image):

return image.multiply(0.02).subtract(273.15).rename('LST_Celsius')

lstDay = modisLSTFiltered.select('LST_Day_1km').map(kelvin_to_celsius)

lstDayMedian = lstDay.median().clip(aoi)

lstNight = modisLSTFiltered.select('LST_Night_1km').map(kelvin_to_celsius)

lstNightMedian = lstNight.median().clip(aoi)

redBand = modisreflFiltered.select('sur_refl_b01').median().clip(aoi)

nirBand = modisreflFiltered.select('sur_refl_b02').median().clip(aoi)

NDVIBand = modisvegeFiltered.select('NDVI').median().clip(aoi)

EVIBand = modisvegeFiltered.select('EVI').median().clip(aoi)

# PET

et_monthly = ee.ImageCollection("projects/sat-io/open-datasets/global_et0/global_et0_monthly")

# Select all the months

months = ['et0_V3_01','et0_V3_02','et0_V3_03','et0_V3_04','et0_V3_05','et0_V3_06','et0_V3_07','et0_V3_08',

'et0_V3_09','et0_V3_10','et0_V3_11','et0_V3_12']

subset = et_monthly.filter(ee.Filter.inList('system:index', months))

# Sum of PET

pet_select = subset.sum().rename('ET0_sum').clip(aoi)

# DEM

dem = ee.ImageCollection("COPERNICUS/DEM/GLO30").select("DEM").mosaic().clip(aoi)

Landuse = ee.ImageCollection('ESA/WorldCover/v200').first().clip(aoi)

geemap.ee_export_image(

Landuse,

filename=r".\data\Others\Landuse_raw.tif",

scale=30,

region=aoi,

crs="EPSG:32638"

)

geemap.ee_export_image(

pet_select,

filename=r".\data\Others\PET_raw.tif",

scale=800,

region=aoi,

crs="EPSG:32638"

)

geemap.ee_export_image(

lstDayMedian,

filename=r".\data\MODIS\MODIS_LST_day_raw.tif",

scale=1000,

region=aoi,

crs="EPSG:32638"

)

geemap.ee_export_image(

lstNightMedian,

filename=r".\data\MODIS\MODIS_LST_night_raw.tif",

scale=1000,

region=aoi,

crs="EPSG:32638"

)

geemap.ee_export_image(

redBand,

filename=r".\data\MODIS\MODIS_Red_raw.tif",

scale=250,

region=aoi,

crs="EPSG:32638"

)

geemap.ee_export_image(

nirBand,

filename=r".\data\MODIS\MODIS_NIR_raw.tif",

scale=250,

region=aoi,

crs="EPSG:32638"

)

geemap.ee_export_image(

NDVIBand,

filename=r".\data\MODIS\MODIS_NDVI_raw.tif",

scale=250,

region=aoi,

crs="EPSG:32638"

)

geemap.ee_export_image(

EVIBand,

filename=r".\data\MODIS\MODIS_EVI_raw.tif",

scale=250,

region=aoi,

crs="EPSG:32638"

)

task = ee.batch.Export.image.toDrive(

image=dem,

description = f"DEM_raw",

scale = 30,

region = aoi,

crs = 'EPSG:32638',

folder = 'GoogleEarthEngine',

maxPixels = 1e13

)

task.start()

S2_bands = ['B2', 'B3', 'B4', 'B5', 'B6', 'B7', 'B8', 'B9', 'B11', 'B12']

for band in S2_bands:

task = ee.batch.Export.image.toDrive(

image=S2_composite.select([band]),

description = f"Sentinel2_{band}_2021_MedianComposite",

scale = 30,

region = aoi,

crs = 'EPSG:32638',

folder = 'GoogleEarthEngine',

maxPixels = 1e13

)

task.start()

L8_bands = ['B2', 'B3', 'B4', 'B5', 'B6', 'B7', 'B8']

for band in L8_bands:

task = ee.batch.Export.image.toDrive(

image=L8_composite.select([band]),

description = f"Landsat8_{band}_2021_MedianComposite",

scale = 30,

region = aoi,

crs = 'EPSG:32638',

folder = 'GoogleEarthEngine',

maxPixels = 1e13

)

task.start()7.1.2.1 Terrain

For the DEM and all the derivatives, we used SAGA GIS 9.3.1 software and all the specificties of the batch process are detailed below. The last LS factor corresponds to the Total catchment area covariate.

[2025-09-24/01:09:32] [Fill Sinks (Wang & Liu)] Execution started...

__________

[Fill Sinks (Wang & Liu)] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

DEM: DEM_raw

Filled DEM: Filled DEM

Flow Directions: Flow Directions

Watershed Basins: Watershed Basins

Minimum Slope [Degree]: 0.1

__________

total execution time: 7000 milliseconds (07s)

[2025-09-24/01:09:39] [Fill Sinks (Wang & Liu)] Execution succeeded (07s)

[2025-09-24/01:18:18] [Simple Filter] Execution started...

__________

[Simple Filter] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Grid: DEM_raw [no sinks]

Filtered Grid: Filtered Grid

Filter: Smooth

Kernel Type: Square

Radius: 3

__________

total execution time: 3000 milliseconds (03s)

[2025-09-24/01:18:21] [Simple Filter] Execution succeeded (03s)

[2025-09-24/01:21:25] [Slope, Aspect, Curvature] Execution started...

__________

[Slope, Aspect, Curvature] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Slope: Slope

Aspect: Aspect

Northness: <not set>

Eastness: <not set>

General Curvature: General Curvature

Profile Curvature: Profile Curvature

Plan Curvature: Plan Curvature

Tangential Curvature: <not set>

Longitudinal Curvature: <not set>

Cross-Sectional Curvature: <not set>

Minimal Curvature: <not set>

Maximal Curvature: <not set>

Total Curvature: <not set>

Flow Line Curvature: <not set>

Method: 9 parameter 2nd order polynom (Zevenbergen & Thorne 1987)

Unit: radians

Unit: radians

__________

total execution time: 1000 milliseconds (01s)

[2025-09-24/01:21:27] [Slope, Aspect, Curvature] Execution succeeded (01s)

[2025-09-24/16:21:11] [Channel Network and Drainage Basins] Execution started...

__________

[Channel Network and Drainage Basins] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Elevation: DEM [no sinks] [Smoothed]

Flow Direction: Flow Direction

Flow Connectivity: <not set>

Strahler Order: <not set>

Drainage Basins: Drainage Basins

Channels: Channels

Drainage Basins: Drainage Basins

Junctions: <not set>

Threshold: 5

Subbasins: false

__________

[Vectorizing Grid Classes] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Grid: Drainage Basins

Polygons: Polygons

Class Selection: all classes

Vectorised class as...: one single (multi-)polygon object

Keep Vertices on Straight Lines: false

[Vectorizing Grid Classes] execution time: 05s

__________

total execution time: 9000 milliseconds (09s)

[2025-09-24/16:21:20] [Channel Network and Drainage Basins] Execution succeeded (09s)

[2025-09-24/01:26:59] [Channel Network] Execution started...

__________

[Channel Network] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Flow Direction: Flow Direction

Channel Network: Channel Network

Channel Direction: Channel Direction

Channel Network: Channel Network

Initiation Grid: DEM_raw [no sinks] [Smoothed]

Initiation Type: Greater than

Initiation Threshold: 0

Divergence: <not set>

Tracing: Max. Divergence: 5

Tracing: Weight: <not set>

Min. Segment Length: 10

__________

total execution time: 142000 milliseconds (02m 22s)

[2025-09-24/01:29:21] [Channel Network] Execution succeeded (02m 22s)

[2025-09-24/01:30:53] [Multiresolution Index of Valley Bottom Flatness (MRVBF)] Execution started...

__________

[Multiresolution Index of Valley Bottom Flatness (MRVBF)] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

MRVBF: MRVBF

MRRTF: MRRTF

Initial Threshold for Slope: 16

Threshold for Elevation Percentile (Lowness): 0.4

Threshold for Elevation Percentile (Upness): 0.35

Shape Parameter for Slope: 4

Shape Parameter for Elevation Percentile: 3

Update Views: true

Classify: false

Maximum Resolution (Percentage): 100

step: 1, resolution: 30.00, threshold slope 16.00

step: 2, resolution: 30.00, threshold slope 8.00

step: 3, resolution: 90.00, threshold slope 4.00

step: 4, resolution: 270.00, threshold slope 2.00

step: 5, resolution: 810.00, threshold slope 1.00

step: 6, resolution: 2430.00, threshold slope 0.50

step: 7, resolution: 7290.00, threshold slope 0.25

step: 8, resolution: 21870.00, threshold slope 0.12

step: 9, resolution: 65610.00, threshold slope 0.06

step: 10, resolution: 196830.00, threshold slope 0.03

__________

total execution time: 105000 milliseconds (01m 45s)

[2025-09-24/01:32:38] [Multiresolution Index of Valley Bottom Flatness (MRVBF)] Execution succeeded (01m 45s)

[2025-09-24/01:33:26] [Topographic Openness] Execution started...

__________

[Topographic Openness] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Positive Openness: Positive Openness

Negative Openness: Negative Openness

Radial Limit: 10000

Directions: all

Number of Sectors: 8

Method: line tracing

Unit: Radians

Difference from Nadir: true

__________

total execution time: 101000 milliseconds (01m 41s)

[2025-09-24/01:35:07] [Topographic Openness] Execution succeeded (01m 41s)

[2025-09-24/02:11:27] [Vertical Distance to Channel Network] Execution started...

__________

[Vertical Distance to Channel Network] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Channel Network: Channel Network

Vertical Distance to Channel Network: Vertical Distance to Channel Network

Channel Network Base Level: Channel Network Base Level

Tension Threshold: 1

Maximum Iterations: 0

Keep Base Level below Surface: true

Level: 12; Iterations: 1; Maximum change: 0.000000

Level: 11; Iterations: 1; Maximum change: 0.000000

Level: 10; Iterations: 1; Maximum change: 0.000000

Level: 9; Iterations: 1; Maximum change: 0.000000

Level: 8; Iterations: 1; Maximum change: 0.000000

Level: 7; Iterations: 1; Maximum change: 0.000000

Level: 6; Iterations: 1; Maximum change: 0.000000

Level: 5; Iterations: 1; Maximum change: 0.000000

Level: 4; Iterations: 4; Maximum change: 0.354675

Level: 3; Iterations: 6; Maximum change: 0.844788

Level: 2; Iterations: 15; Maximum change: 0.925110

Level: 1; Iterations: 20; Maximum change: 0.932373

__________

total execution time: 13000 milliseconds (13s)

[2025-09-24/02:11:40] [Vertical Distance to Channel Network] Execution succeeded (13s)

[2025-09-24/01:38:34] [Terrain Surface Convexity] Execution started...

__________

[Terrain Surface Convexity] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Convexity: Convexity

Laplacian Filter Kernel: conventional four-neighbourhood

Type: convexity

Flat Area Threshold: 0

Scale (Cells): 10

Method: resampling

Weighting Function: gaussian

Bandwidth: 0.7

__________

total execution time: 1000 milliseconds (01s)

[2025-09-24/01:38:35] [Terrain Surface Convexity] Execution succeeded (01s)

[2025-09-24/16:53:51] [Flow Accumulation (One Step)] Execution started...

__________

[Flow Accumulation (One Step)] Parameters:

Elevation: DEM [no sinks] [Smoothed]

Flow Accumulation: Flow Accumulation

Specific Catchment Area: Specific Catchment Area

Preprocessing: Fill Sinks (Wang & Liu)

Flow Routing: Deterministic Infinity

__________

[Fill Sinks XXL (Wang & Liu)] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

DEM: DEM [no sinks] [Smoothed]

Filled DEM: Filled DEM

Minimum Slope [Degree]: 0.0001

[Fill Sinks XXL (Wang & Liu)] execution time: 03s

__________

[Flow Accumulation (Top-Down)] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Elevation: DEM [no sinks] [Smoothed] [no sinks]

Sink Routes: <not set>

Weights: <not set>

Flow Accumulation: Flow Accumulation

Input for Mean over Catchment: <not set>

Material for Accumulation: <not set>

Step: 1

Flow Accumulation Unit: cell area

Flow Path Length: <not set>

Channel Direction: <not set>

Method: Deterministic Infinity

Thresholded Linear Flow: false

[Flow Accumulation (Top-Down)] execution time: 07s

__________

[Flow Width and Specific Catchment Area] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Elevation: DEM [no sinks] [Smoothed] [no sinks]

Flow Width: Flow Width

Total Catchment Area (TCA): Flow Accumulation

Specific Catchment Area (SCA): Specific Catchment Area (SCA)

Coordinate Unit: meter

Method: Aspect

[Flow Width and Specific Catchment Area] execution time: 01s

__________

total execution time: 11000 milliseconds (11s)

[2025-09-24/16:54:02] [Flow Accumulation (One Step)] Execution succeeded (11s)

[2025-09-24/01:41:22] [Relative Heights and Slope Positions] Execution started...

__________

[Relative Heights and Slope Positions] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Slope Height: Slope Height

Valley Depth: Valley Depth

Normalized Height: Normalized Height

Standardized Height: Standardized Height

Mid-Slope Position: Mid-Slope Position

w: 0.5

t: 10

e: 2

[2025-09-24/01:41:22] Pass 1

[2025-09-24/01:42:51] Pass 2

__________

total execution time: 214000 milliseconds (03m 34s)

[2025-09-24/01:44:56] [Relative Heights and Slope Positions] Execution succeeded (03m 34s)

[2025-09-24/01:45:50] [TPI Based Landform Classification] Execution started...

__________

[TPI Based Landform Classification] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Landforms: Landforms

Small Scale: 0; 100

Large Scale: 0; 1000

Weighting Function: no distance weighting

__________

[Topographic Position Index (TPI)] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Topographic Position Index: Topographic Position Index

Standardize: true

Scale: 0; 100

Weighting Function: no distance weighting

[Topographic Position Index (TPI)] execution time: 02s

__________

[Topographic Position Index (TPI)] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Topographic Position Index: Topographic Position Index

Standardize: true

Scale: 0; 1000

Weighting Function: no distance weighting

[Topographic Position Index (TPI)] execution time: 03m 51s

__________

total execution time: 234000 milliseconds (03m 54s)

[2025-09-24/01:49:44] [TPI Based Landform Classification] Execution succeeded (03m 54s)

[2025-09-24/01:51:16] [Topographic Position Index (TPI)] Execution started...

__________

[Topographic Position Index (TPI)] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Topographic Position Index: Topographic Position Index

Standardize: true

Scale: 0; 100

Weighting Function: no distance weighting

__________

total execution time: 3000 milliseconds (03s)

[2025-09-24/01:51:19] [Topographic Position Index (TPI)] Execution succeeded (03s)

[2025-09-24/01:51:58] [Terrain Surface Texture] Execution started...

__________

[Terrain Surface Texture] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Texture: Texture

Flat Area Threshold: 1

Scale (Cells): 10

Method: resampling

Weighting Function: gaussian

Bandwidth: 0.7

__________

total execution time: 4000 milliseconds (04s)

[2025-09-24/01:52:02] [Terrain Surface Texture] Execution succeeded (04s)

[2025-09-24/17:05:39] [Terrain Ruggedness Index (TRI)] Execution started...

__________

[Terrain Ruggedness Index (TRI)] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Elevation: DEM [no sinks] [Smoothed]

Terrain Ruggedness Index (TRI): Terrain Ruggedness Index (TRI)

Search Mode: Square

Search Radius: 3

Weighting Function: no distance weighting

__________

total execution time: 2000 milliseconds (02s)

[2025-09-24/17:05:41] [Terrain Ruggedness Index (TRI)] Execution succeeded (02s)

[2025-09-24/17:08:48] [Topographic Wetness Index (One Step)] Execution started...

__________

[Topographic Wetness Index (One Step)] Parameters:

Elevation: DEM [no sinks] [Smoothed]

Topographic Wetness Index: Topographic Wetness Index

Flow Distribution: Deterministic Infinity

__________

[Sink Removal] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

DEM: DEM [no sinks] [Smoothed]

Sink Route: <not set>

Preprocessed DEM: Preprocessed DEM

Method: Fill Sinks

Threshold: false

Epsilon: 0.001

number of processed sinks: 2266

[Sink Removal] execution time: 13s

__________

[Flow Accumulation (Top-Down)] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Elevation: DEM [no sinks] [Smoothed] [no sinks]

Sink Routes: <not set>

Weights: <not set>

Flow Accumulation: Flow Accumulation

Input for Mean over Catchment: <not set>

Material for Accumulation: <not set>

Step: 1

Flow Accumulation Unit: cell area

Flow Path Length: <not set>

Channel Direction: <not set>

Method: Deterministic Infinity

Thresholded Linear Flow: false

[Flow Accumulation (Top-Down)] execution time: 06s

__________

[Flow Width and Specific Catchment Area] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Elevation: DEM [no sinks] [Smoothed] [no sinks]

Flow Width: Flow Width

Total Catchment Area (TCA): Flow Accumulation

Specific Catchment Area (SCA): Specific Catchment Area (SCA)

Coordinate Unit: meter

Method: Aspect

[Flow Width and Specific Catchment Area] execution time: 01s

__________

[Slope, Aspect, Curvature] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Elevation: DEM [no sinks] [Smoothed] [no sinks]

Slope: Slope

Aspect: <not set>

Northness: <not set>

Eastness: <not set>

General Curvature: <not set>

Profile Curvature: <not set>

Plan Curvature: <not set>

Tangential Curvature: <not set>

Longitudinal Curvature: <not set>

Cross-Sectional Curvature: <not set>

Minimal Curvature: <not set>

Maximal Curvature: <not set>

Total Curvature: <not set>

Flow Line Curvature: <not set>

Method: 9 parameter 2nd order polynom (Zevenbergen & Thorne 1987)

Unit: radians

Unit: radians

[Slope, Aspect, Curvature] execution time: 01s

__________

[Topographic Wetness Index] Parameters:

Grid System: 30; 2641x 1940y; 262905x 4067505y

Slope: Slope

Catchment Area: Specific Catchment Area (SCA)

Transmissivity: <not set>

Topographic Wetness Index: Topographic Wetness Index

Area Conversion: no conversion (areas already given as specific catchment area)

Method (TWI): Standard

[Topographic Wetness Index] execution time: less than 1 millisecond

__________

total execution time: 22000 milliseconds (22s)

[2025-09-24/17:09:11] [Topographic Wetness Index (One Step)] Execution succeeded (22s)

[2025-09-24/01:58:44] [LS Factor (One Step)] Execution started...

__________

[LS Factor (One Step)] Parameters:

DEM: DEM_raw [no sinks] [Smoothed]

LS Factor: LS Factor

Feet Conversion: false

Method: Moore et al. 1991

Preprocessing: none

Minimum Slope: 0.0001

__________

[Slope, Aspect, Curvature] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Slope: Slope

Aspect: <not set>

Northness: <not set>

Eastness: <not set>

General Curvature: <not set>

Profile Curvature: <not set>

Plan Curvature: <not set>

Tangential Curvature: <not set>

Longitudinal Curvature: <not set>

Cross-Sectional Curvature: <not set>

Minimal Curvature: <not set>

Maximal Curvature: <not set>

Total Curvature: <not set>

Flow Line Curvature: <not set>

Method: 9 parameter 2nd order polynom (Zevenbergen & Thorne 1987)

Unit: radians

Unit: radians

[Slope, Aspect, Curvature] execution time: 01s

__________

[Flow Accumulation (Top-Down)] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Sink Routes: <not set>

Weights: <not set>

Flow Accumulation: Flow Accumulation

Input for Mean over Catchment: <not set>

Material for Accumulation: <not set>

Step: 1

Flow Accumulation Unit: cell area

Flow Path Length: <not set>

Channel Direction: <not set>

Method: Multiple Flow Direction

Thresholded Linear Flow: false

Convergence: 1.1

Contour Length: false

[Flow Accumulation (Top-Down)] execution time: 04s

__________

[Flow Width and Specific Catchment Area] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Elevation: DEM_raw [no sinks] [Smoothed]

Flow Width: Flow Width

Total Catchment Area (TCA): Flow Accumulation

Specific Catchment Area (SCA): Specific Catchment Area (SCA)

Coordinate Unit: meter

Method: Aspect

[Flow Width and Specific Catchment Area] execution time: less than 1 millisecond

__________

[LS Factor] Parameters:

Grid System: 30; 2840x 2175y; 258795x 4063035y

Slope: Slope

Catchment Area: Specific Catchment Area (SCA)

LS Factor: LS Factor

Area to Length Conversion: no conversion (areas already given as specific catchment area)

Feet Adjustment: false

Method (LS): Moore et al. 1991

Rill/Interrill Erosivity: 1

Stability: stable

[LS Factor] execution time: less than 1 millisecond

__________

total execution time: 6000 milliseconds (06s)

[2025-09-24/01:58:50] [LS Factor (One Step)] Execution succeeded (06s)7.1.2.2 Remote sensing images and indexes

The Landsat 8 images were collected via a Google Earth Engine script on a period covering 2020, the median of the composite image from Tier 1 TOA collection was used. The Sentinel 2 image were collected via a Google Earth Engine script on a period covering 2021, the median of the composite image from MultiSpectral Instrument Level-2A collection was used.The land surface temperature (LST) and other MODIS component were computed also on Google Earth with a time covering from 2020 to 2021. the median of the MODIS Terra collection was used. The javascript codes for scraping these images are available in the supplementary file inside the “7 - DSM/code” folder. We computed the following indexes: Normalized Difference Vegetation Index (NDVI); Normalized Difference Water Index (NDWI); Enhanced Vegetation Index (EVI); Soil Adjusted Vegetation Index (SAVI); Transformed Vegetation Index (TVI); Normilized Difference Moisture Index (NDMI); COmbined Specteral Response Index (COSRI); Land Surface Water Index (LSWI).

\[ NDVI = \frac{NIR - Red}{NIR + Red} \]

Rouse et al. (1974)

\[ NDWI = \frac{Green - NIR}{Green + NIR} \]

McFeeters (1996)

\[ EVI = G\frac{NIR - Red}{(NIR + 6Red - 7.5Blue) + L} \]

Where \(G\) is 2.5 and \(L\) is 1 A. Huete, Justice, and Liu (1994)

\[ SAVI = (1 + L)\frac{NIR - Red}{NIR + Red + L} \]

Where \(L\) is 0.5. A. R. Huete (1988)

\[ TVI = \sqrt{NDVI + 0.5} \]

Deering (1975)

\[ NDMI = \frac{NIR - SWIR1}{NIR + SWIR1} \]

Gao (1996)

\[ COSRI = \frac{Blue + Green}{Red + NIR} * NDVI \]

Fernández-Buces et al. (2006)

\[ LSWI = \frac{NIR - SWIR1}{NIR + SWIR1} \]

Chandrasekar et al. (2010)

\[ Brightness~index = \sqrt{Red^2 + NIR^2} \]

Khan et al. (2001)

\[ Simple~Ratio~Clay~index = \frac{SWIR1}{SWIR2} \]

Bousbih et al. (2019)

\[ Salinity~index = \frac{SWIR1 - SWIR2}{SWIR1 - NIR} \]

Also called NIR-SWIR index (NSI) Abuelgasim and Ammad (2019)

\[ Carbonate~index = \frac{Red}{Green} \]

Boettinger et al. (2008)

\[ Gypsum~index = \frac{SWIR1 - SWIR2}{SWIR1 + SWIR2} \]

Nield, Boettinger, and Ramsey (2007)

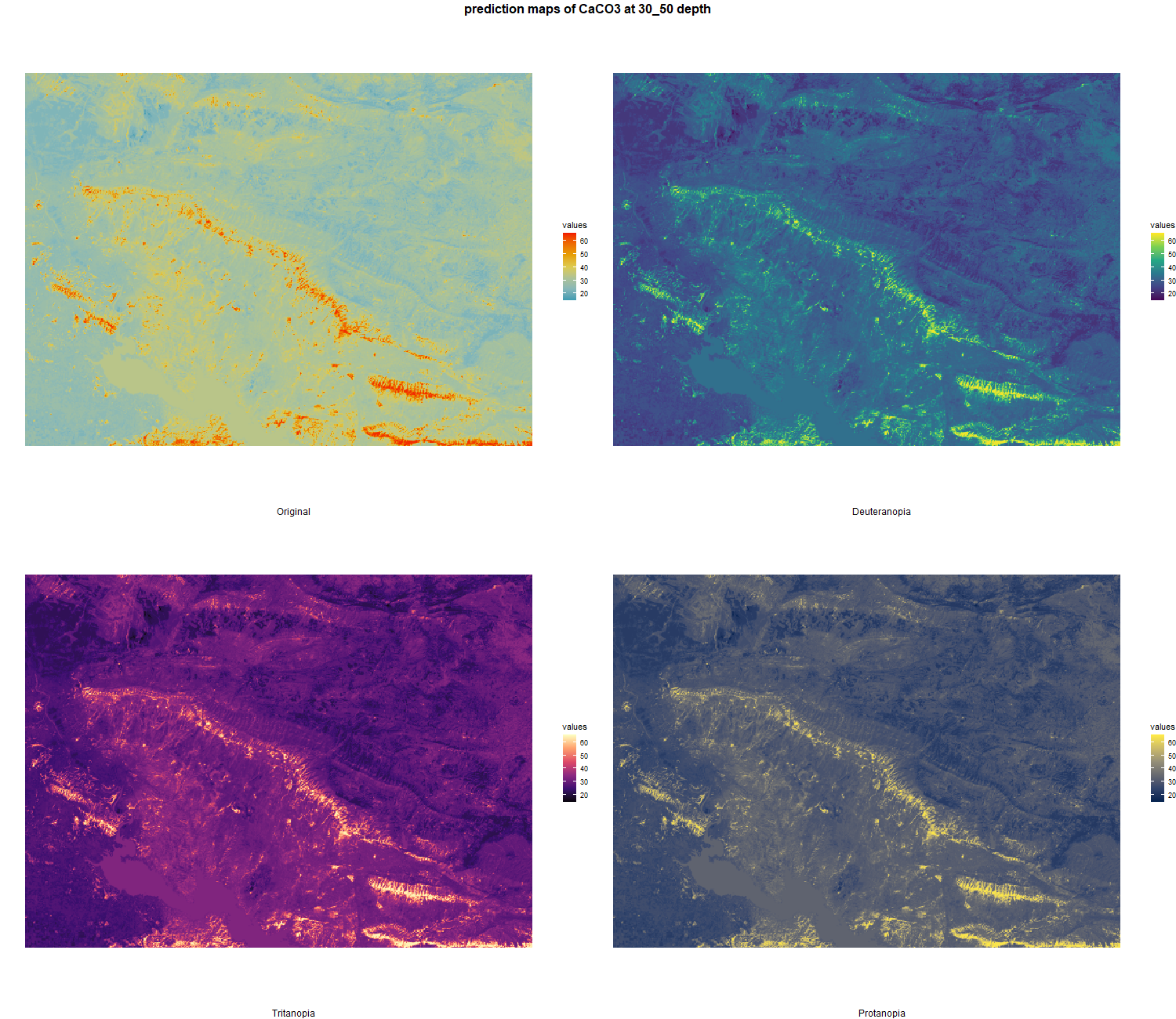

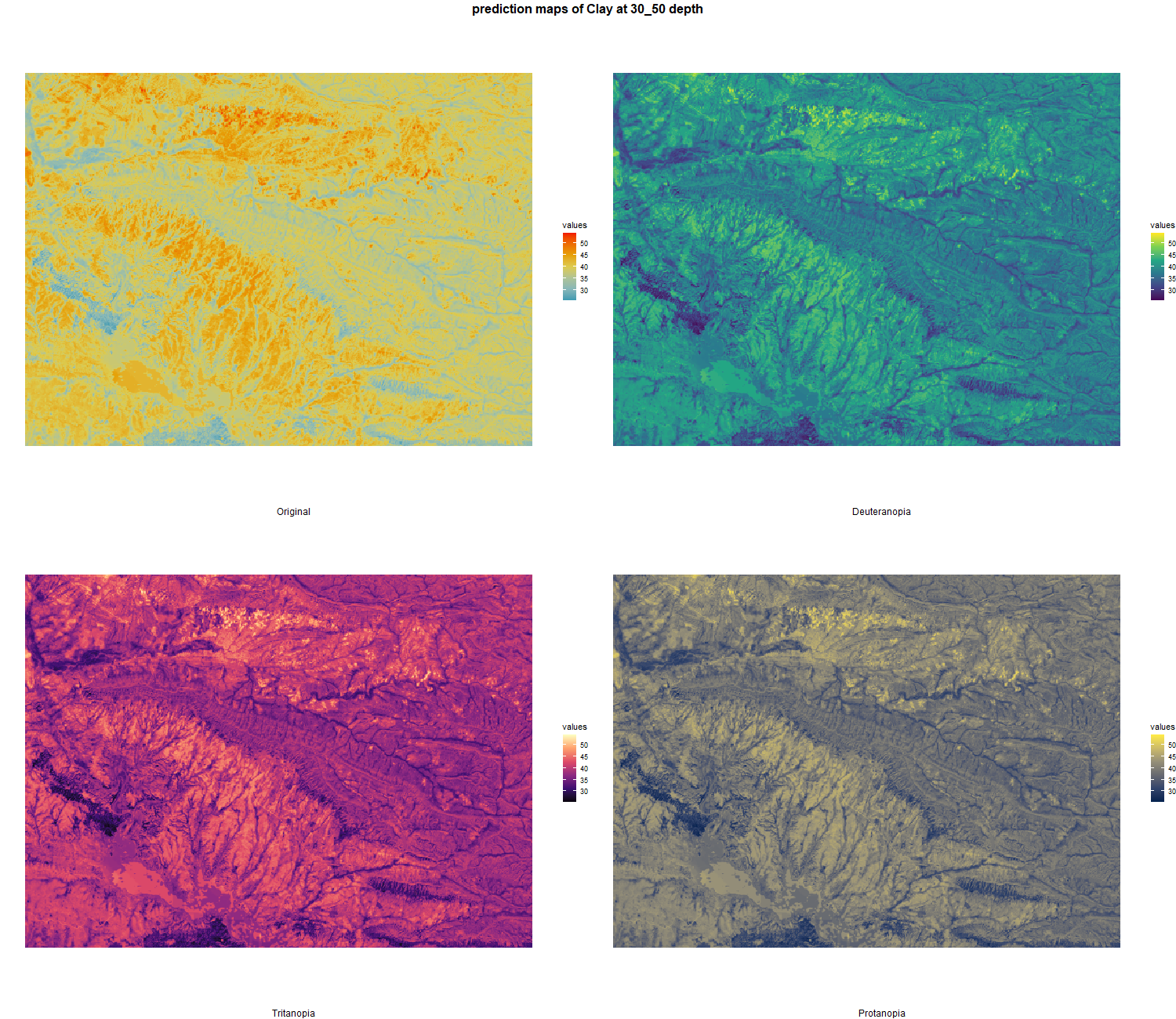

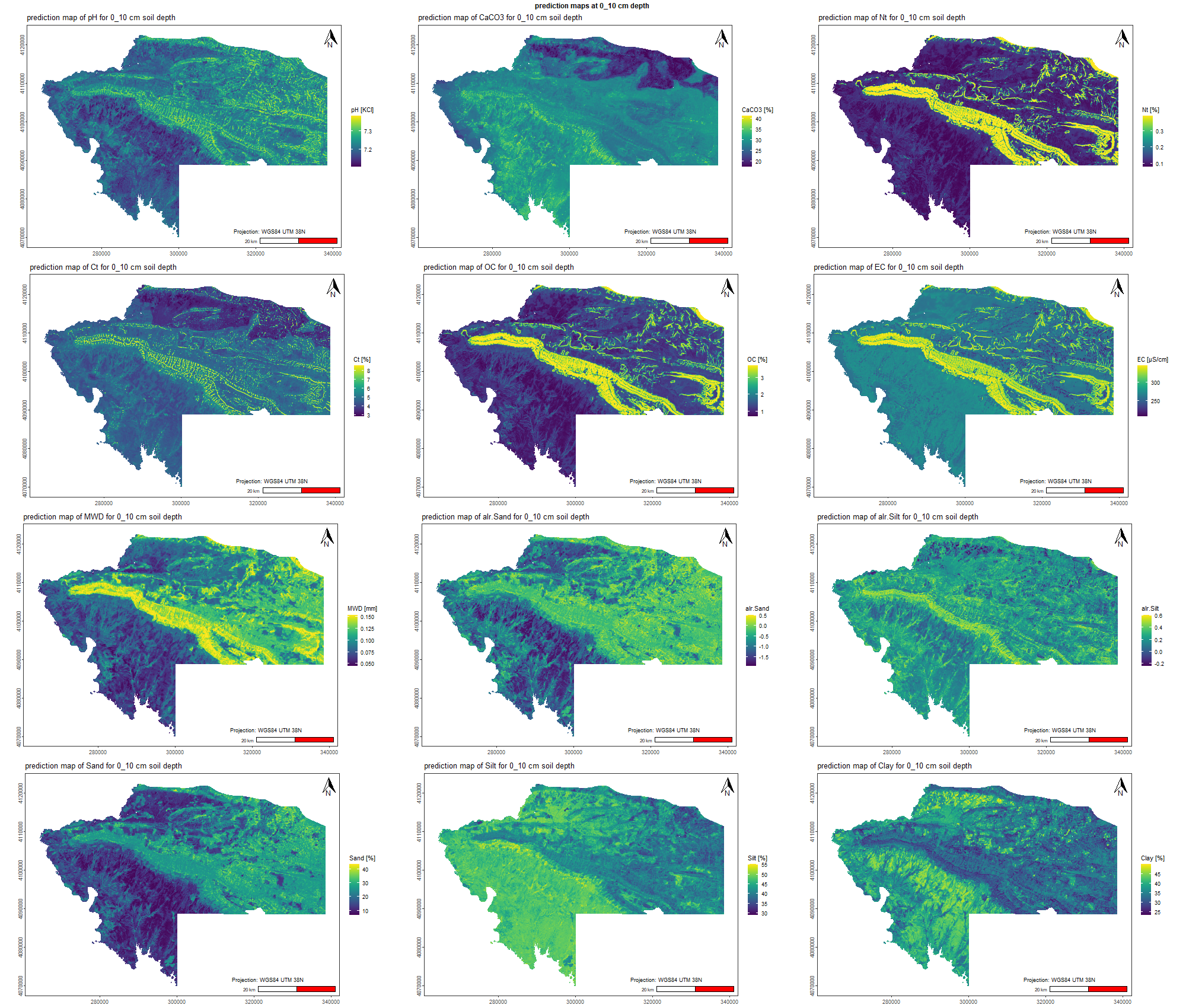

7.1.3 Soil properties

The soil 10 variables measurements for the five soil depth increment came from the predictions of the previous chapter and can be found online at https://doi.org/10.1594/PANGAEA.973700.

7.1.4 Preparation of the data

All raster were sampled to 30 x 30 m tiles to match the DEM. We used bilinear method excepted for the discrete maps (geology and geomorphology) where we used ngb resampling. The heavier data from GEE were also called from the GoogleDrive and WorldClim directly collected from ‘geodata’ package. The the vector data were also transformed in raster beeing resampled.

# 0.1 Prepare environment ======================================================

# Folder check

getwd()

# Clean up workspace

rm(list = ls(all.names = TRUE))

# 0.2 Install packages =========================================================

install.packages("pacman") #Install and load the "pacman" package (allow easier download of packages)

library(pacman)

pacman::p_load(pastclim, terra, sf, httr, geodata, googledrive, mapview) # Specify required packages and download it if needed

# 0.3 Show session infos =======================================================

sessionInfo()

# 01 Import data sets ##########################################################

# 01.1 Import background layers ================================================

grid <- rast("./data/Small_grid_UTM38.tif")

crs(grid) <- "EPSG:32638"

# 01.2 Import from Google Drive ================================================

drive_auth()

files <- drive_ls()

# Import Sentinel 2

file <- files[grepl("Sentinel2", files$name), ]

for (i in 1:nrow(file)) {

drive_download(

as_id(file$id[[i]]),

path = paste0("./data/Sentinel/Raw_",file[[1]][[i]]),

overwrite = TRUE)

}

# Import Landsat 8

file <- files[grepl("Landsat8", files$name), ]

for (i in 1:nrow(file)) {

drive_download(

as_id(file$id[[i]]),

path = paste0("./data/Landsat/Raw_",file[[1]][[i]]),

overwrite = TRUE)

}

file <- files[grepl("DEM", files$name), ]

drive_download(

as_id(file$id),

path = paste0("./data/Others/",file[[1]][[1]]),

overwrite = TRUE)

# 03 Resize data ###############################################################

# 03.1 Resize GEE single data ==================================================

DEM_raw <- rast("./data/Others/DEM_raw.tif")

PET_raw <- rast("./data/Others/PET_raw.tif")

Landuse_raw <- rast("./data/Others/Landuse_raw.tif")

EVI_raw <- rast("./data/MODIS/MODIS_EVI_raw.tif")

NDVI_raw <- rast("./data/MODIS/MODIS_NDVI_raw.tif")

NIR_raw <- rast("./data/MODIS/MODIS_NIR_raw.tif")

Red_raw <- rast("./data/MODIS/MODIS_Red_raw.tif")

LST_day_raw <- rast("./data/MODIS/MODIS_LST_day_raw.tif")

LST_night_raw <- rast("./data/MODIS/MODIS_LST_night_raw.tif")

DEM <- crop(DEM_raw, grid)

PET <- resample(PET_raw, DEM, method = "bilinear")

PET <- crop(PET, DEM)

Landuse <- resample(Landuse_raw, DEM, method = "mode")

Landuse <- crop(Landuse, DEM)

EVI <- resample(EVI_raw, DEM, method = "bilinear")

EVI <- crop(EVI, DEM)

NDVI <- resample(NDVI_raw, DEM, method = "bilinear")

NDVI <- crop(NDVI, DEM)

NIR <- resample(NIR_raw, DEM, method = "bilinear")

NIR <- crop(NIR, DEM)

Red <- resample(Red_raw, DEM, method = "bilinear")

Red <- crop(Red, DEM)

LST_day <- resample(LST_day_raw, DEM, method = "bilinear")

LST_day <- crop(LST_day, DEM)

LST_night <- resample(LST_night_raw, DEM, method = "bilinear")

LST_night <- crop(LST_night, DEM)

writeRaster(DEM, "./data/Others/DEM.tif", overwrite=TRUE)

writeRaster(PET, "./data/Others/PET.tif", overwrite=TRUE)

writeRaster(Landuse, "./data/Others/Landuse.tif", overwrite=TRUE)

writeRaster(EVI, "./data/MODIS/MODIS_EVI.tif", overwrite=TRUE)

writeRaster(NDVI, "./data/MODIS/MODIS_NDVI.tif", overwrite=TRUE)

writeRaster(NIR, "./data/MODIS/MODIS_NIR.tif", overwrite=TRUE)

writeRaster(Red, "./data/MODIS/MODIS_Red.tif", overwrite=TRUE)

writeRaster(LST_day, "./data/MODIS/MODIS_LST_day.tif", overwrite=TRUE)

writeRaster(LST_night, "./data/MODIS/MODIS_LST_night.tif", overwrite=TRUE)

rm(list=setdiff(ls(), c("DEM", "grid")))

# 03.2 Resize GEE sentinel and landsat data ====================================

Sentinel <- rast(list.files("./data/Sentinel/", full.names = TRUE))

Sentinel_names <- list.files("./data/Sentinel/", full.names = FALSE)

patterns <- c("B2", "B3", "B4", "B5", "B6", "B7", "B8", "B9", "B11", "B12")

replacers <- c("blue", "green", "red", "redEdge1", "redEdge2", "redEdge3", "NIR", "water", "SWIR1", "SWIR2")

for (i in seq_along(patterns)) {

Sentinel_names <- gsub(patterns[i], replacers[i], Sentinel_names)

}

names(Sentinel) <- gsub("\\.tif$", "", Sentinel_names)

names(Sentinel) <- gsub("Raw_", "", names(Sentinel))

for (i in 1:nlyr(Sentinel)) {

r <-resample(Sentinel[[i]], DEM, method = "bilinear")

r <- crop(r, DEM)

writeRaster(r, paste0("./data/Sentinel/",names(r),".tif"), overwrite=T)

}

Landsat <- rast(list.files("./data/Landsat/", full.names = TRUE))

Landsat_names <- list.files("./data/Landsat/", full.names = FALSE)

patterns <- c("B2", "B3", "B4", "B5", "B6", "B7", "B8")

replacers <- c("blue", "green", "red", "NIR", "SWIR1", "SWIR2", "PAN")

for (i in seq_along(patterns)) {

Landsat_names <- gsub(patterns[i], replacers[i], Landsat_names)

}

names(Landsat) <- gsub("\\.tif$", "", Landsat_names)

names(Landsat) <- gsub("Raw_", "", names(Landsat))

for (i in 1:nlyr(Landsat)) {

r <-resample(Landsat[[i]], DEM, method = "bilinear")

r <- crop(r, DEM)

writeRaster(r, paste0("./data/Landsat/",names(r),".tif"), overwrite=T)

}

# 04 Download and compute the present-climate data #############################

# 04.1 Download the present WolrdClim =========================================

temp.min <- worldclim_country("Iraq",var="tmin", res=0.5, "./data/WolrdClim.tif", version="2.1")

temp.max <- worldclim_country("Iraq",var="tmax", res=0.5, "./data/WolrdClim.tif", version="2.1")

prec <- worldclim_country("Iraq",var="prec", res=0.5, "./data/WolrdClim.tif", version="2.1")

srad <- worldclim_country("Iraq",var="srad", res=0.5, "./data/WolrdClim.tif", version="2.1")

wind <- worldclim_country("Iraq",var="wind", res=0.5, "./data/WolrdClim.tif", version="2.1")

# 04.2 Resize the present WolrdClim temperatures ===============================

temp.min_mean <- mean(temp.min)

temp.max_mean <- mean(temp.max)

BIO01 <- temp.max_mean - temp.min_mean

BIO01 <- project(BIO01, "EPSG:32638")

BIO01 <-resample(BIO01, DEM, method = "bilinear") #Careful to implement "near" option for classification maps

BIO01 <- crop(BIO01, DEM)

writeRaster(BIO01, "./data/Others/WorldClim_Temp.tif", overwrite=T)

plot(BIO01)

# 04.3 Resize the present WolrdClim precipiations ==============================

prec_sum <- sum(prec)

BIO12 <- project(prec_sum, "EPSG:32638")

BIO12 <-resample(BIO12, DEM, method = "bilinear") #Careful to implement "near" option for classification maps

BIO12 <-crop(BIO12, DEM)

writeRaster(BIO12, "./data/Others/WorldClim_Prec.tif", overwrite=T)

plot(BIO12)

# 04.4 Resize the present WolrdClim solar radiations ===========================

srad_sum <- sum(srad)

srad <- project(srad_sum, "EPSG:32638")

srad <-resample(srad, DEM, method = "bilinear") #Careful to implement "near" option for classification maps

srad <-crop(srad, DEM)

writeRaster(srad, "./data/Others/WorldClim_Srad.tif", overwrite=T)

plot(srad)

# 04.5 Resize the present WolrdClim wind forces ================================

wind_sum <- sum(wind)

wind <- project(wind_sum, "EPSG:32638")

wind <-resample(wind, DEM, method = "bilinear") #Careful to implement "near" option for classification maps

wind <-crop(wind, DEM)

writeRaster(wind, "./data/Others/WorldClim_Wind.tif", overwrite=T)

plot(wind)

# 05 Convert Shapefile data ####################################################

# 05.1 Convert from polylign to distance raster ================================

water <- st_read("./data/Natural.gpkg", layer = "Waterways")

mapview(water)

water_raster <- rasterize(vect(water), DEM, field=1, background=NA)

dist_water <- distance(water_raster)

writeRaster(dist_water, "./data/Others/Distance to water.tif", overwrite=TRUE)

# 05.2 Convert from polygon to raster ==========================================

geomorpho <- st_read("./data/Natural.gpkg", layer = "Geomorphology")

geol <- st_read("./data/Natural.gpkg", layer = "Geology")

geomorpho_raster <- rasterize(vect(geomorpho), DEM, field="Class", background=NA)

geol_raster <- rasterize(vect(geol), DEM, field="Class", background=NA)

writeRaster(geomorpho_raster, "./data/Others/Geomorphology.tif", overwrite=TRUE)

writeRaster(geol_raster, "./data/Others/Geology.tif", overwrite=TRUE)7.2 Soil digital mapping preparation

7.2.1 Preparation of the environment

# 0.1 Prepare environment ======================================================

# Folder check

getwd()

# Set folder direction

setwd()

# Clean up workspace

rm(list = ls(all.names = TRUE))

# 0.2 Install packages =========================================================

install.packages("pacman") #Install and load the "pacman" package (allow easier download of packages)

library(pacman)

pacman::p_load(dplyr, tidyr,ggplot2, mapview, sf, cli, terra, corrplot, doParallel, viridis, Boruta, caret,

quantregForest, readr, rpart, reshape2, usdm, soiltexture, compositions, patchwork)

# 0.3 Show session infos =======================================================R version 4.5.1 (2025-06-13 ucrt)

Platform: x86_64-w64-mingw32/x64

Running under: Windows 11 x64 (build 26200)

Matrix products: default

LAPACK version 3.12.1

locale:

[1] LC_COLLATE=French_France.utf8 LC_CTYPE=French_France.utf8 LC_MONETARY=French_France.utf8 LC_NUMERIC=C

[5] LC_TIME=French_France.utf8

time zone: Europe/Paris

tzcode source: internal

attached base packages:

[1] parallel stats graphics grDevices utils datasets methods base

other attached packages:

[1] patchwork_1.3.2 compositions_2.0-9 soiltexture_1.5.3 usdm_2.1-7 reshape2_1.4.5 rpart_4.1.24

[7] readr_2.1.6 quantregForest_1.3-7.1 RColorBrewer_1.1-3 randomForest_4.7-1.2 caret_7.0-1 lattice_0.22-7

[13] Boruta_9.0.0 viridis_0.6.5 viridisLite_0.4.2 doParallel_1.0.17 iterators_1.0.14 foreach_1.5.2

[19] corrplot_0.95 terra_1.8-93 cli_3.6.5 sf_1.0-24 mapview_2.11.4 ggplot2_4.0.1

[25] tidyr_1.3.2 dplyr_1.1.4

loaded via a namespace (and not attached):

[1] DBI_1.2.3 pROC_1.19.0.1 gridExtra_2.3 tcltk_4.5.1 rlang_1.1.7 magrittr_2.0.4

[7] otel_0.2.0 e1071_1.7-17 compiler_4.5.1 png_0.1-8 vctrs_0.7.0 stringr_1.6.0

[13] pkgconfig_2.0.3 fastmap_1.2.0 leafem_0.2.5 rmarkdown_2.30 tzdb_0.5.0 prodlim_2025.04.28

[19] purrr_1.2.1 xfun_0.56 satellite_1.0.6 recipes_1.3.1 R6_2.6.1 stringi_1.8.7

[25] parallelly_1.46.1 lubridate_1.9.4 Rcpp_1.1.1 bookdown_0.46 knitr_1.51 future.apply_1.20.1

[31] base64enc_0.1-3 pacman_0.5.1 Matrix_1.7-4 splines_4.5.1 nnet_7.3-20 timechange_0.3.0

[37] tidyselect_1.2.1 rstudioapi_0.18.0 dichromat_2.0-0.1 yaml_2.3.12 timeDate_4051.111 codetools_0.2-20

[43] listenv_0.10.0 tibble_3.3.1 plyr_1.8.9 withr_3.0.2 S7_0.2.1 evaluate_1.0.5

[49] future_1.69.0 survival_3.8-6 bayesm_3.1-7 units_1.0-0 proxy_0.4-29 pillar_1.11.1

[55] tensorA_0.36.2.1 rsconnect_1.7.0 KernSmooth_2.23-26 stats4_4.5.1 generics_0.1.4 sp_2.2-0

[61] hms_1.1.4 scales_1.4.0 globals_0.18.0 class_7.3-23 glue_1.8.0 tools_4.5.1

[67] robustbase_0.99-6 data.table_1.18.0 ModelMetrics_1.2.2.2 gower_1.0.2 grid_4.5.1 crosstalk_1.2.2

[73] ipred_0.9-15 nlme_3.1-168 raster_3.6-32 lava_1.8.2 DEoptimR_1.1-4 gtable_0.3.6

[79] digest_0.6.39 classInt_0.4-11 htmlwidgets_1.6.4 farver_2.1.2 htmltools_0.5.9 lifecycle_1.0.5 7.2.2 Prepare the data

# 01 Import data sets ##########################################################

# 01.1 Import soils infos ======================================================

# From the data accessible at the https://doi.org/10.1594/PANGAEA.973700

soil_infos <- read.csv("./data/MIR_spectra_prediction.csv", sep=";")

soil_infos$Depth..bot <- as.factor(soil_infos$Depth..bot)

soil_infos <- soil_infos[,-c(5,7,8)]

soil_list <- split(soil_infos, soil_infos$Depth..bot)

depths <- c("0_10", "10_30", "30_50", "50_70", "70_100")

names(soil_list) <- depths

soil_infos <- soil_list

for (i in 1:length(soil_infos)) {

soil_infos[[i]] <- soil_infos[[i]][,-c(2,5)]

colnames(soil_infos[[i]]) <- c("Site_name","Latitude","Longitude","pH","CaCO3","Nt","Ct","Corg","EC","Sand","Silt","Clay","MWD")

write.csv(soil_infos[[i]], paste0("./data/Infos_",names(soil_infos[i]),"_soil.csv"))

}

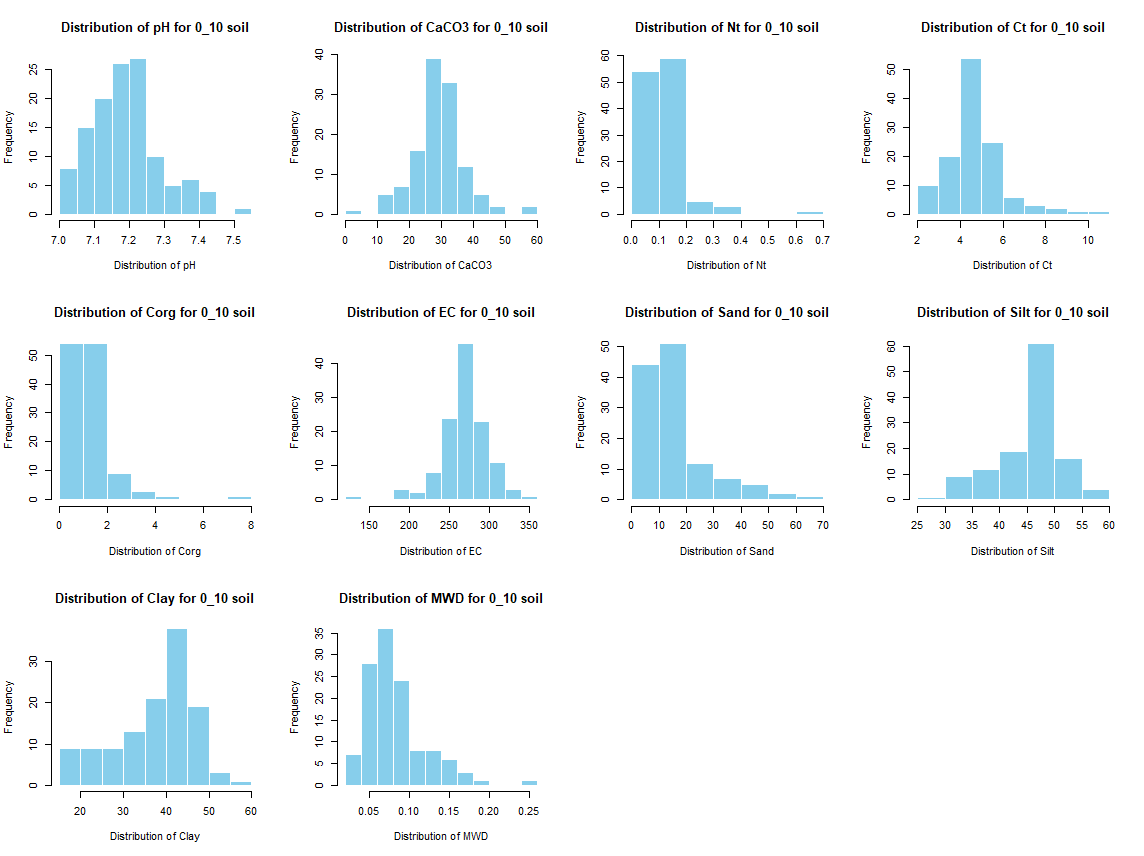

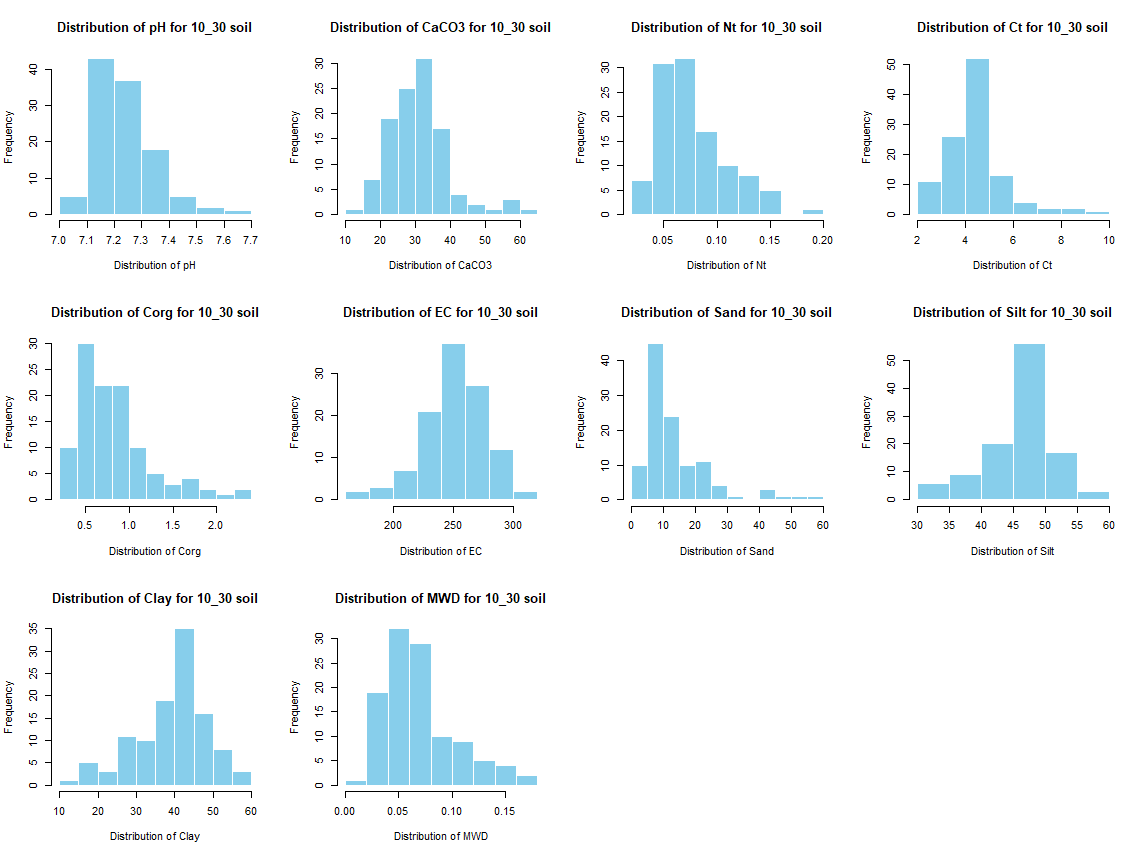

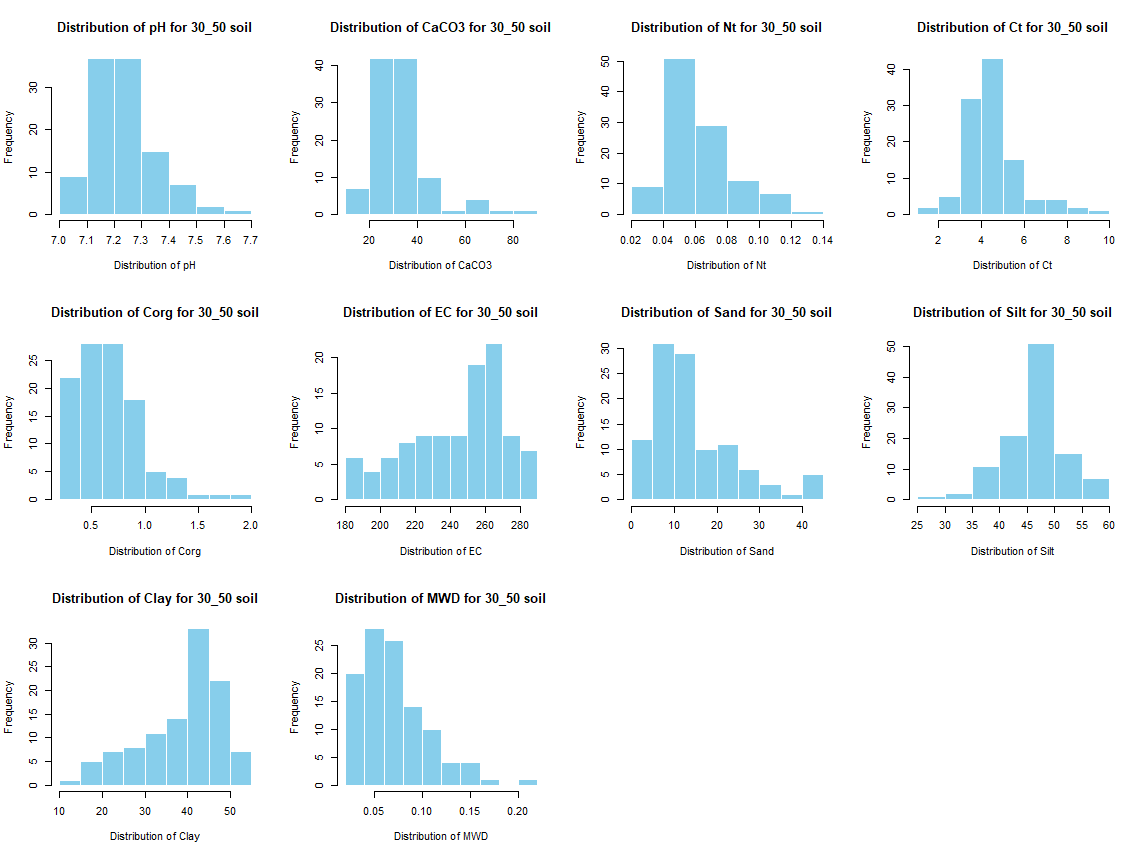

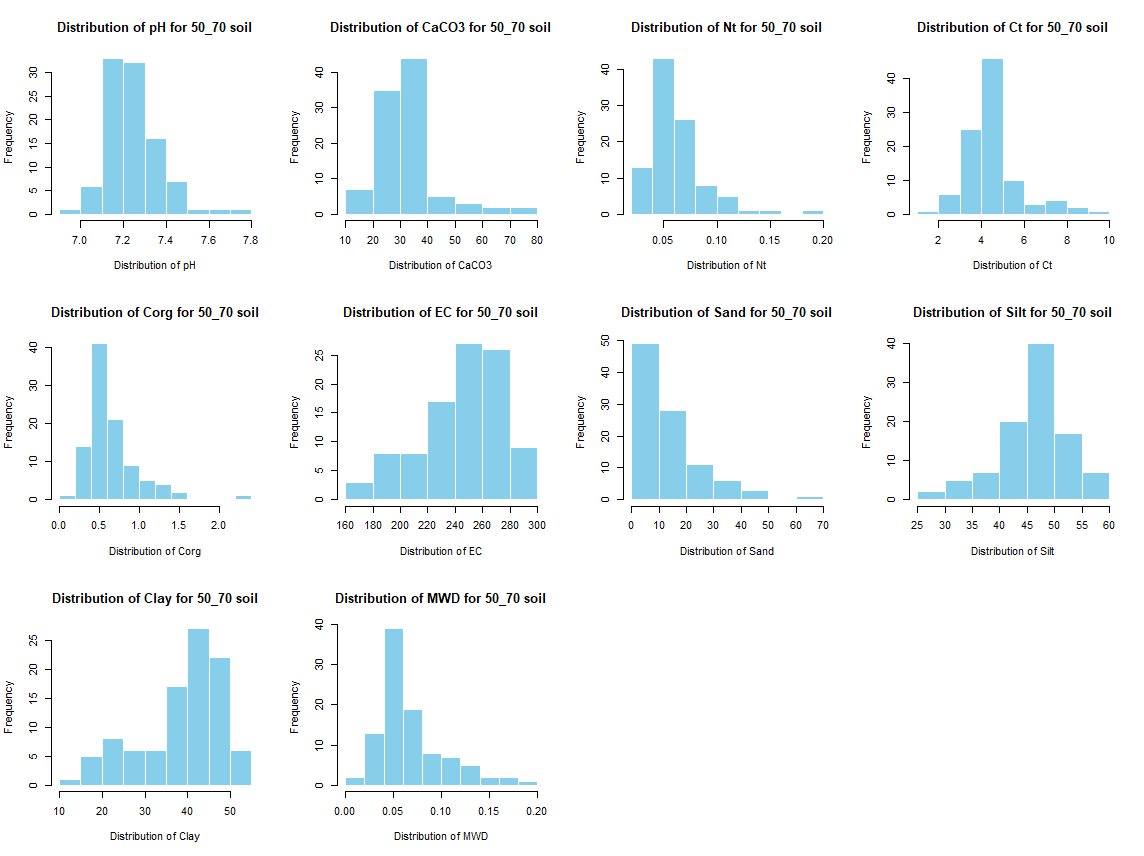

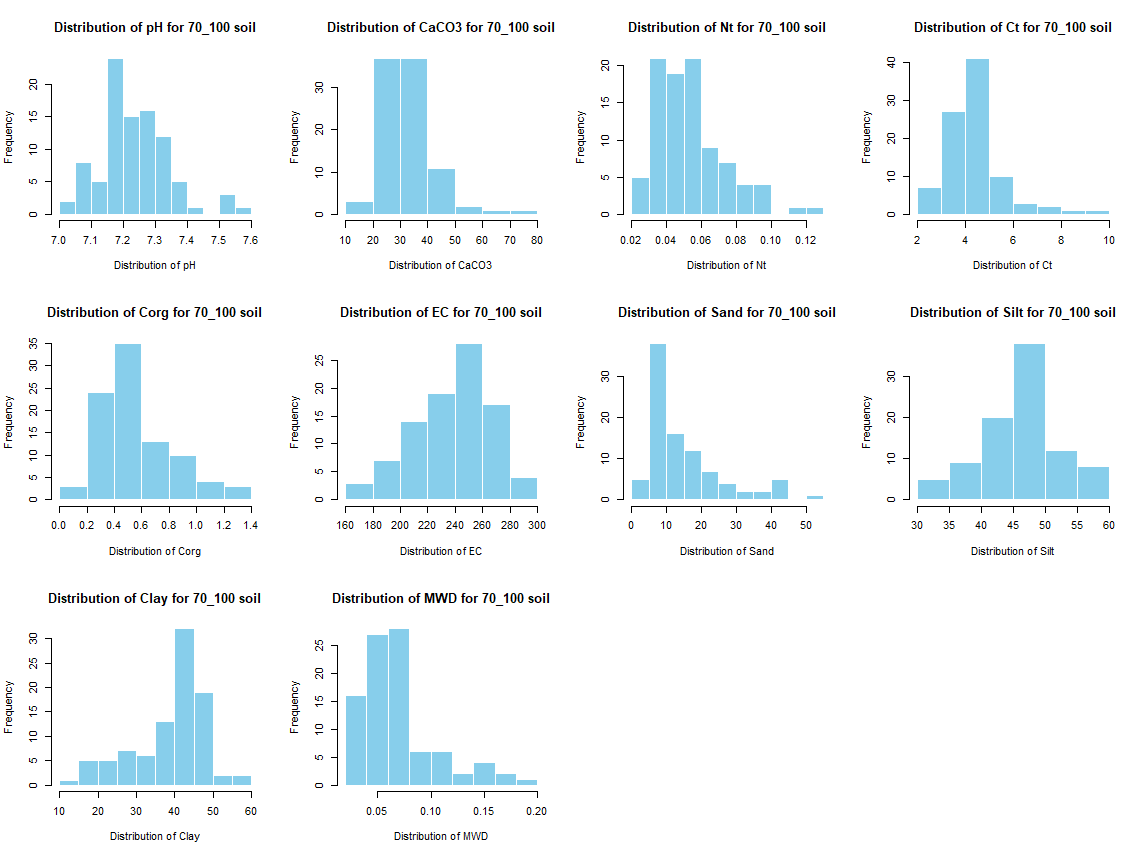

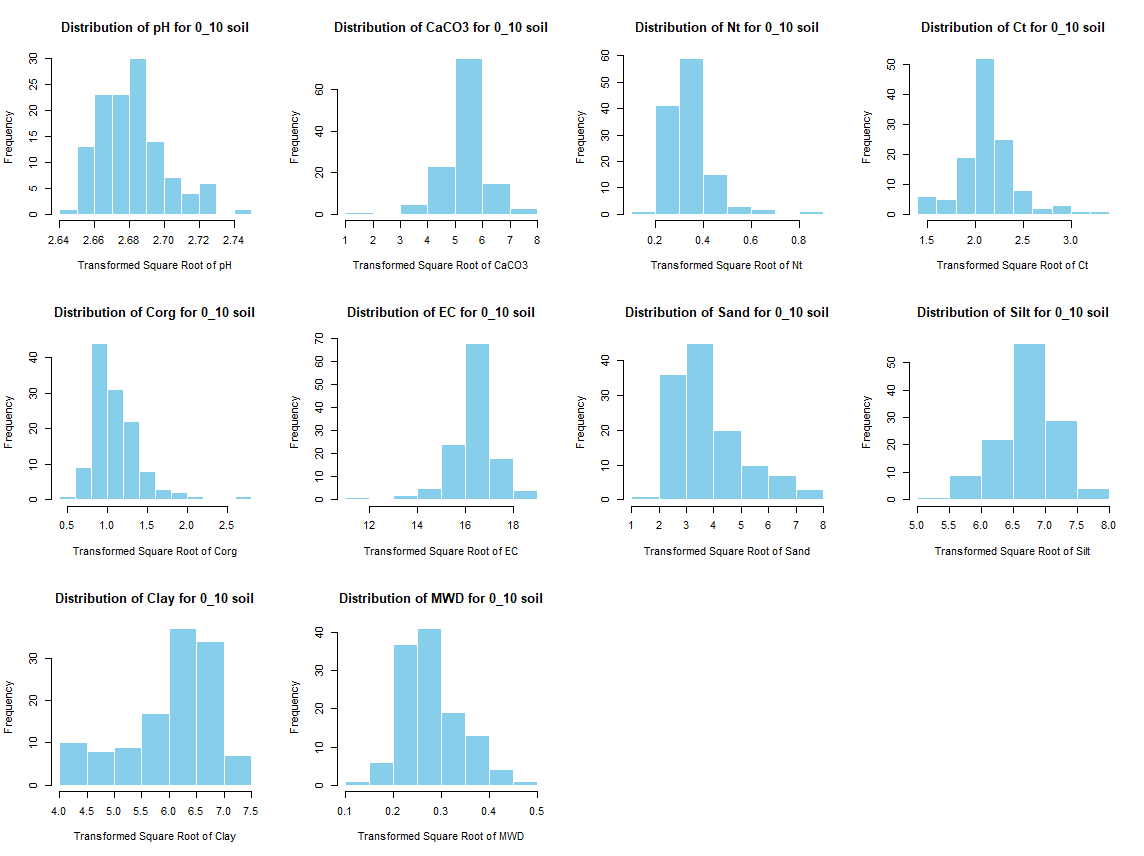

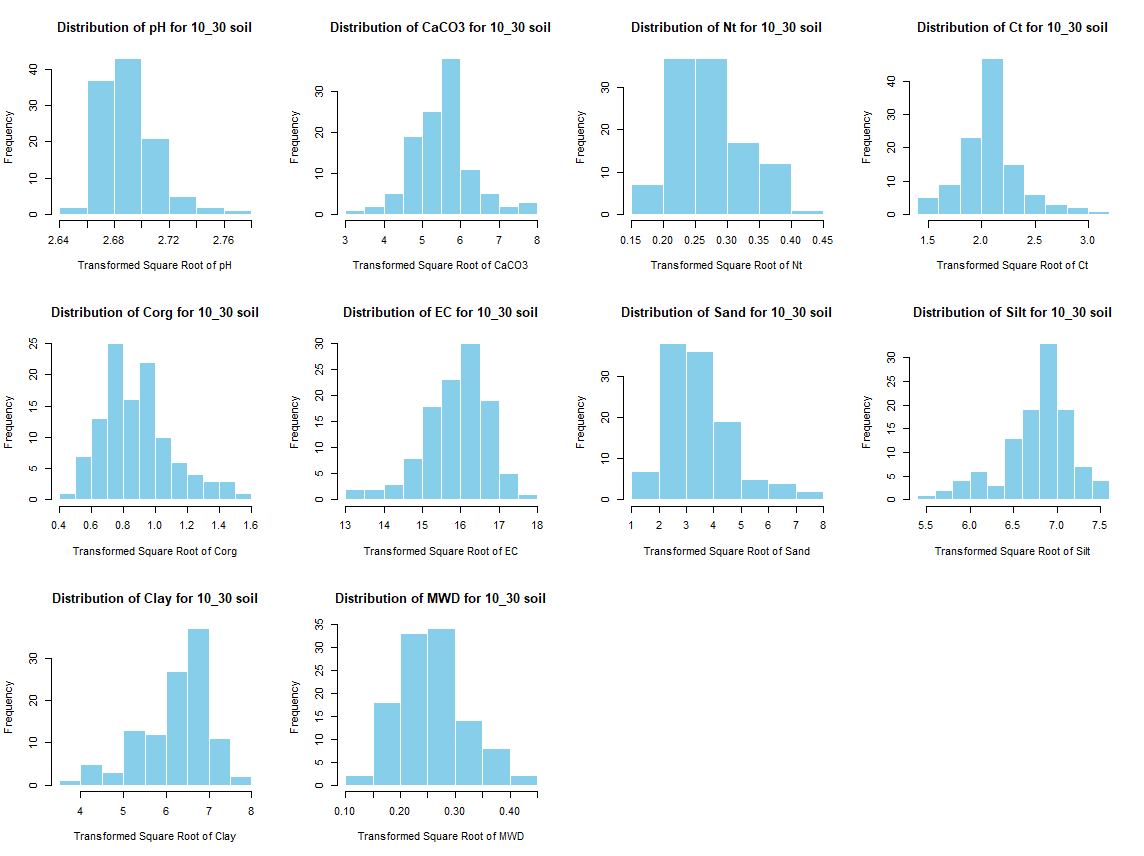

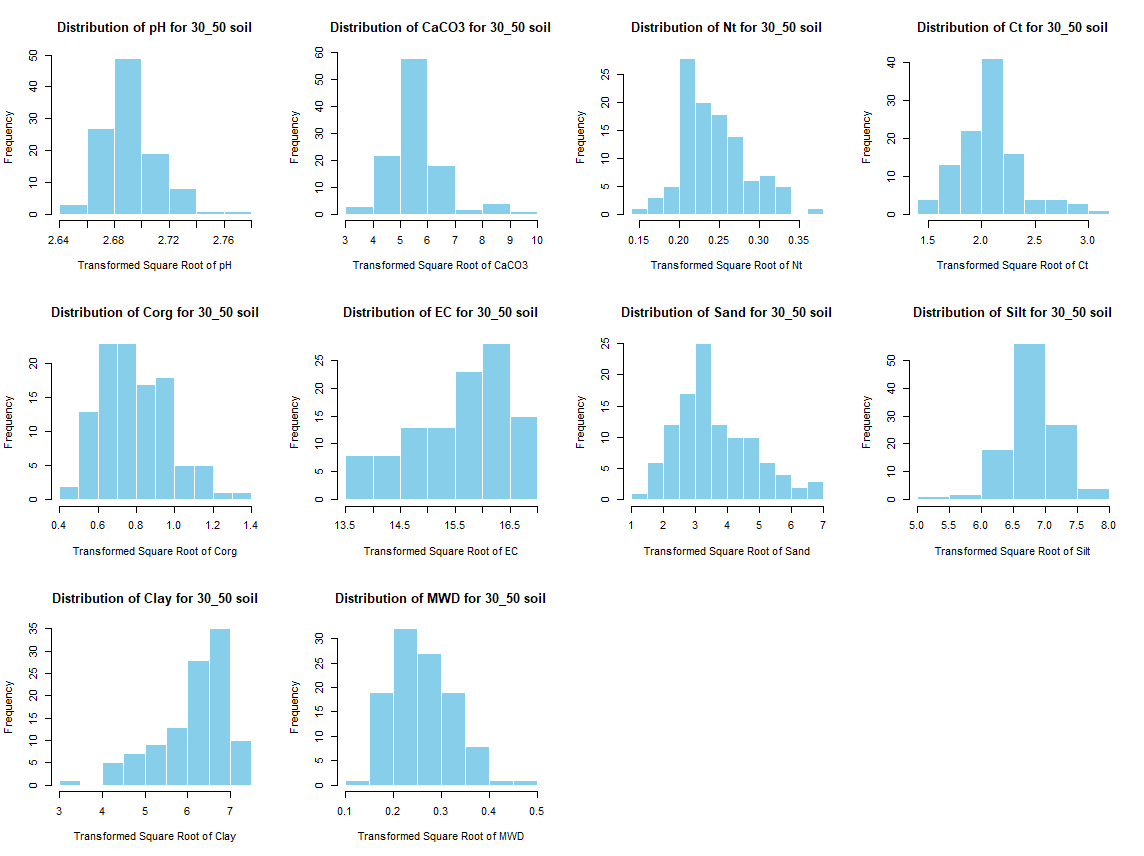

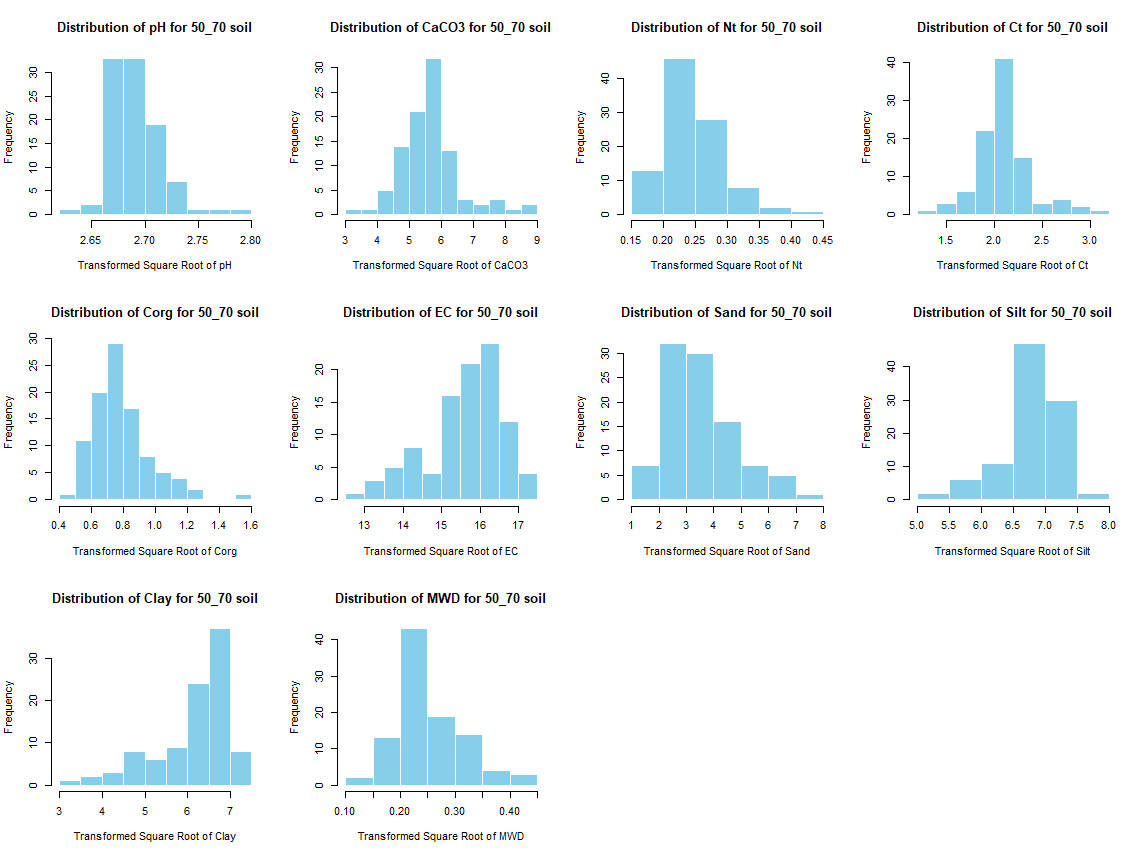

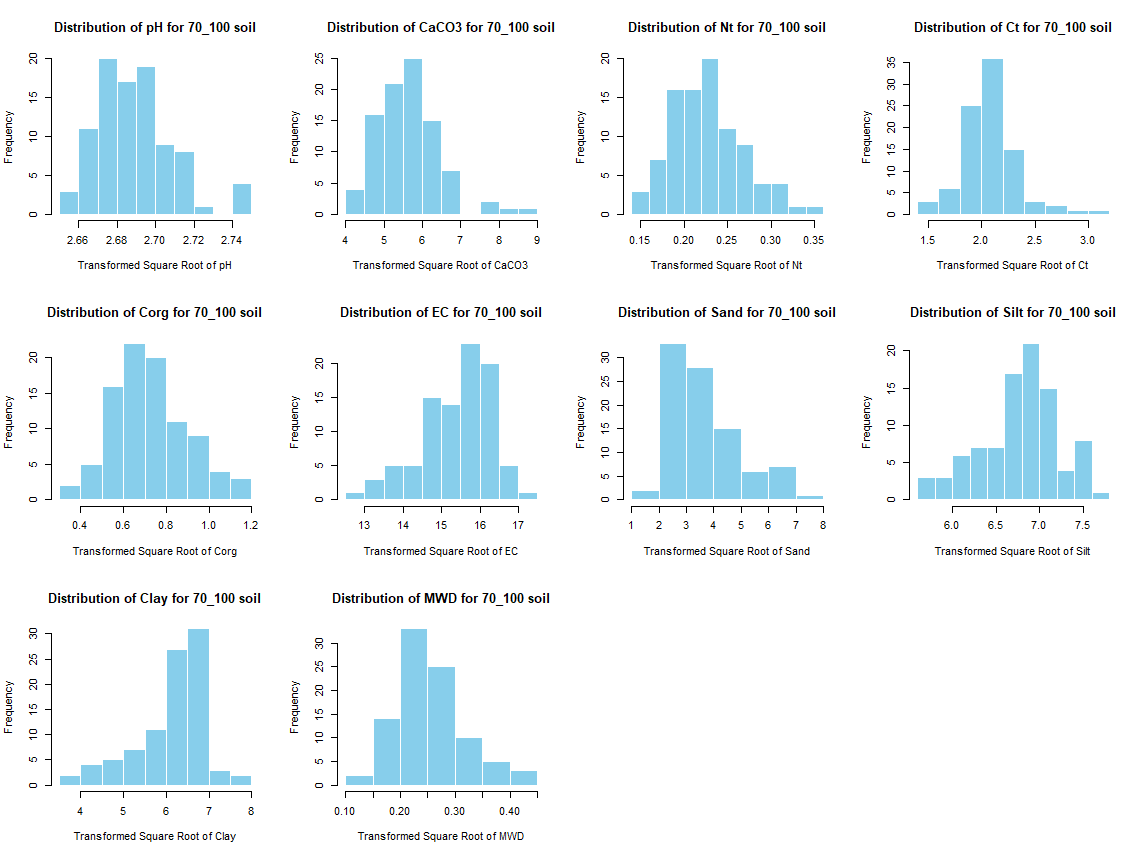

# Histogramm ploting with normal and sqrt values

for (i in 1:length(soil_infos)) {

windows(width = 12, height = 9)

par(mfrow = c(3, 4))

for (j in 4:length(soil_infos[[1]])) {

hist(sqrt(soil_infos[[i]][, j]), main = paste0("Distribution of ", names(soil_infos[[i]][j]), " for ", names(soil_infos[i]) ," soil"),

xlab = paste0("Transformed Square Root of ", names(soil_infos[[i]][j])), col = "skyblue", border = "white")

}

savePlot(paste0("./export/preprocess/Histogram_sqrt_", names(soil_infos[i]), "_soil.png"), type = "png")

par(mfrow = c(1, 1))

dev.off()

windows(width = 12, height = 9)

par(mfrow = c(3, 4))

for (j in 4:length(soil_infos[[1]])) {

hist(soil_infos[[i]][, j], main = paste0("Distribution of ", names(soil_infos[[i]][j]), " for ", names(soil_infos[i]) ," soil"),

xlab = paste0("Distribution of ", names(soil_infos[[i]][j])), col = "skyblue", border = "white")

}

savePlot(paste0("./export/preprocess/Histogram_", names(soil_infos[i]), "_soil.png"), type = "png")

par(mfrow = c(1, 1))

dev.off()

}

dev.off()

# 01.3 Set coordinates =========================================================

# Create a spatial dataframe and convert to WGS84 UTM 38 N coordinates

soil_infos_sp <- soil_infos

for (i in 1:length(soil_infos)) {

soil_infos_sp[[i]] <- st_as_sf(soil_infos_sp[[i]], coords = c("Longitude", "Latitude"), crs = 4326)

soil_infos_sp[[i]] <-st_transform(soil_infos_sp[[i]], crs = 32638)

}

mapview(soil_infos_sp[[1]]) + mapview(soil_infos_sp[[2]], col.regions = "red") + mapview(soil_infos_sp[[3]], col.regions = "green") +

mapview(soil_infos_sp[[4]], col.regions = "pink") + mapview(soil_infos_sp[[5]], col.regions = "darkgrey")7.2.3 Prepare the covariates

# 01.4 Import covariates raster ================================================

Landsat <- list.files("./data/Landsat/", full.names = TRUE)

Landsat <- Landsat[!grepl("raw", Landsat, ignore.case = TRUE)]

Landsat <- rast(Landsat)

Sentinel <- list.files("./data/Sentinel/", full.names = TRUE)

Sentinel <- Sentinel[!grepl("raw", Sentinel, ignore.case = TRUE)]

Sentinel <- rast(Sentinel)

Terrain <- list.files("./SAGA/", pattern = "*sg-grd-z" , full.names = TRUE)

Terrain <- rast(Terrain[-c(2,4,7,8,9,11,15,25)])

names(Terrain)[names(Terrain) == "DEM_raw [no sinks] [Smoothed]"] <- "DEM"

names(Terrain)[names(Terrain) == "Landforms"] <- "Surface Landform"

names(Terrain)[names(Terrain) == "LS Factor"] <- "Total Catchment Area"

names(Terrain)[names(Terrain) == "Vertical Distance to Channel Network"] <- "Channel Network Distance"

Terrain <- Terrain[[order(names(Terrain))]]

Sentinel <- list.files("./data/Sentinel/", full.names = TRUE)

Sentinel <- Sentinel[!grepl("raw", Sentinel, ignore.case = TRUE)]

Sentinel <- rast(Sentinel)

Others <- list.files("./data/Others/", full.names = TRUE)

Others <- Others[!grepl("raw", Others, ignore.case = TRUE)]

Others <- Others[!grepl("DEM", Others, ignore.case = TRUE)]

Others <- rast(Others)

Others_names <- list.files("./data/Others/")

Others_names <- Others_names[!grepl("raw", Others_names, ignore.case = TRUE)]

Others_names <- Others_names[!grepl("DEM", Others_names, ignore.case = TRUE)]

names(Others) <- gsub("\\.tif$", "", Others_names)

Modis <- list.files("./data/MODIS/", full.names = TRUE)

Modis <- Modis[!grepl("raw", Modis, ignore.case = TRUE)]

Modis <- rast(Modis)

names(Modis) <- gsub("\\_raw$", "", names(Modis))

# RS Landsat 8

Landsat$Landsat8_NDWI <- (Landsat$Landsat8_green_2021_MedianComposite - Landsat$Landsat8_NIR_2021_MedianComposite)/(Landsat$Landsat8_green_2021_MedianComposite + Landsat$Landsat8_NIR_2021_MedianComposite)

Landsat$Landsat8_NDVI <- (Landsat$Landsat8_NIR_2021_MedianComposite - Landsat$Landsat8_red_2021_MedianComposite)/(Landsat$Landsat8_NIR_2021_MedianComposite + Landsat$Landsat8_red_2021_MedianComposite)

Landsat$EVI <- 2.5 * ((Landsat$Landsat8_NIR_2021_MedianComposite - Landsat$Landsat8_red_2021_MedianComposite)/((Landsat$Landsat8_NIR_2021_MedianComposite + 6 * Landsat$Landsat8_red_2021_MedianComposite) - (7.5*Landsat$Landsat8_blue_2021_MedianComposite) + 1))

Landsat$SAVI <- 1.5 * ((Landsat$Landsat8_NIR_2021_MedianComposite - Landsat$Landsat8_red_2021_MedianComposite) / (Landsat$Landsat8_NIR_2021_MedianComposite + Landsat$Landsat8_red_2021_MedianComposite + 0.5)) #Enhanced Vegetation Index

Landsat$TVI <- sqrt(Landsat$Landsat8_NDVI + 0.5)

Landsat$NDMI <- (Landsat$Landsat8_NIR_2021_MedianComposite - Landsat$Landsat8_SWIR1_2021_MedianComposite)/(Landsat$Landsat8_NIR_2021_MedianComposite + Landsat$Landsat8_SWIR1_2021_MedianComposite) # normilized difference moisture index

Landsat$COSRI <- Landsat$Landsat8_NDVI * ((Landsat$Landsat8_blue_2021_MedianComposite + Landsat$Landsat8_green_2021_MedianComposite)/(Landsat$Landsat8_red_2021_MedianComposite + Landsat$Landsat8_NIR_2021_MedianComposite)) # Combined Specteral Response Index

Landsat$LSWI <- (Landsat$Landsat8_NIR_2021_MedianComposite - Landsat$Landsat8_SWIR1_2021_MedianComposite) / (Landsat$Landsat8_NIR_2021_MedianComposite + Landsat$Landsat8_SWIR1_2021_MedianComposite)

Landsat$BrightnessIndex <- sqrt((Landsat$Landsat8_red_2021_MedianComposite^2) + (Landsat$Landsat8_NIR_2021_MedianComposite^2))

Landsat$ClayIndex <- Landsat$Landsat8_SWIR1_2021_MedianComposite / Landsat$Landsat8_SWIR2_2021_MedianComposite

Landsat$SalinityIndex <- (Landsat$Landsat8_SWIR1_2021_MedianComposite - Landsat$Landsat8_SWIR2_2021_MedianComposite) / (Landsat$Landsat8_SWIR1_2021_MedianComposite - Landsat$Landsat8_NIR_2021_MedianComposite)

Landsat$CarbonateIndex <- Landsat$Landsat8_red_2021_MedianComposite / Landsat$Landsat8_green_2021_MedianComposite

Landsat$GypsumIndex <- (Landsat$Landsat8_SWIR1_2021_MedianComposite - Landsat$Landsat8_SWIR2_2021_MedianComposite) / (Landsat$Landsat8_SWIR1_2021_MedianComposite + Landsat$Landsat8_SWIR2_2021_MedianComposite)

# RS Sentinel 2

Sentinel$Sentinel2_NDWI <- (Sentinel$Sentinel2_green_2021_MedianComposite - Sentinel$Sentinel2_NIR_2021_MedianComposite) / (Sentinel$Sentinel2_green_2021_MedianComposite + Sentinel$Sentinel2_NIR_2021_MedianComposite)

Sentinel$Sentinel2_NDVI <- (Sentinel$Sentinel2_NIR_2021_MedianComposite - Sentinel$Sentinel2_red_2021_MedianComposite) / (Sentinel$Sentinel2_NIR_2021_MedianComposite + Sentinel$Sentinel2_red_2021_MedianComposite)

Sentinel$EVI <- 2.5 * ((Sentinel$Sentinel2_NIR_2021_MedianComposite - Sentinel$Sentinel2_red_2021_MedianComposite) / ((Sentinel$Sentinel2_NIR_2021_MedianComposite + 6 * Sentinel$Sentinel2_red_2021_MedianComposite) - (7.5 * Sentinel$Sentinel2_blue_2021_MedianComposite) + 1))

Sentinel$SAVI <- 1.5 * ((Sentinel$Sentinel2_NIR_2021_MedianComposite - Sentinel$Sentinel2_red_2021_MedianComposite) / (Sentinel$Sentinel2_NIR_2021_MedianComposite + Sentinel$Sentinel2_red_2021_MedianComposite + 0.5))

Sentinel$TVI <- sqrt(Sentinel$Sentinel2_NDVI + 0.5)

Sentinel$NDMI <- (Sentinel$Sentinel2_NIR_2021_MedianComposite - Sentinel$Sentinel2_SWIR1_2021_MedianComposite) / (Sentinel$Sentinel2_NIR_2021_MedianComposite + Sentinel$Sentinel2_SWIR1_2021_MedianComposite) # normilized difference moisture index

Sentinel$COSRI <- Sentinel$Sentinel2_NDVI * ((Sentinel$Sentinel2_blue_2021_MedianComposite + Sentinel$Sentinel2_green_2021_MedianComposite)/(Sentinel$Sentinel2_red_2021_MedianComposite + Sentinel$Sentinel2_NIR_2021_MedianComposite)) # Combined Specteral Response Index

Sentinel$LSWI <- (Sentinel$Sentinel2_NIR_2021_MedianComposite - Sentinel$Sentinel2_SWIR1_2021_MedianComposite) / (Sentinel$Sentinel2_NIR_2021_MedianComposite + Sentinel$Sentinel2_SWIR1_2021_MedianComposite)

Sentinel$BrightnessIndex <- sqrt((Sentinel$Sentinel2_red_2021_MedianComposite^2) + (Sentinel$Sentinel2_NIR_2021_MedianComposite^2))

Sentinel$ClayIndex <- Sentinel$Sentinel2_SWIR1_2021_MedianComposite / Sentinel$Sentinel2_SWIR2_2021_MedianComposite

Sentinel$SalinityIndex <- (Sentinel$Sentinel2_SWIR1_2021_MedianComposite - Sentinel$Sentinel2_SWIR2_2021_MedianComposite) / (Sentinel$Sentinel2_SWIR1_2021_MedianComposite - Sentinel$Sentinel2_NIR_2021_MedianComposite)

Sentinel$CarbonateIndex <- Sentinel$Sentinel2_red_2021_MedianComposite / Sentinel$Sentinel2_green_2021_MedianComposite

Sentinel$GypsumIndex <- (Sentinel$Sentinel2_SWIR1_2021_MedianComposite - Sentinel$Sentinel2_SWIR2_2021_MedianComposite) / (Sentinel$Sentinel2_SWIR1_2021_MedianComposite + Sentinel$Sentinel2_SWIR2_2021_MedianComposite)

# RS MODIS

Modis$SAVI <- 1.5 * ((Modis$MODIS_NIR - Modis$MODIS_Red) / (Modis$MODIS_NIR + Modis$MODIS_Red + 0.5))

Modis$TVI <- sqrt(Modis$MODIS_NDVI + 0.5)

Modis$BrightnessIndex <- sqrt((Modis$MODIS_Red^2) + (Modis$MODIS_NIR^2))

df_names <- data.frame()

for (i in 1:length(names(Terrain))) {

c <- paste0("TE.",i)

df_names[i,1] <- c

df_names[i,2] <- names(Terrain)[i]

}

t <- nrow(df_names)

for (i in 1:length(names(Landsat))) {

c <- paste0("LA.",i)

df_names[i+t,1] <- c

df_names[i+t,2] <- names(Landsat)[i]

}

t <- nrow(df_names)

for (i in 1:length(names(Sentinel))) {

c <- paste0("SE.",i)

df_names[i+t,1] <- c

df_names[i+t,2] <- names(Sentinel)[i]

}

t <- nrow(df_names)

for (i in 1:length(names(Modis))) {

c <- paste0("MO.",i)

df_names[i+t,1] <- c

df_names[i+t,2] <- names(Modis)[i]

}

t <- nrow(df_names)

for (i in 1:length(names(Others))) {

c <- paste0("OT.",i)

df_names[i+t,1] <- c

df_names[i+t,2] <- names(Others)[i]

}

write.table(df_names,"./data/Covariates_names_DSM.txt")

x <- c(Terrain, Landsat, Sentinel, Modis, Others)

names(x) <- df_names[,1]

writeRaster(x, "./data/Stack_layers_DSM.tif", overwrite = TRUE)

# 01.5 Plot the covariates maps ================================================

covariates <- rast("./data/Stack_layers_DSM.tif")

reduce <- aggregate(covariates, fact=10, fun=modal)

writeRaster(reduce, "./data/Stack_layers_DSM_reduce.tif", overwrite = TRUE)

plot(reduce)

# 01.6 Extract the values ======================================================

# Extract the values of each band for the sampling location

df_cov <- soil_infos_sp

for (i in 1:length(df_cov)) {

df_cov[[i]] <- extract(covariates, df_cov[[i]], method='simple')

df_cov[[i]] <- as.data.frame(df_cov[[i]])

write.csv(df_cov[[i]], paste0("./data/df_",names(df_cov[i]),"_cov_DSM.csv"))

}

# 01.7 Export and save data ====================================================

save(df_cov, soil_infos_sp, file = "./export/save/Preprocess.RData")

rm(list = ls())

# 02 Check the data ############################################################

# 02.1 Import the data and merge ===============================================

make_subdir <- function(parent_dir, subdir_name) {

path <- file.path(parent_dir, subdir_name)

if (!dir.exists(path)) {

dir.create(path, recursive = TRUE)

message("✅ Folder created", path)

} else {

message("ℹ️ Existing folder : ", path)

}

return(path)

}

make_subdir("./export", "preprocess")

load(file = "./export/save/Preprocess.RData")

SoilCov <- df_cov

for (i in 1:length(SoilCov)) {

ID <- 1:nrow(SoilCov[[i]])

SoilCov[[i]] <- cbind(df_cov[[i]], ID, st_drop_geometry(soil_infos_sp[[i]]))

cat("There is ", sum(is.na(SoilCov[[i]])== TRUE), "Na values in ", names(SoilCov[i])," soil list \n")

}

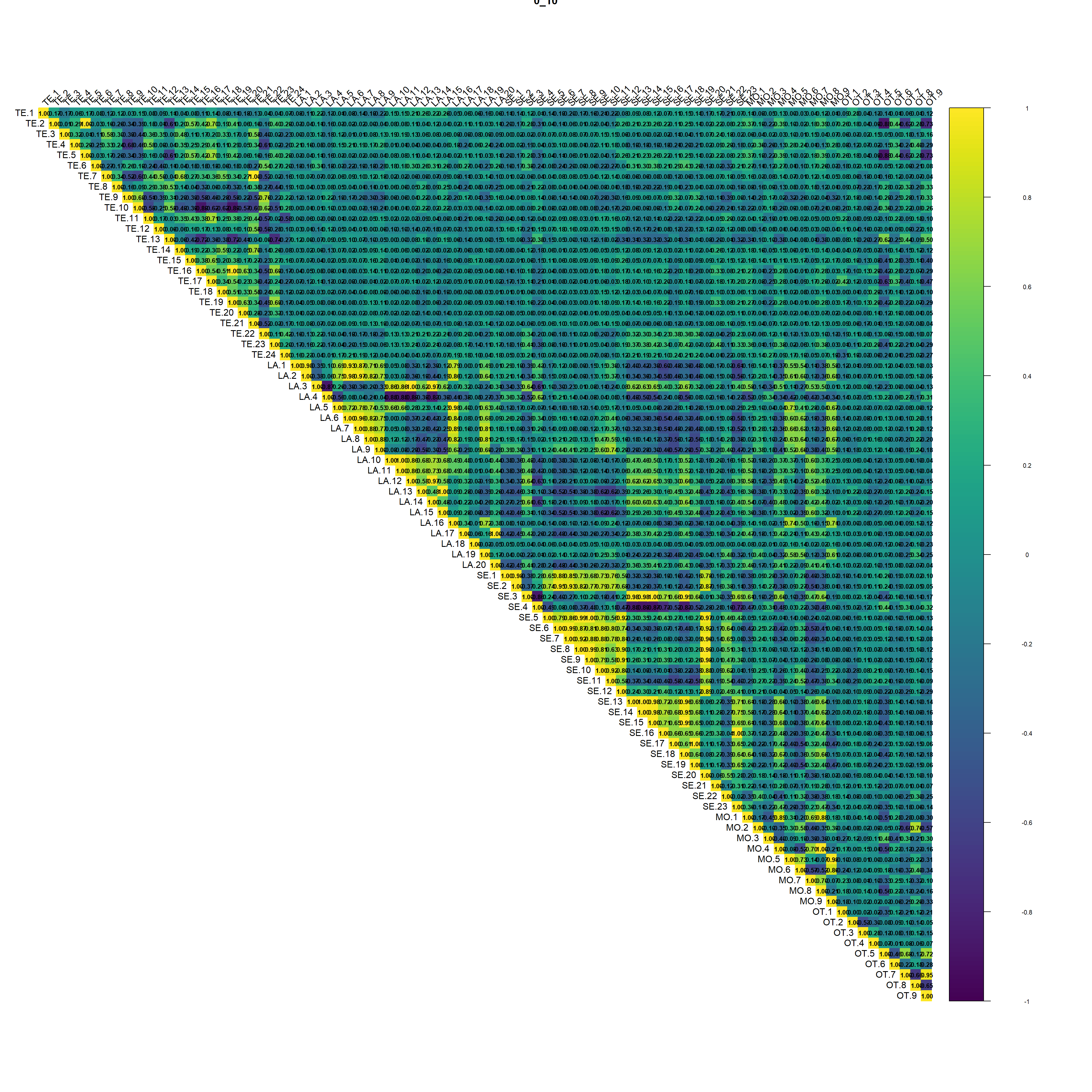

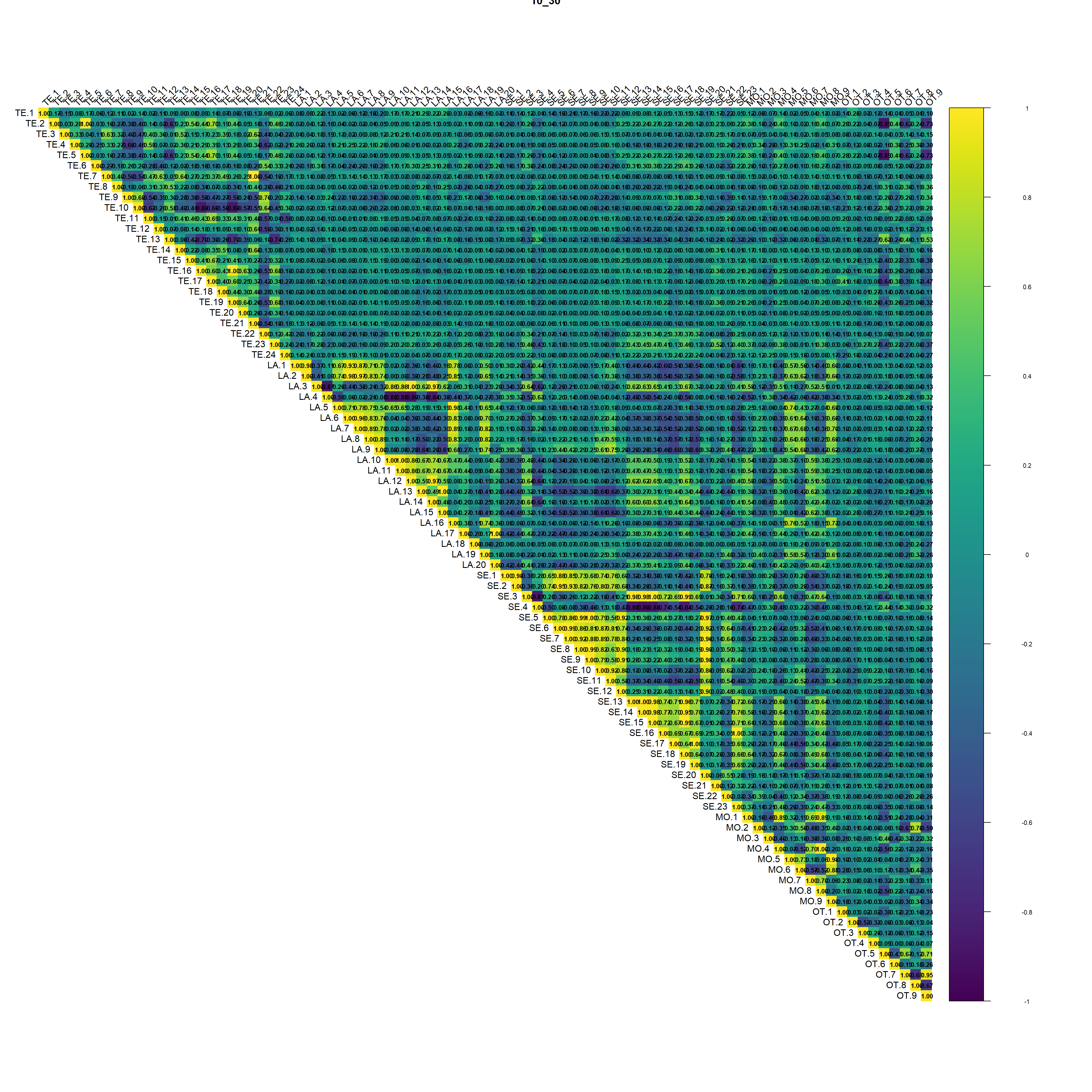

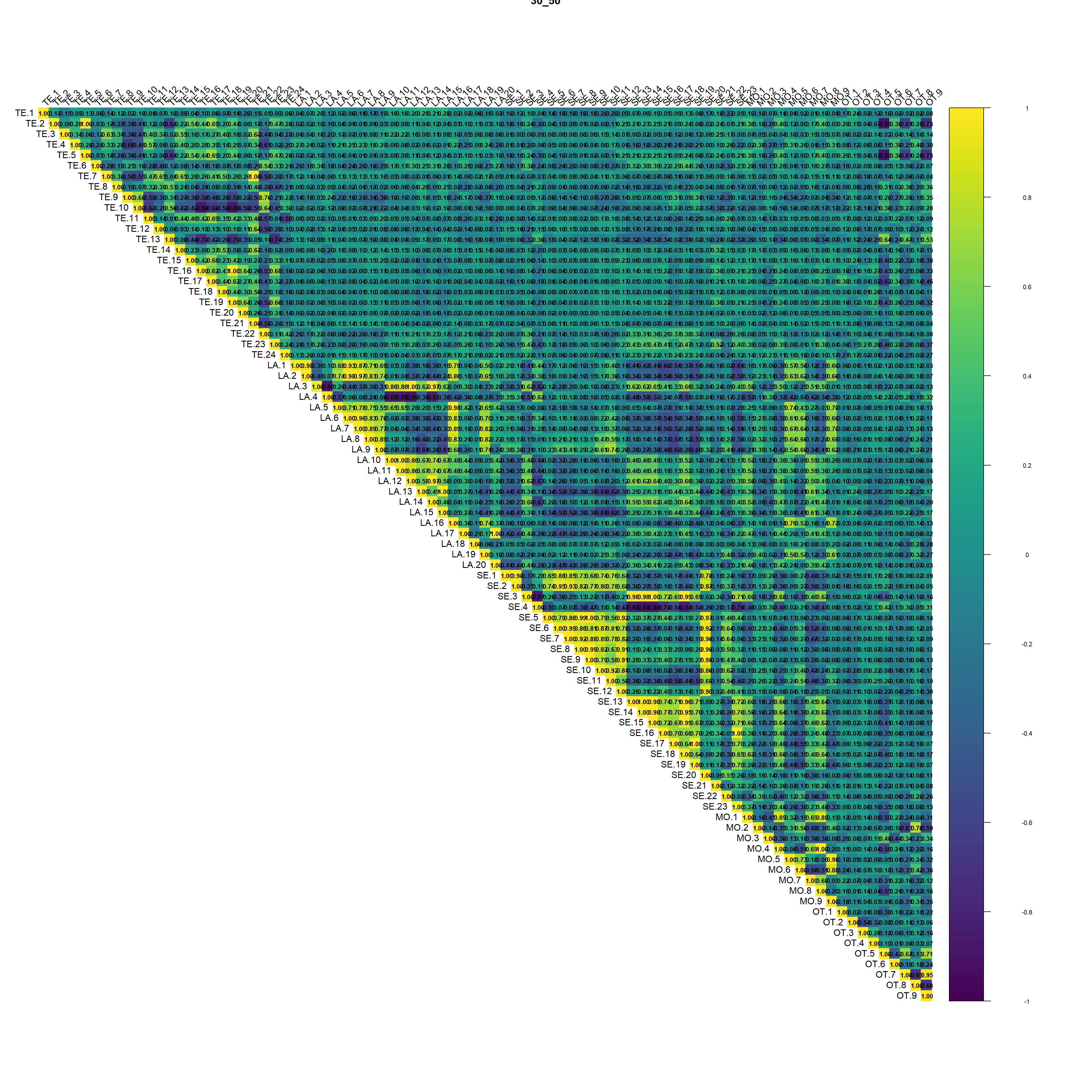

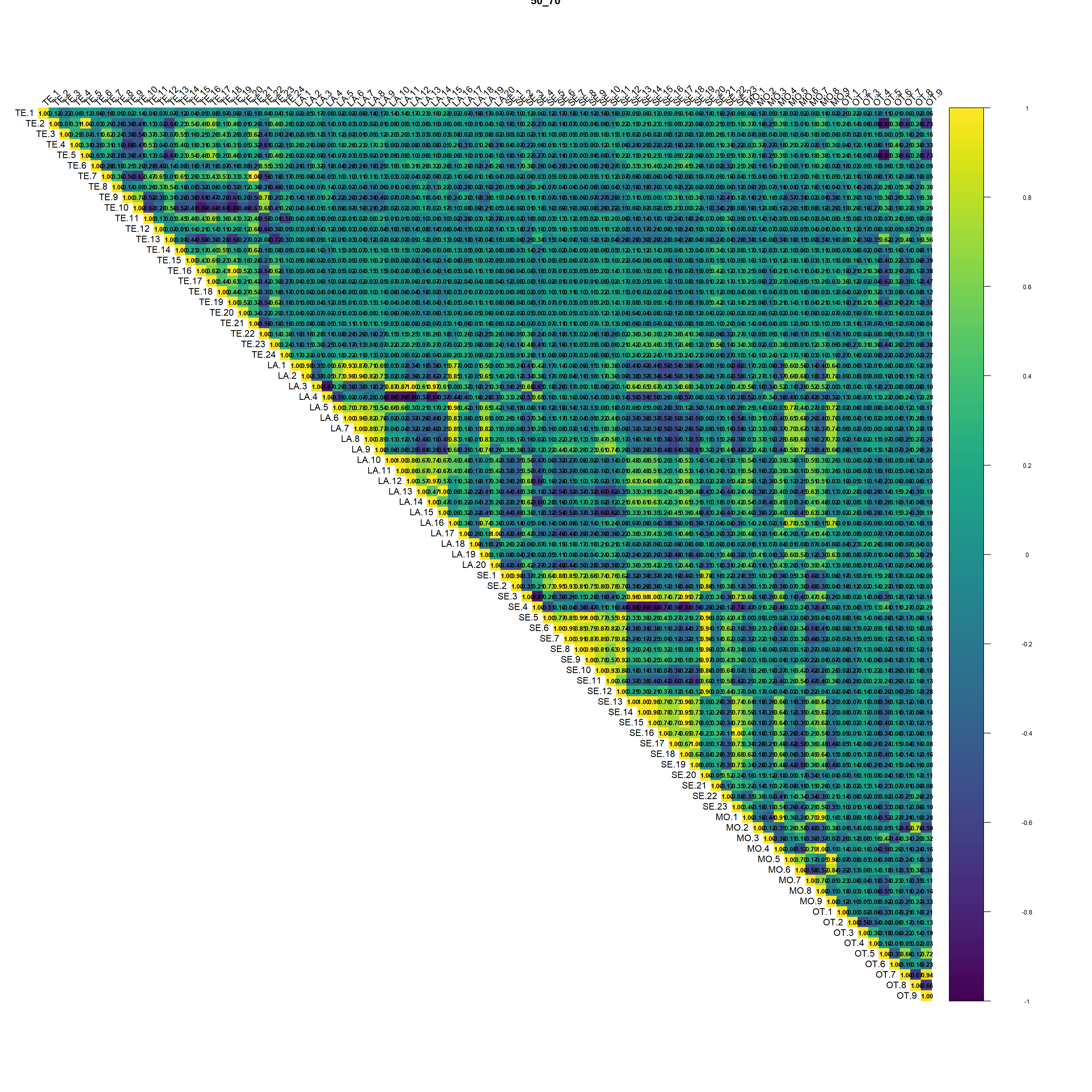

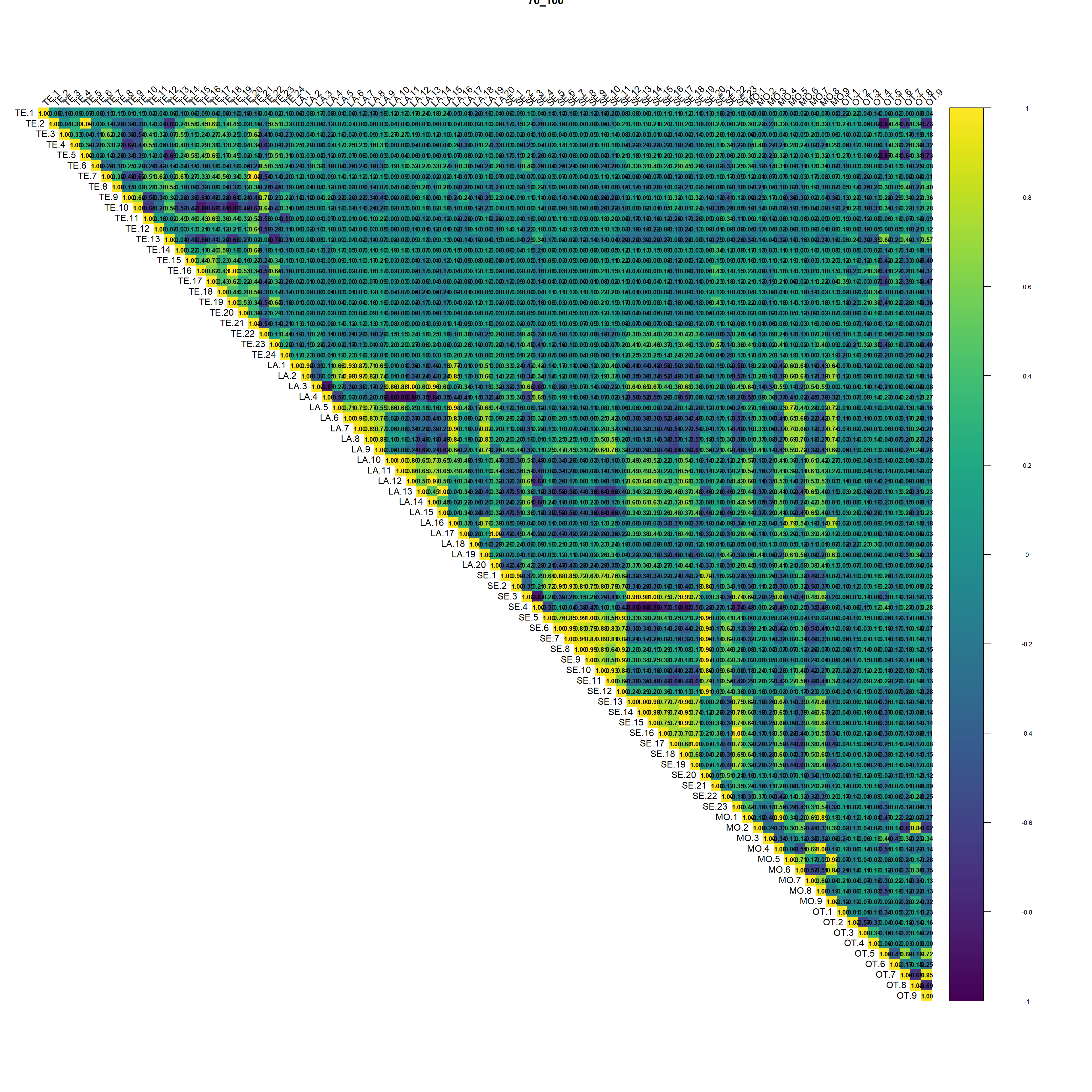

# 02.2 Plot and export the correlation matrix ==================================

for (i in 1:length(df_cov)) {

pdf(paste0("./export/preprocess/Correlation_",names(df_cov[i]), ".pdf"), # File name

width = 40, height = 40, # Width and height in inches

bg = "white", # Background color

colormodel = "cmyk") # Color model

# Correlation of the data (remove discrete data)

corrplot(cor(df_cov[[i]][,-c(1,79:81)]), method = "color", col = viridis(200),

type = "upper",

addCoef.col = "black", # Add coefficient of correlation

tl.col = "black", tl.srt = 45, # Text label color and rotation

number.cex = 0.7, # Size of the text labels

cl.cex = 0.7, # Size of the color legend text

cl.lim = c(-1, 1)) # Color legend limits

dev.off()

}

Regarding the correlation plot the Landsat and Sentinel bands are the ones with the higher correlation followed by terrain derivatives from DEM.

# 02.3 Select with VIF correlation =============================================

vif <- df_cov

vif_plot <- df_cov

for (i in 1:length(df_cov)) {

vif[[i]] <-vifcor(df_cov[[i]], th=0.8)

vif_df <- as.data.frame(vif[[i]]@results)

write.table(vif_df, paste0("./export/VIF/vif_results_",names(df_cov[i]) ,"_soil.txt"))

vif_plot[[i]] <- ggplot(vif_df, aes(x = reorder(Variables, VIF), y = VIF)) +

geom_bar(stat = "identity", fill = "lightblue") +

coord_flip() +

theme_minimal() +

labs(title = paste0("VIF Values for ", names(df_cov[i]) ," soil"), x = "Variables", y = "VIF") +

theme(axis.text.x = element_text(angle = 45, hjust = 1))

ggsave(paste0("./export/VIF/VIF_", names(df_cov[i]),"_soil.png"), vif_plot[[i]], width = 12, height = 8)

ggsave(paste0("./export/VIF/VIF_", names(df_cov[i]),"_soil.pdf"), vif_plot[[i]], width = 12, height = 8)

}

# 02.4 Statistics ==============================================================

increments <- c("0_10", "10_30", "30_50", "50_70", "70_100")

make_subdir("./export", "Boruta")

for (depth in increments) {

make_subdir("./export/Boruta", depth)

make_subdir("./export/RFE", depth)

make_subdir("./export/preprocess", depth)

# Basic statistics

print(names(SoilCov[[depth]]))

print(nrow(SoilCov[[depth]]))

# If necessary

SoilCov[[depth]] <- na.omit(SoilCov[[depth]])

print(head(SoilCov[[depth]]))

sapply(SoilCov[[depth]], class)

print(summary(SoilCov[[depth]]))

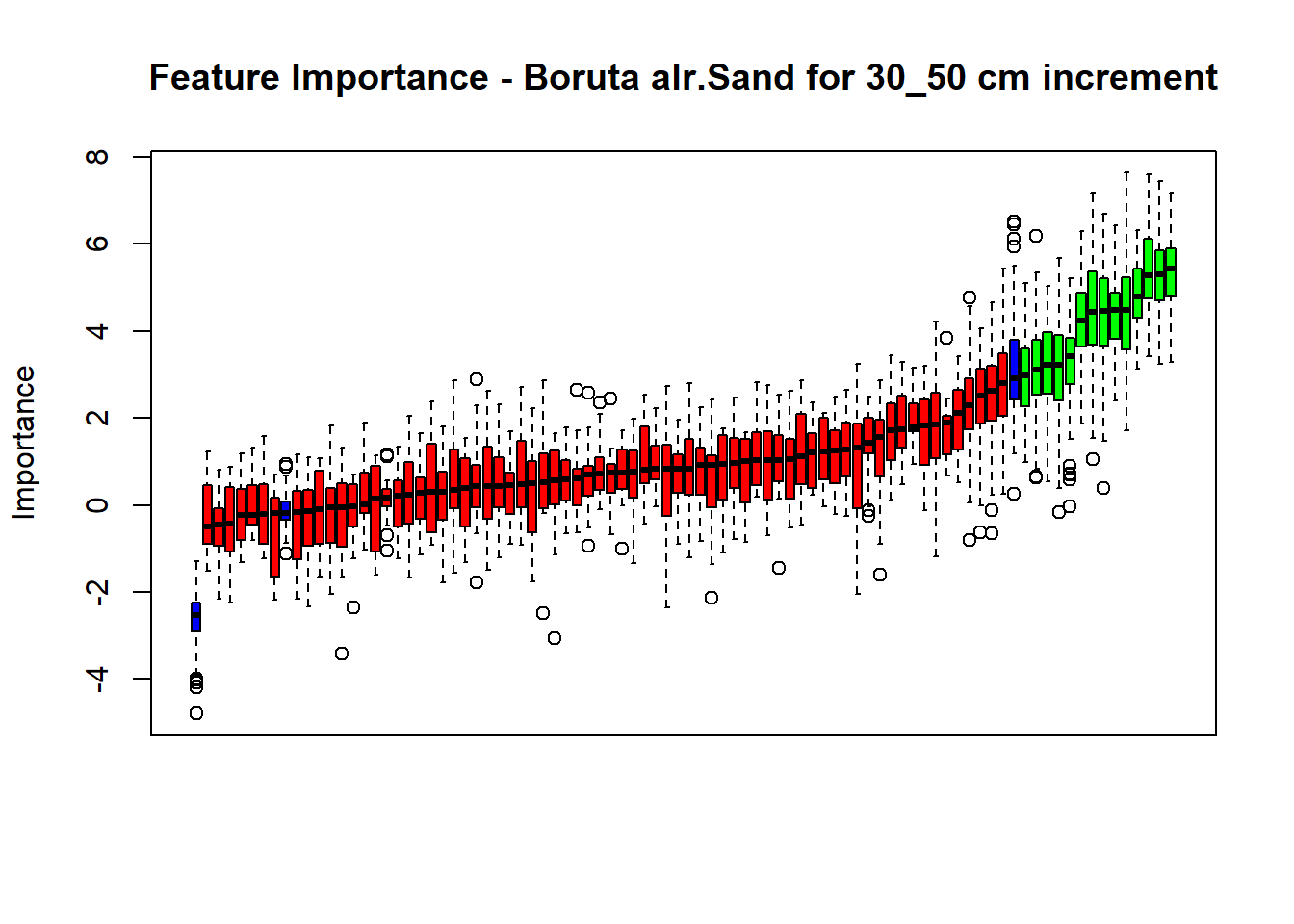

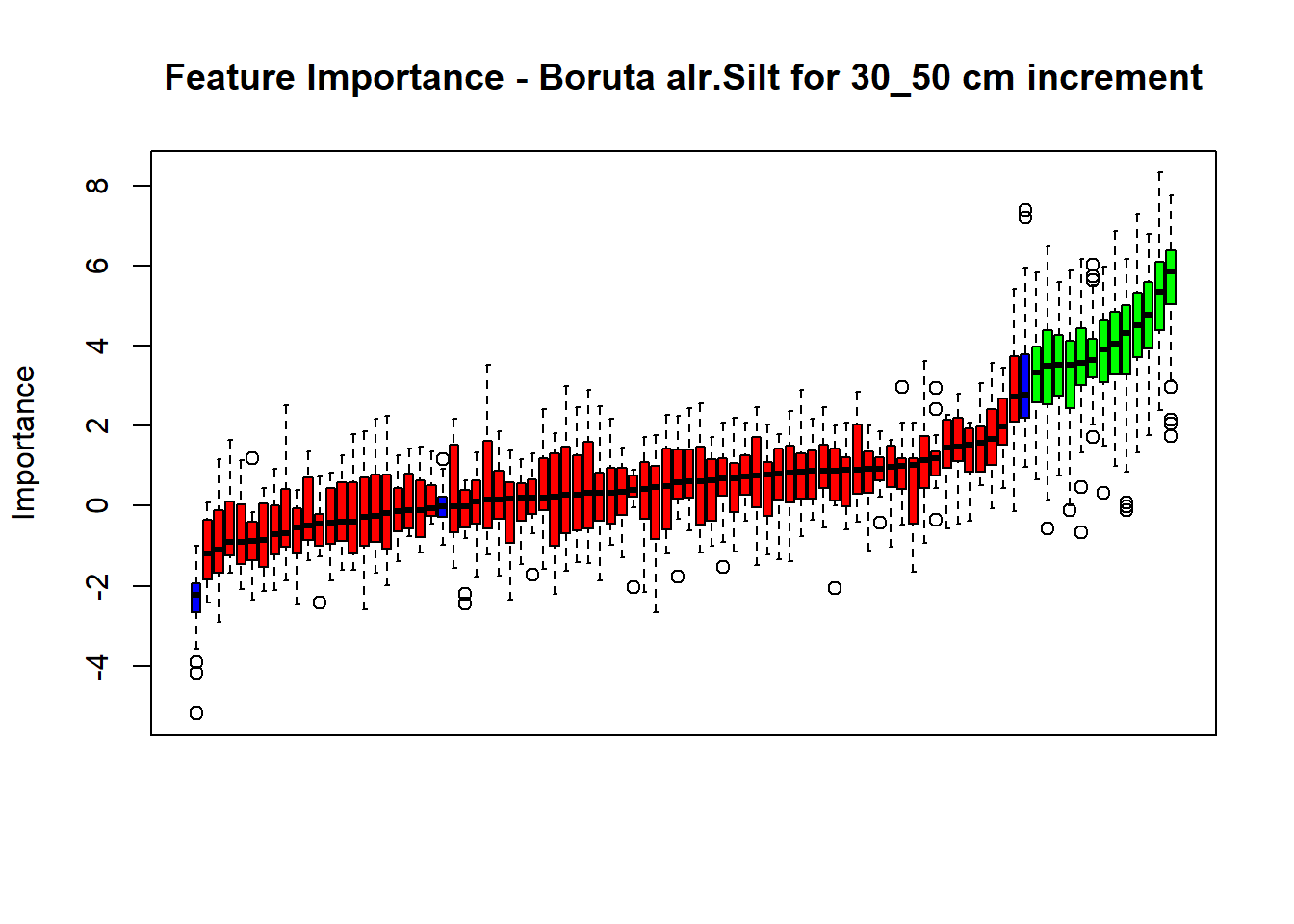

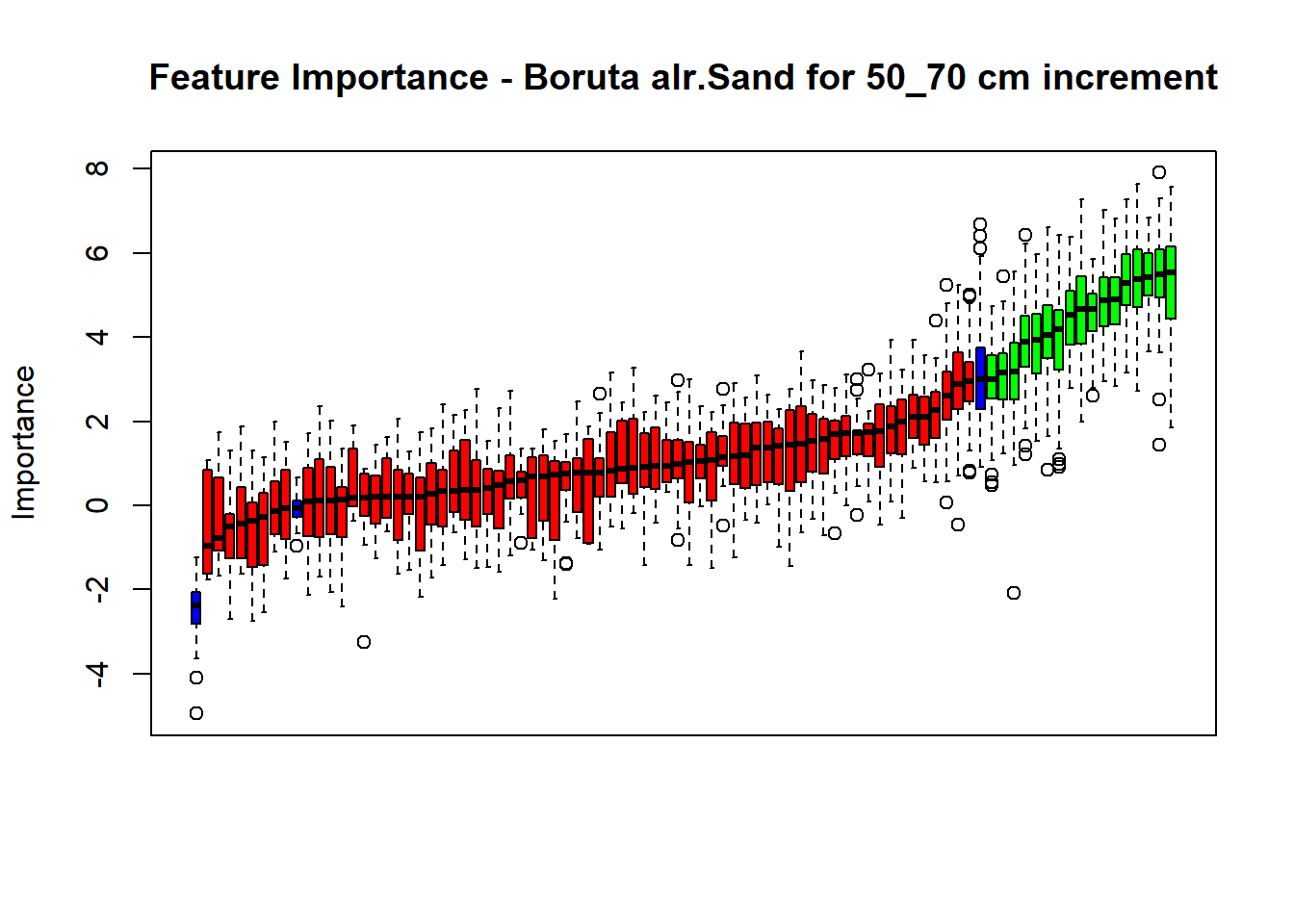

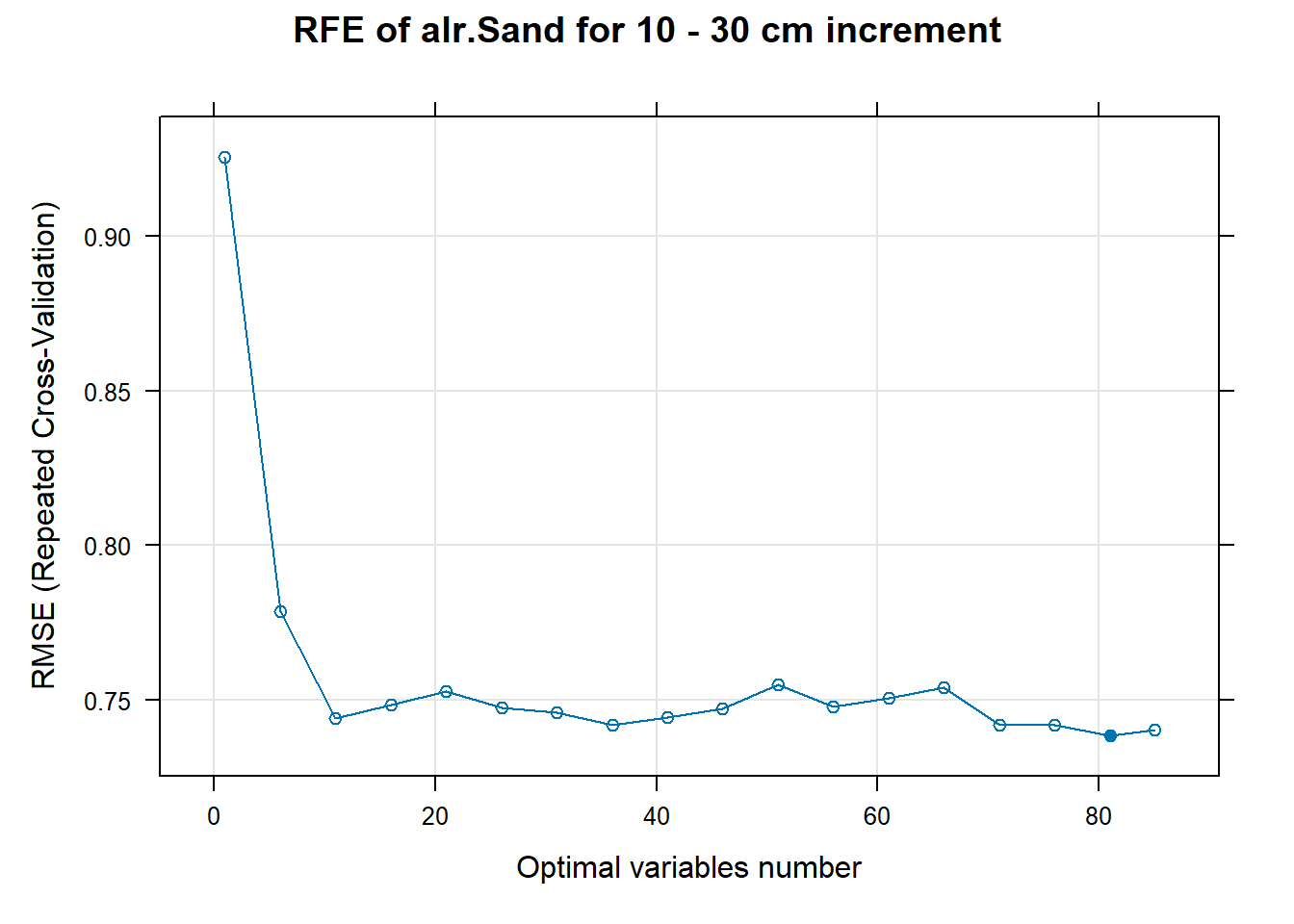

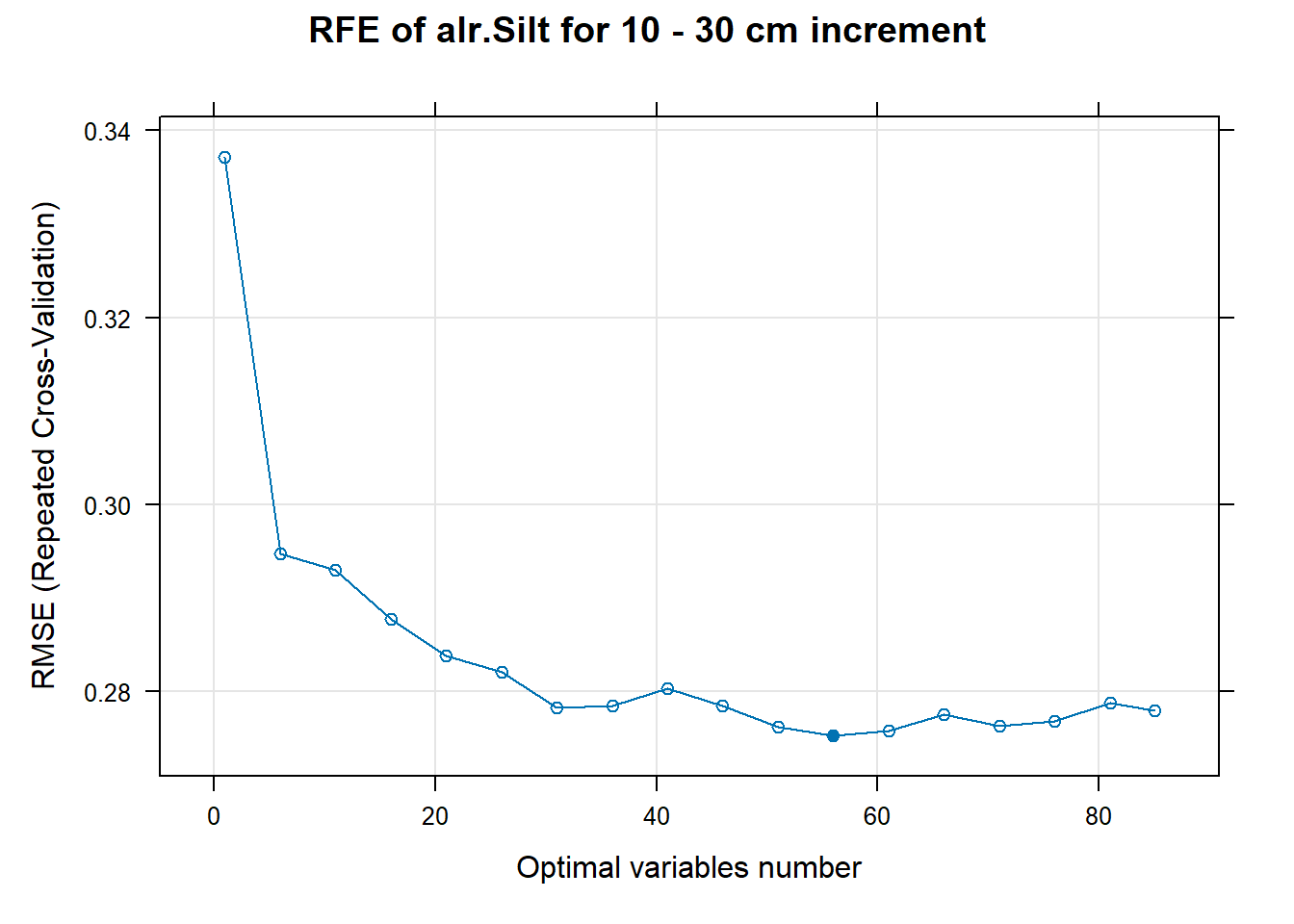

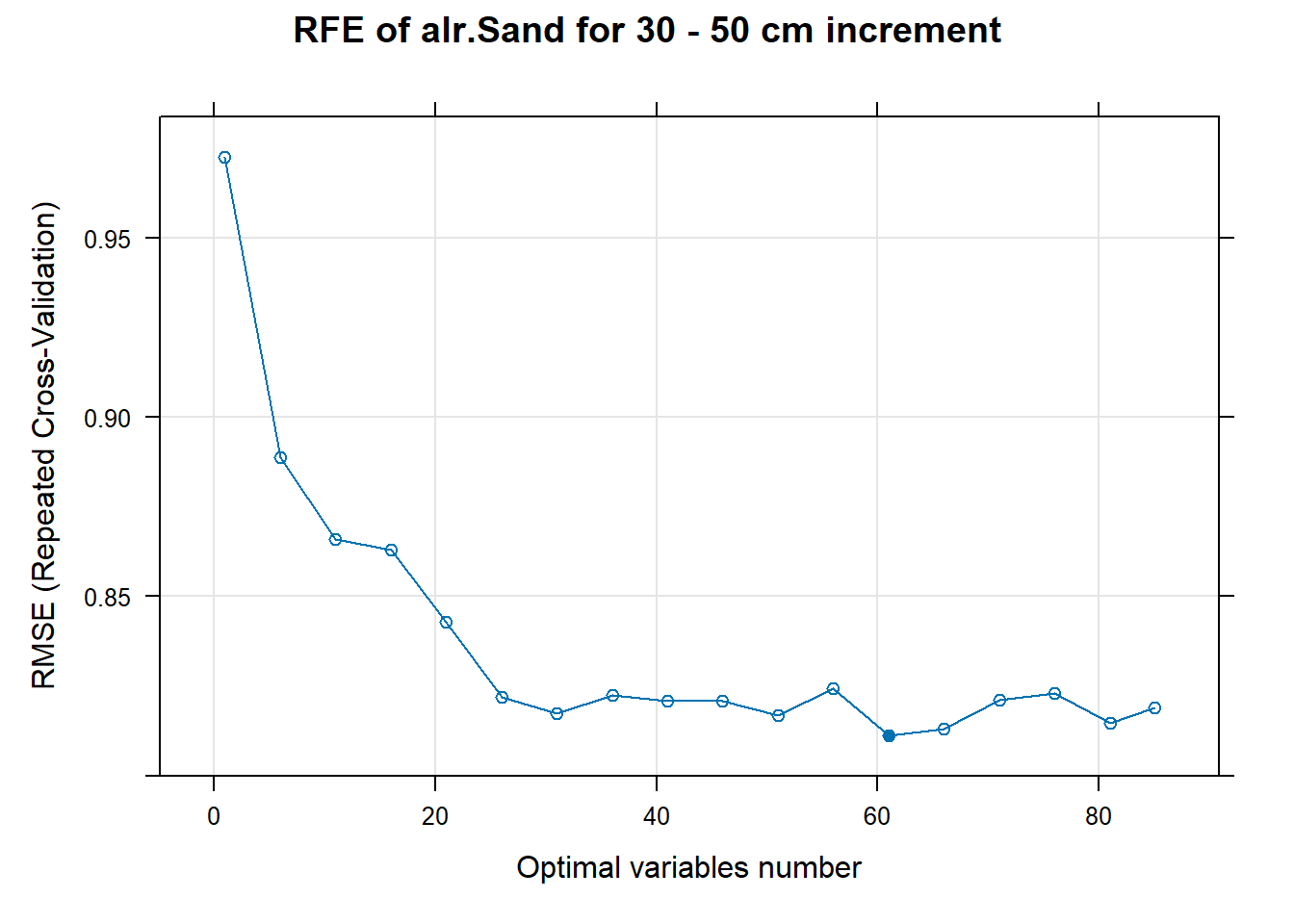

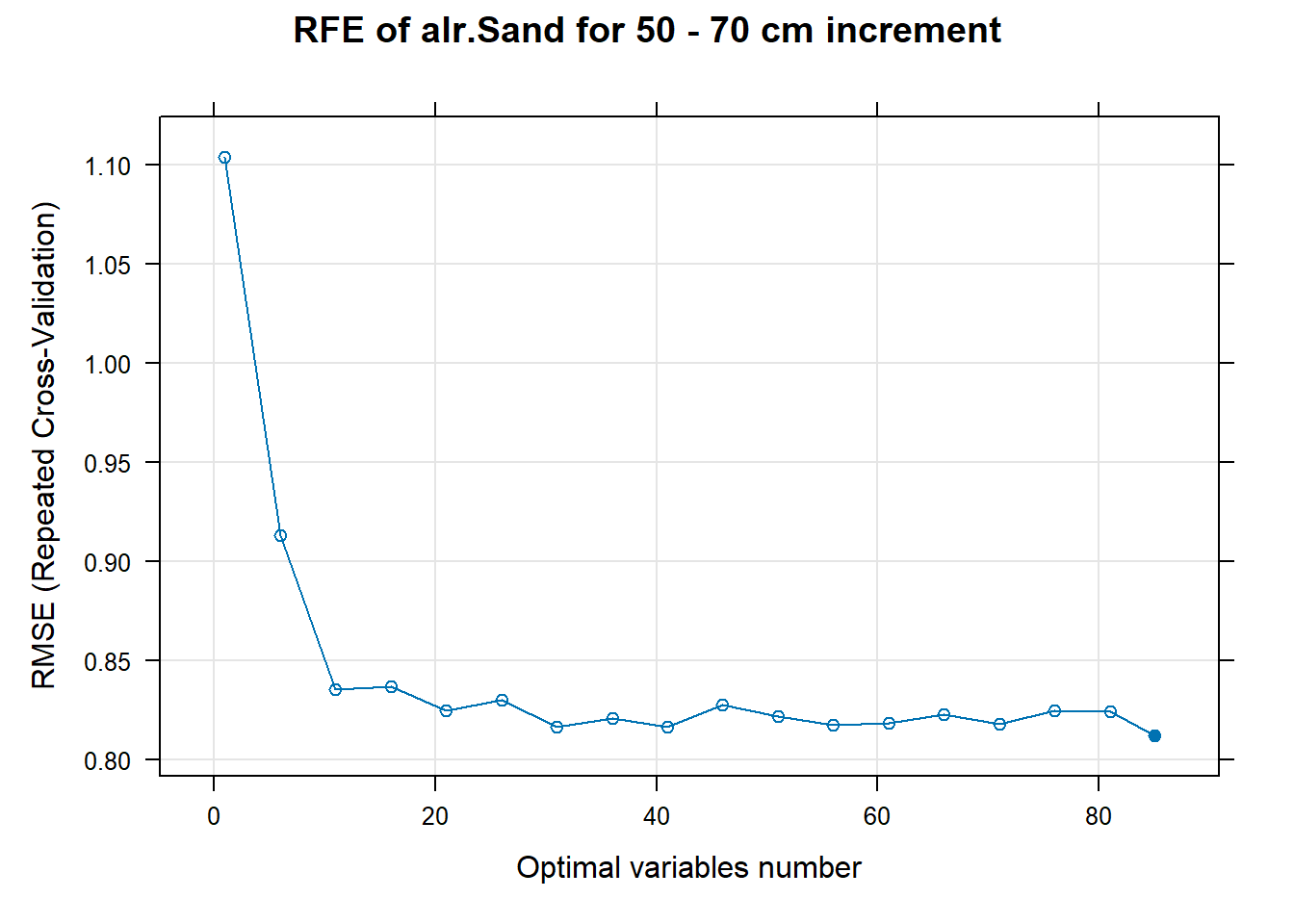

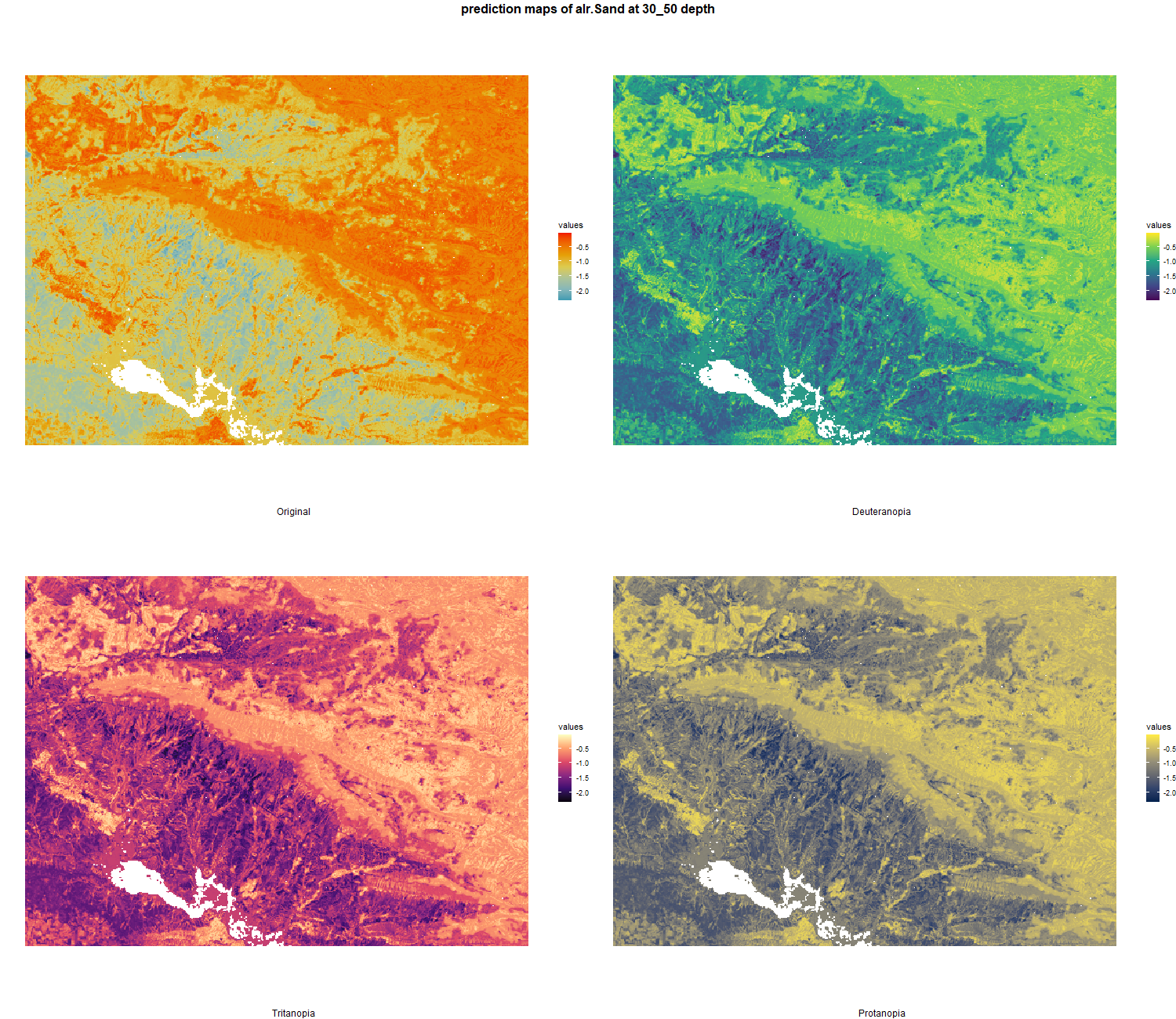

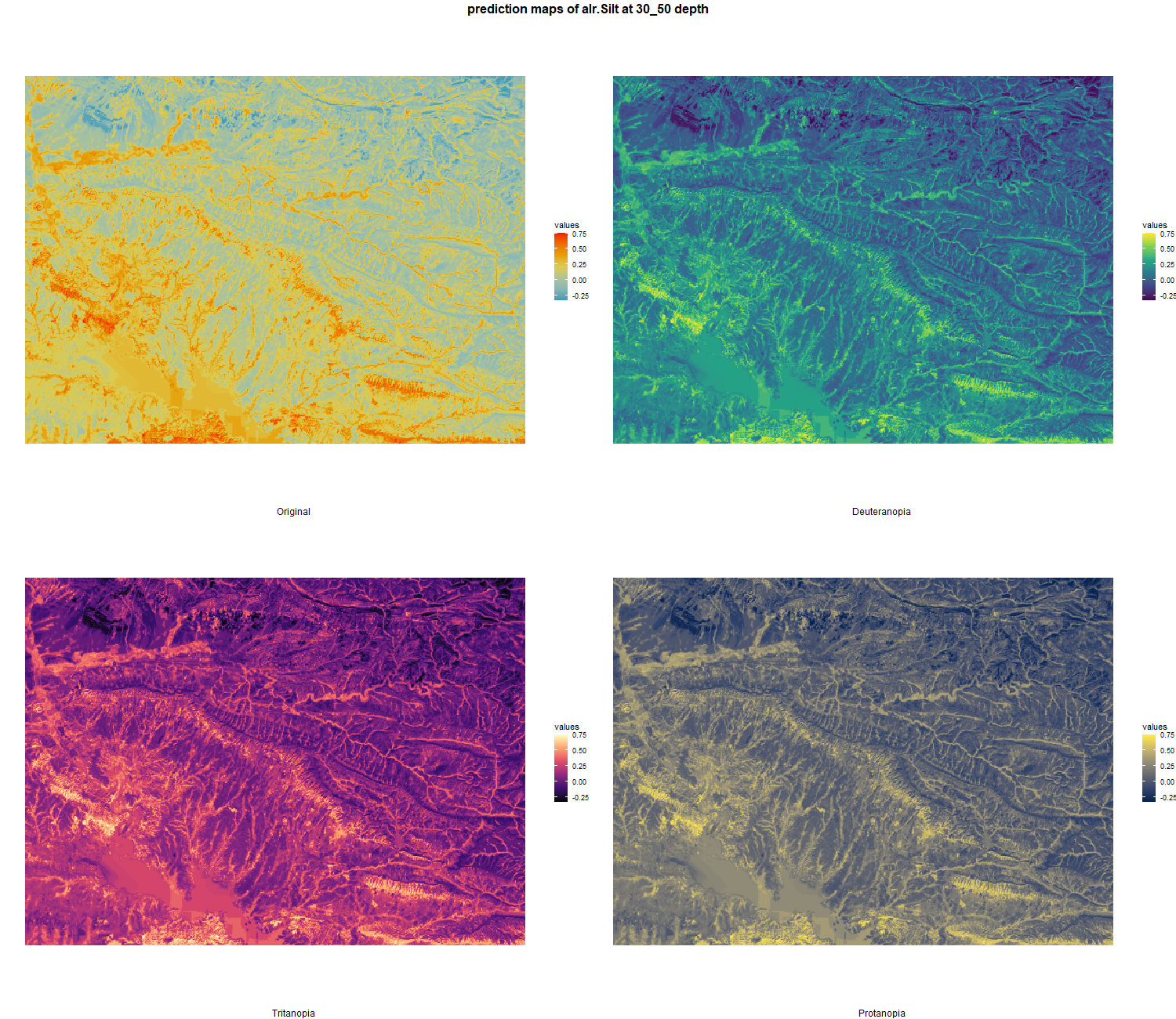

}7.2.4 ALR transformation

# 03 Check covariates influences ###############################################

Cov <- list()

nzv_vars <- list()

cl <- makeCluster(6)

registerDoParallel(cl)

# 03.1 Transform the soiltexture and remove nzv ================================

for (depth in increments) {

depth_name <- gsub("_", " - ", depth)

SoilCovMLCon <- SoilCov[[depth]][,-c(1,87:88)] # Remove ID and site name

NumCovLayer = 85 # define number of covariate layer after hot coding

StartTargetCov = NumCovLayer + 1 # start column after all covariates

NumDataCol= ncol(SoilCovMLCon) # number of column in all data set

# Remove and save NZV

nzv <- nearZeroVar(SoilCovMLCon[,1:NumCovLayer], saveMetrics = TRUE)

nzv_vars[[depth]] <- rownames(nzv)[nzv$nzv == TRUE]

print(rownames(nzv)[nzv$nzv == TRUE])

# Realise the PSF transformation

texture_df <- SoilCovMLCon[,c((NumDataCol-3):(NumDataCol-1))]

colnames(texture_df) <- c("SAND","SILT", "CLAY")

texture_df <- TT.normalise.sum(texture_df, css.names = c("SAND","SILT", "CLAY"))

colnames(texture_df) <- colnames(SoilCovMLCon[,c((NumDataCol-3):(NumDataCol-1))])

alr_df <- as.data.frame(alr(texture_df)) # Additive-log ratio with Sand/Clay and Silt/Clay (last column is taken)

colnames(alr_df) <- c("alr.Sand", "alr.Silt")

SoilCovMLCon <- SoilCovMLCon[,-c((NumDataCol-3):(NumDataCol-1))]

SoilCovMLCon <- cbind(SoilCovMLCon, alr_df, texture_df)

NumDataCol <- (NumDataCol -1)

Cov[[depth]] <- SoilCovMLCon

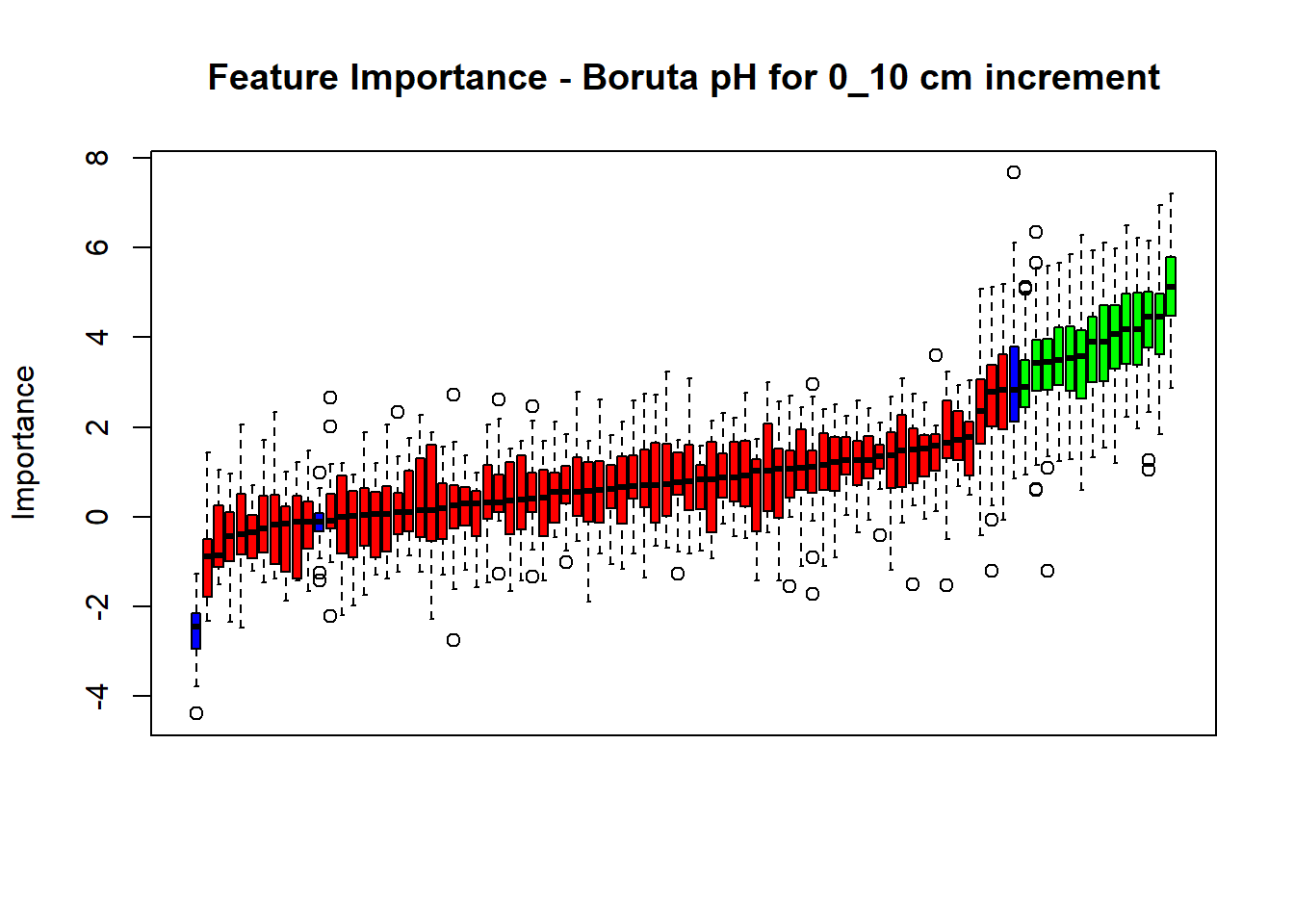

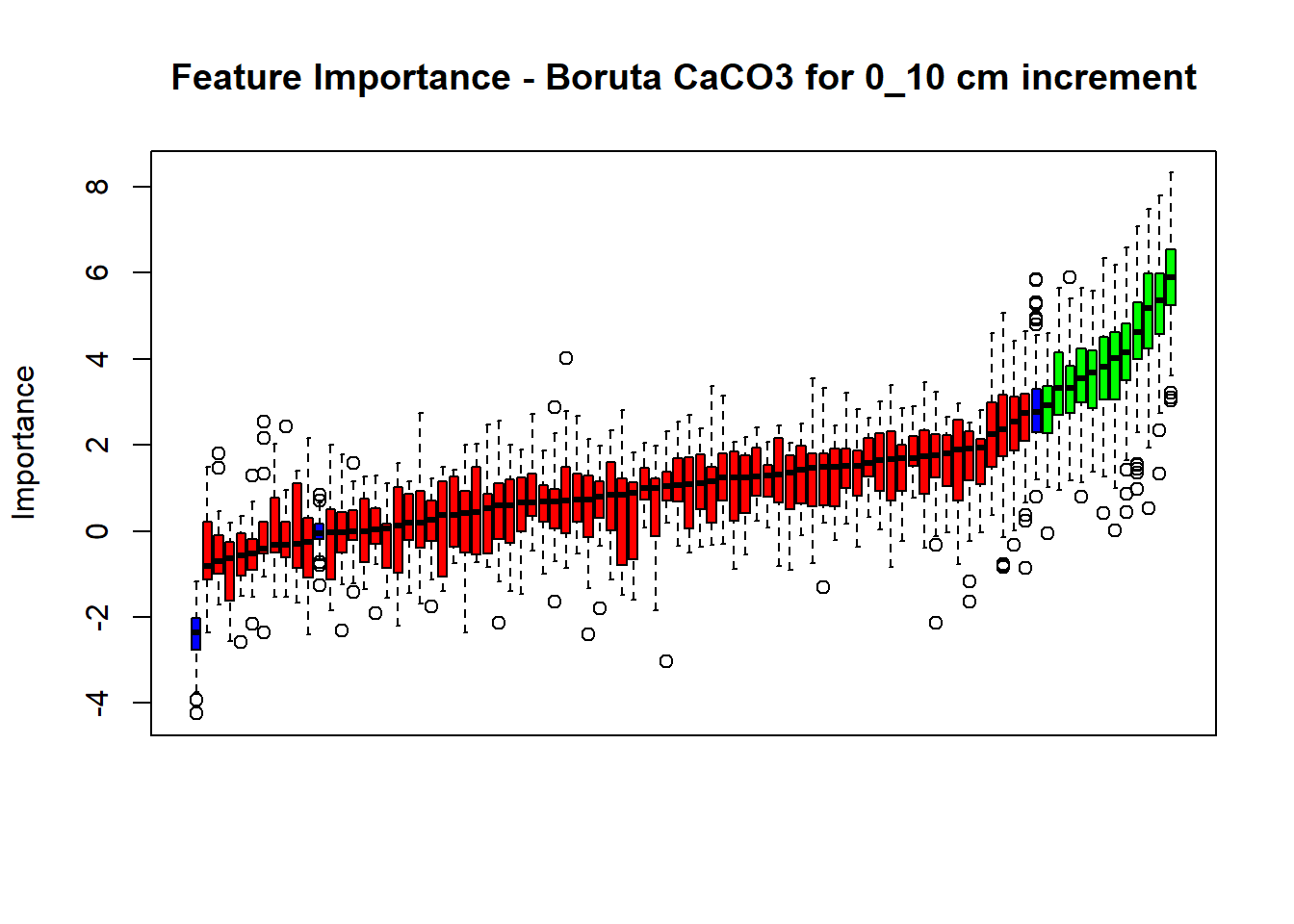

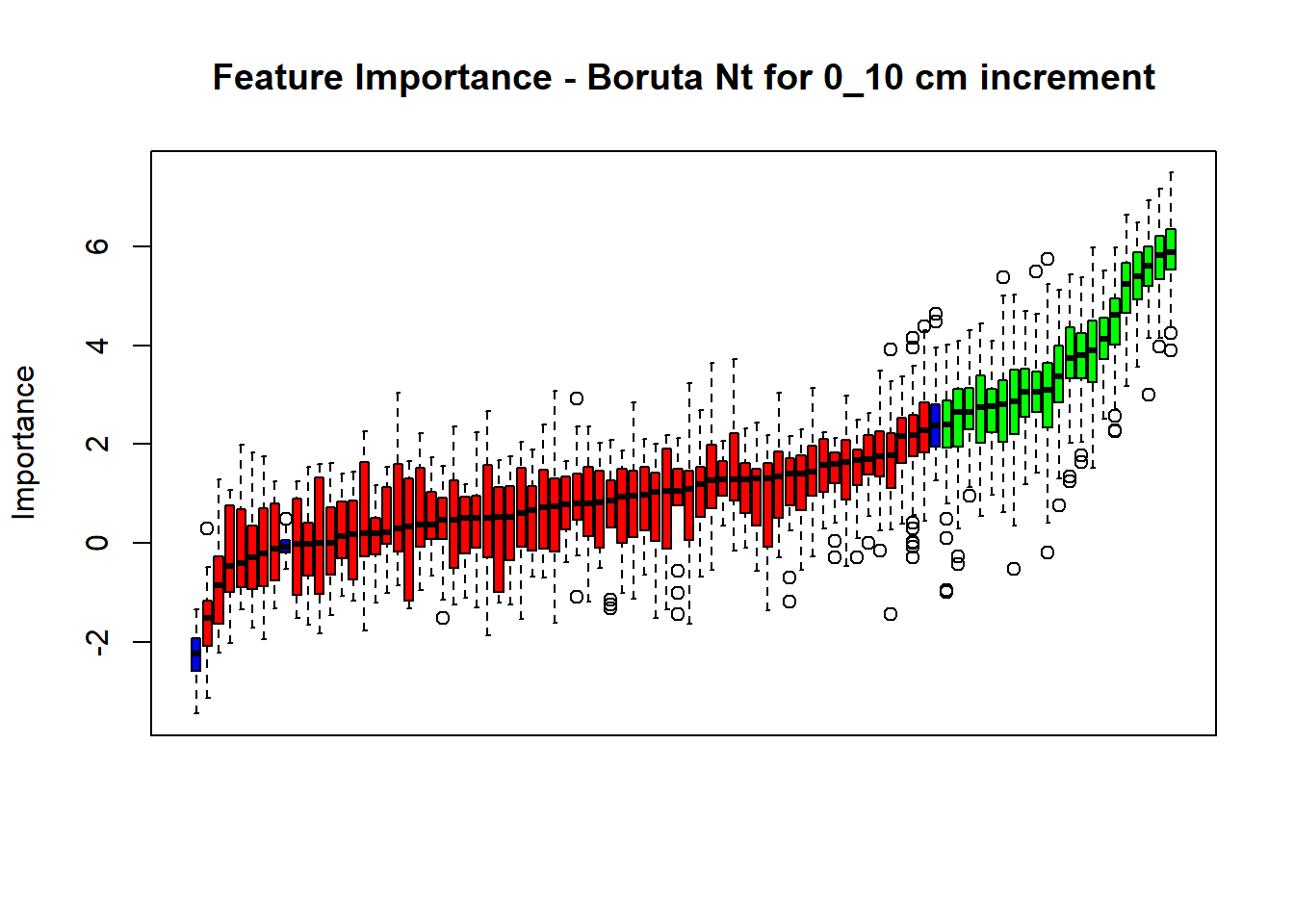

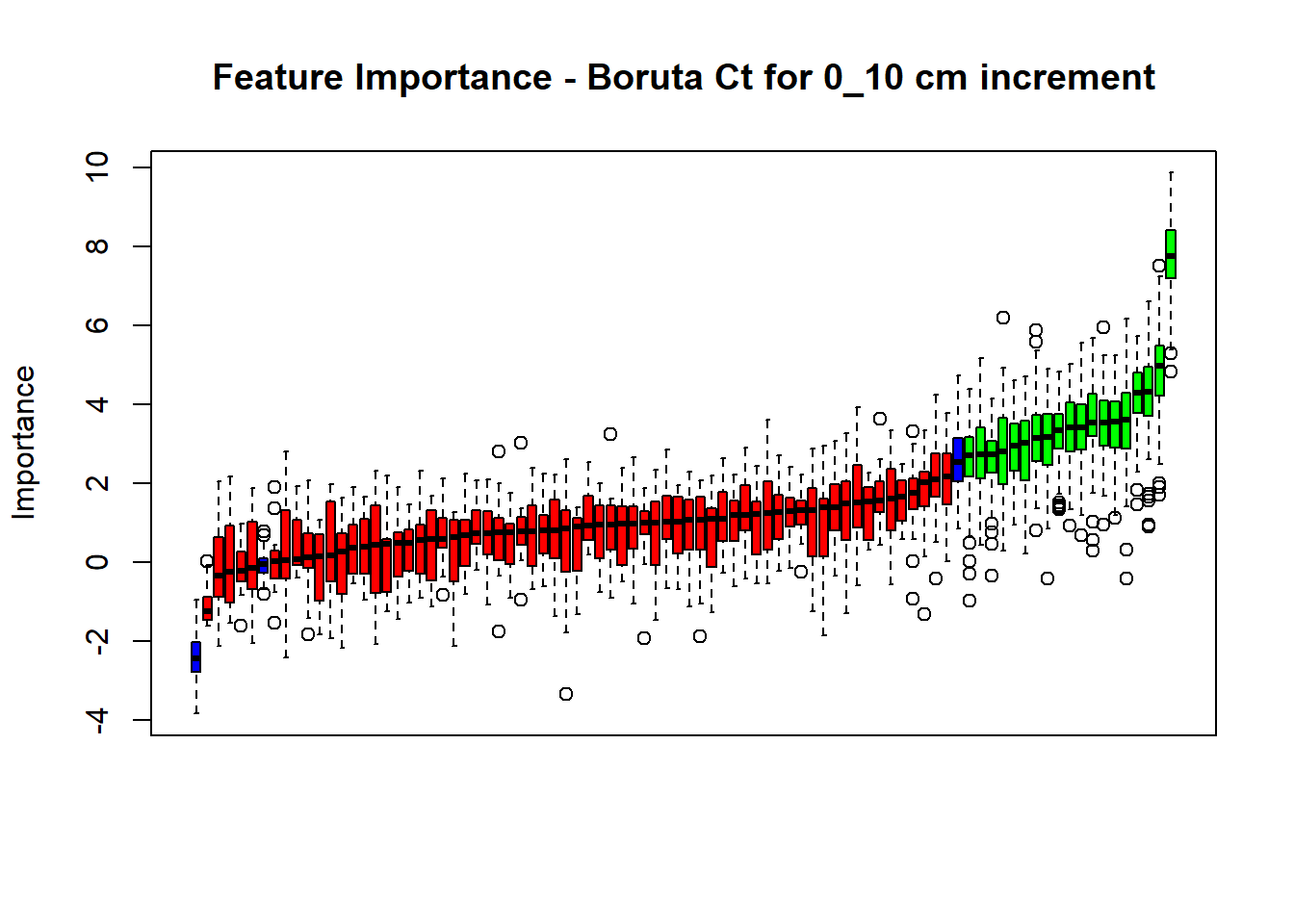

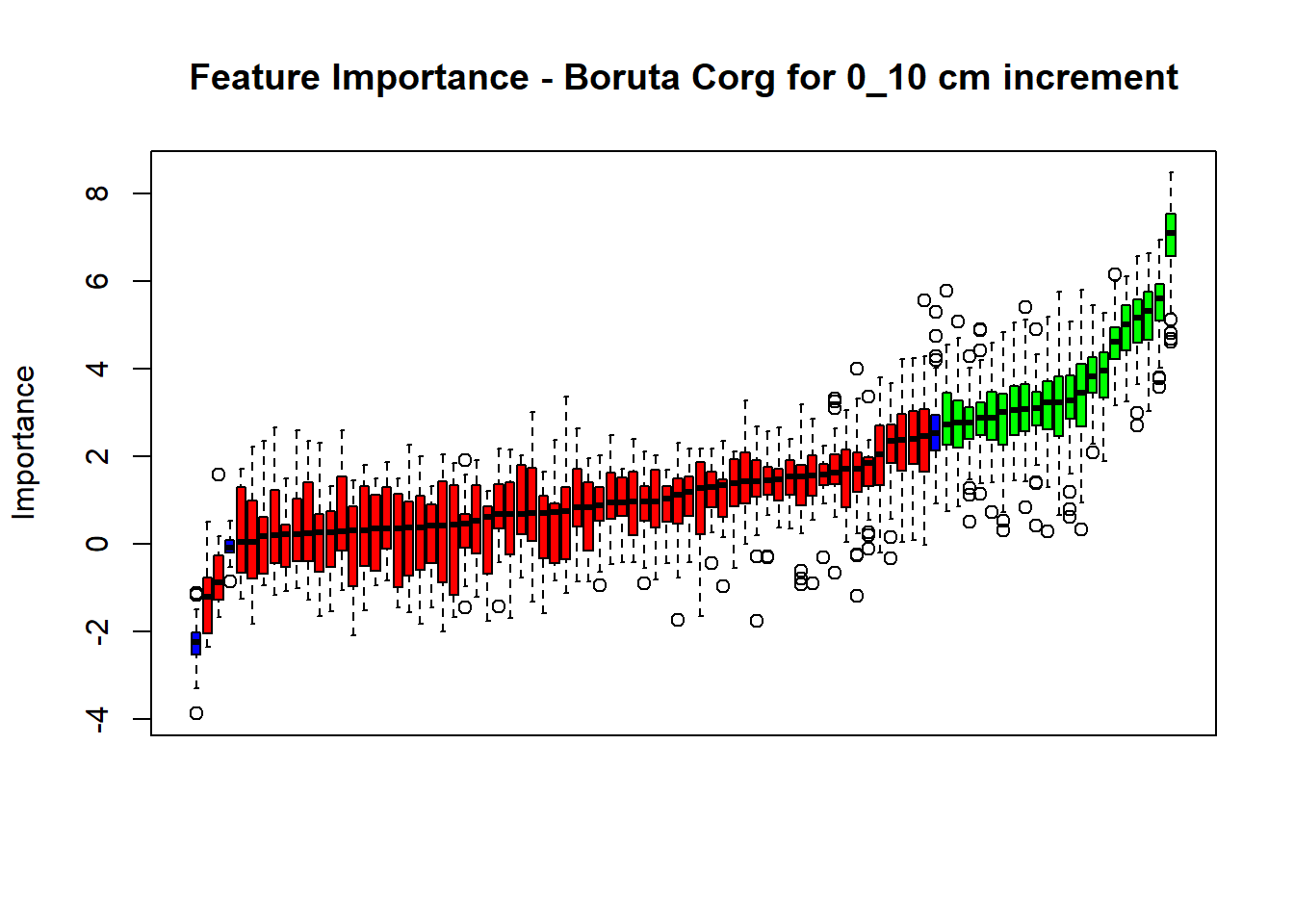

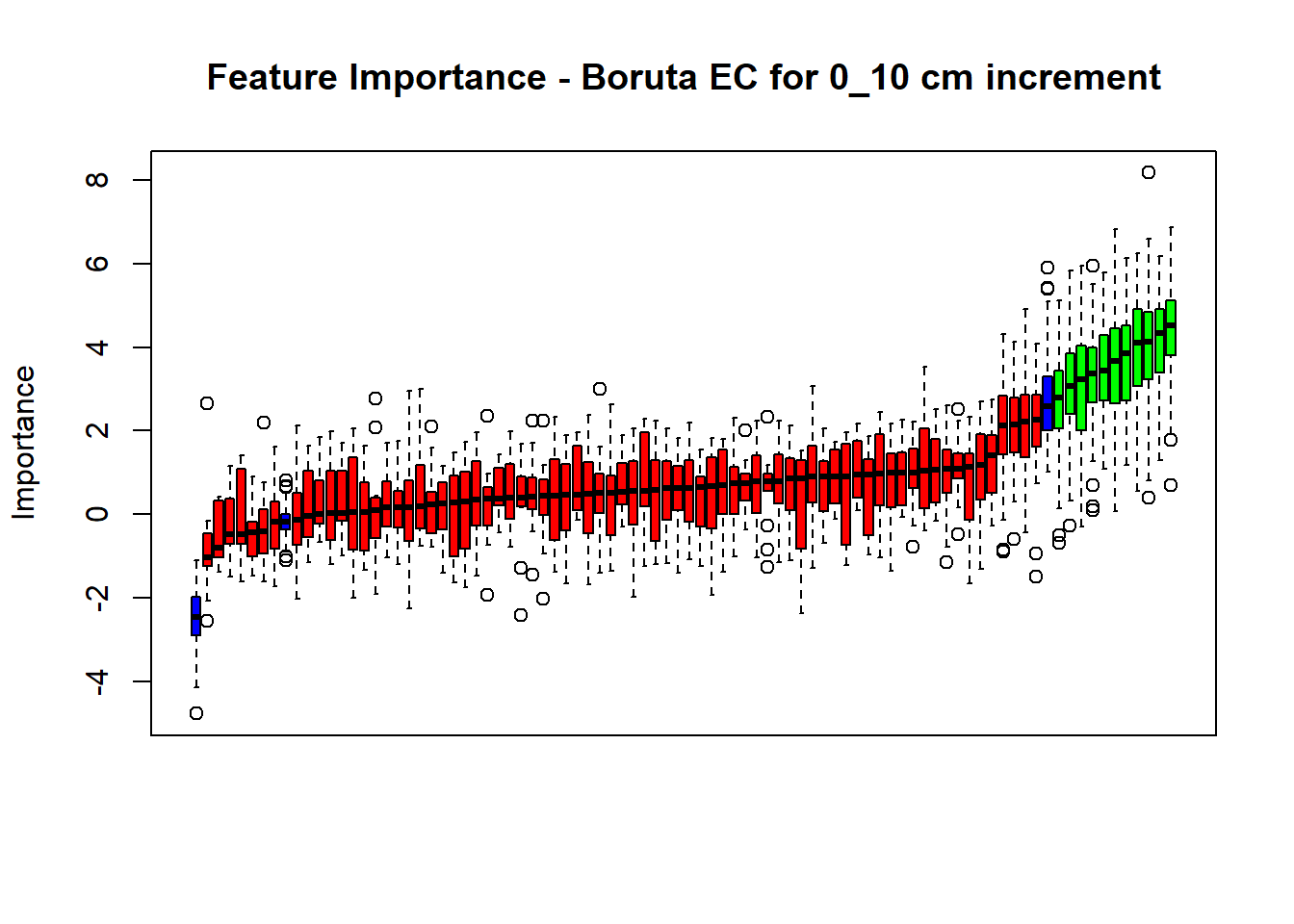

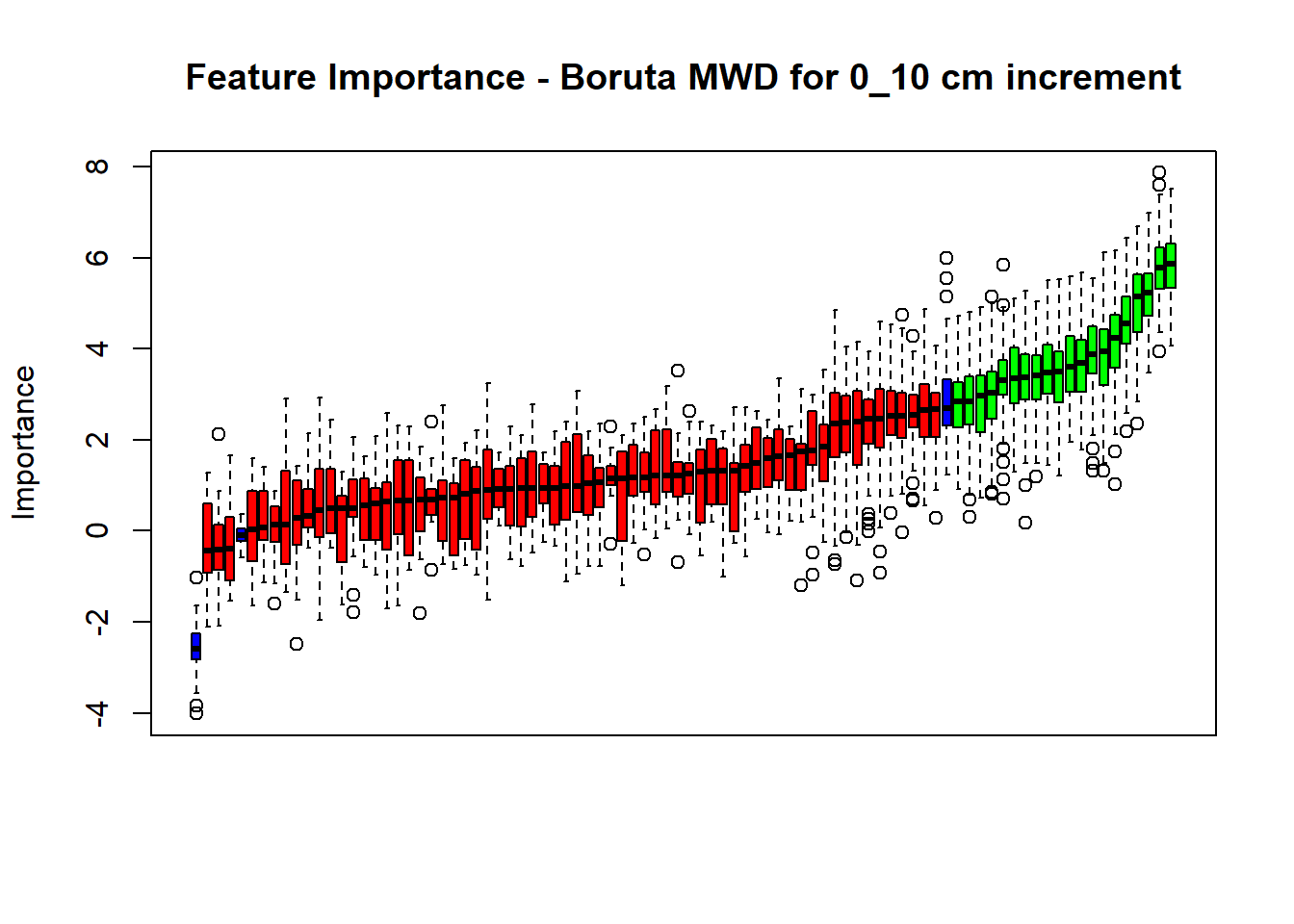

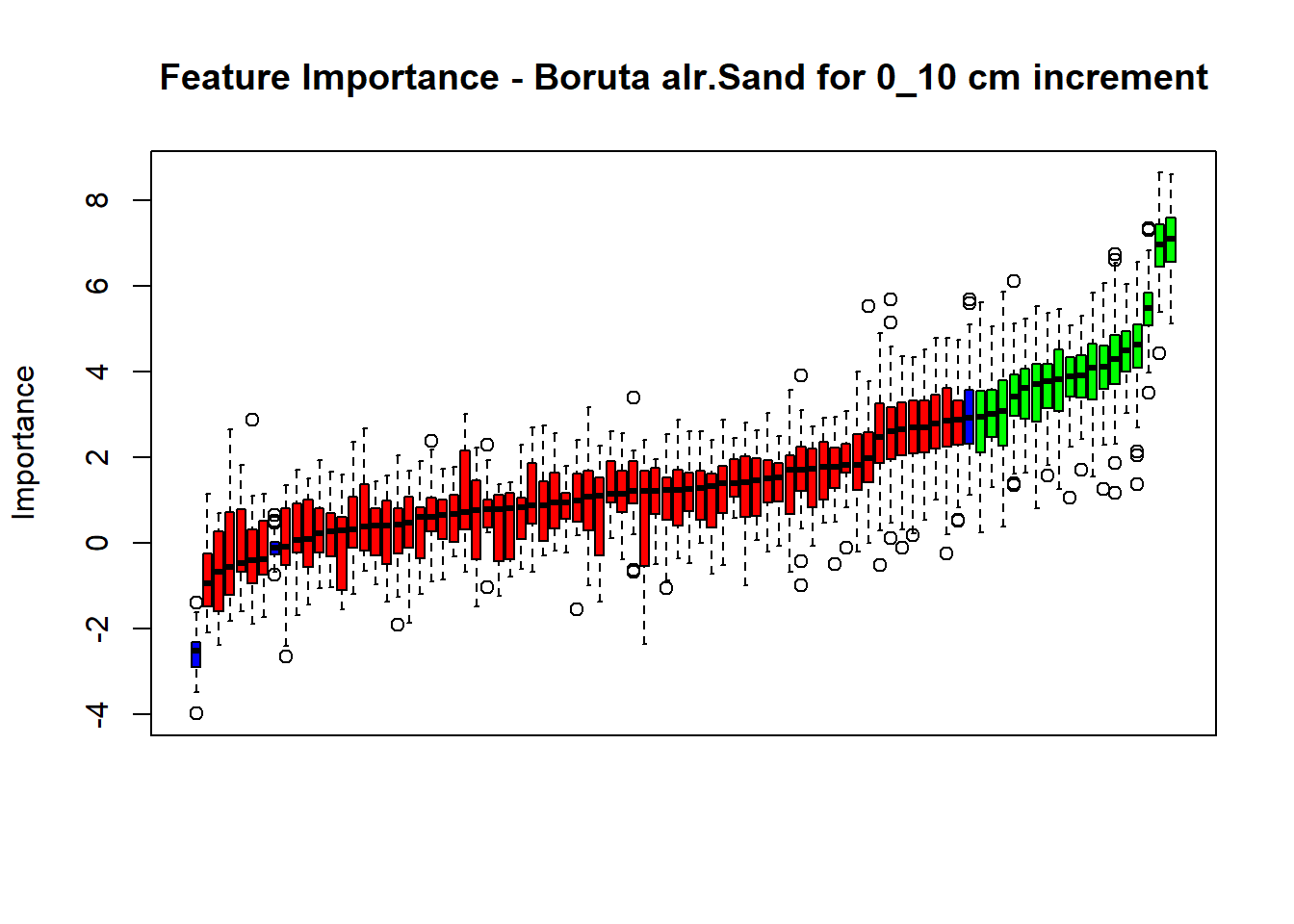

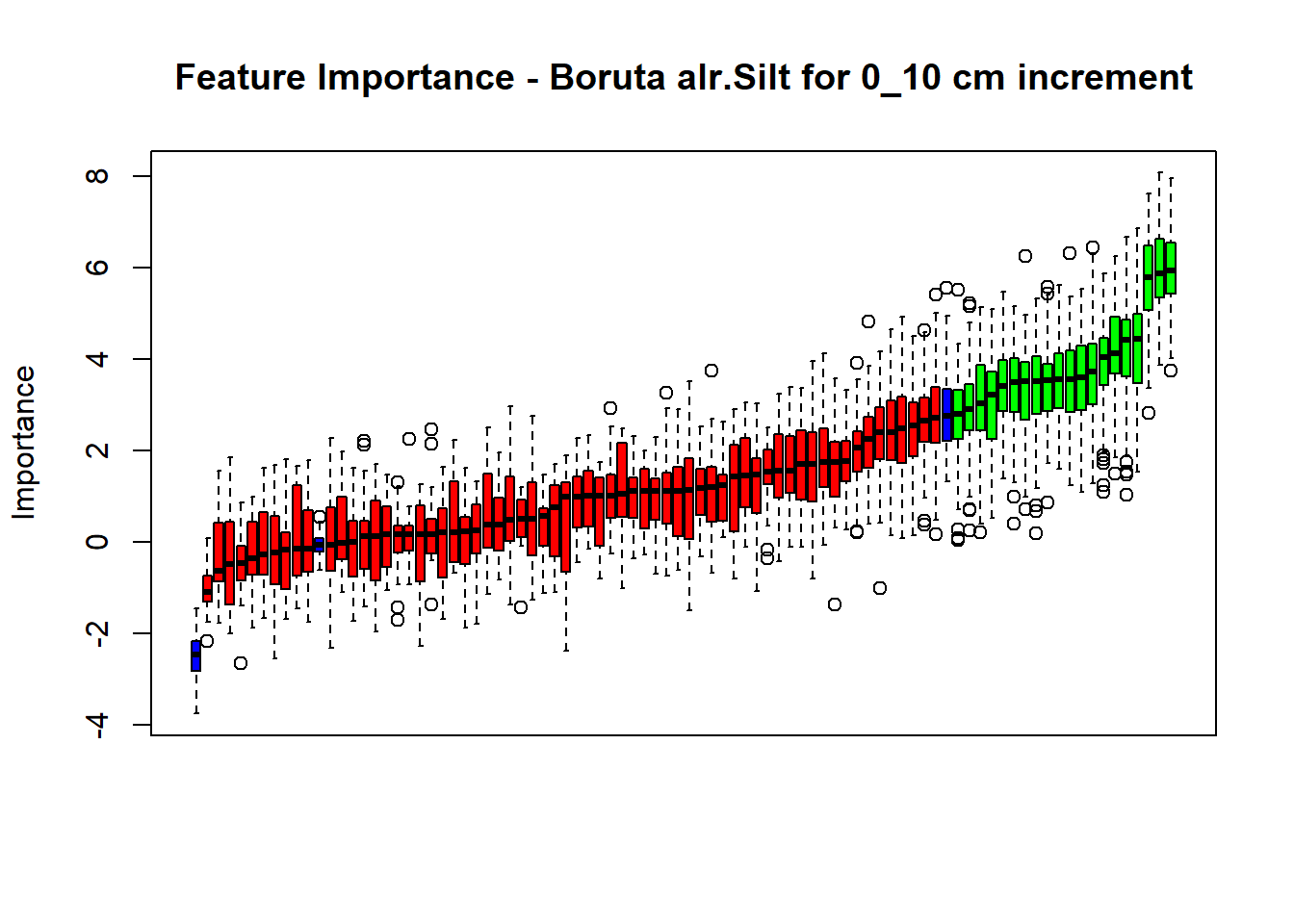

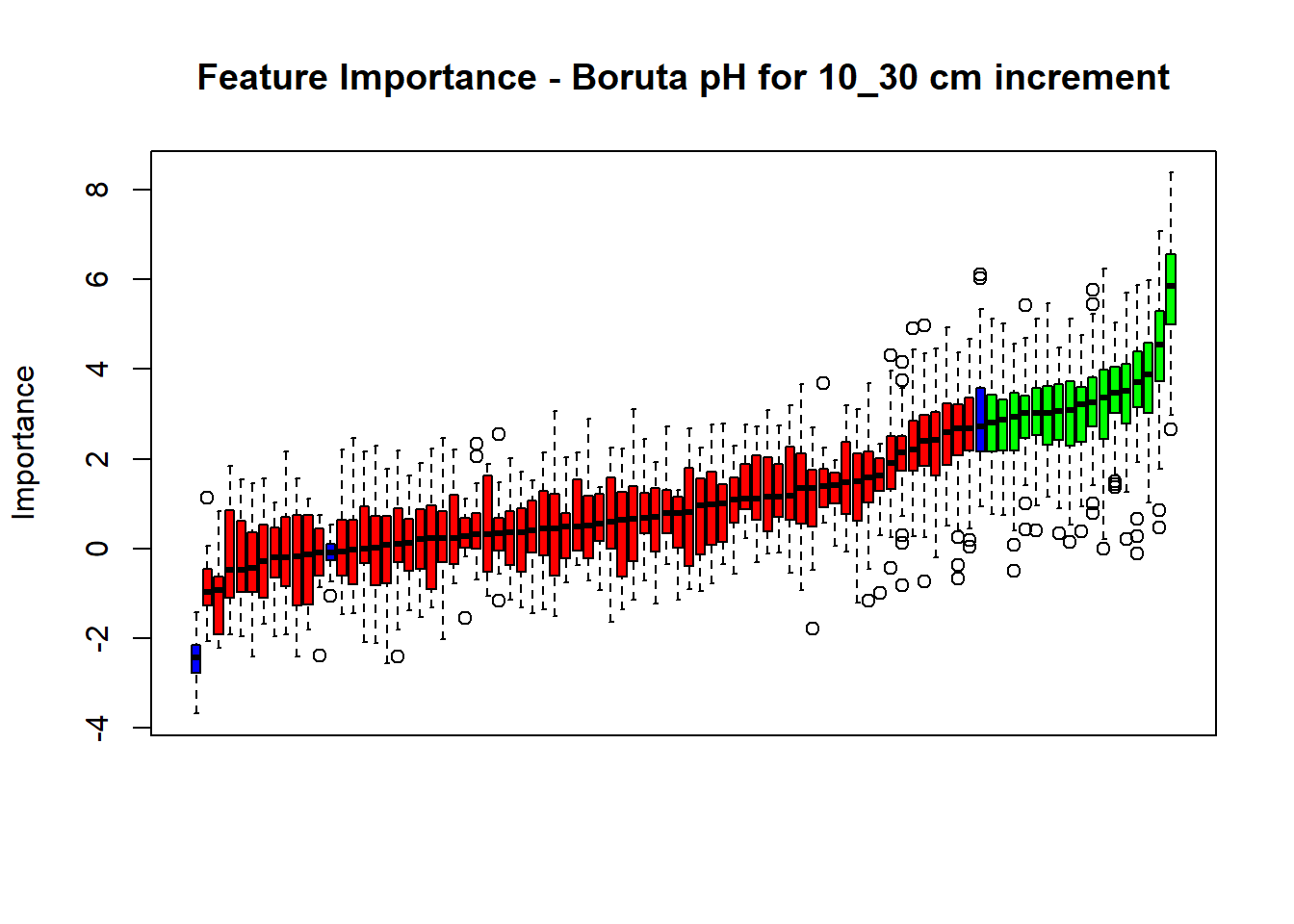

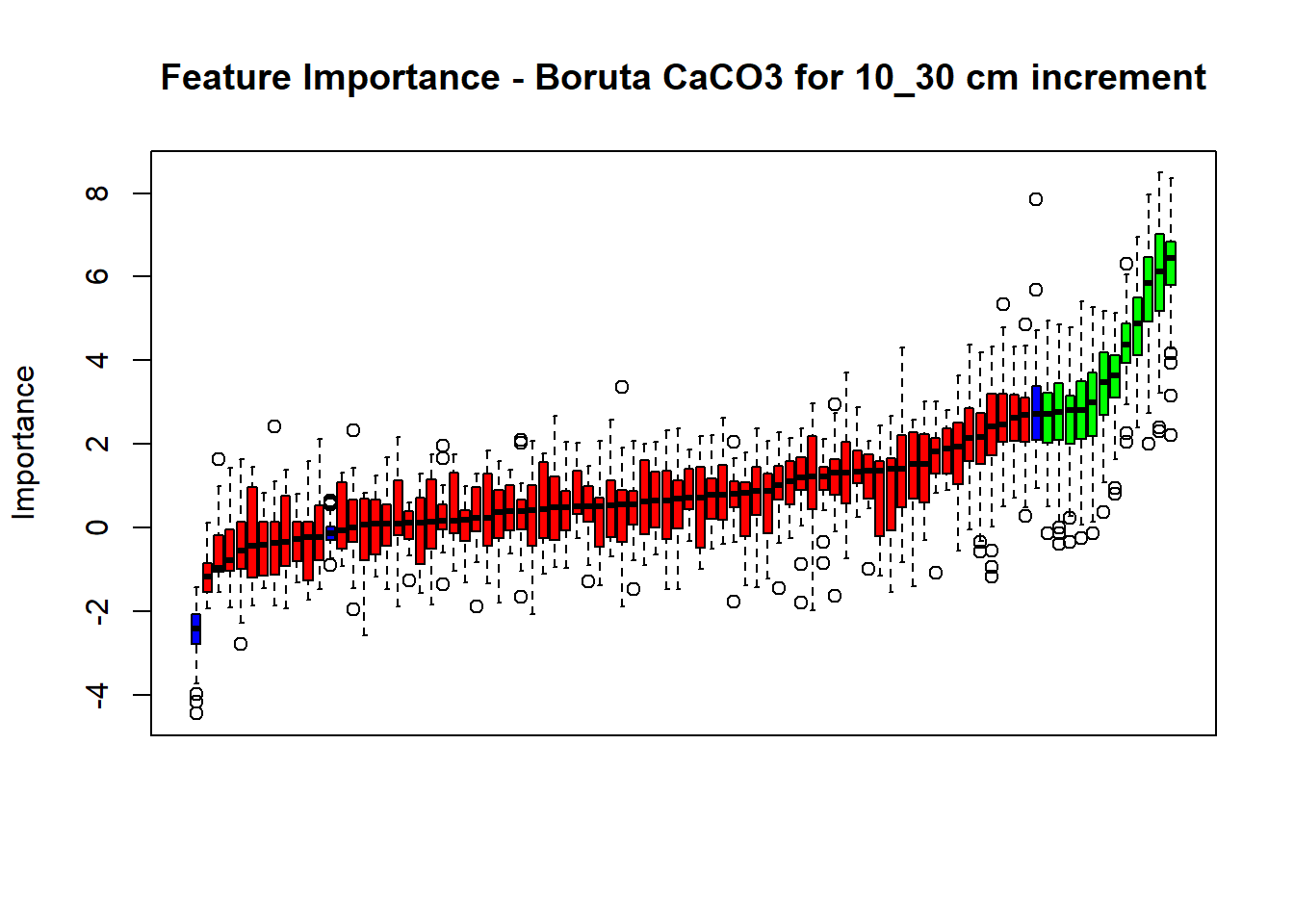

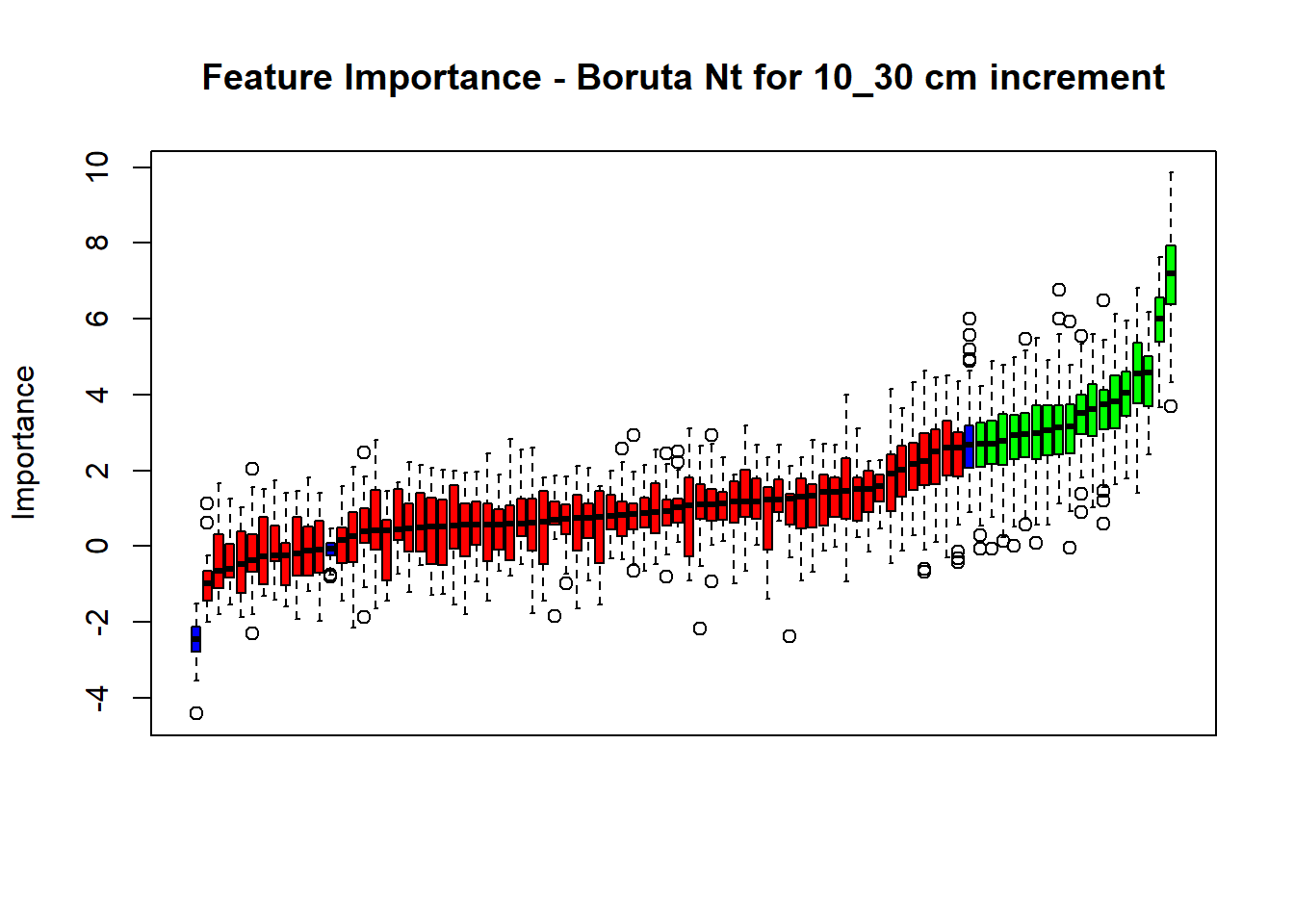

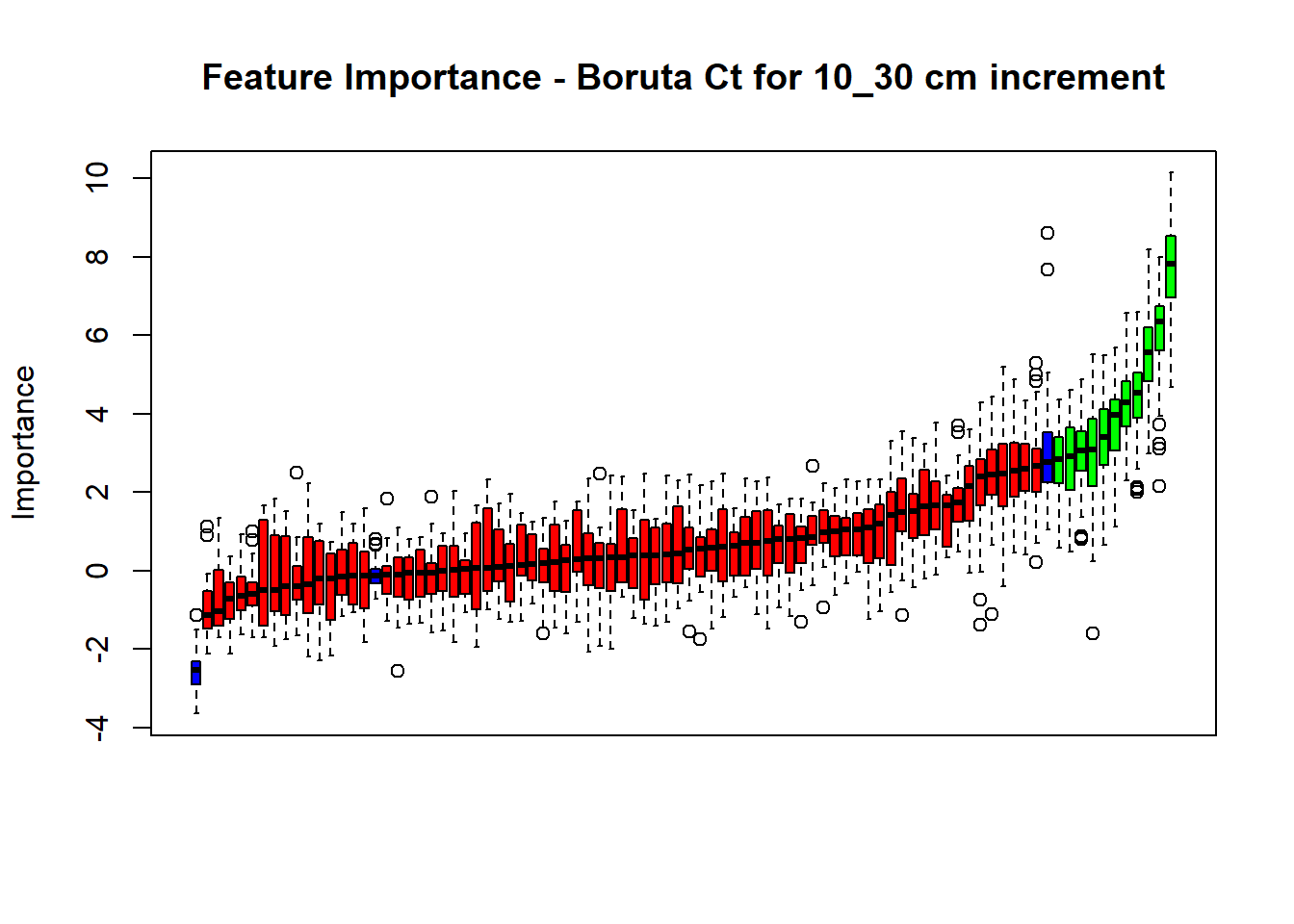

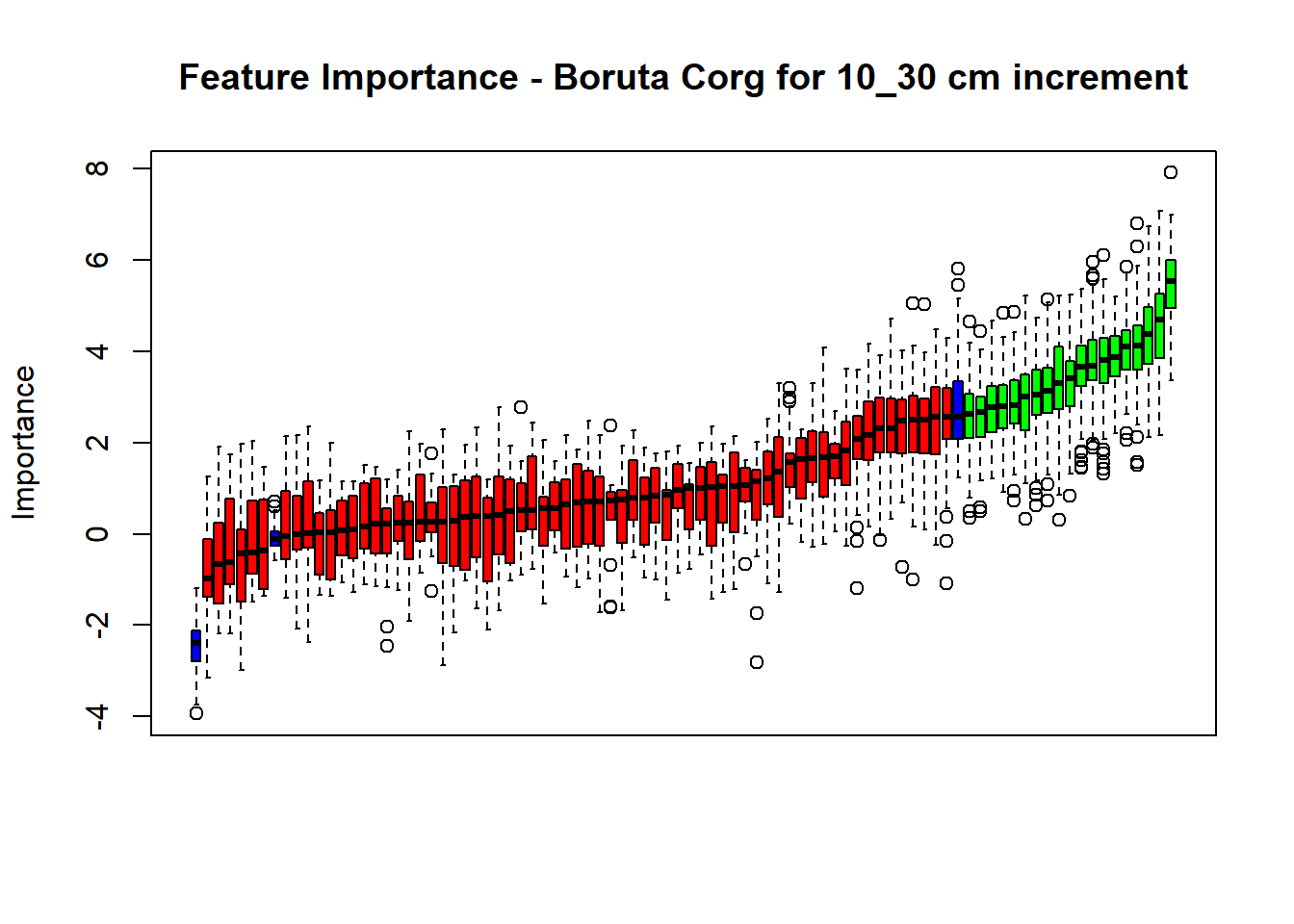

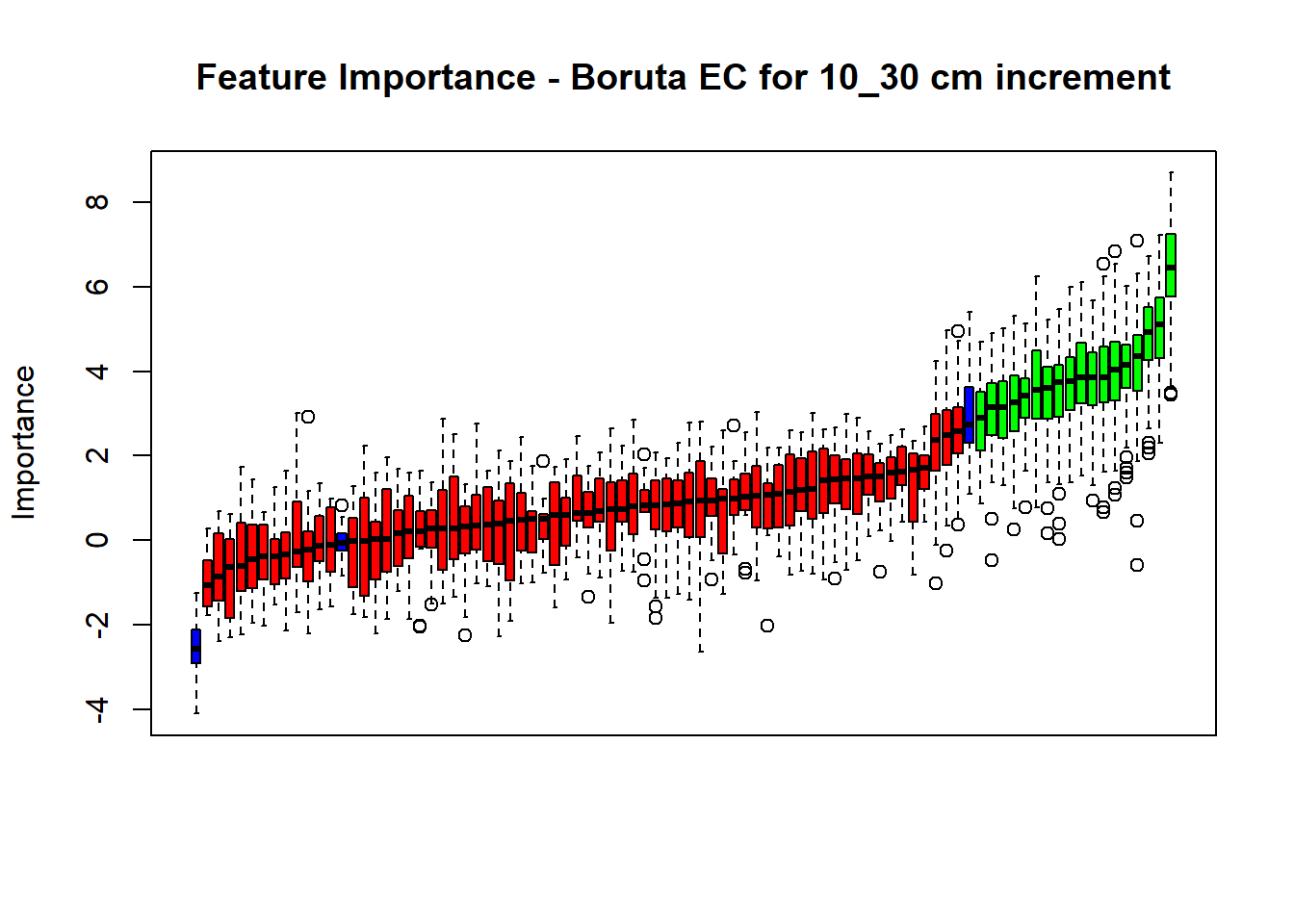

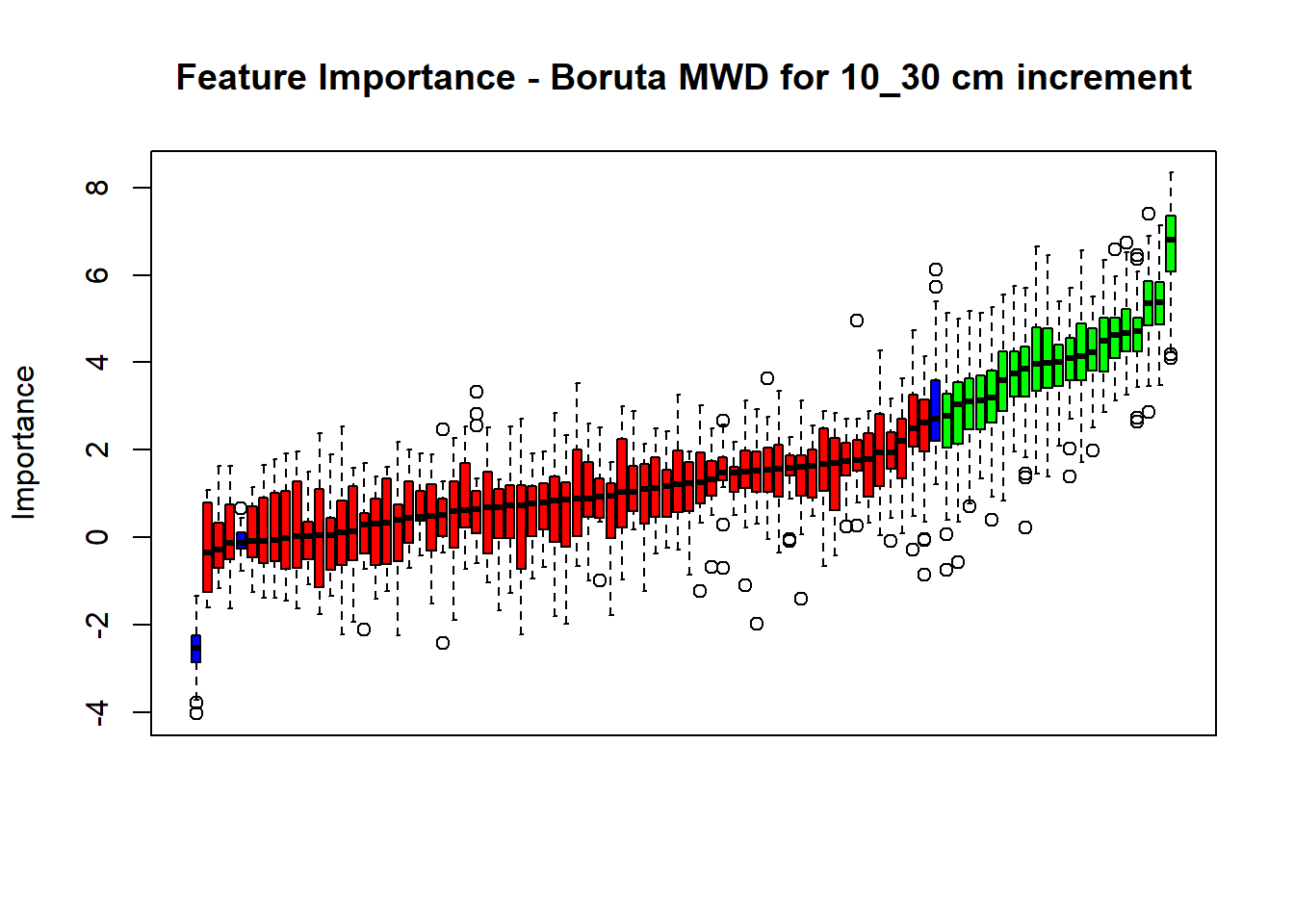

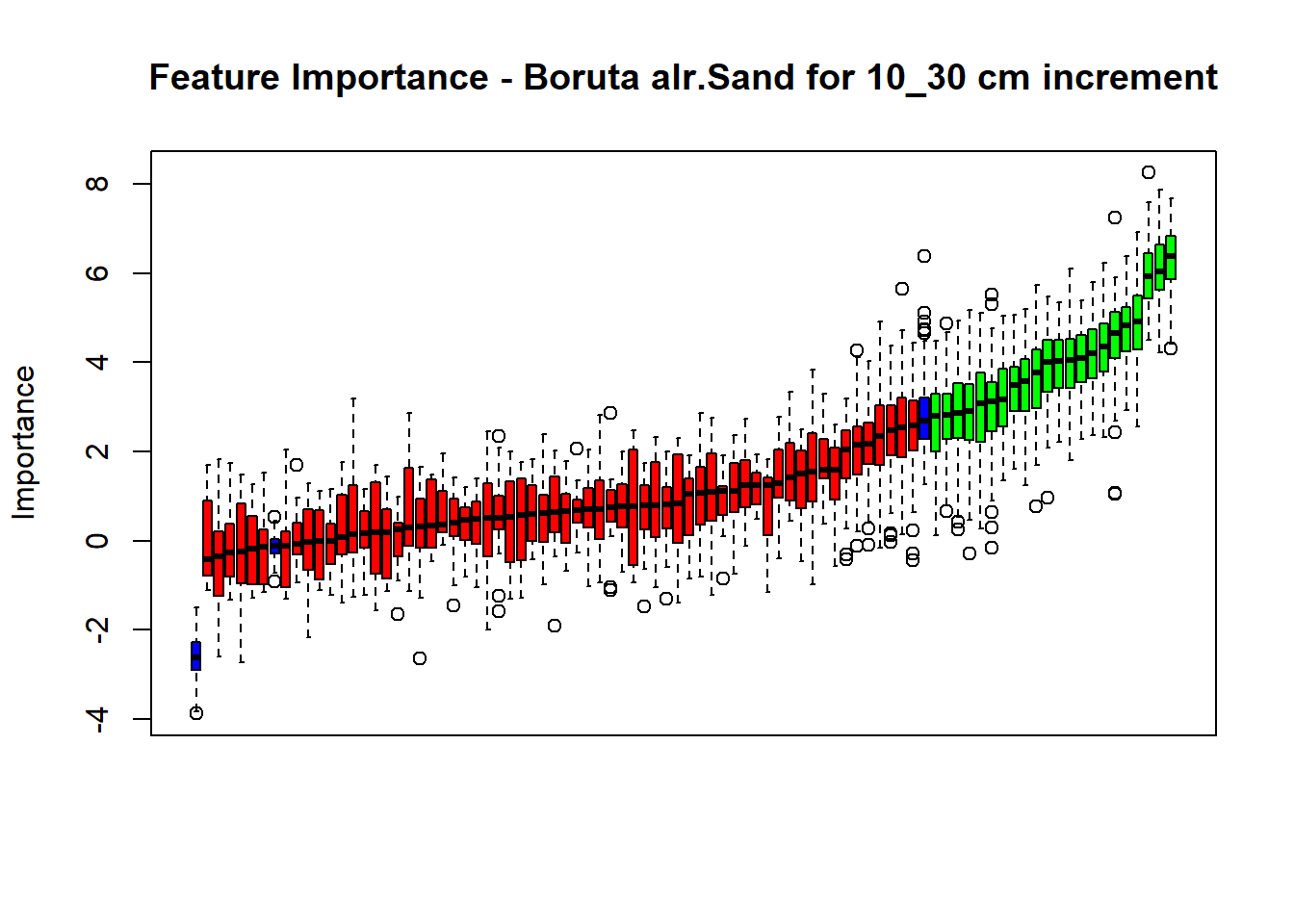

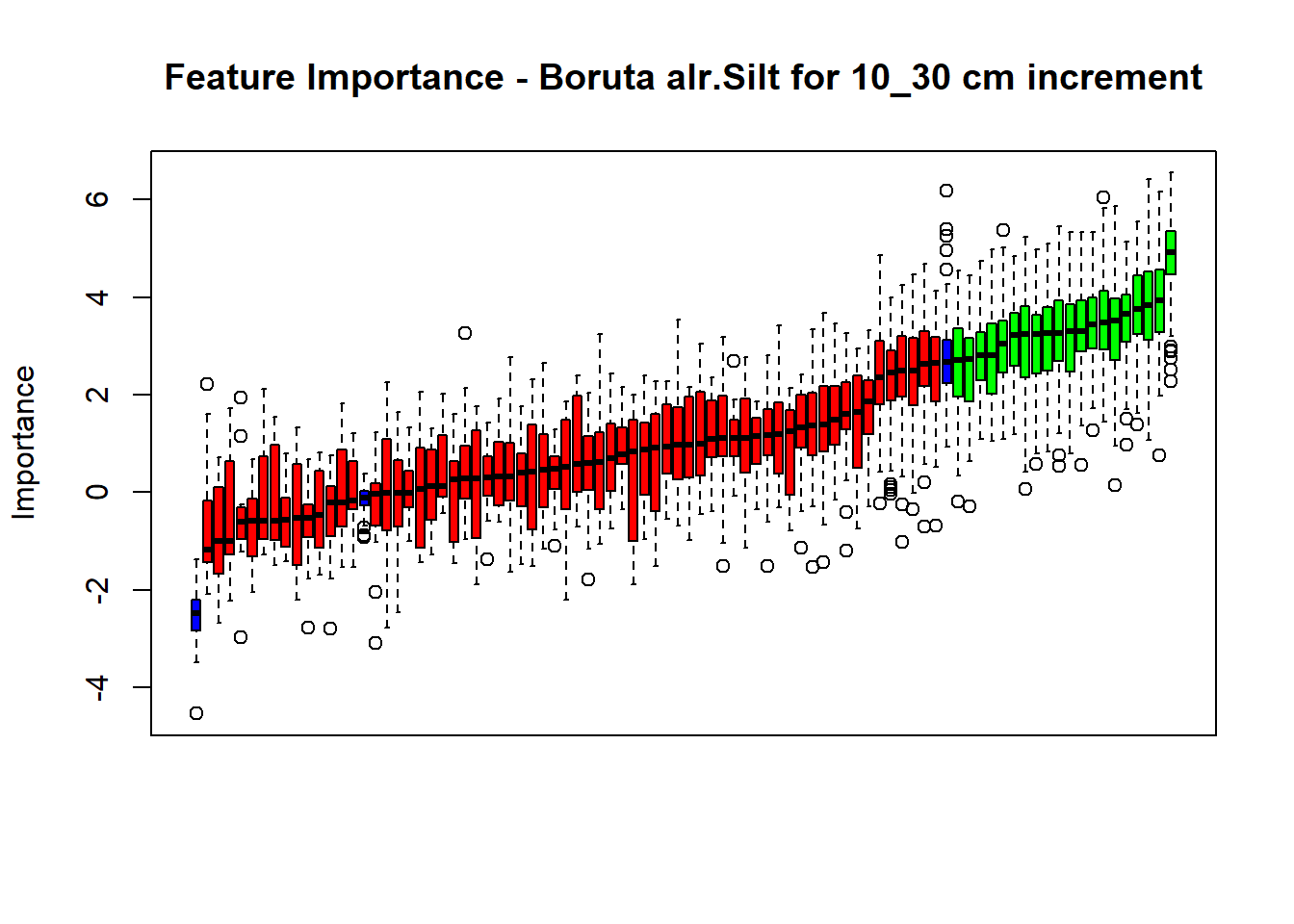

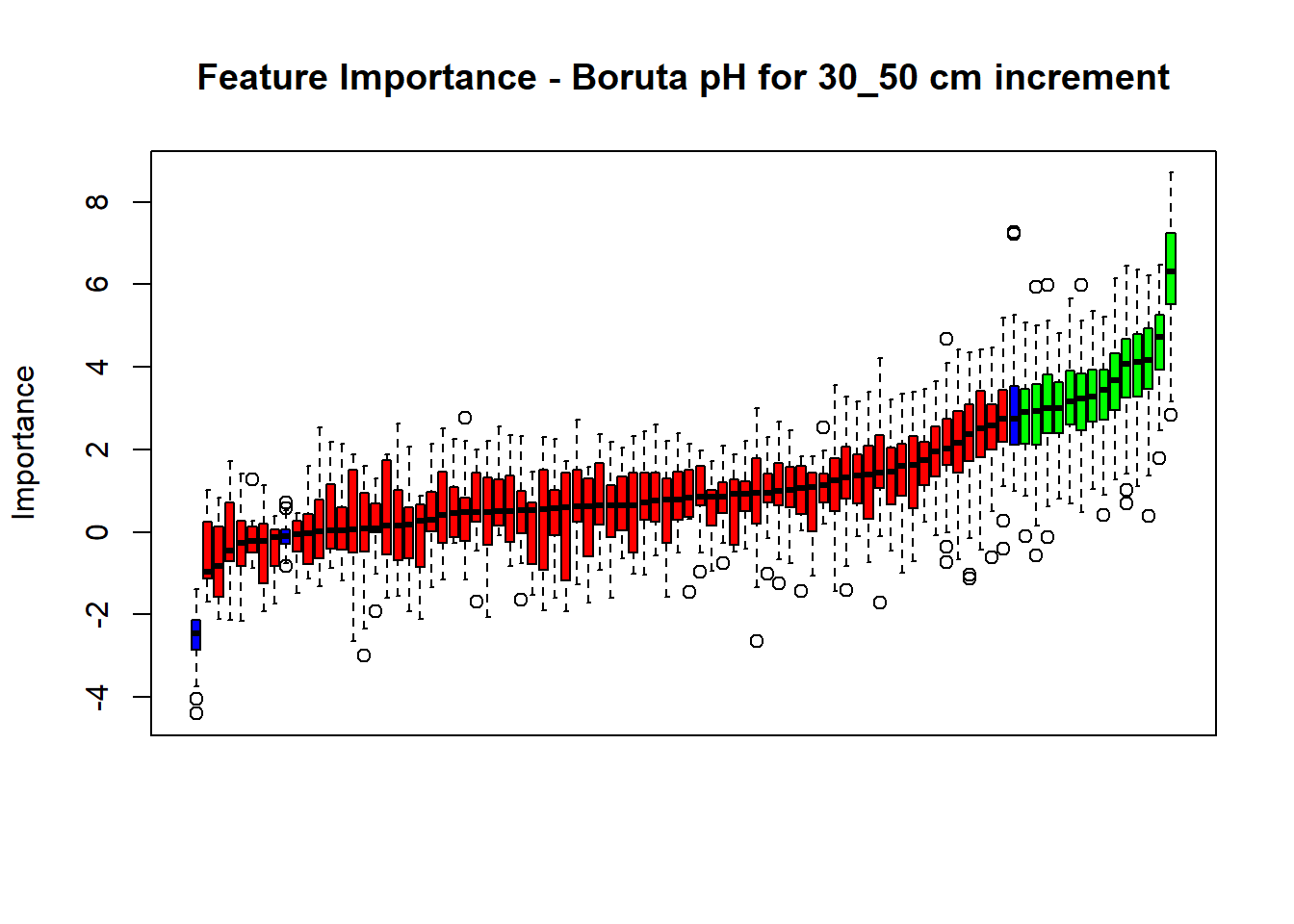

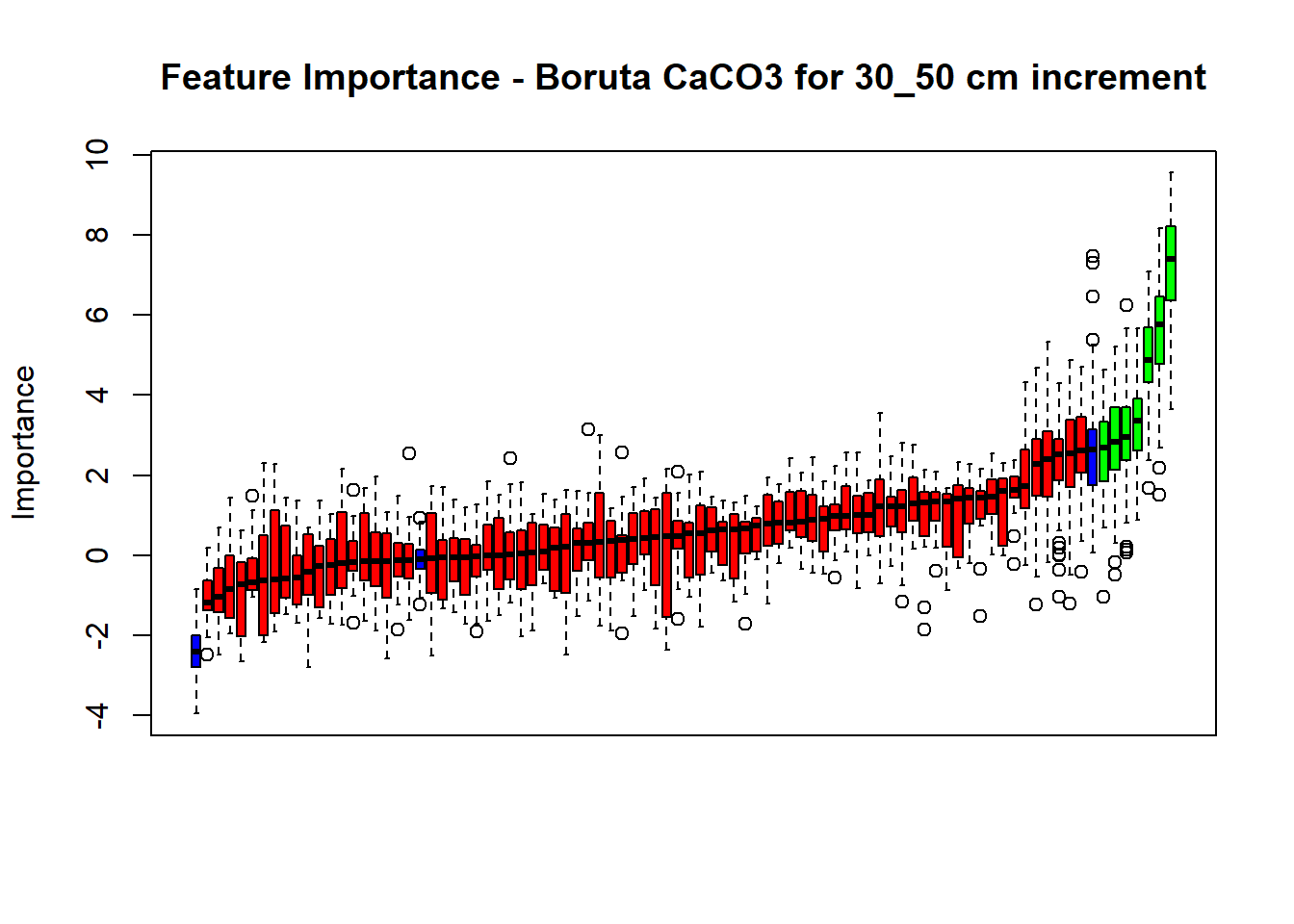

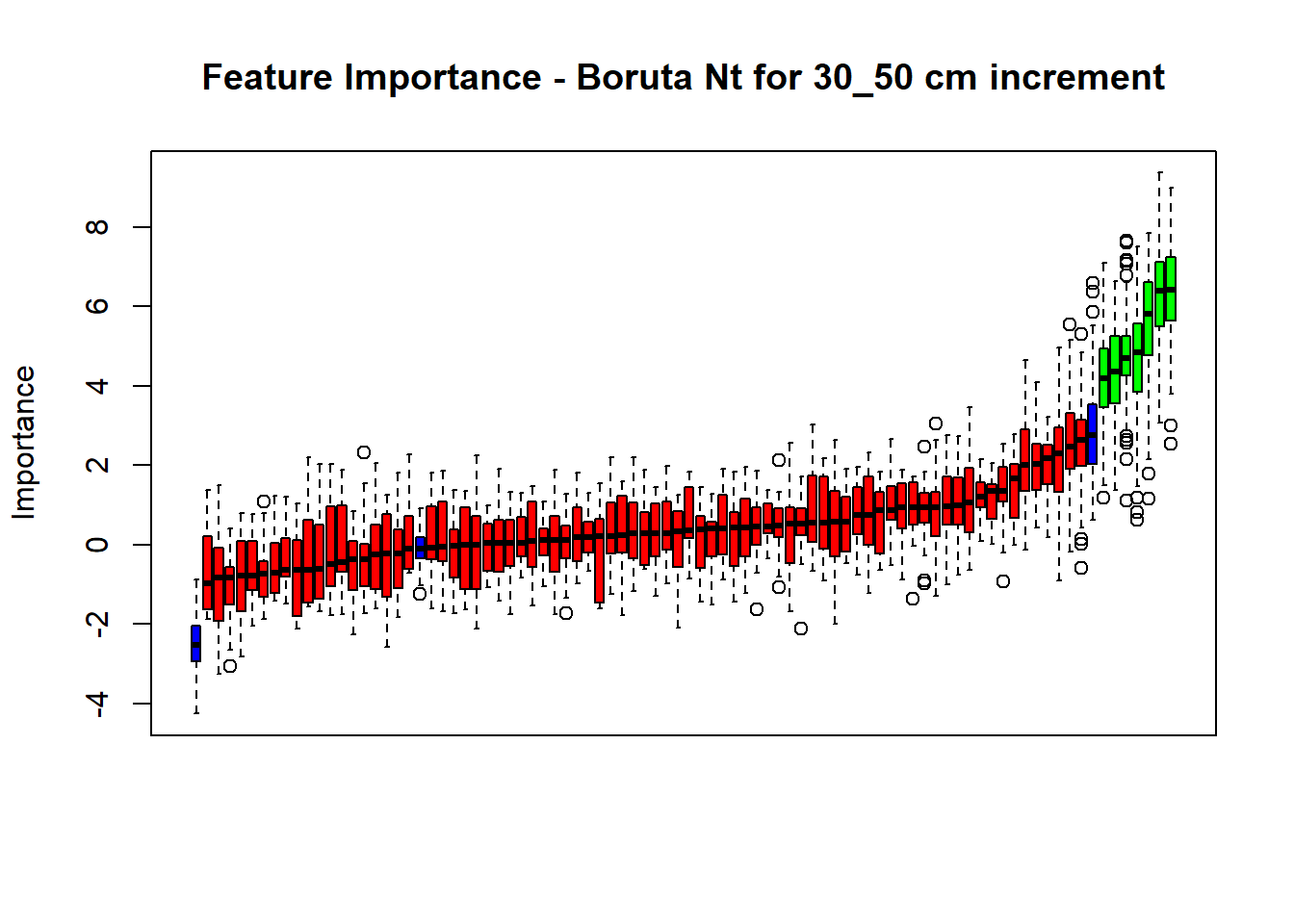

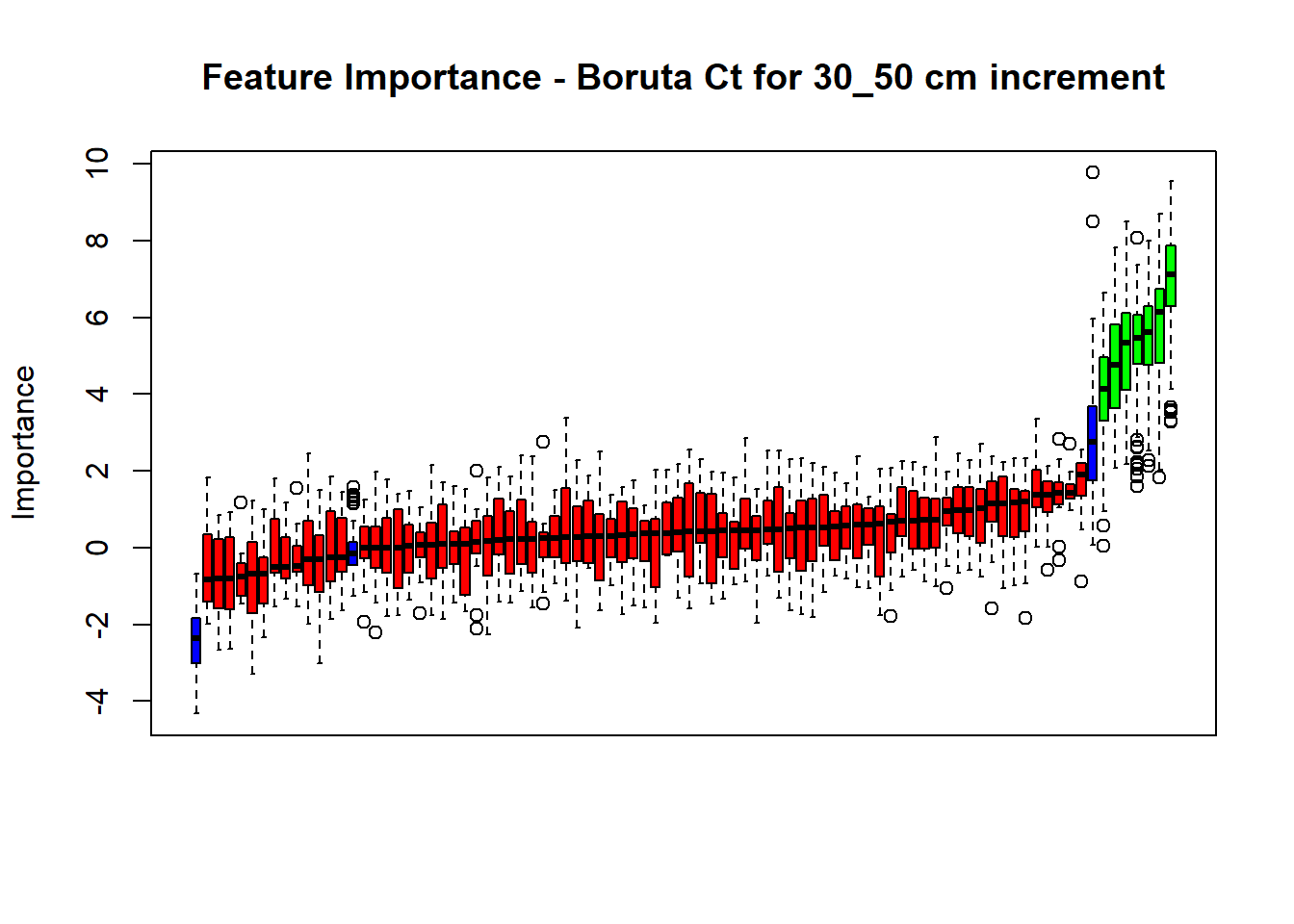

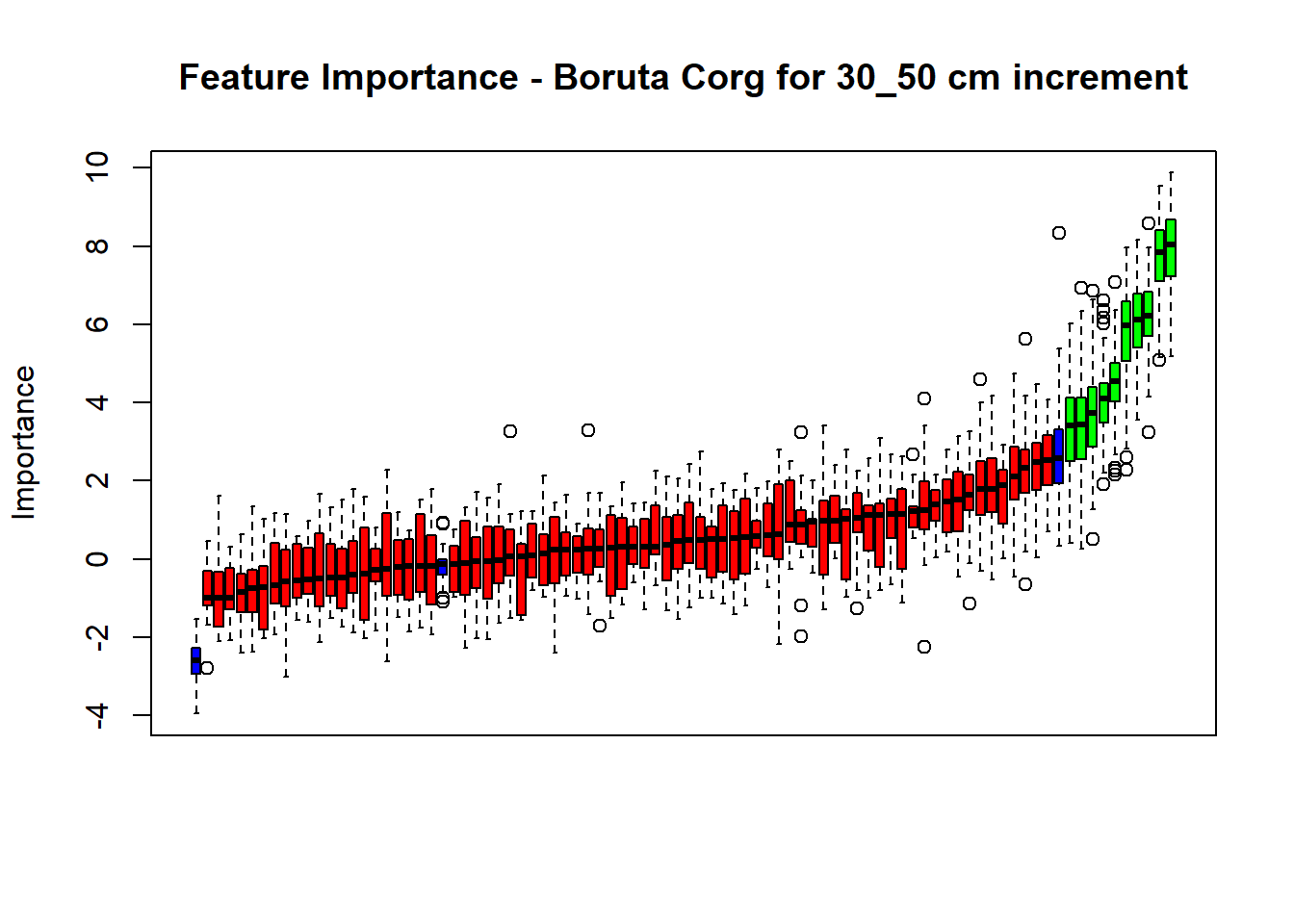

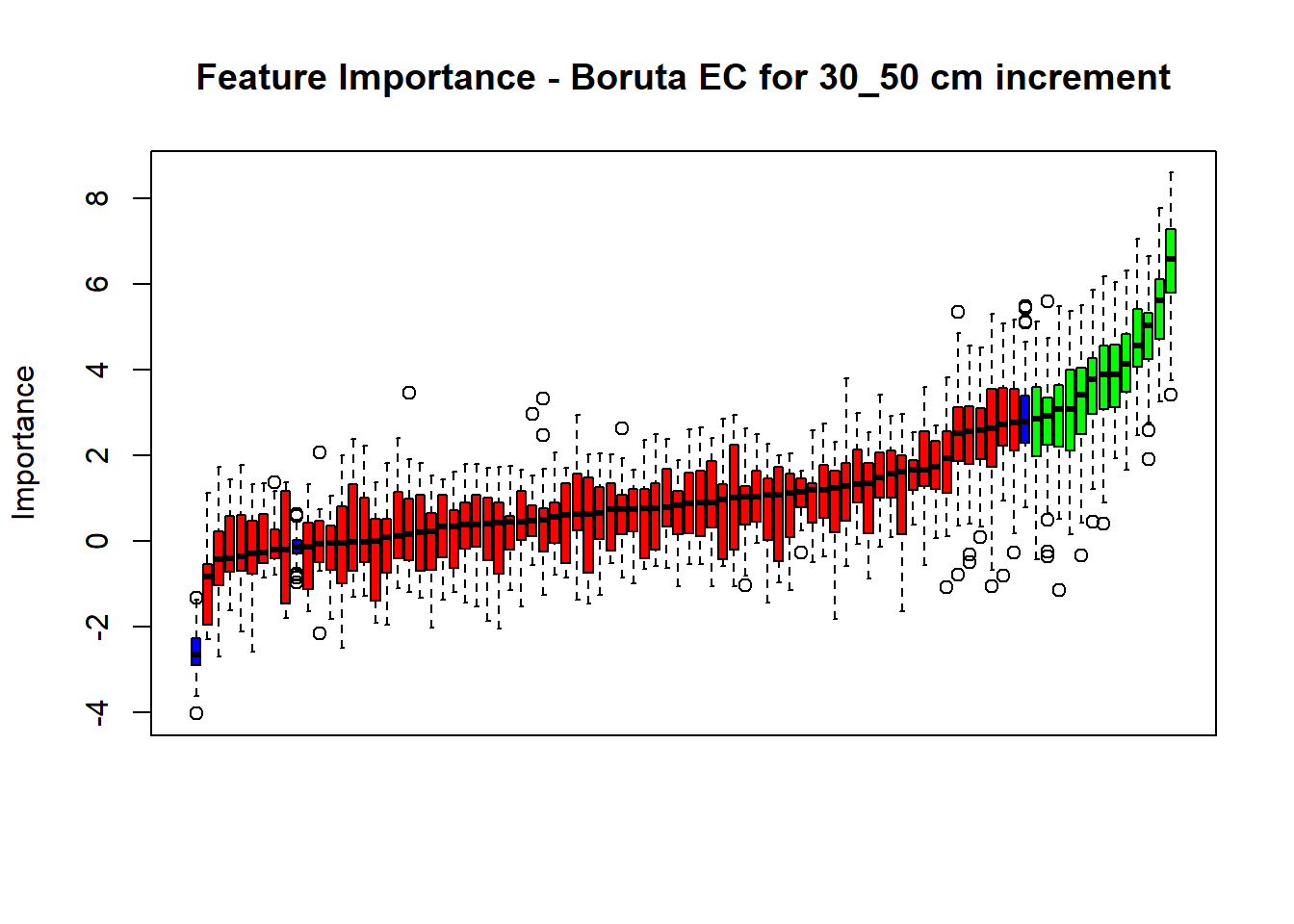

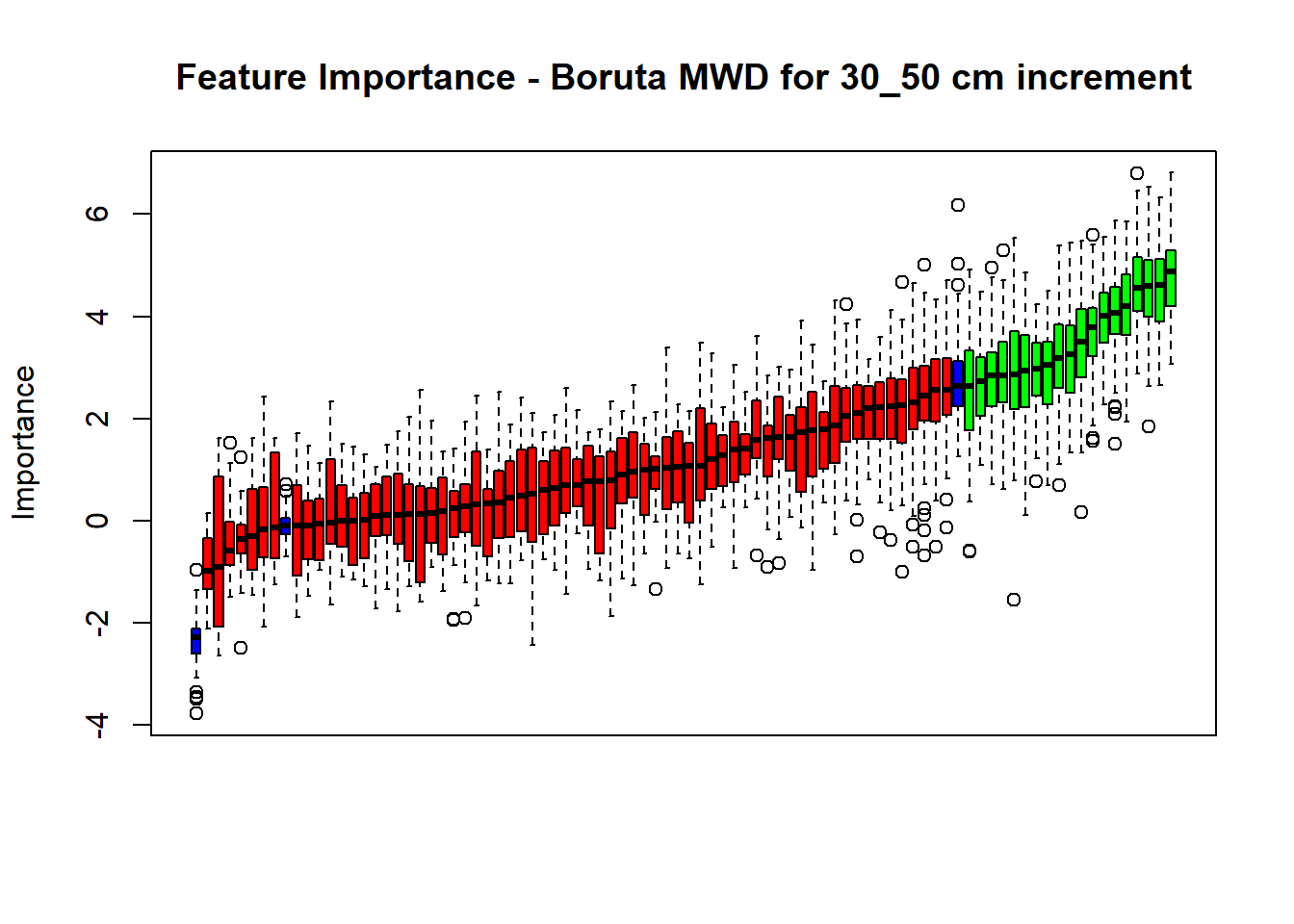

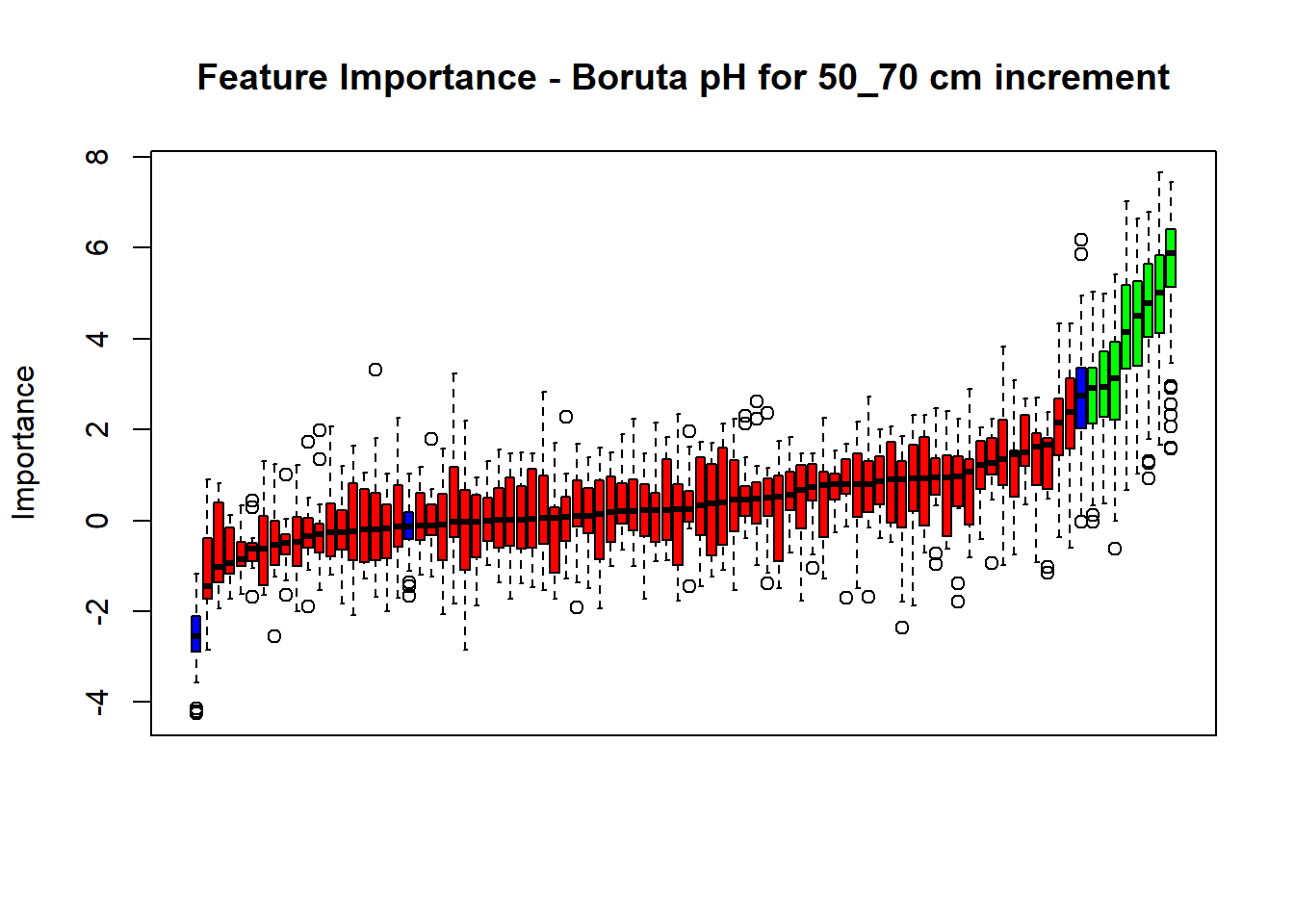

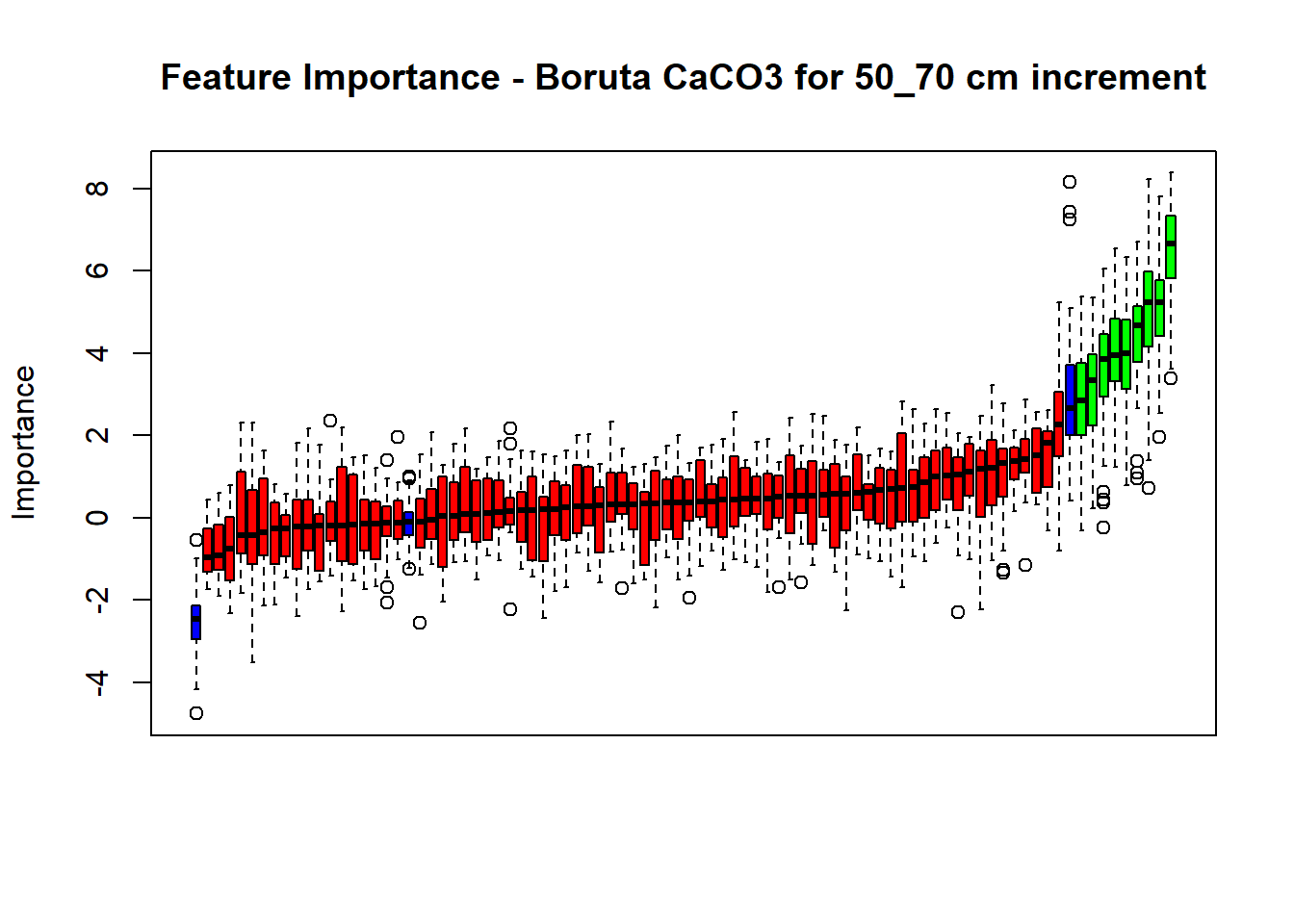

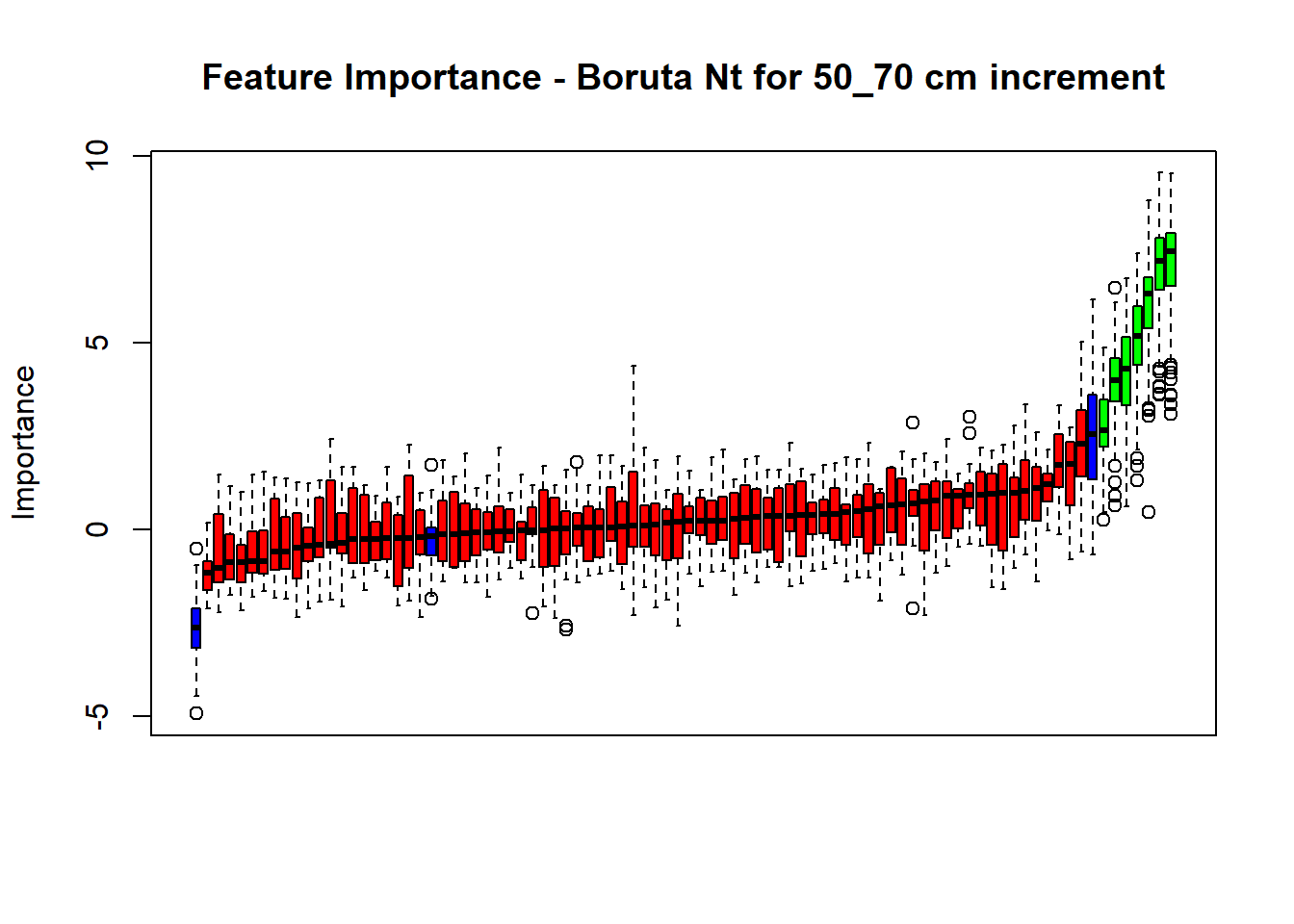

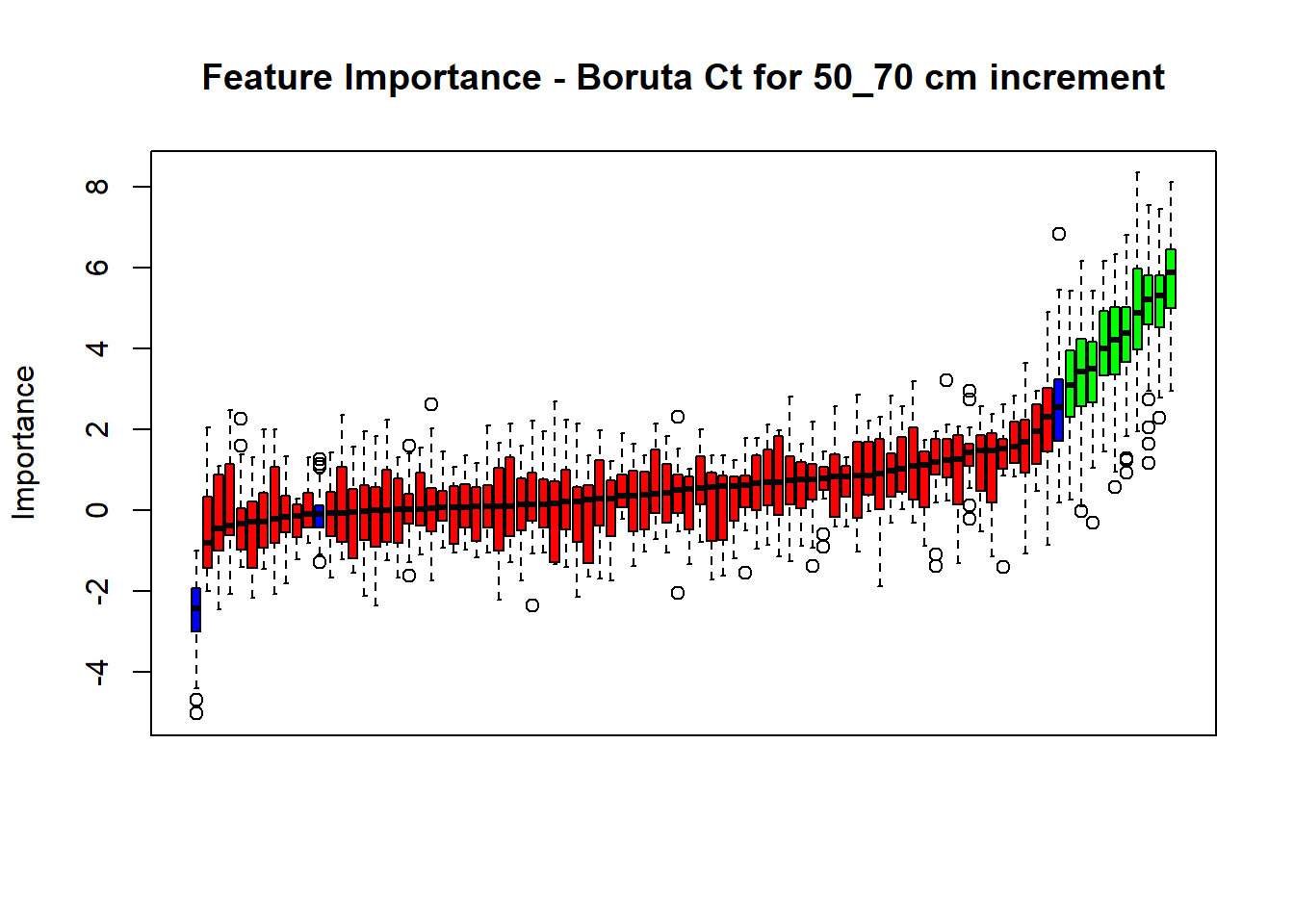

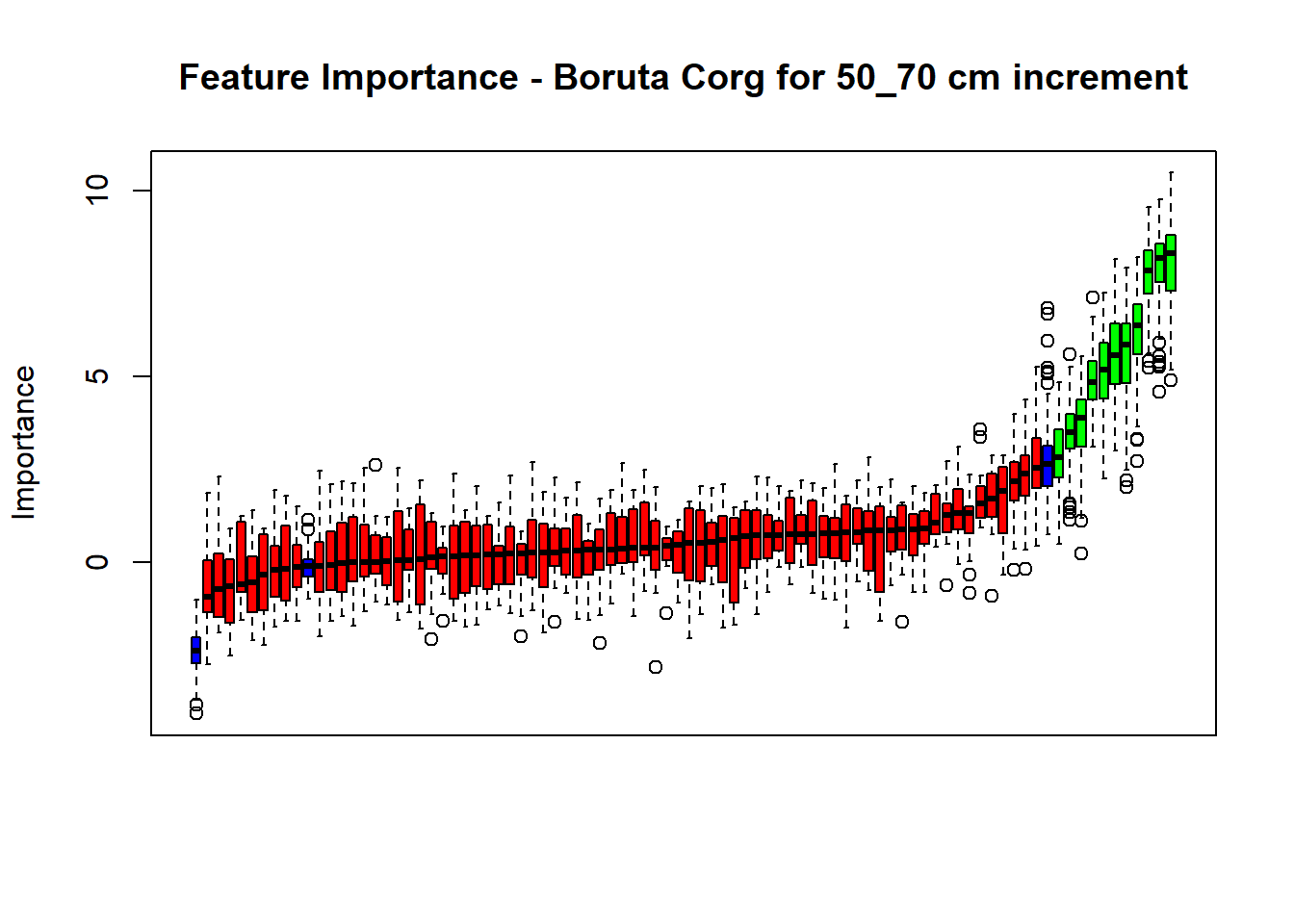

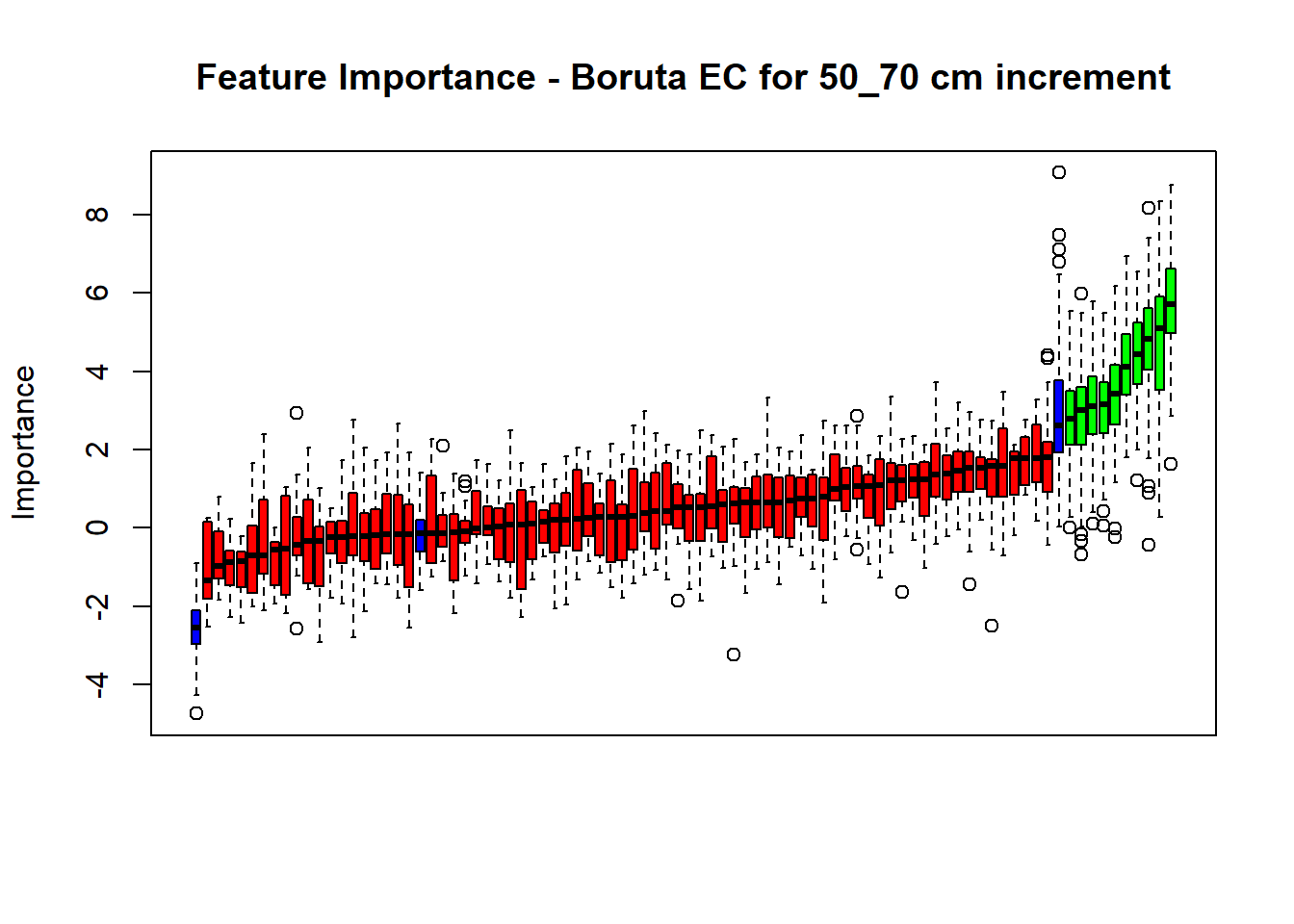

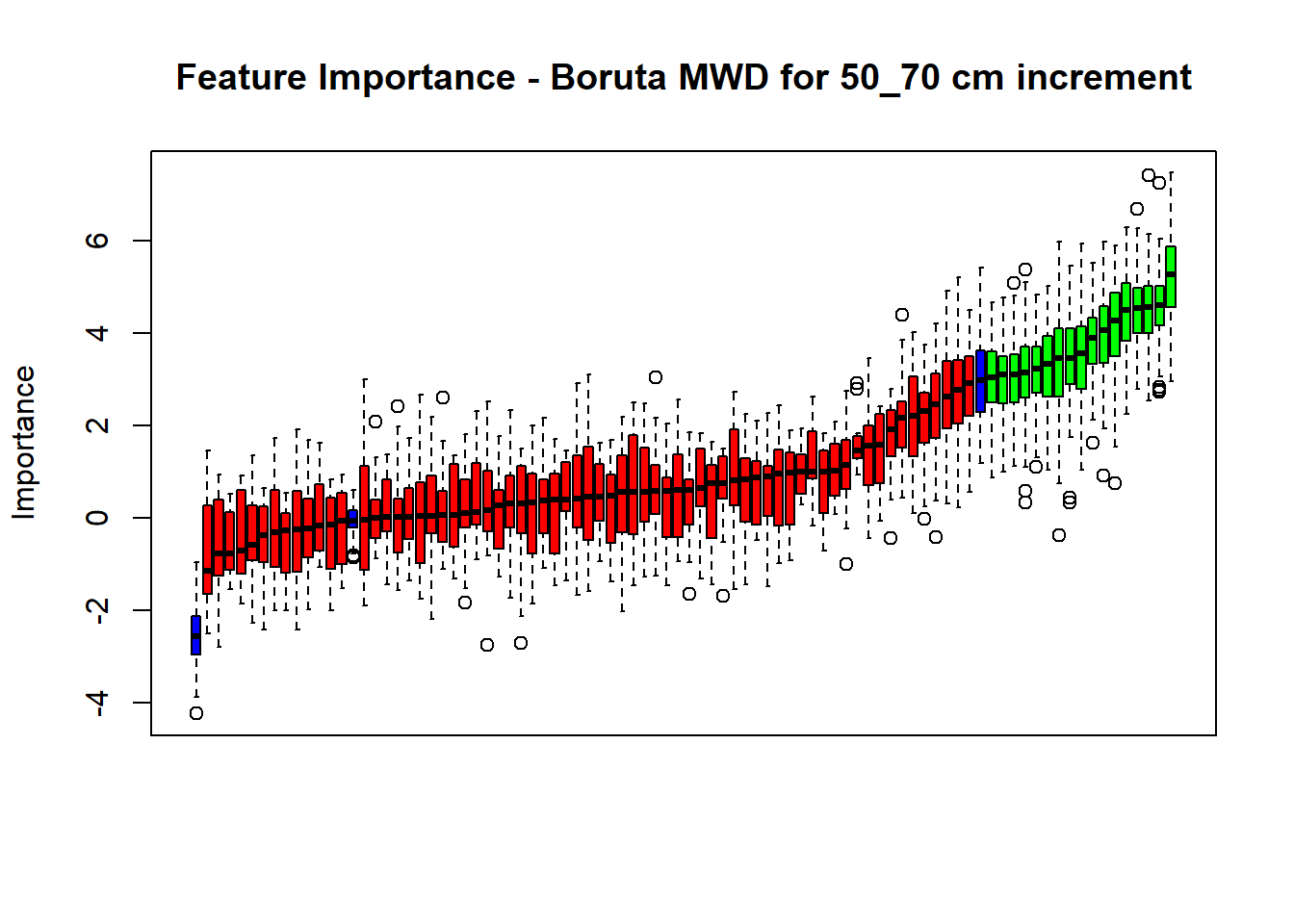

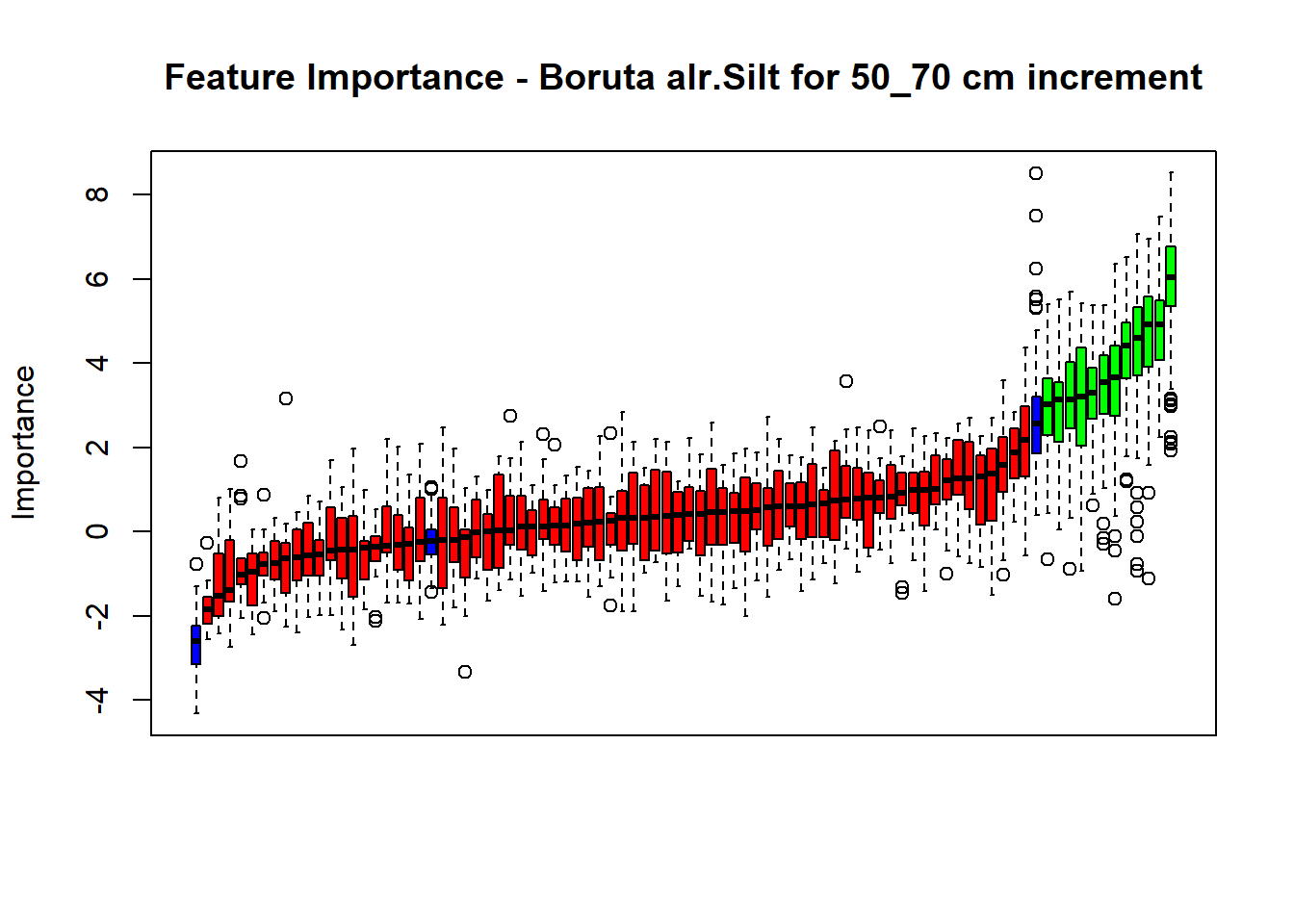

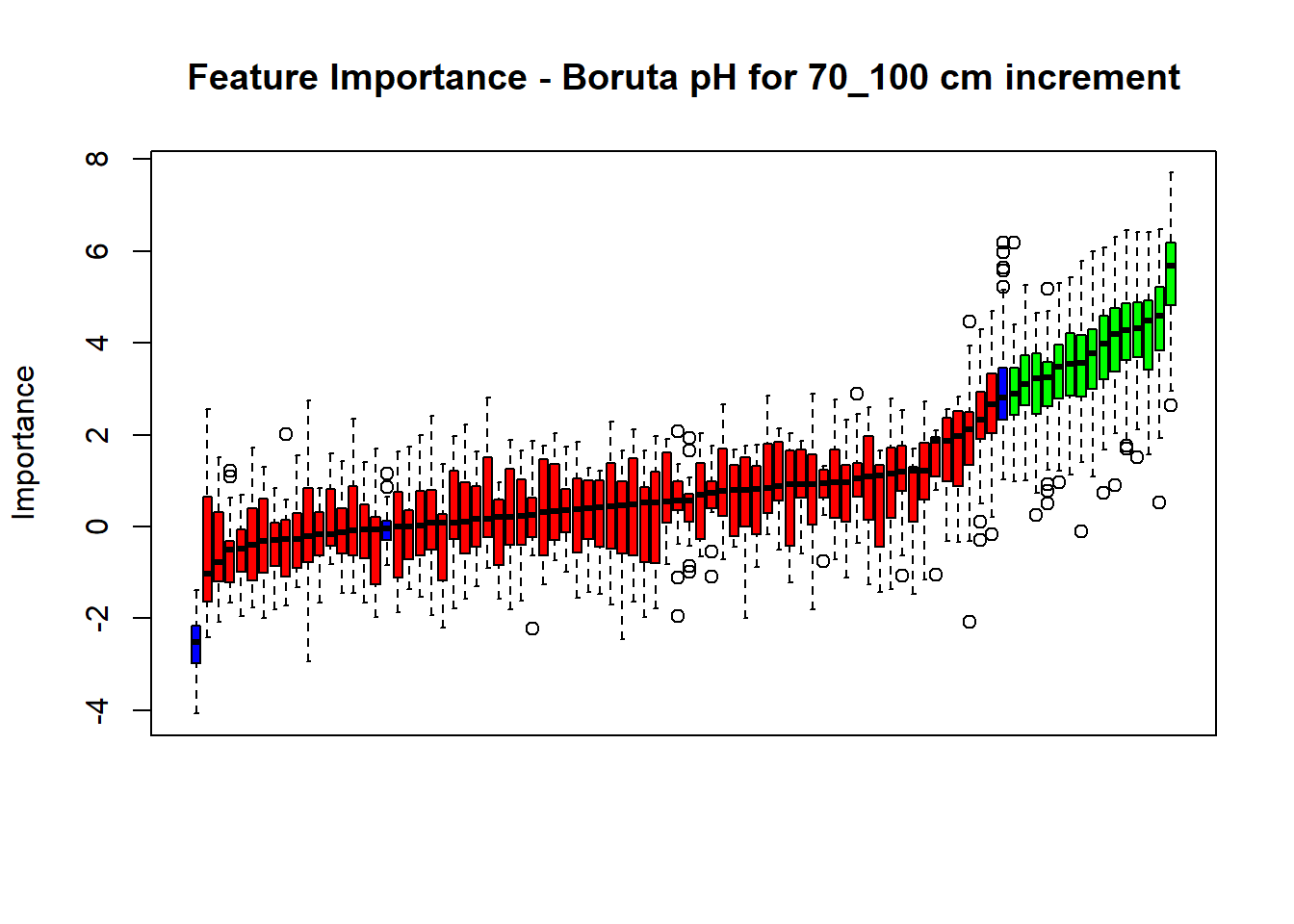

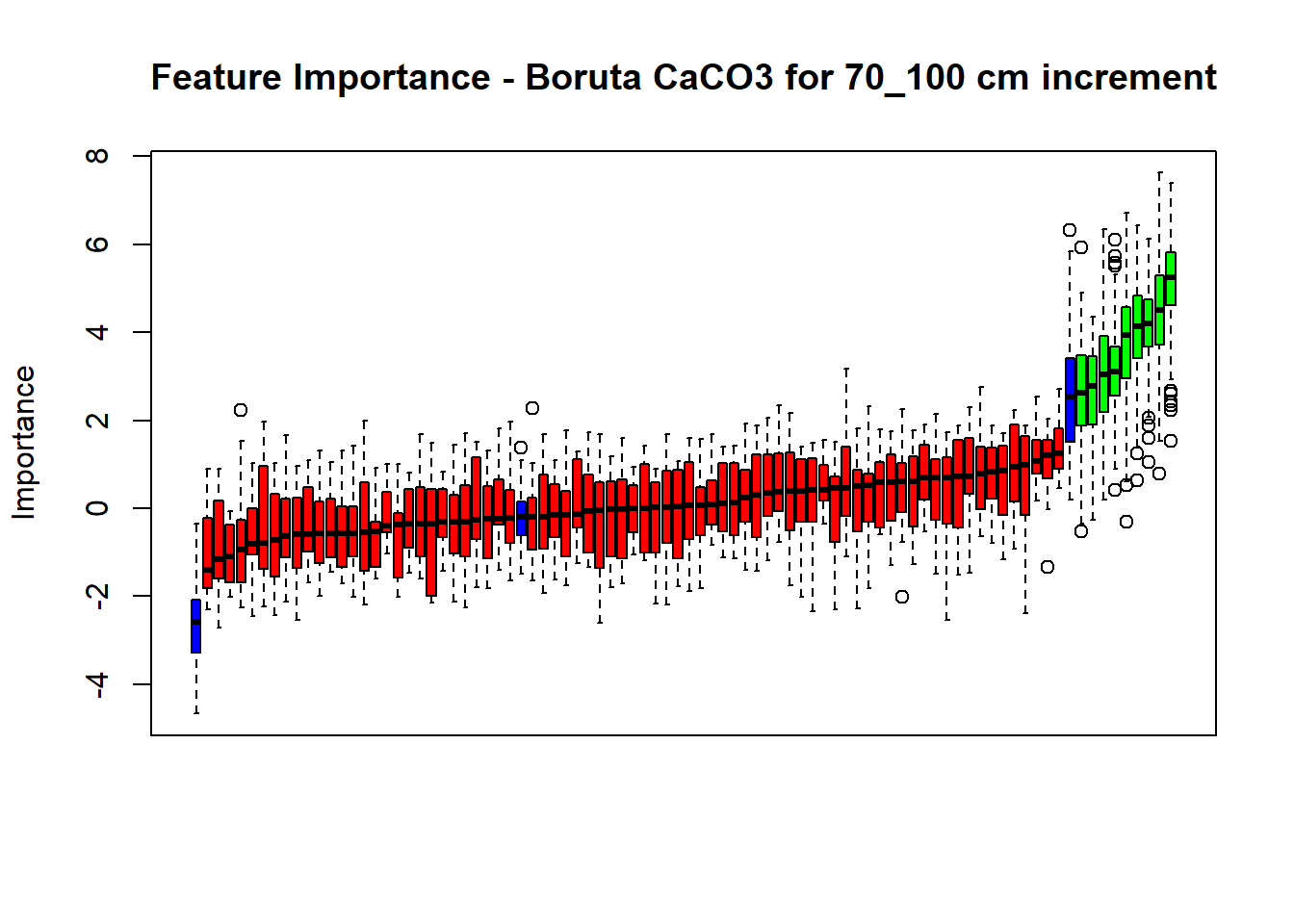

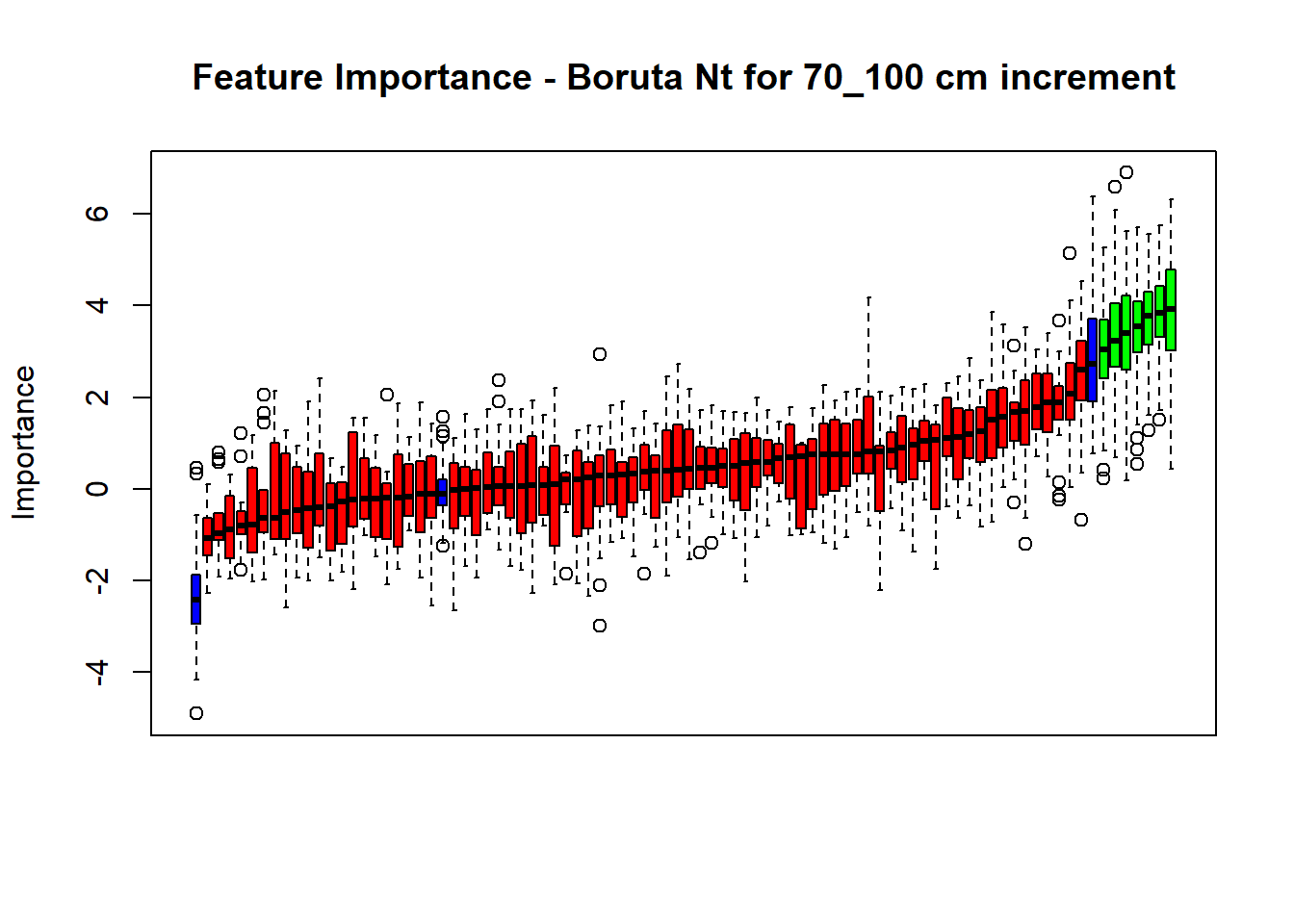

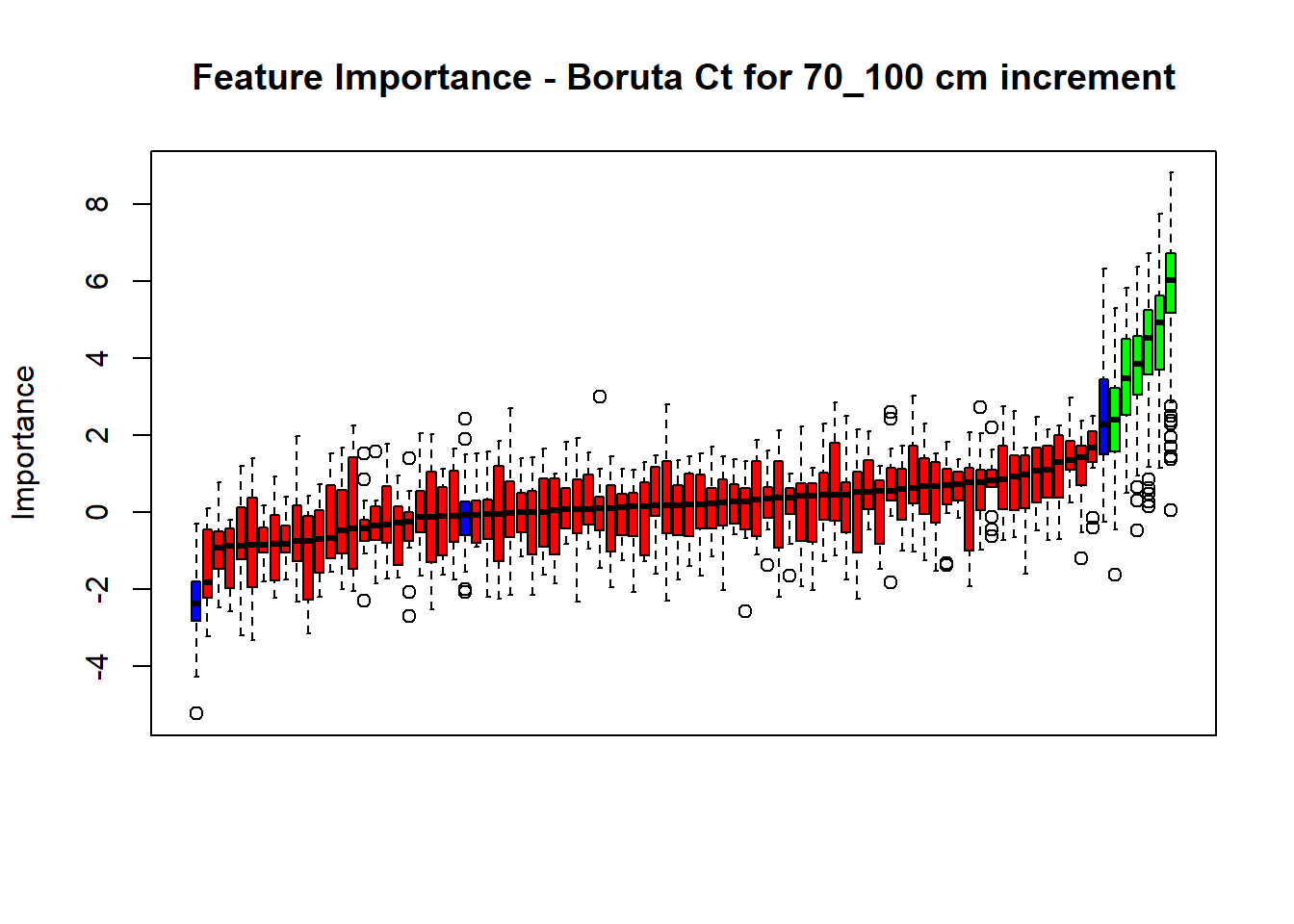

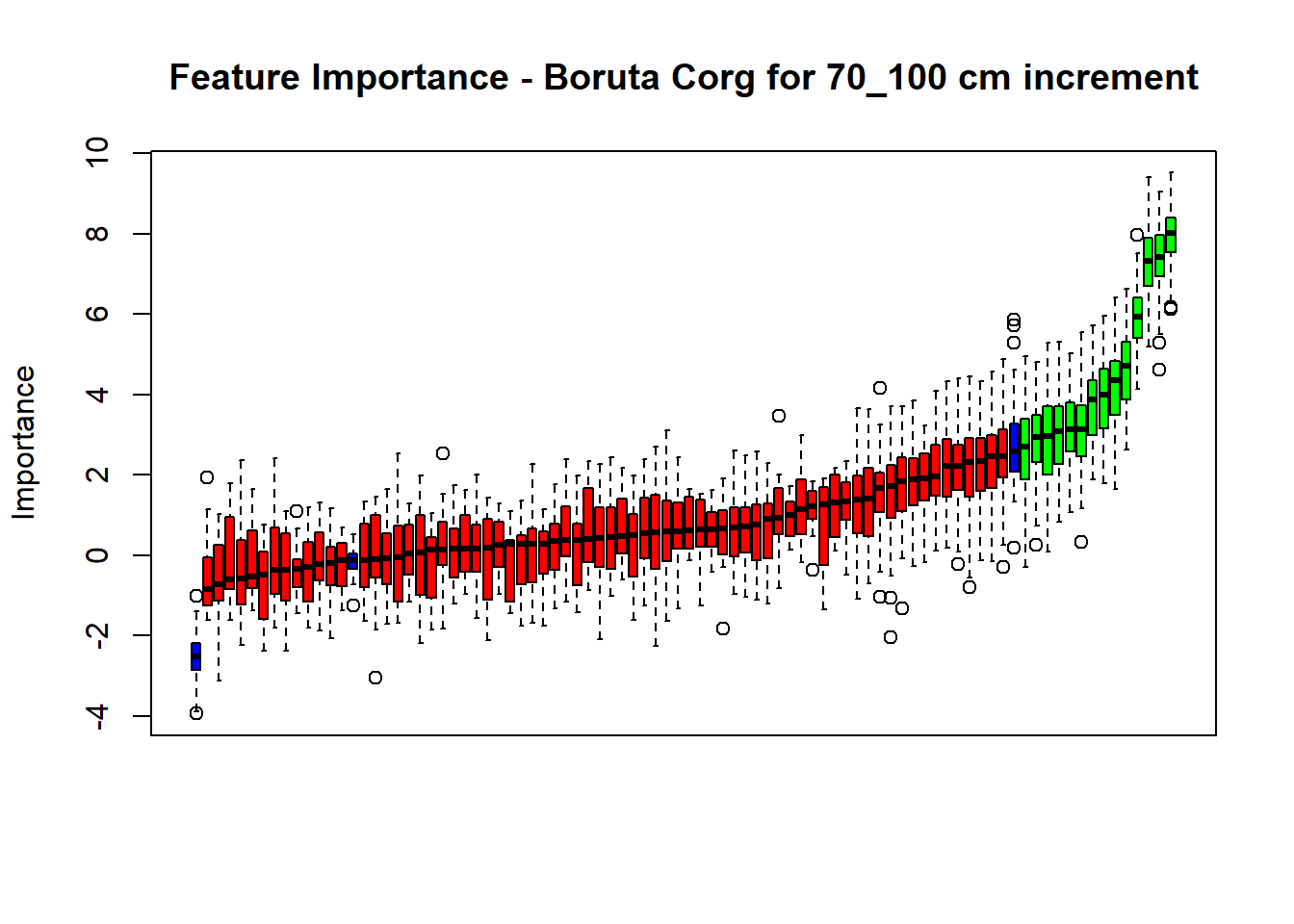

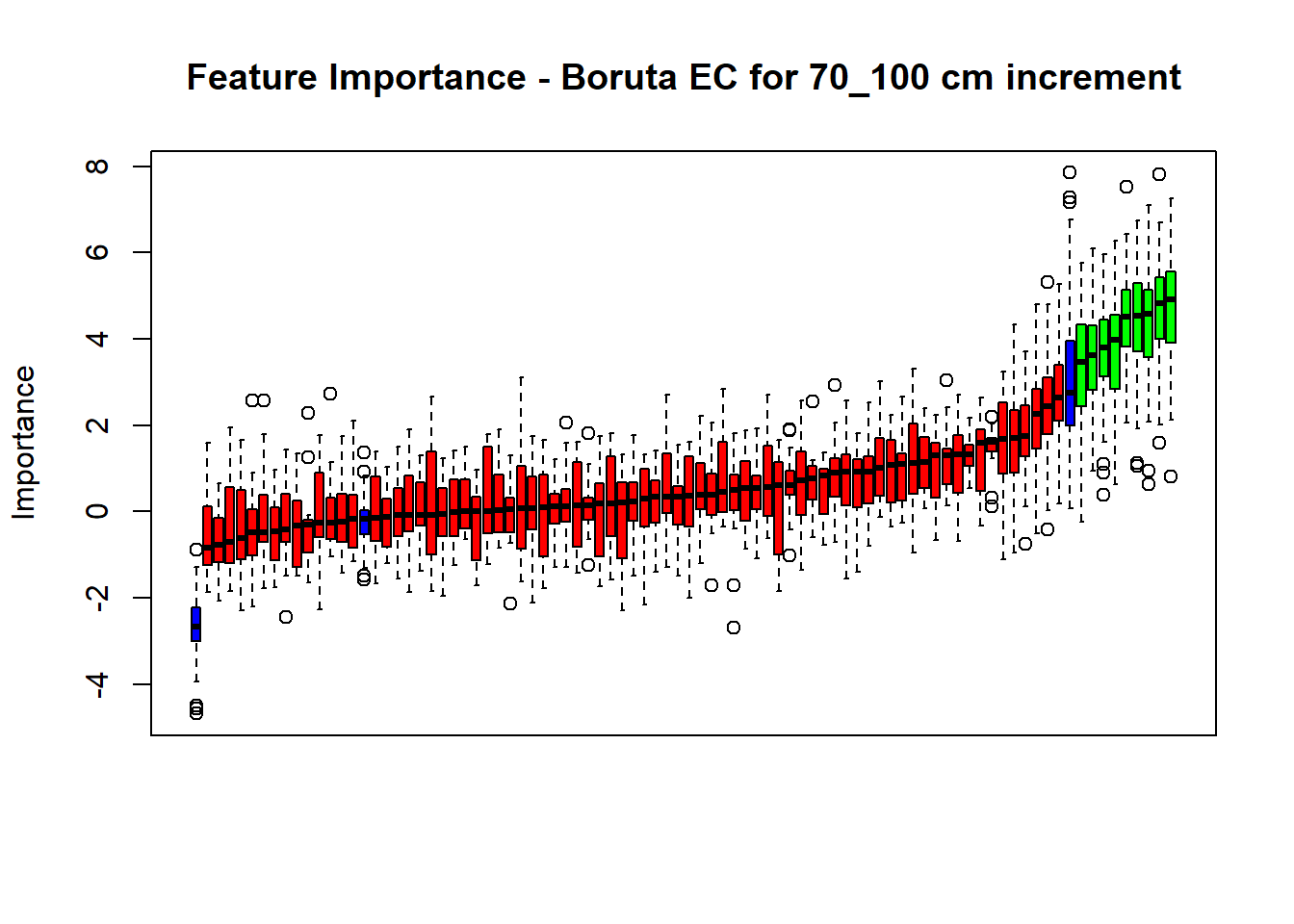

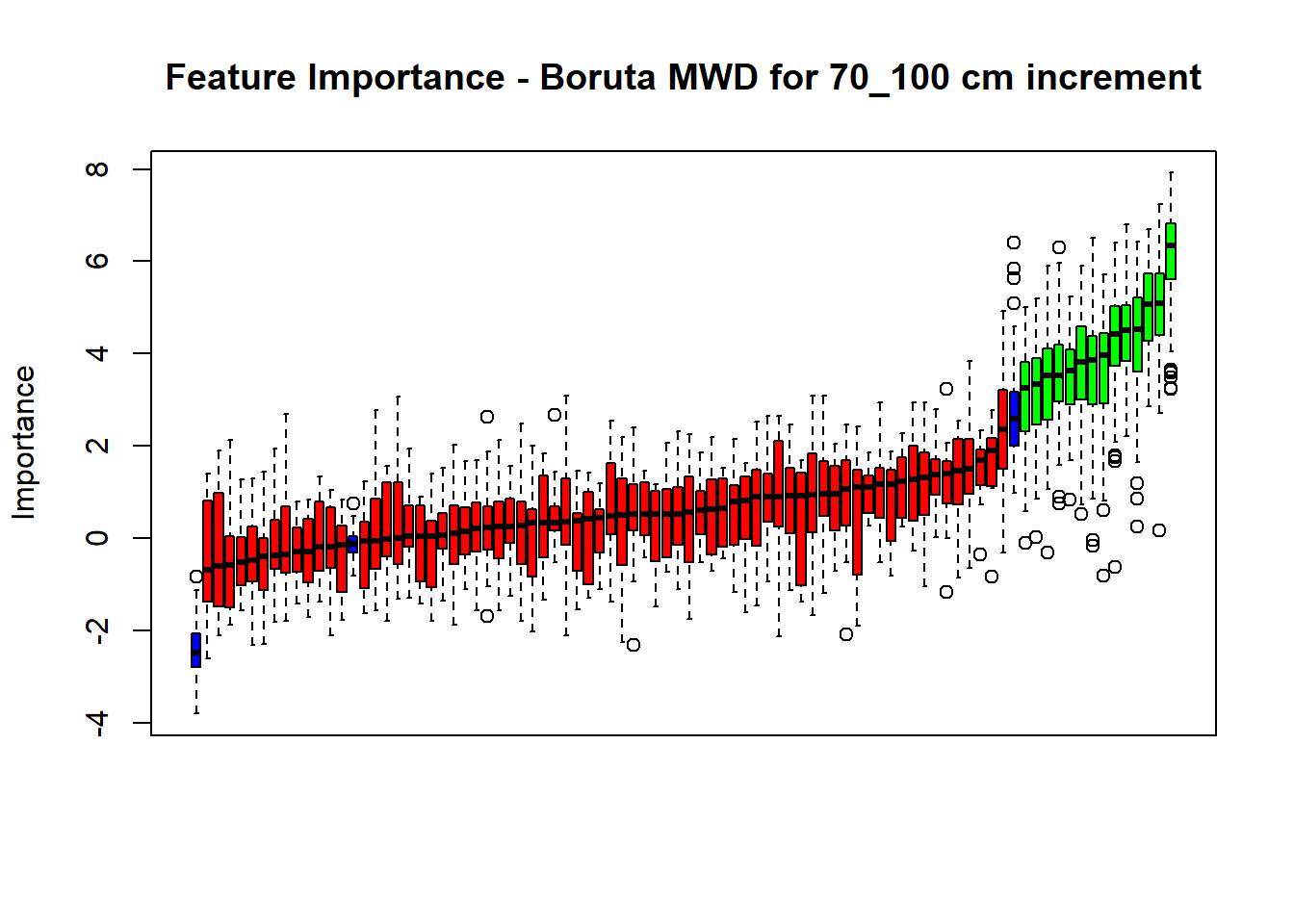

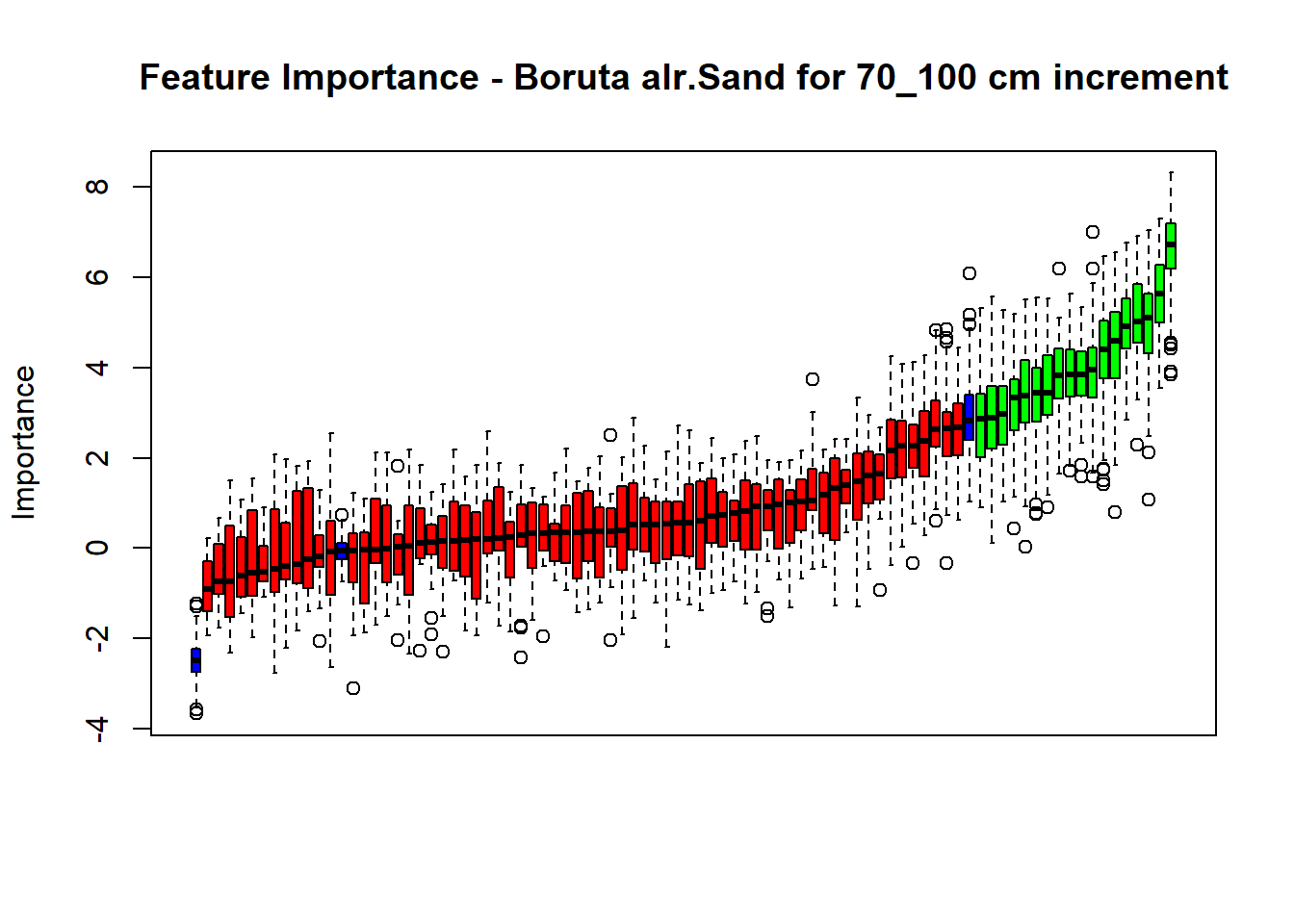

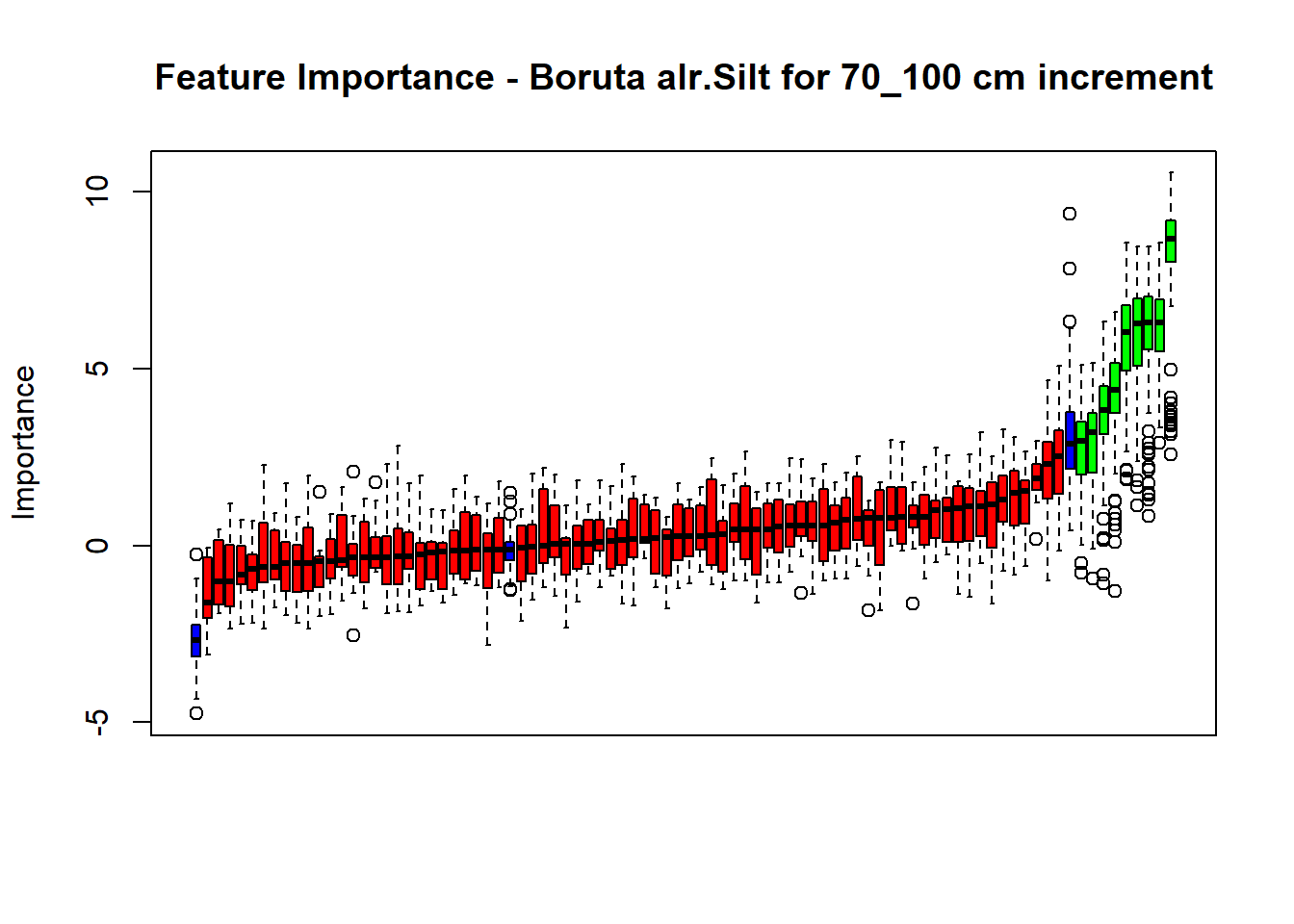

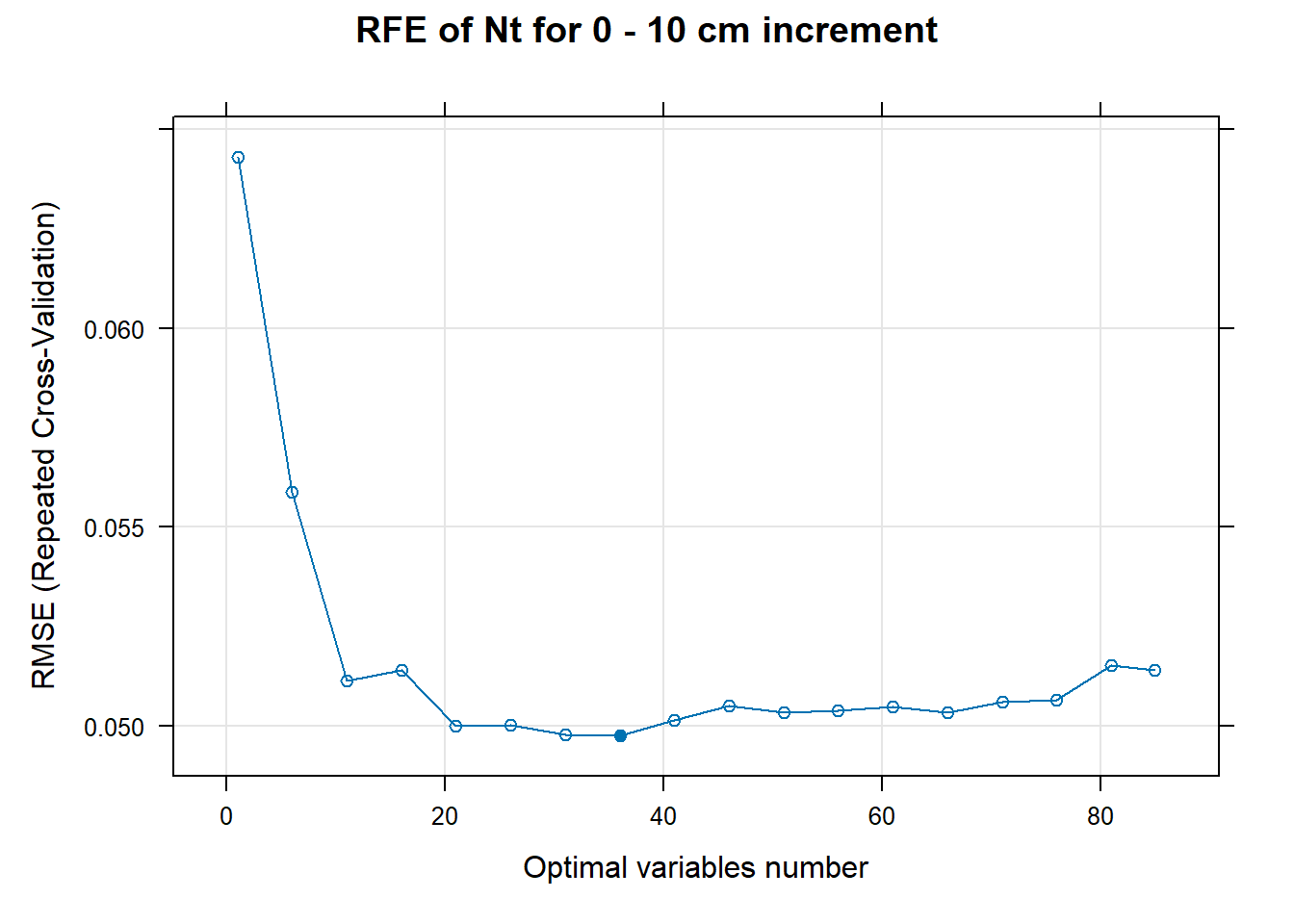

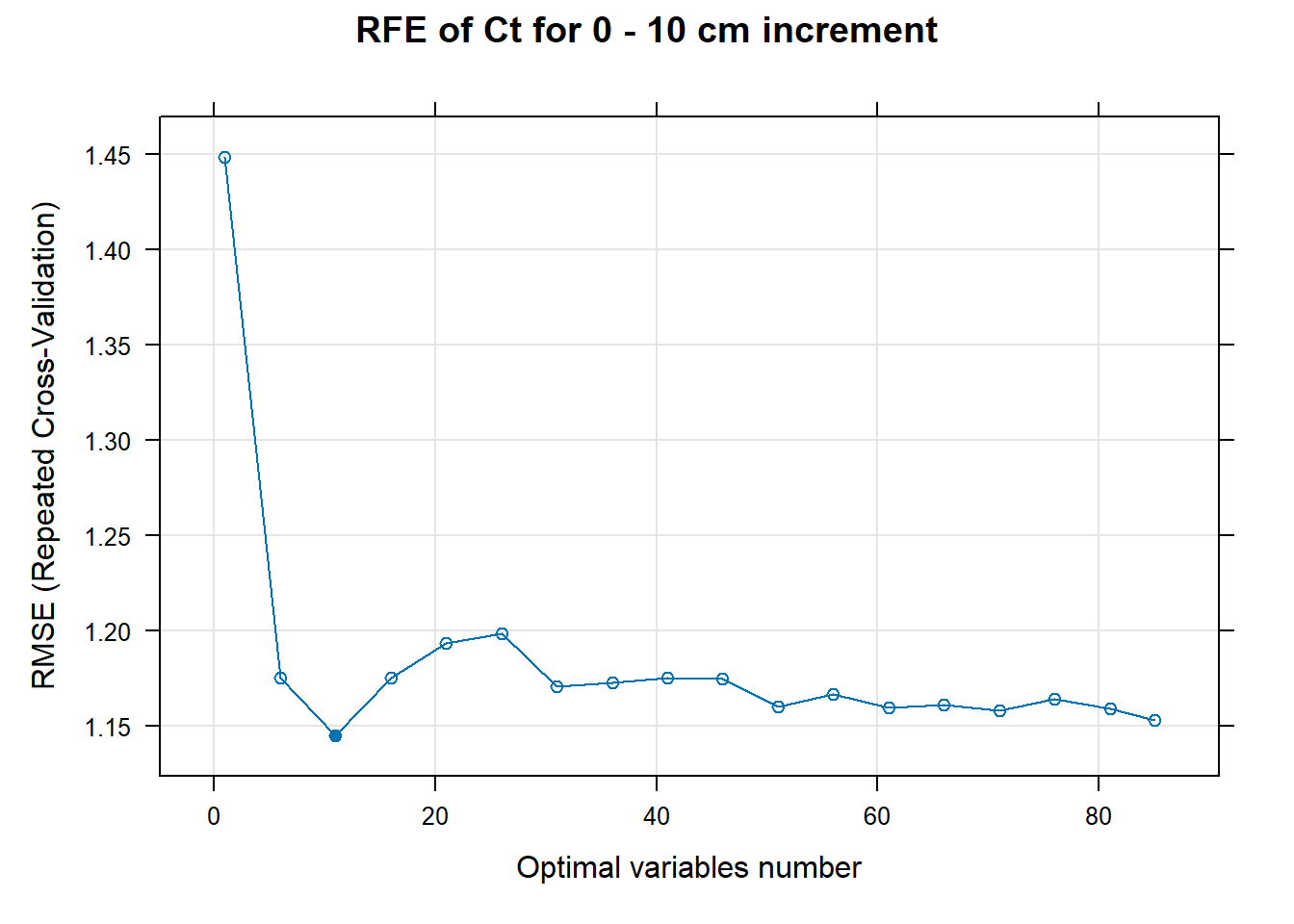

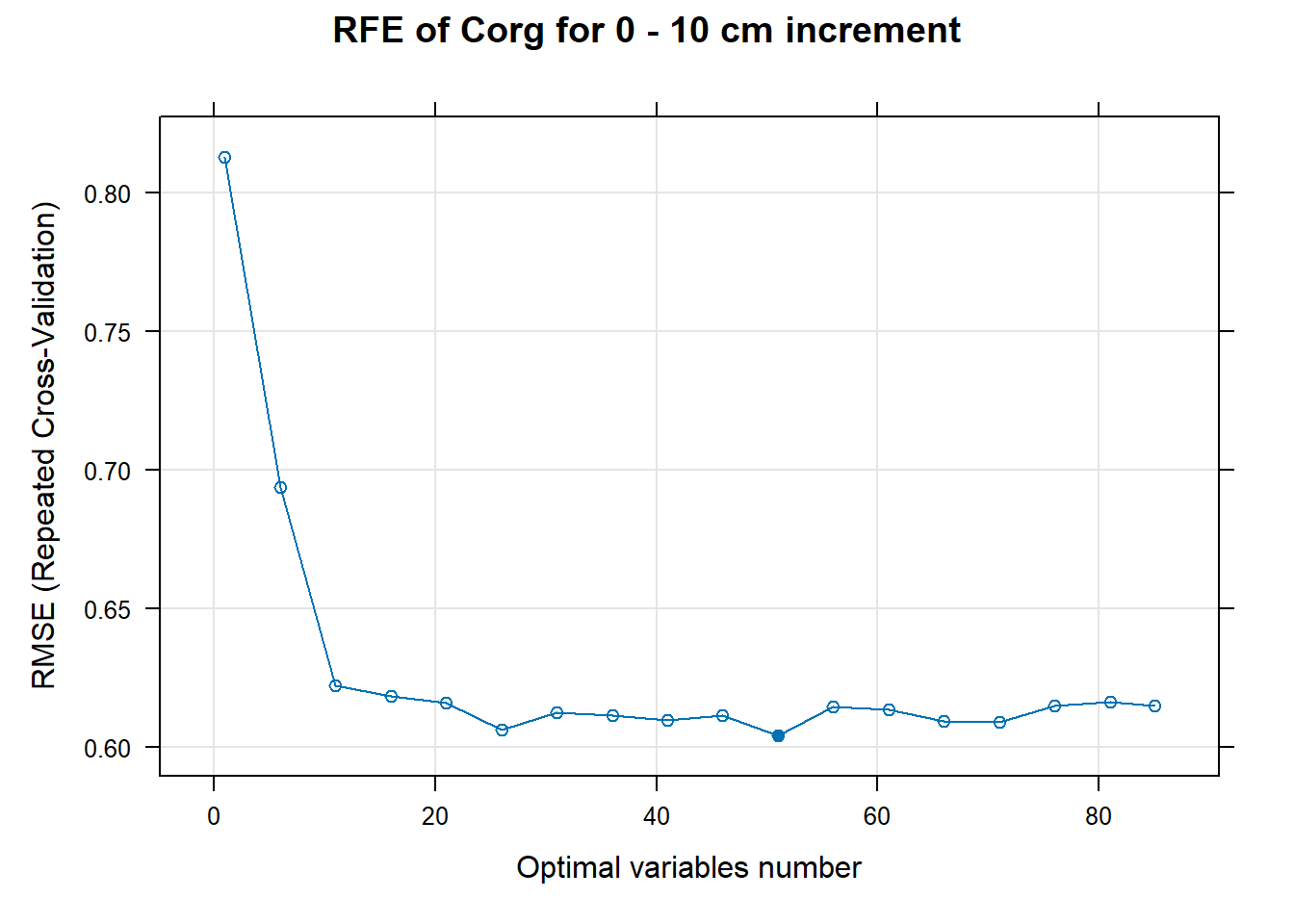

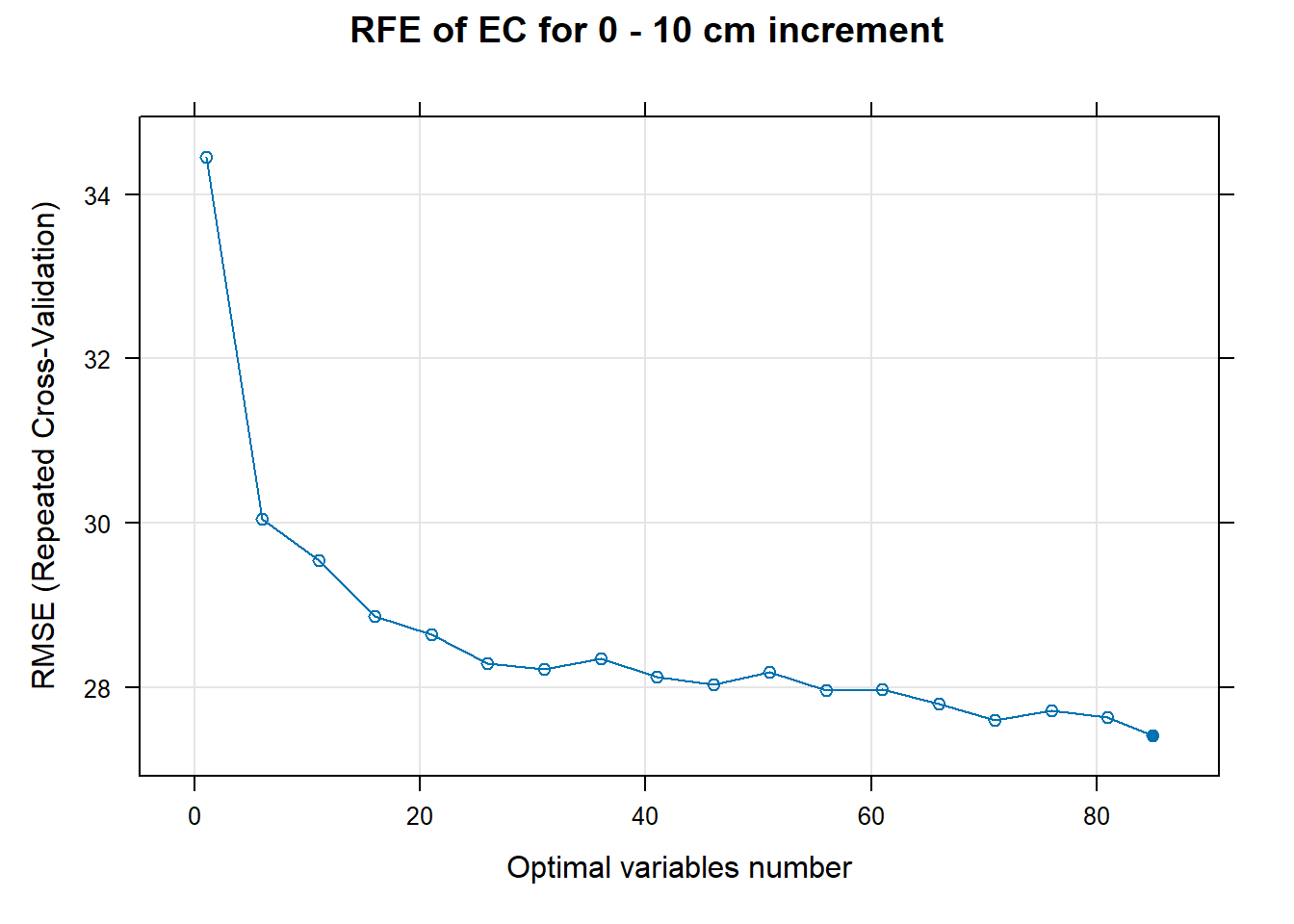

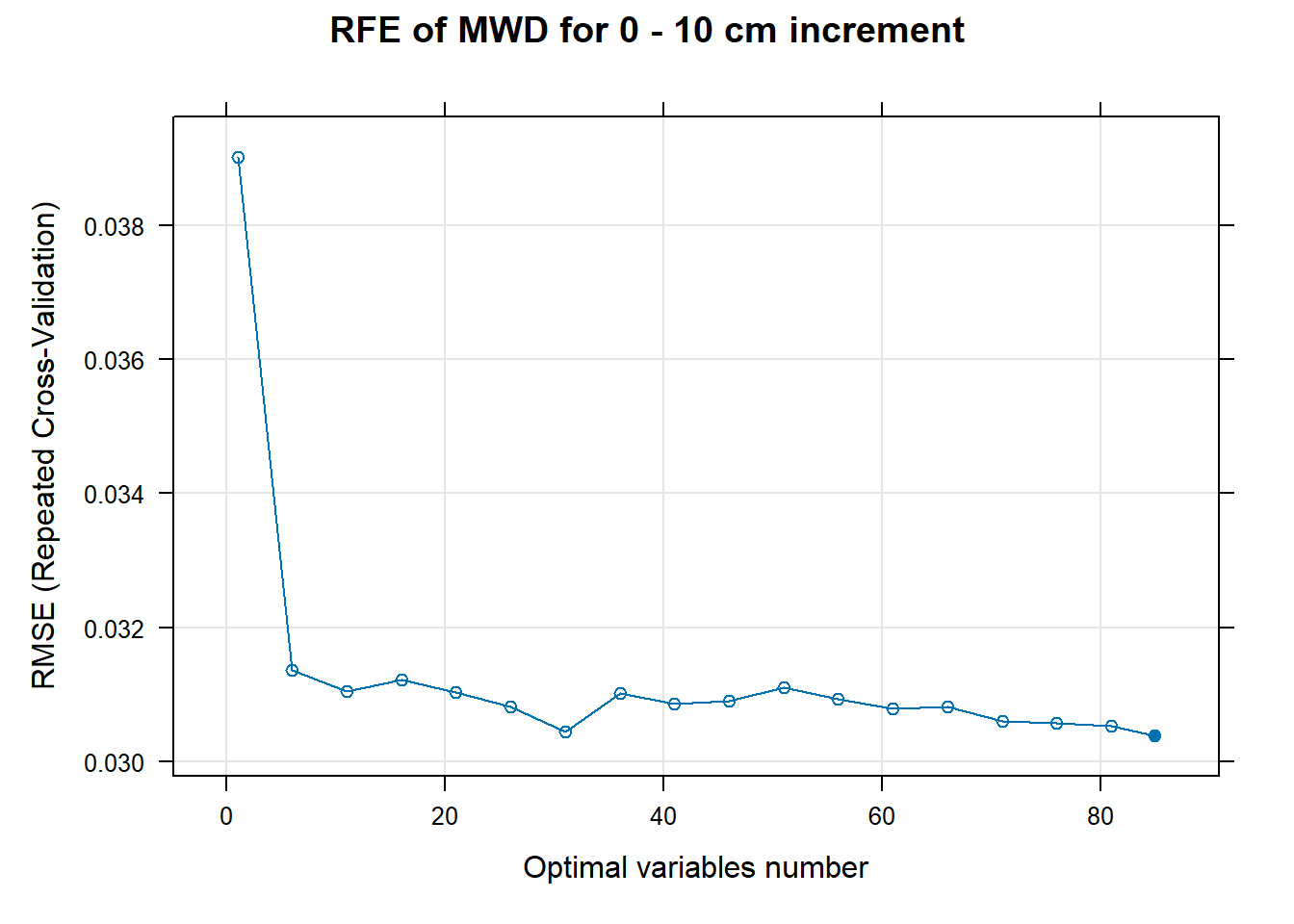

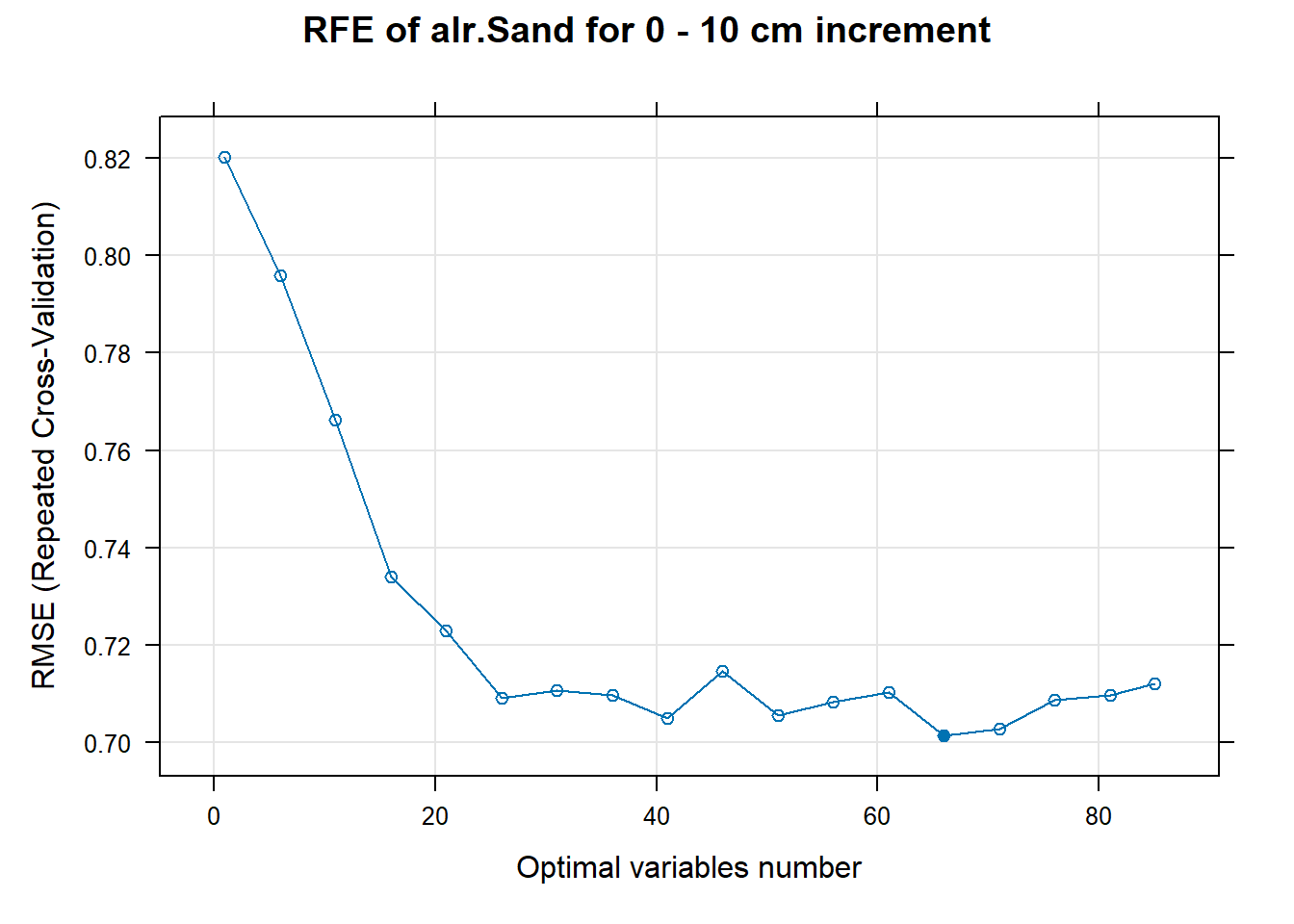

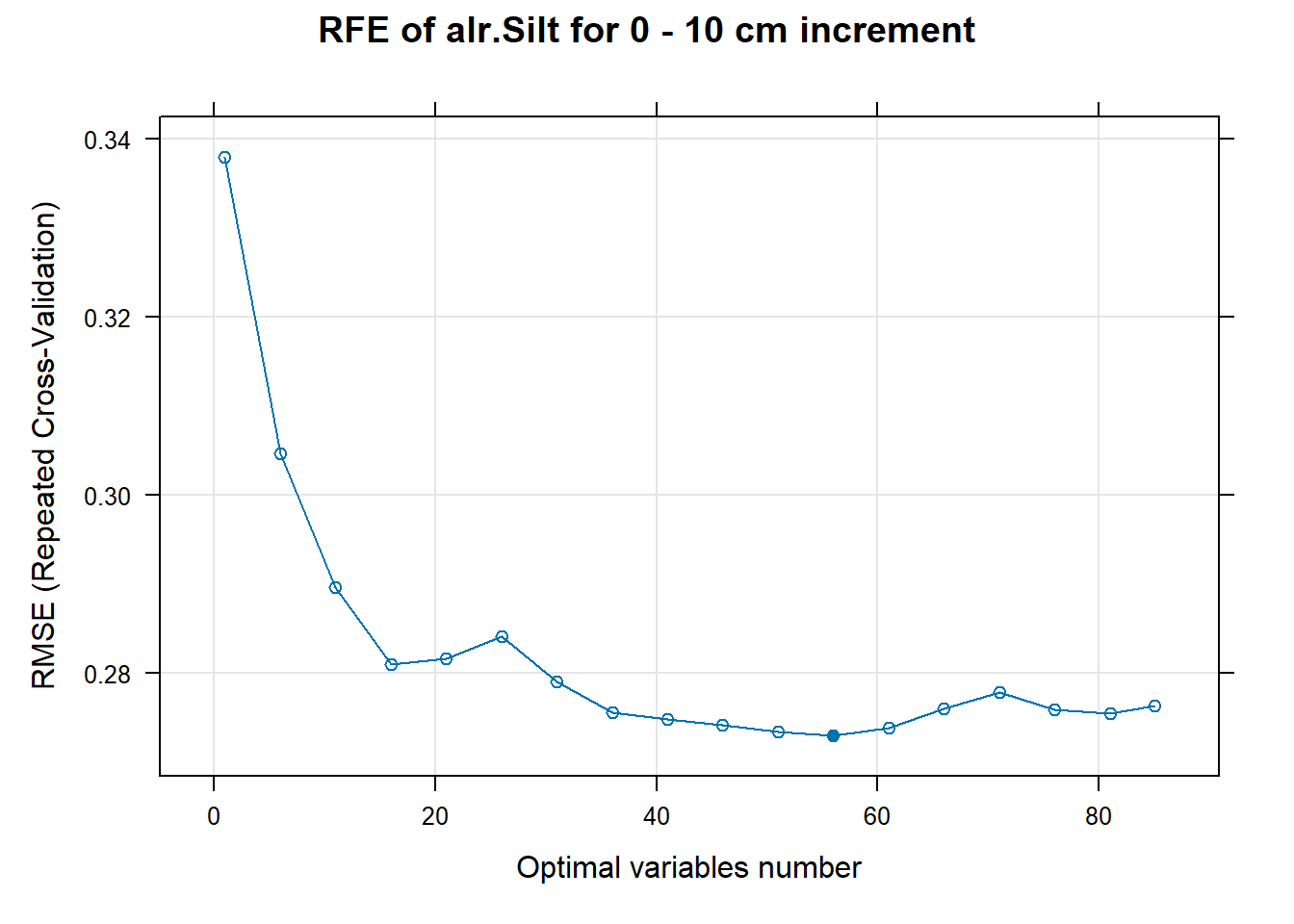

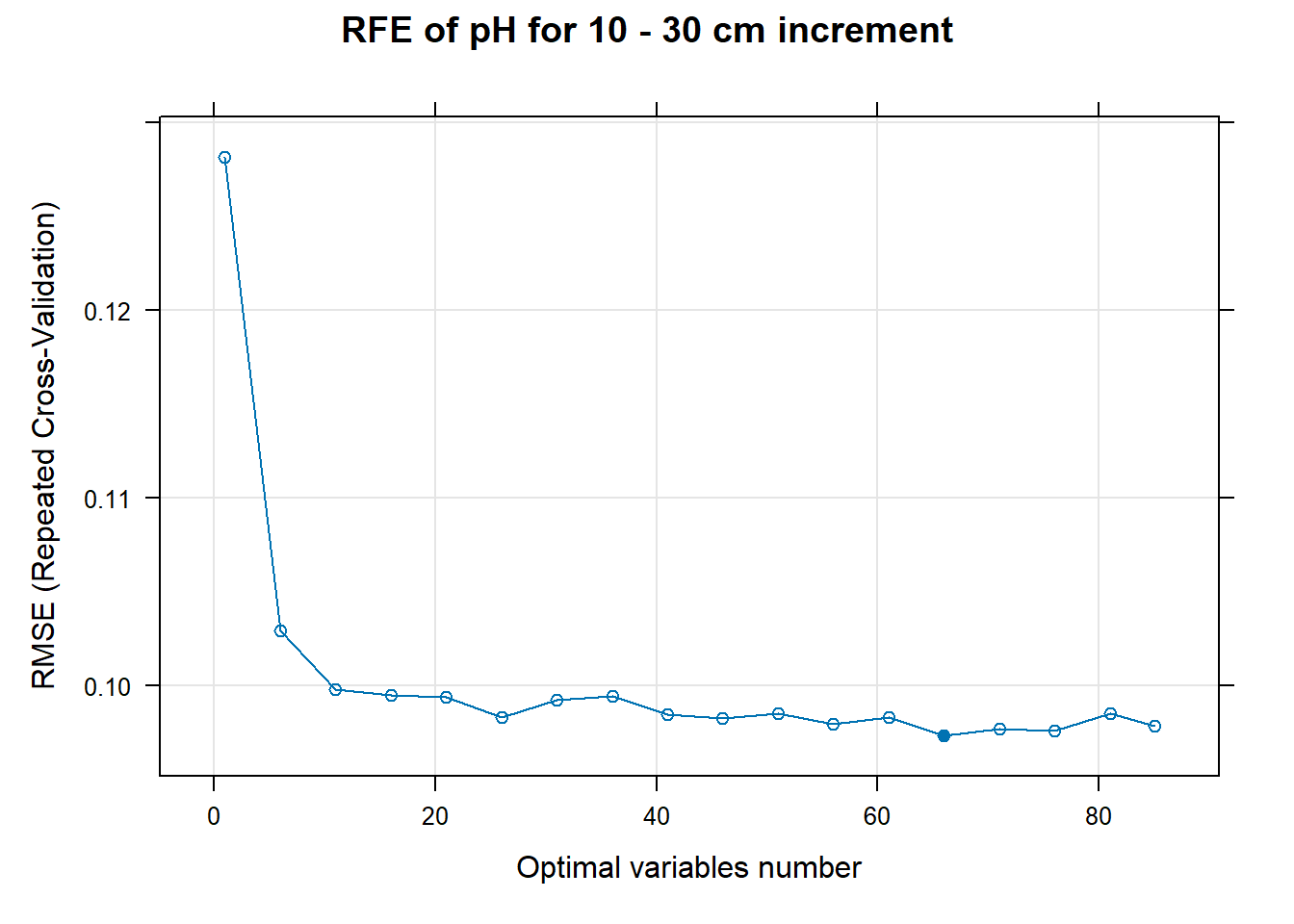

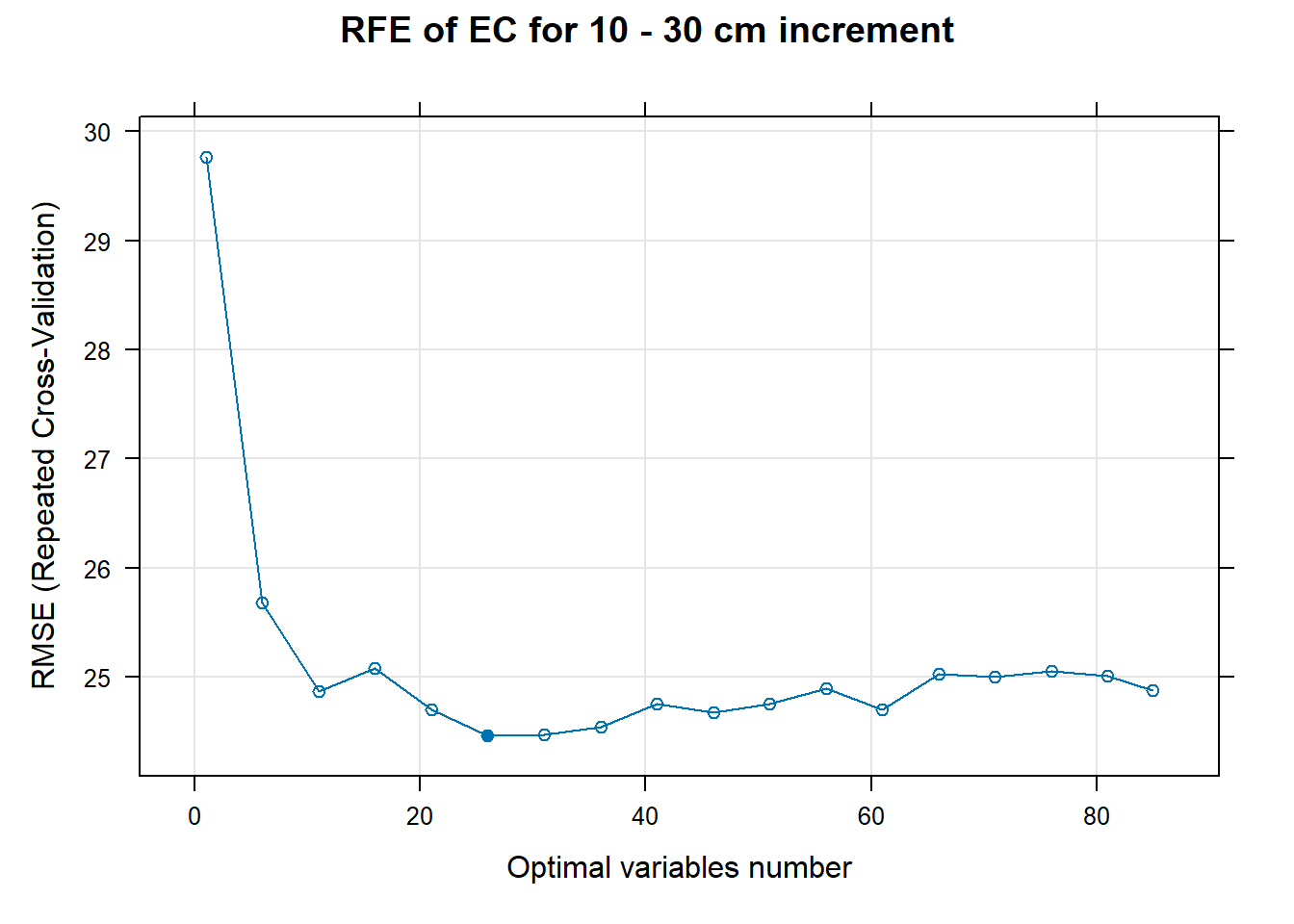

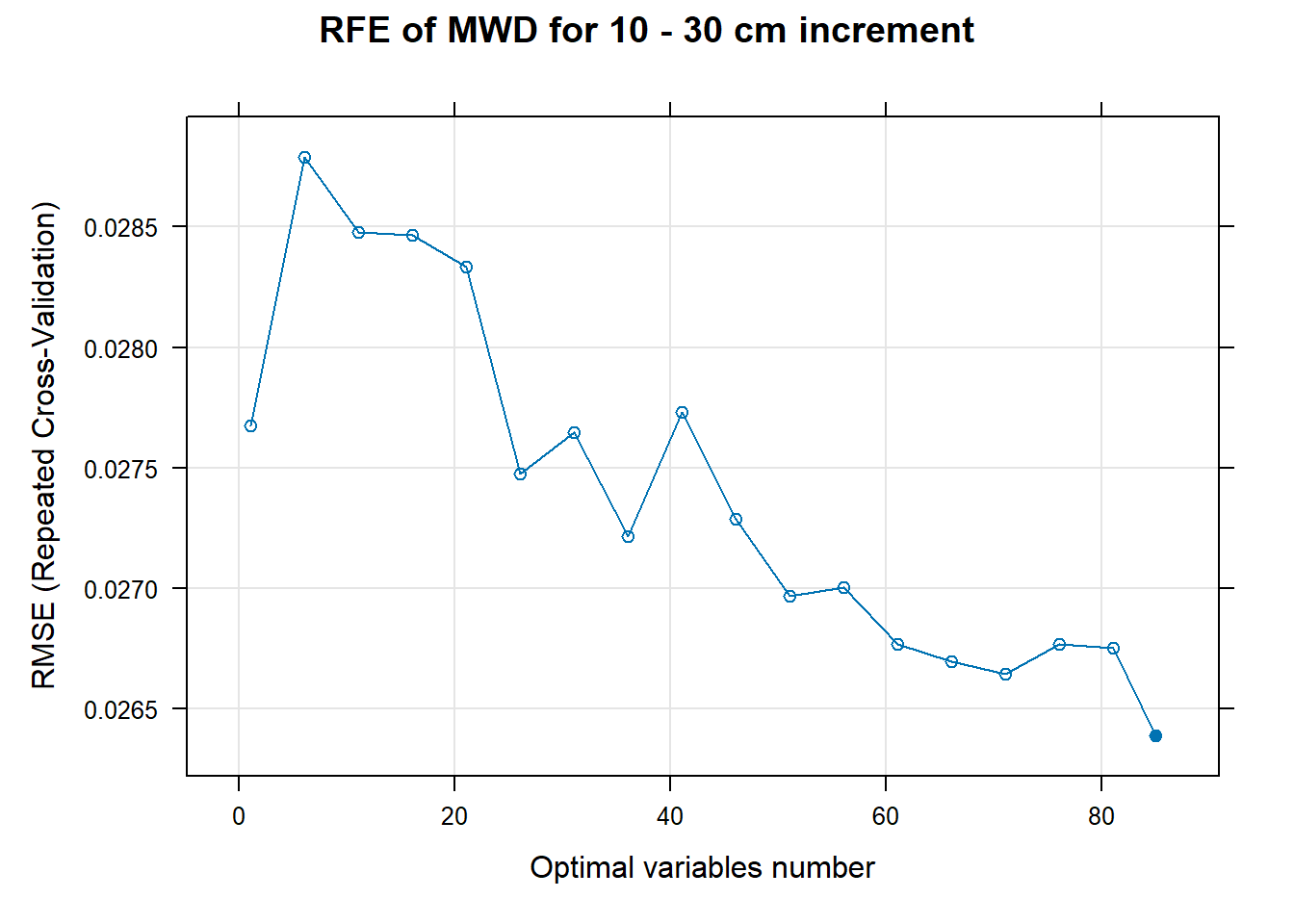

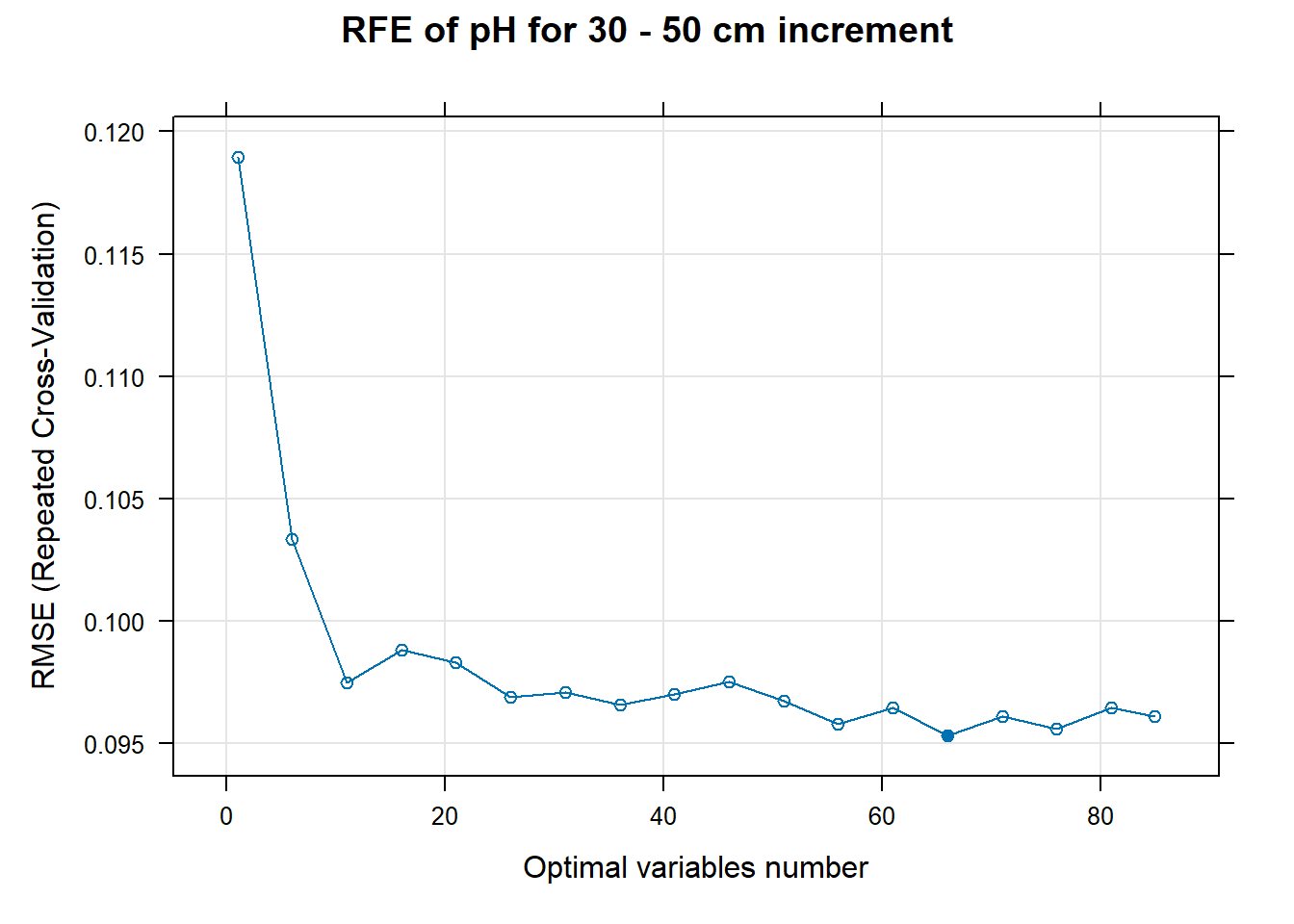

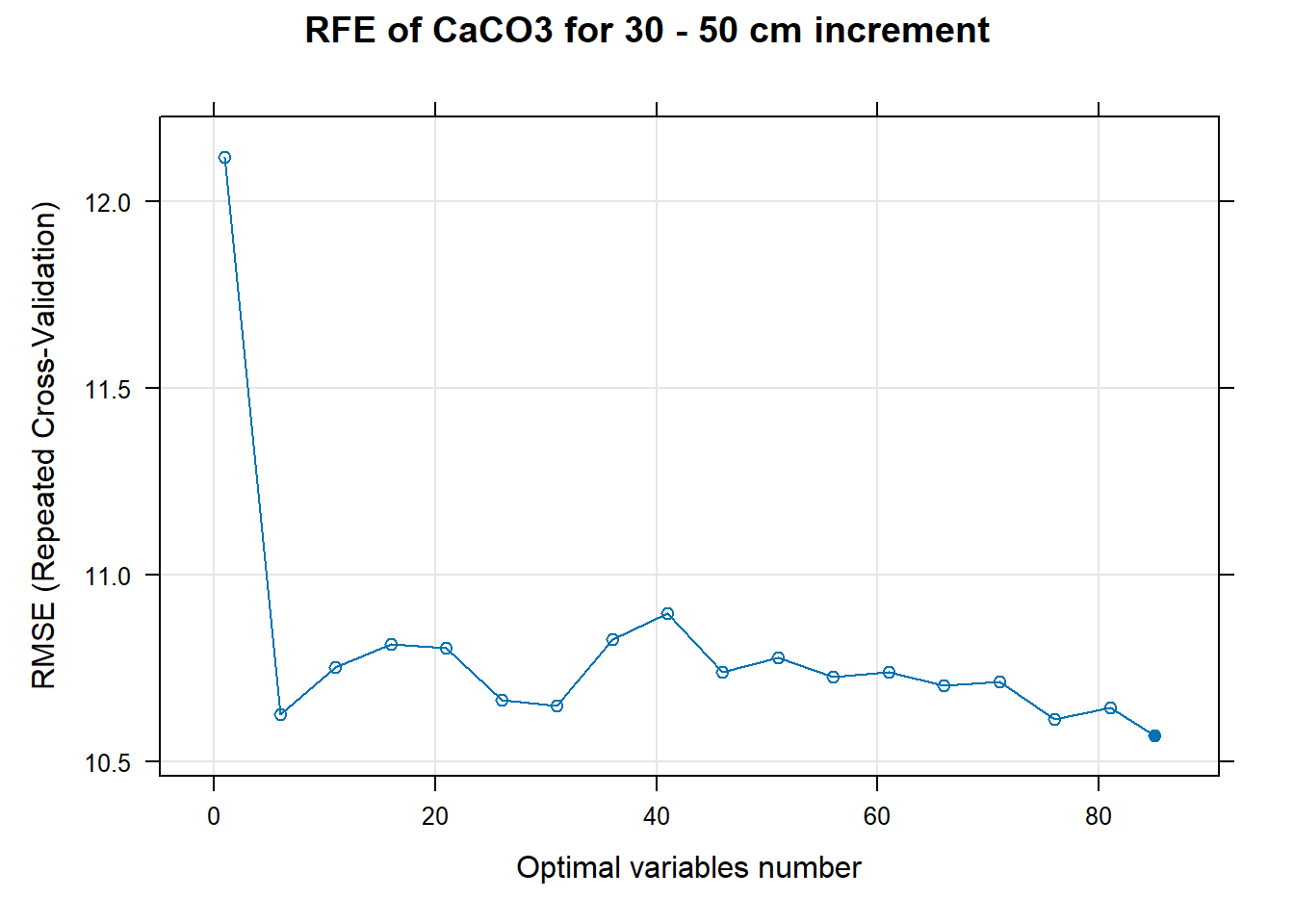

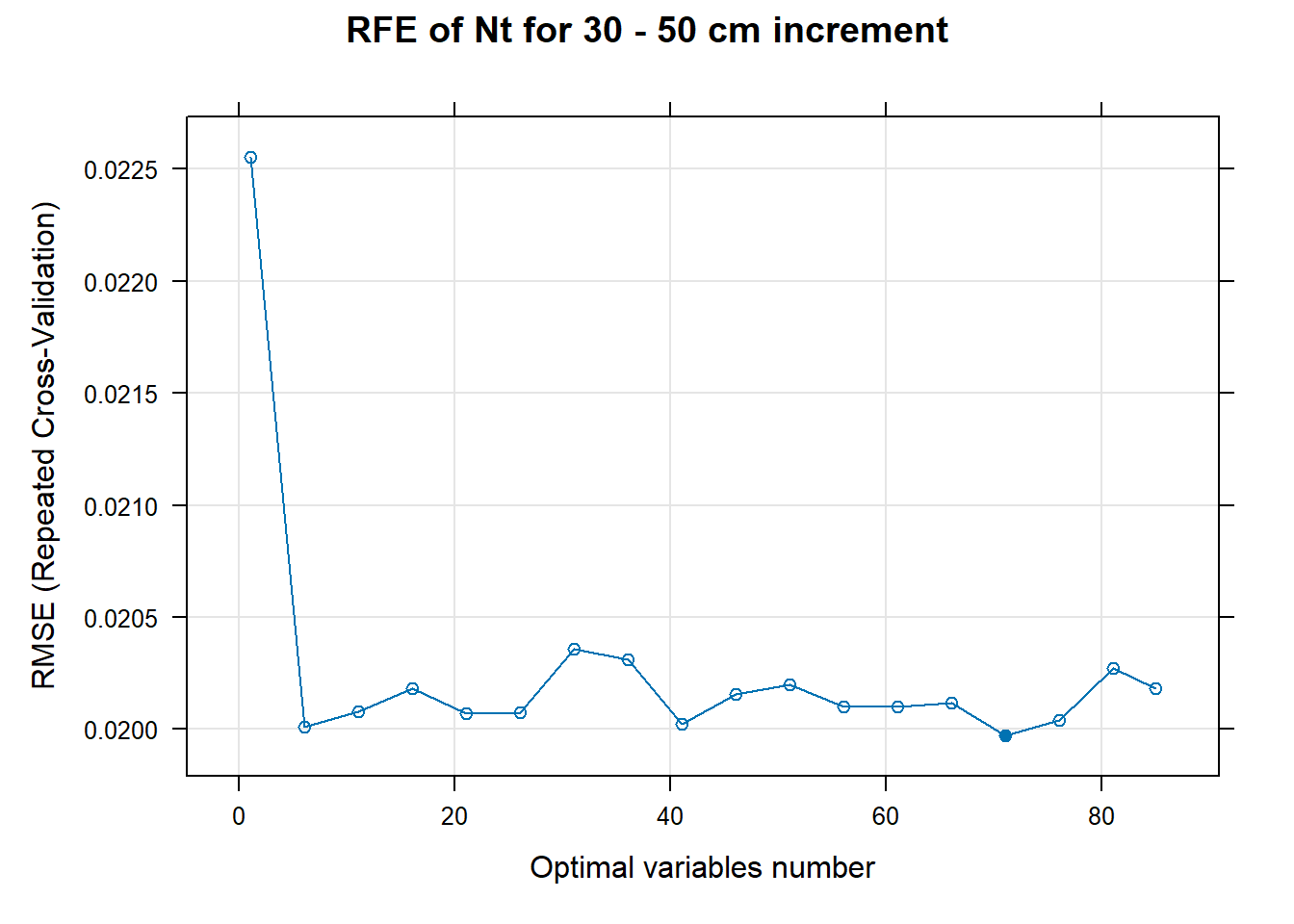

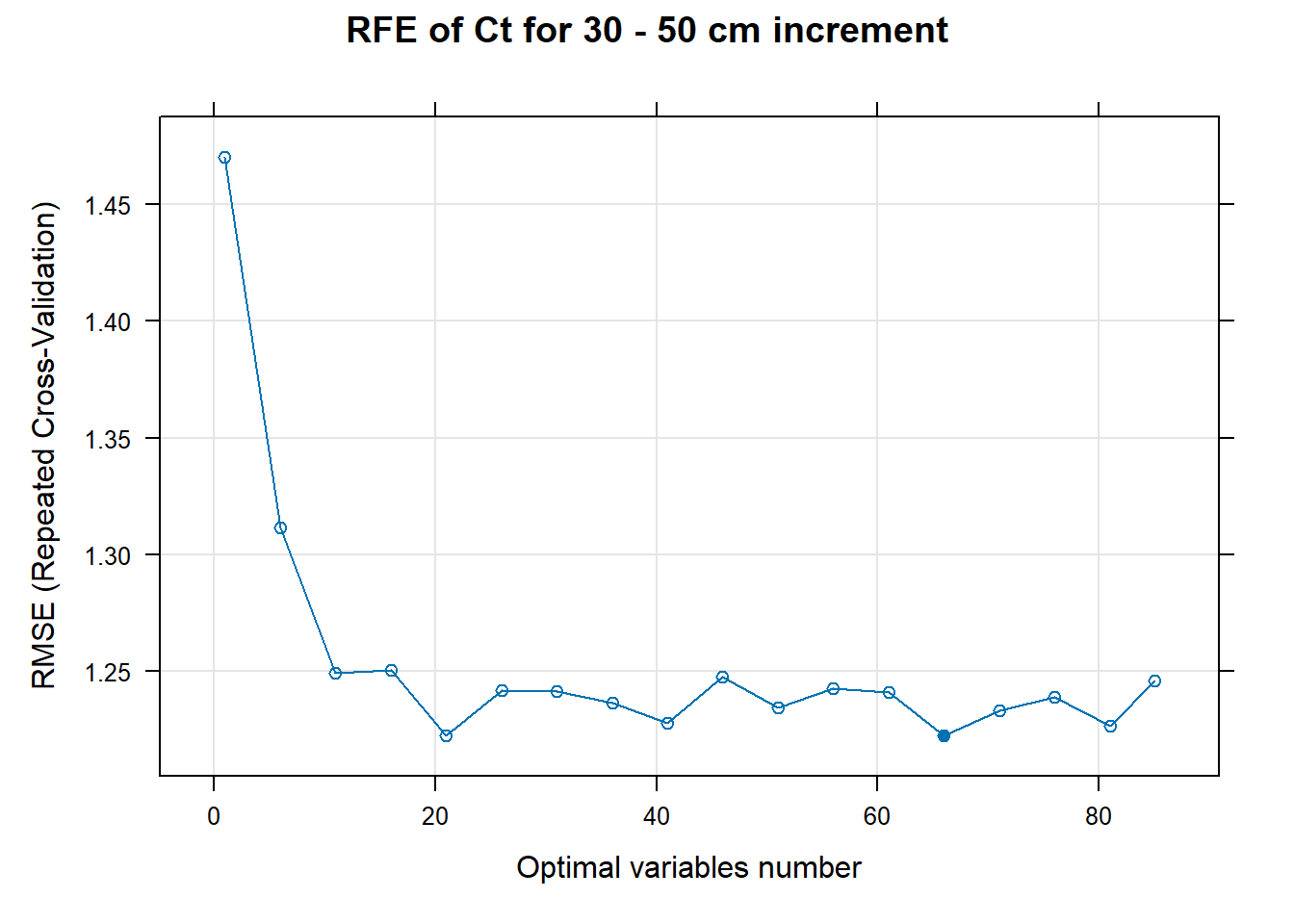

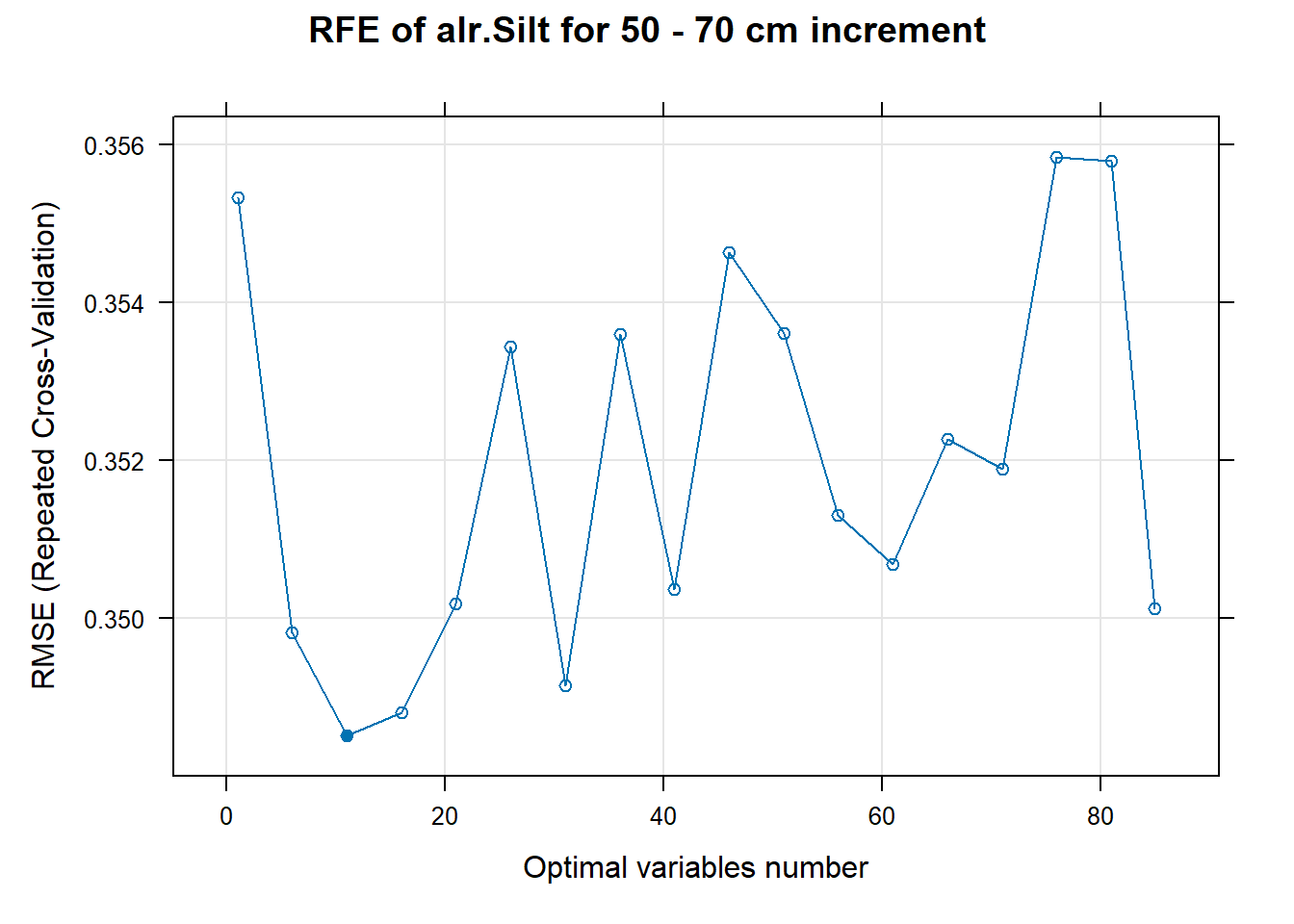

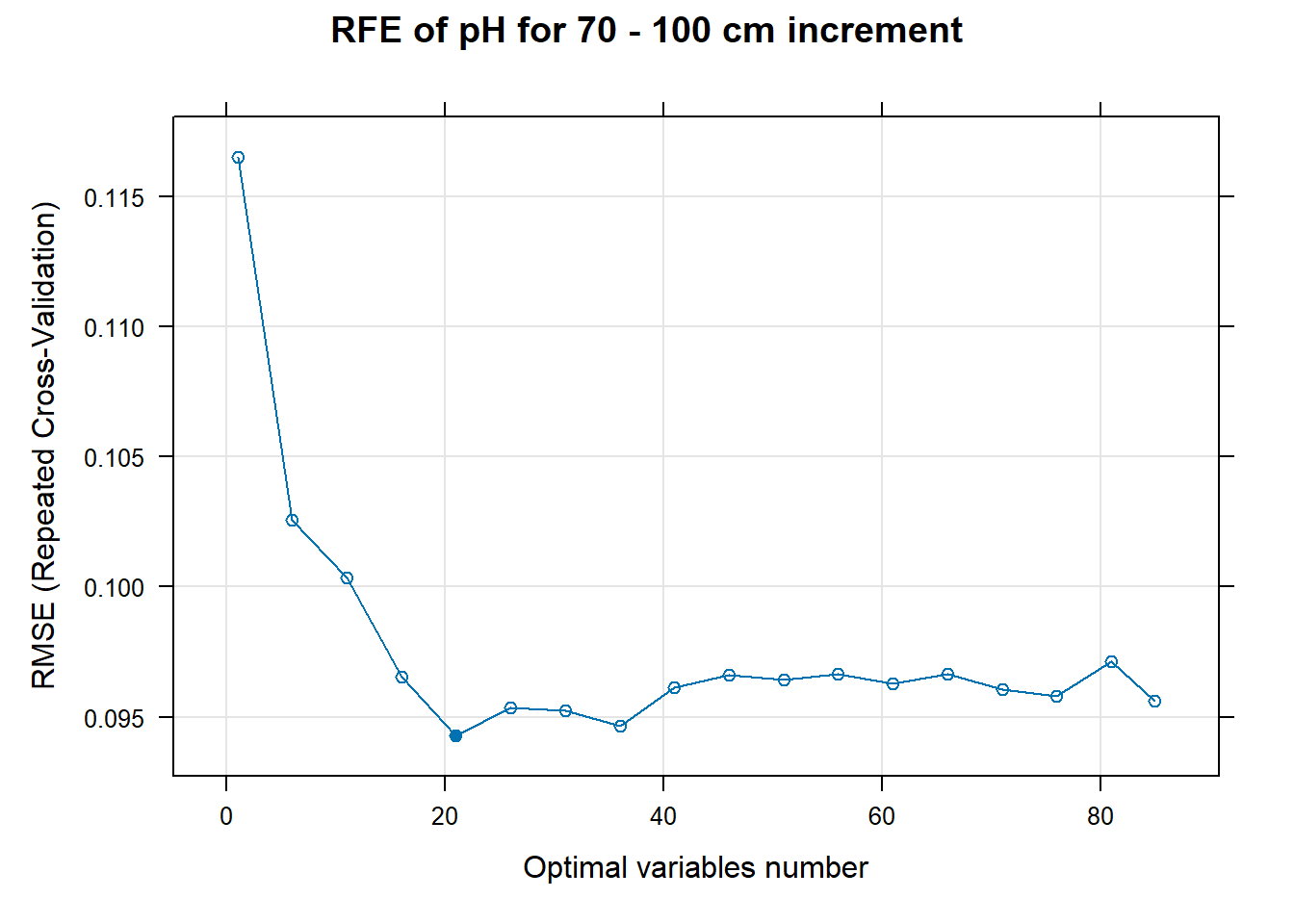

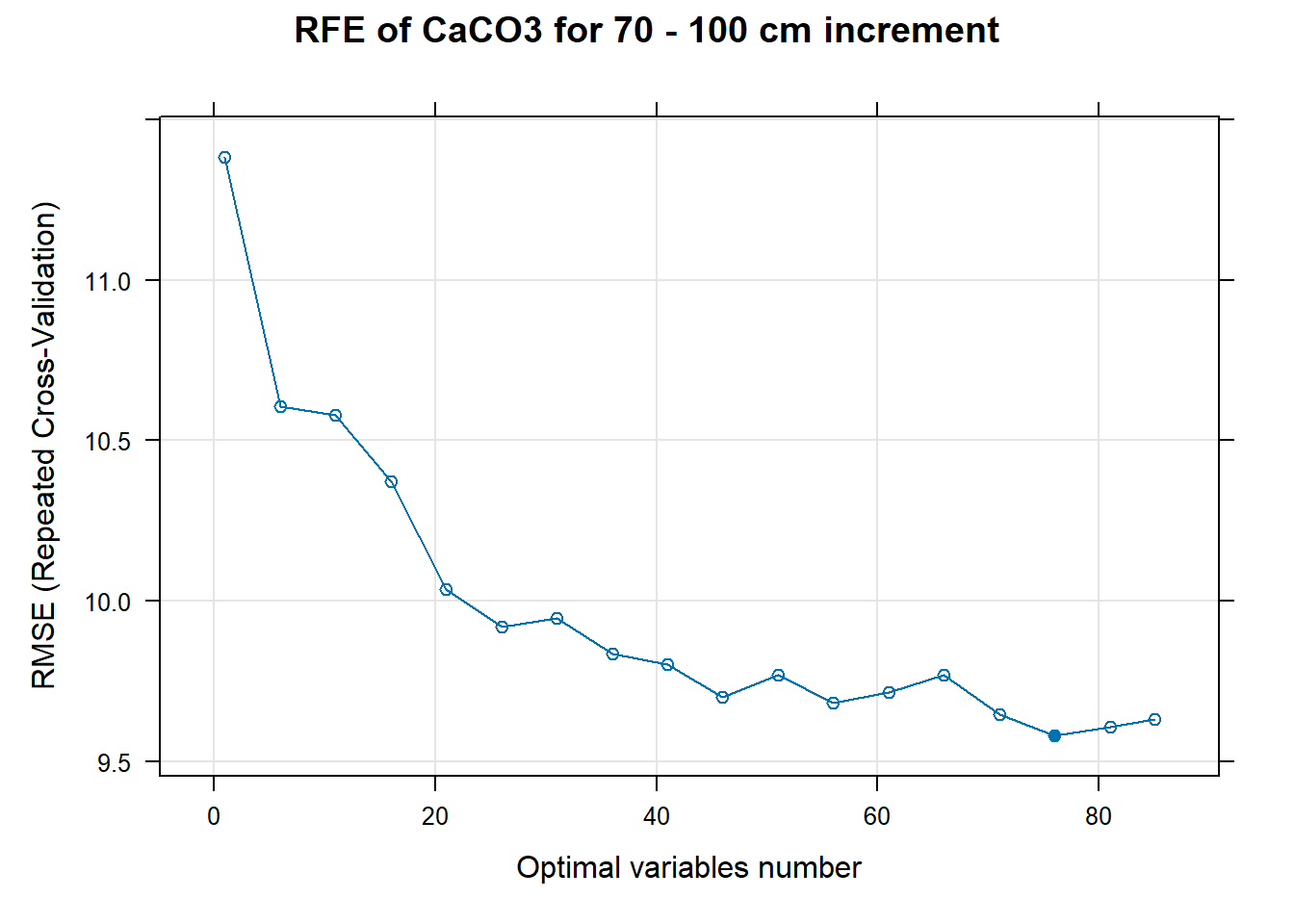

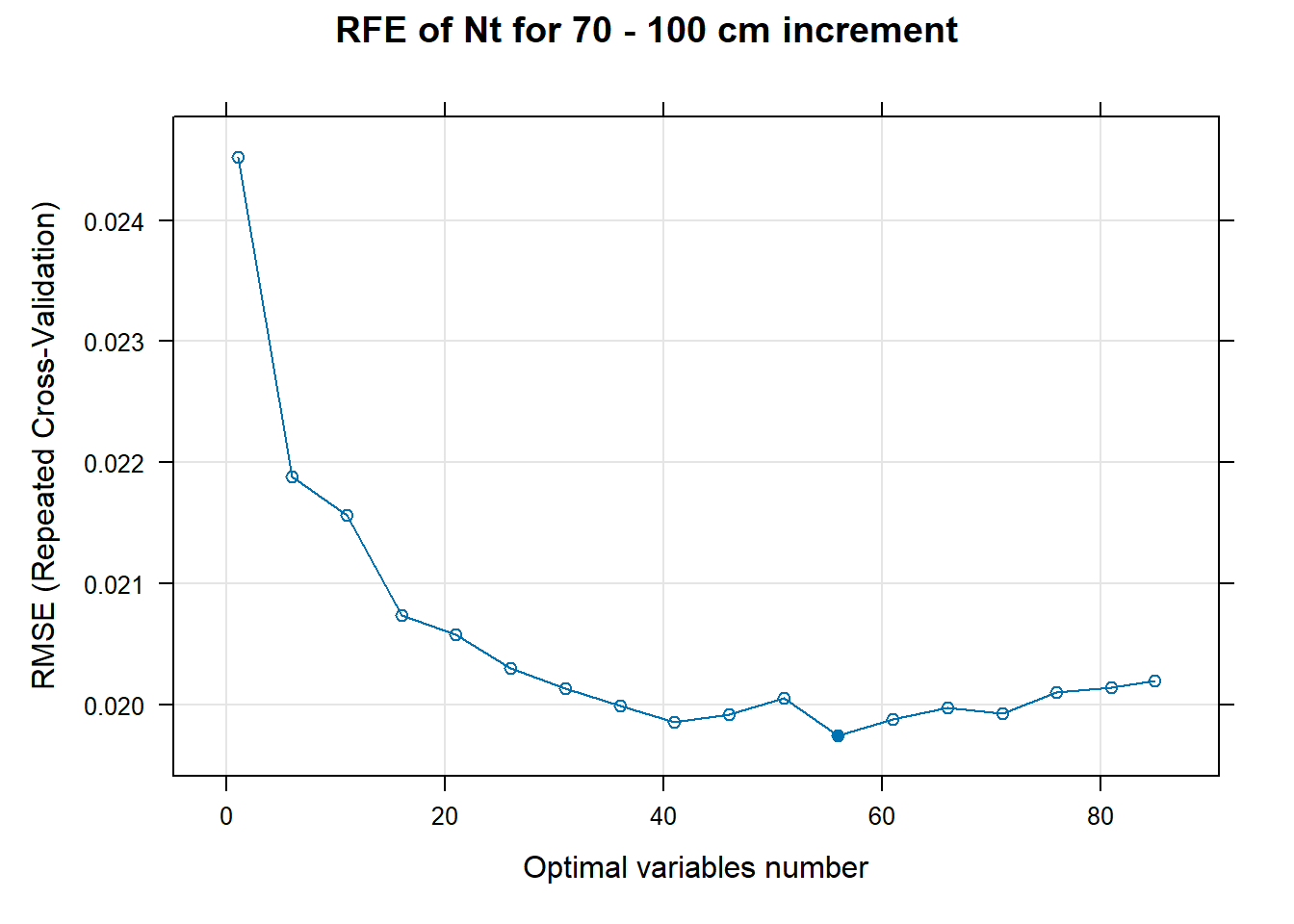

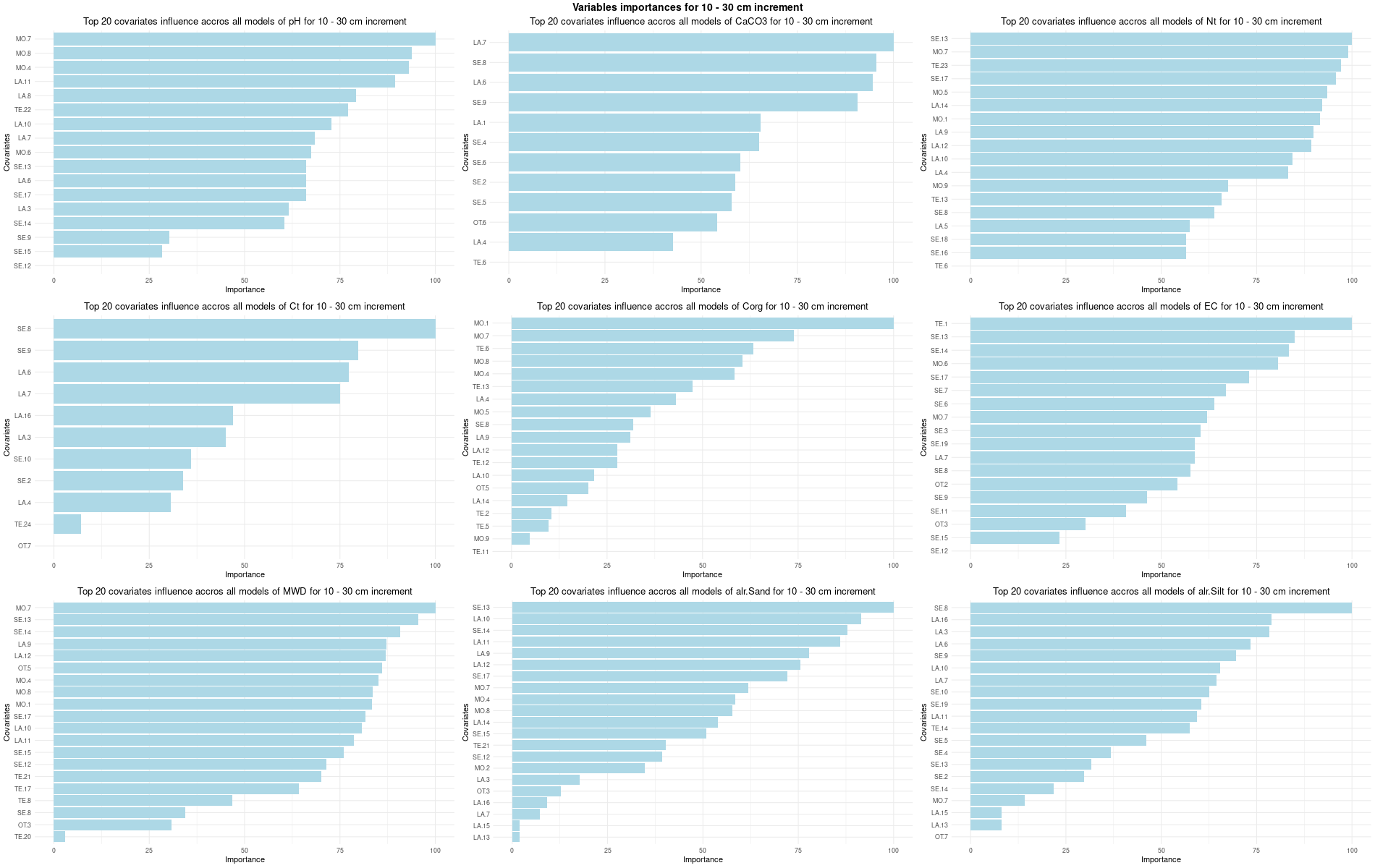

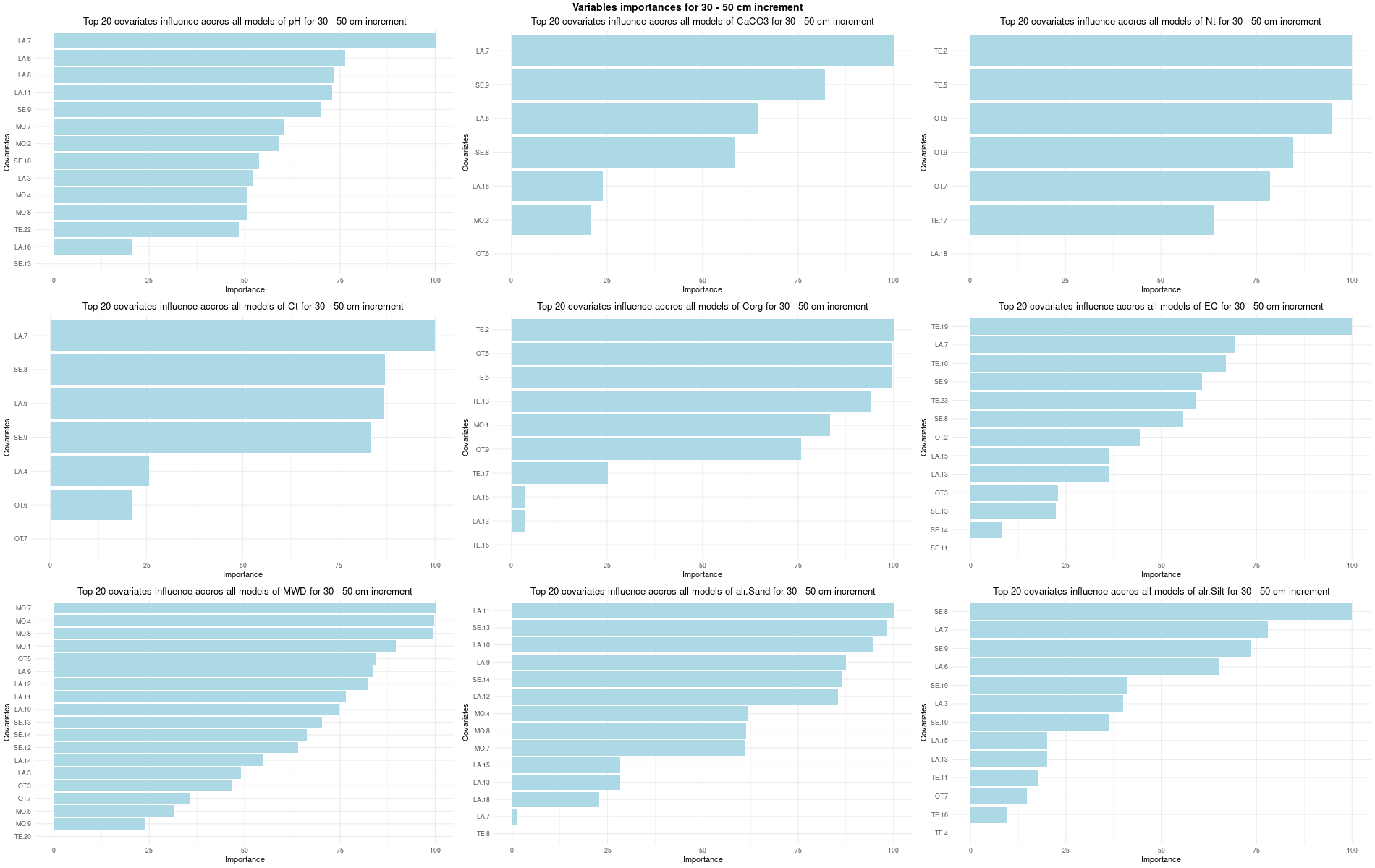

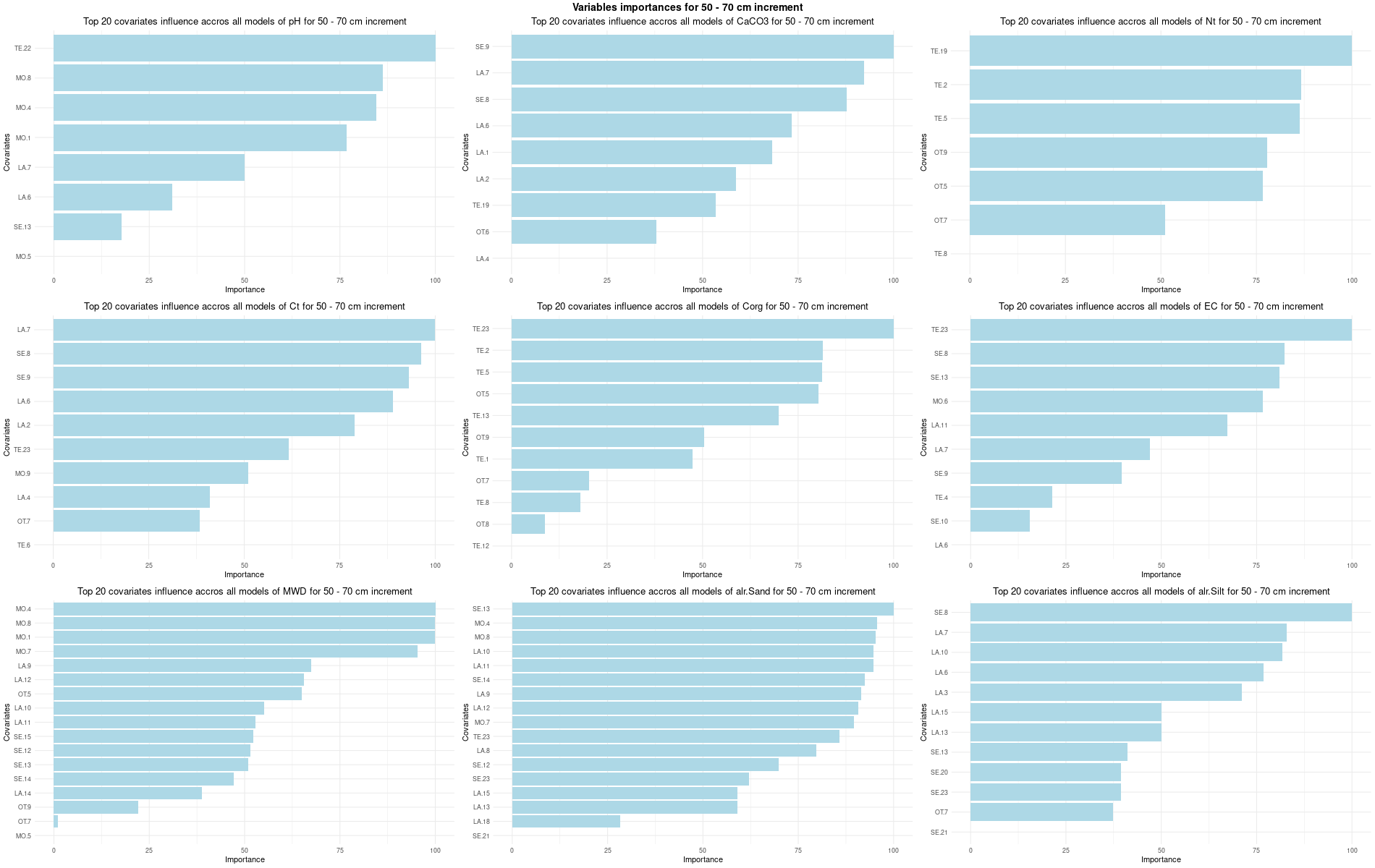

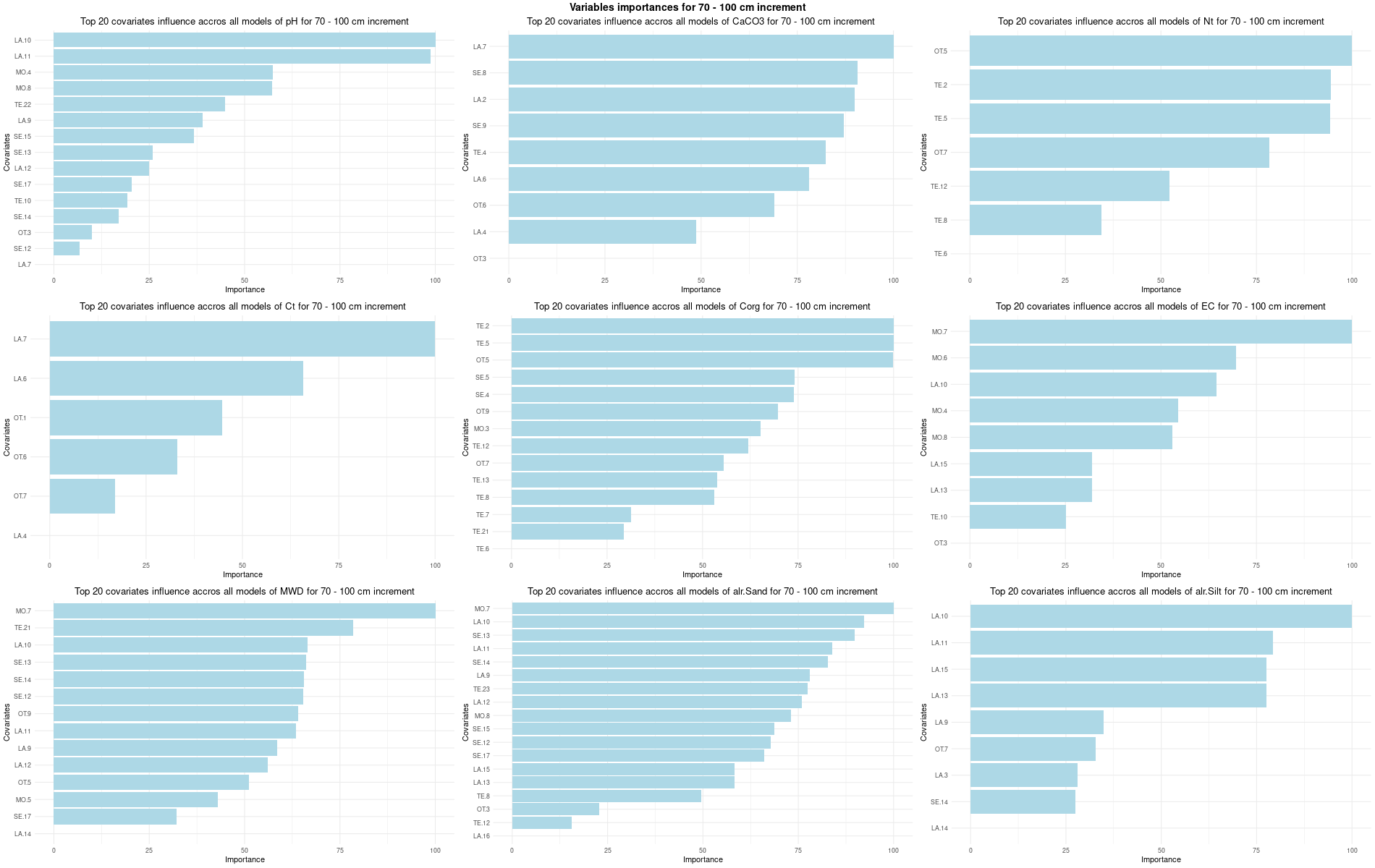

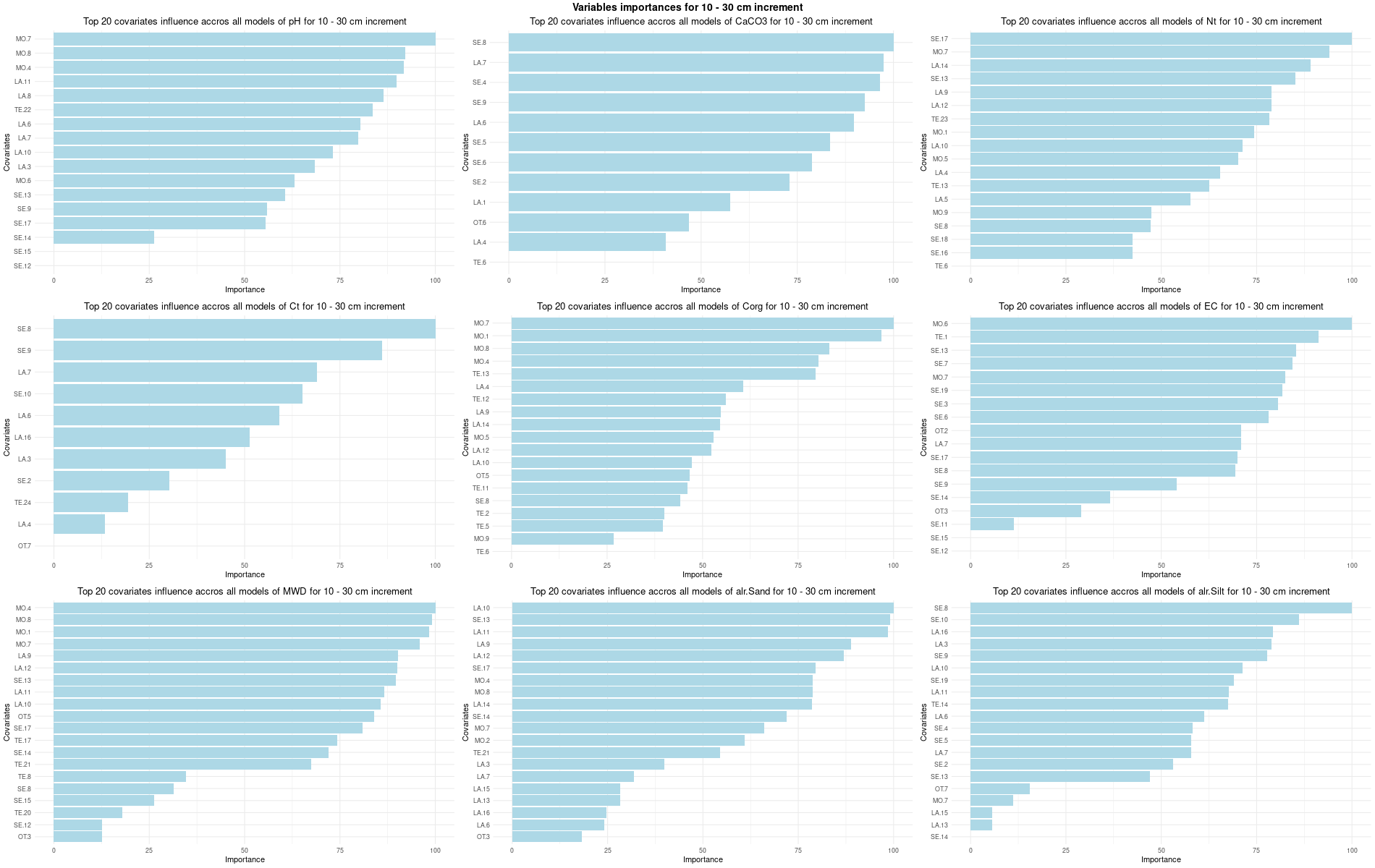

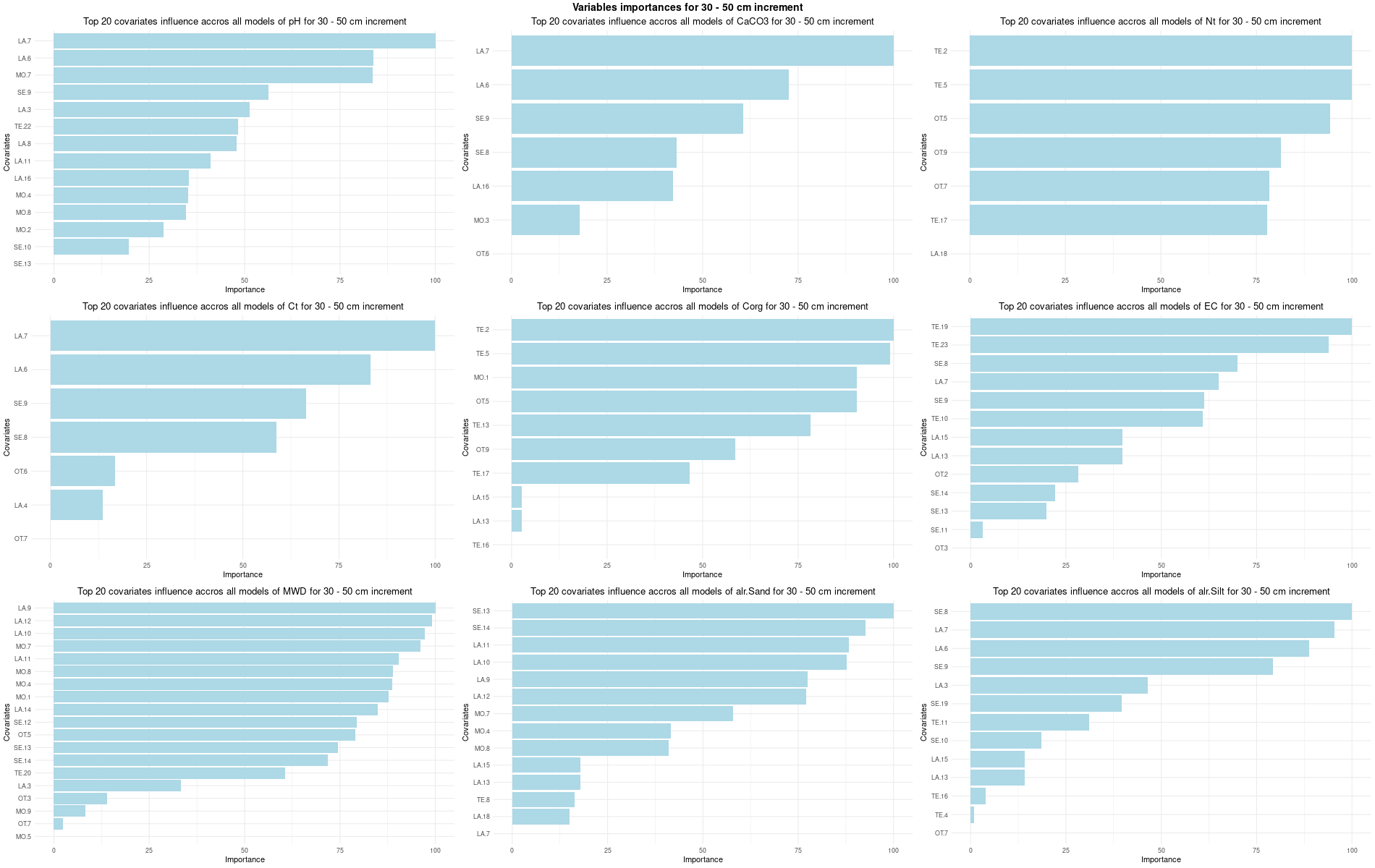

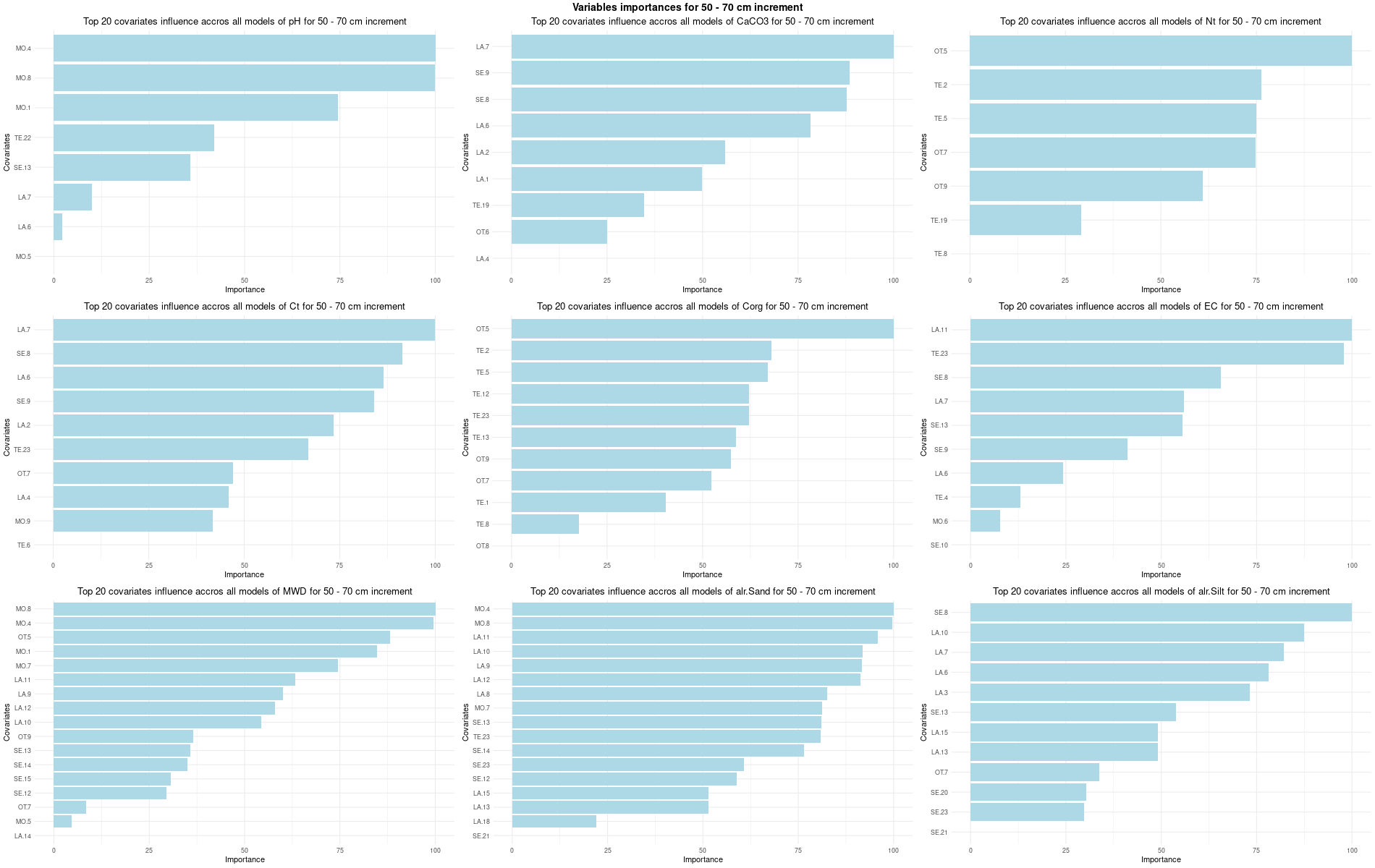

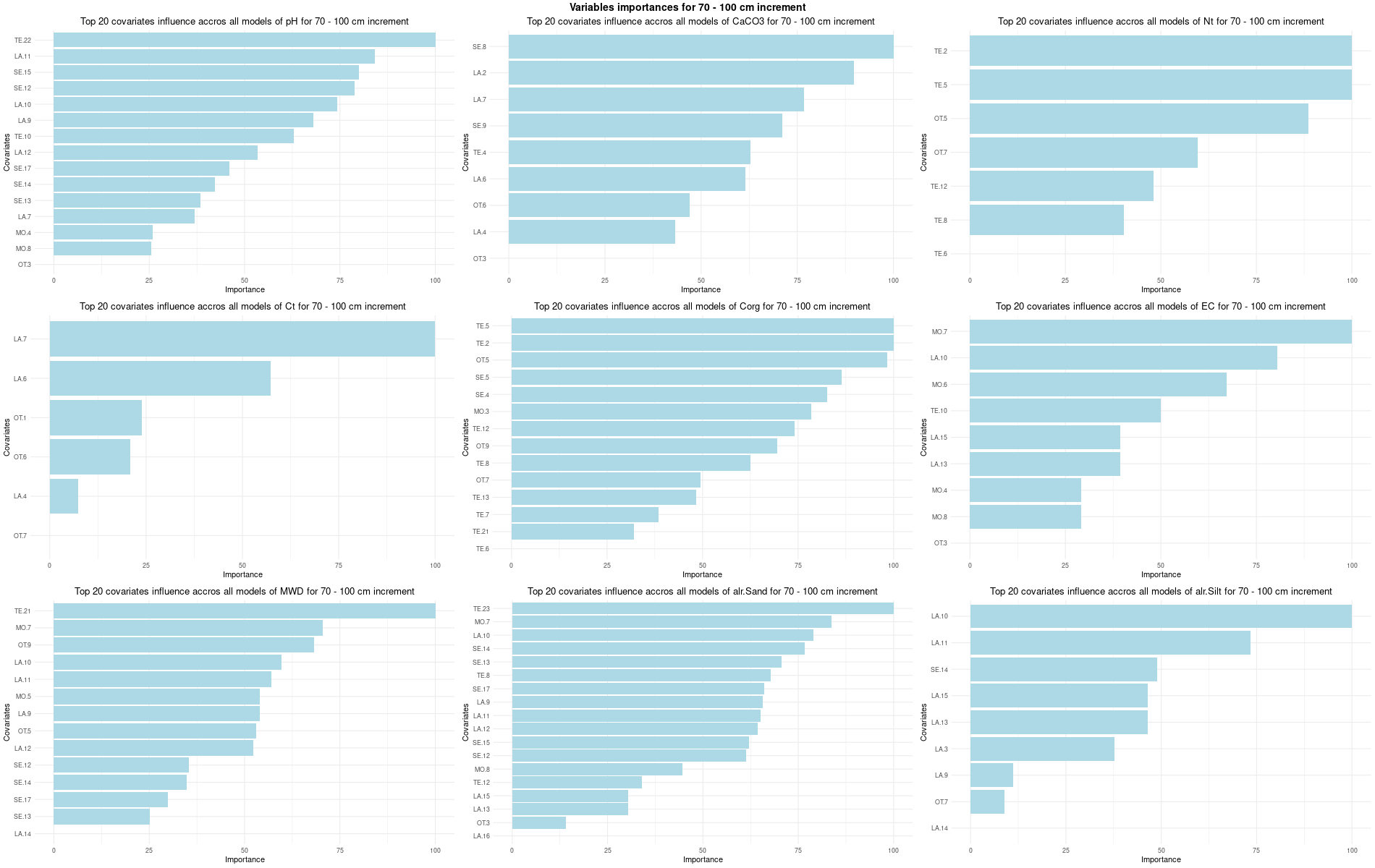

}7.2.5 Boruta and RFE selections

# 03.3 Boruta selection =========================================================

FormulaMLCon <- list()

Preprocess <- list()

for (i in 1:(ncol(Cov[[1]]) - NumCovLayer - 3)) {

FormulaMLCon[[i]] = as.formula(paste(names(Cov[[1]])[NumCovLayer+i]," ~ ",paste(names(Cov[[1]])[1:NumCovLayer],collapse="+")))

}

# Define traincontrol

TrainControl <- trainControl(method="repeatedcv", 10, 3, allowParallel = TRUE, savePredictions=TRUE)

TrainControlRF <- rfeControl(functions = rfFuncs, method = "repeatedcv", 10, 3, allowParallel = TRUE)

seed=1070

for (depth in increments) {

depth_name <- gsub("_", " - ", depth)

Boruta = list()

BorutaLabels = list()

Boruta_covariates = list()

SoilCovMLCon <- Cov[[depth]]

cli_progress_bar(

format = "Boruta {.val {depth}} {.val {var}} {cli::pb_bar} {cli::pb_percent} [{cli::pb_current}/{cli::pb_total}] | \ ETA: {cli::pb_eta} - Time elapsed: {cli::pb_elapsed_clock}",

total = length(FormulaMLCon),

clear = FALSE)

# Individual plots

for (i in 1:length(FormulaMLCon)) {

var <- names(SoilCovMLCon)[NumCovLayer+i]

set.seed(seed)

Boruta[[i]] <- Boruta(FormulaMLCon[[i]], data = SoilCovMLCon, rfeControl = TrainControl)

BorutaBank <- TentativeRoughFix(Boruta[[i]])

pdf(paste0("./export/boruta/", depth,"/Boruta_",names(SoilCovMLCon)[NumCovLayer+i], "_for_",depth,"_soil.pdf"), # File name

width = 8, height = 8, # Width and height in inches

bg = "white", # Background color

colormodel = "cmyk") # Color model

plot(BorutaBank, xlab = "", xaxt = "n",

main=paste0("Feature Importance - Boruta ",names(SoilCovMLCon)[NumCovLayer+i]," for ", depth_name ," cm increment"))

lz <- lapply(1:ncol(BorutaBank$ImpHistory),

function(j)BorutaBank$ImpHistory[is.finite(BorutaBank$ImpHistory[,j]),j])

names(lz) <- c(names(SoilCovMLCon)[1:NumCovLayer],c("sh_Max","sh_Mean","sh_Min"))

Labels <- sort(sapply(lz,median))

axis(side = 1,las=2,labels = names(Labels),at = 1:ncol(BorutaBank$ImpHistory),

cex.axis = 1)

dev.off() # Close the device

BorutaLabels[[i]] <- sapply(lz,median)

confirmed_features <- getSelectedAttributes(BorutaBank, withTentative = FALSE)

Boruta_covariates[[i]] <- cbind(SoilCovMLCon[, confirmed_features], SoilCovMLCon[, NumCovLayer + i])

colnames(Boruta_covariates[[i]]) <- c(confirmed_features,names(SoilCovMLCon)[NumCovLayer+i])

write.csv(data.frame(Boruta_covariates[[i]]), paste0("./export/boruta/", depth,"/Boruta_results_",names(SoilCovMLCon)[NumCovLayer+i], "_for_",depth,"_soil.csv"))

cli_progress_update()

}

cli_progress_done()

## Warning in TentativeRoughFix(Preprocess[[i]]$Boruta[[j]]): There are no

## Tentative attributes! Returning original object.

# Combinned plot

BorutaResultCon = data.frame();BorutaResultCon = data.frame(BorutaLabels[[1]])

for (i in 2:length(FormulaMLCon)) {

BorutaResultCon[i] = data.frame(BorutaLabels[[i]])}

BorutaResultCon = BorutaResultCon[c(1:NumCovLayer),]

names(BorutaResultCon) <- names(SoilCovMLCon)[c(StartTargetCov:NumDataCol)]

BorutaResultConT = data.frame();BorutaResultConT = data.frame(Boruta[[1]]$finalDecision == "Confirmed")

for (i in 2:length(FormulaMLCon)) {

BorutaResultConT[i] = data.frame(Boruta[[i]]$finalDecision == "Confirmed")}

names(BorutaResultConT) <- names(SoilCovMLCon)[c(StartTargetCov:NumDataCol)]

BorutaCovPlot = gather(BorutaResultCon,key,value);BorutaCovPlotT = gather(BorutaResultConT,key,value)

BorutaCovPlot$cov = rep(row.names(BorutaResultCon), (NumDataCol-NumCovLayer))

names(BorutaCovPlot) = c("Y","Z","X");BorutaCovPlot$Z.1 = BorutaCovPlotT$value

BorutaCovPlot$Y = factor(BorutaCovPlot$Y);BorutaCovPlot$X = factor(BorutaCovPlot$X)

BorutaCovPlot$Z.1 <- as.logical(BorutaCovPlot$Z.1)

BorutaCovPlot$Y = factor(BorutaCovPlot$Y, levels = rev(unique(BorutaCovPlot$Y)))

# Split into two groups

df1 <- BorutaCovPlot[BorutaCovPlot$X %in% unique(BorutaCovPlot$X)[1:42], ]

df2 <- BorutaCovPlot[BorutaCovPlot$X %in% unique(BorutaCovPlot$X)[(43):length(unique(BorutaCovPlot$X))], ]

# First part

p1 <- ggplot(df1, aes(x = X, y = Y)) +

geom_tile(aes(fill = Z, colour = Z.1), size = 1) +

labs(x = "Variables importance", y = "Soil properties", fill = "Importance") +

theme_classic() +

scale_fill_viridis_c(option = "E") +

scale_color_manual(values = c('#00000000', 'red')) +

theme(axis.text.x = element_text(colour = "black", size = 10, angle = 90, hjust = 1),

axis.text.y = element_text(colour = "black", size = 10),

legend.position = "right") +

geom_text(aes(label = round(Z, 1)), cex = 3) +

coord_flip() +

coord_equal()

# Second part

p2 <- ggplot(df2, aes(x = X, y = Y)) +

geom_tile(aes(fill = Z, colour = Z.1), size = 1, show.legend = FALSE) +

labs(x = "Variables importance", y = "Soil properties", fill = "Importance") +

theme_classic() +

scale_fill_viridis_c(option = "E") +

scale_color_manual(values = c('#00000000', 'red')) +

theme(axis.text.x = element_text(colour = "black", size = 10, angle = 90, hjust = 1),

axis.text.y = element_text(colour = "black", size = 10)) +

geom_text(aes(label = round(Z, 1)), cex = 3) +

coord_flip() +

coord_equal()

# Combinne poth plot

FigCovImpoBr <- (p1 / p2) +

plot_annotation(title = paste0("Boruta selection of features for the ", depth_name, " cm depth interval"),

theme = theme(plot.title = element_text(size = 14, face = "bold", hjust = 0.5)))

ggsave(paste0("./export/boruta/", depth,"/Boruta_final_combinned_plot_", depth,"_soil.png"), FigCovImpoBr, width = 15, height = 8.5)

ggsave(paste0("./export/boruta/", depth,"/Boruta_final_combinned_plot_", depth,"_soil.pdf"), FigCovImpoBr, width = 15, height = 8.5)

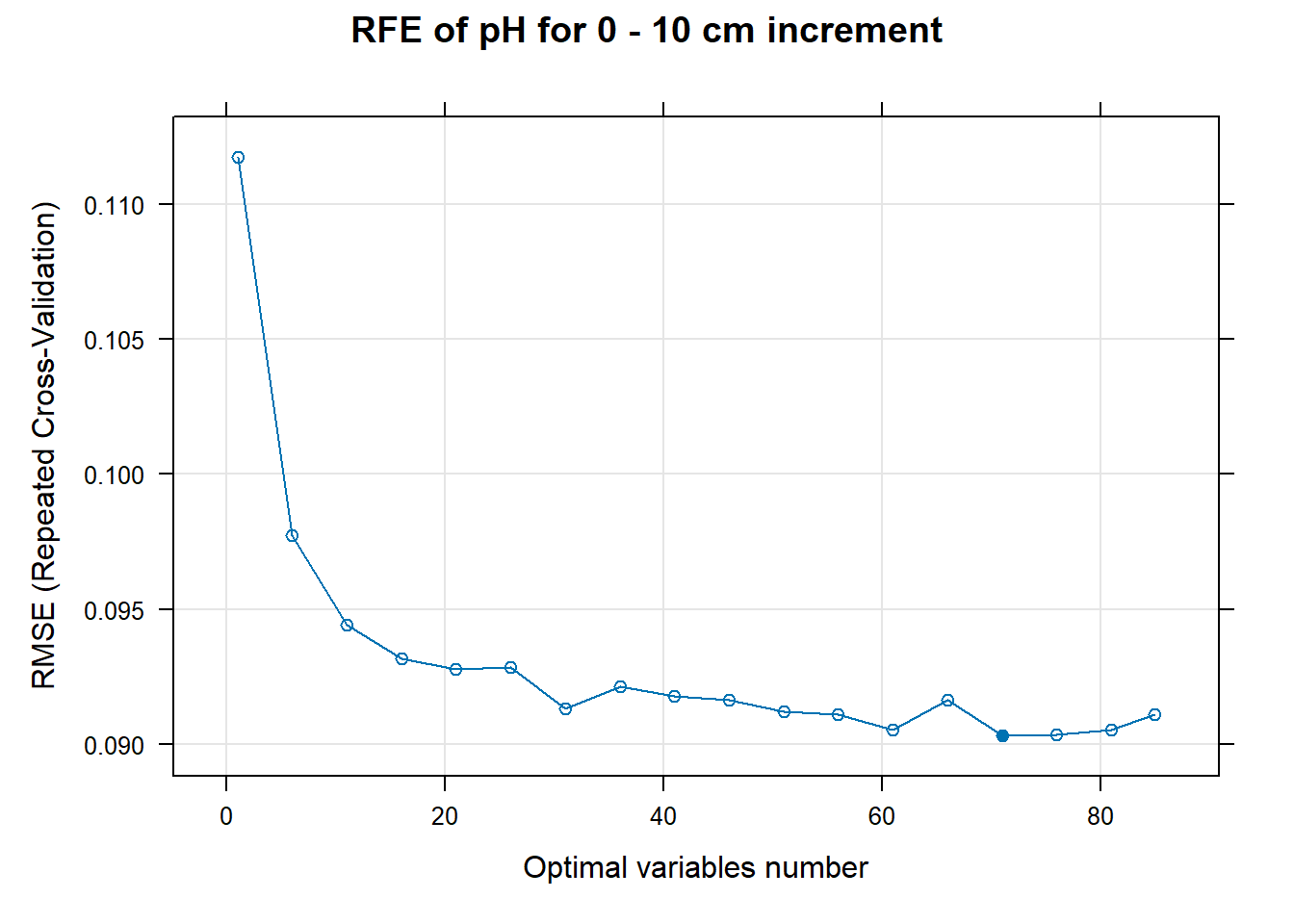

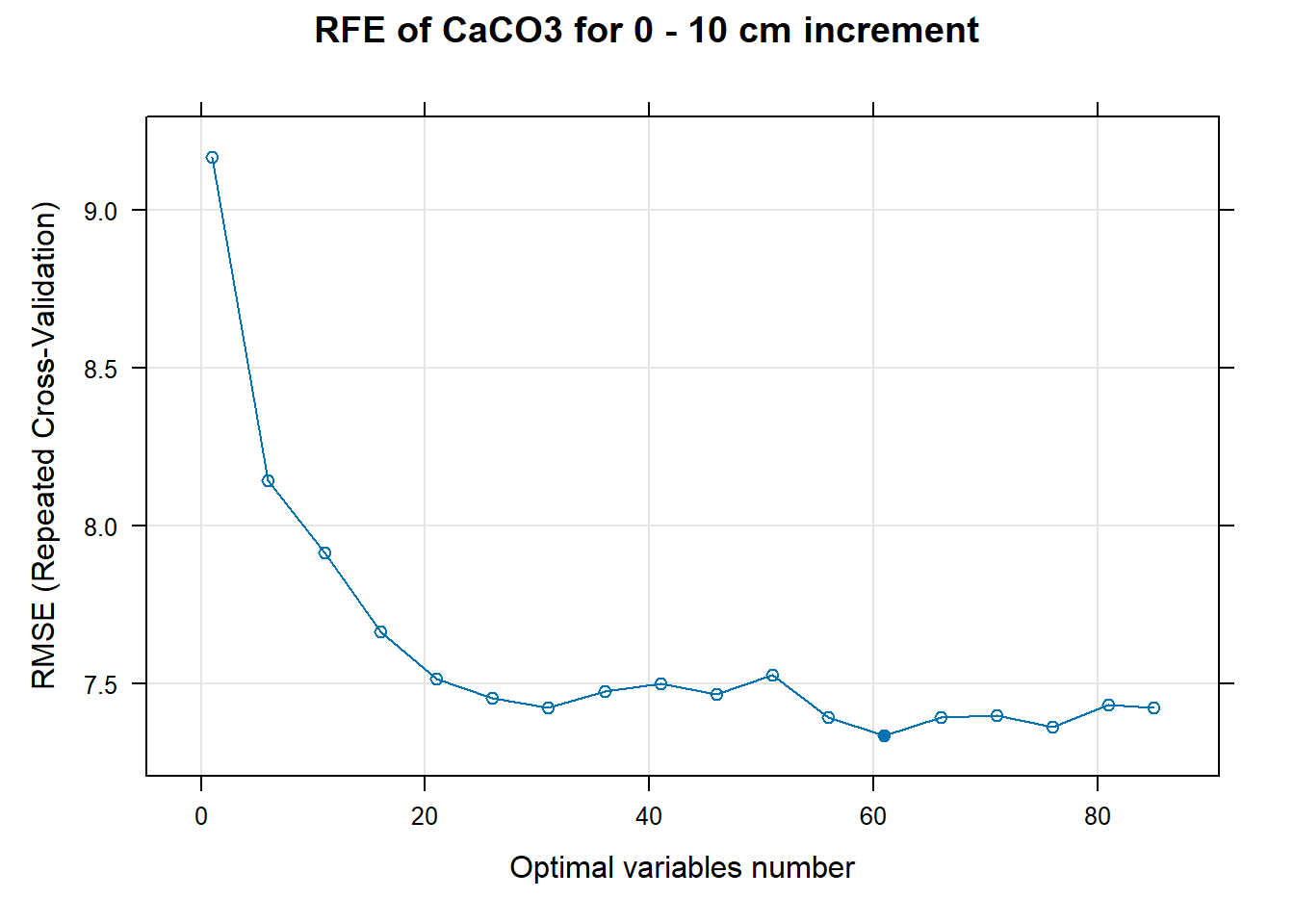

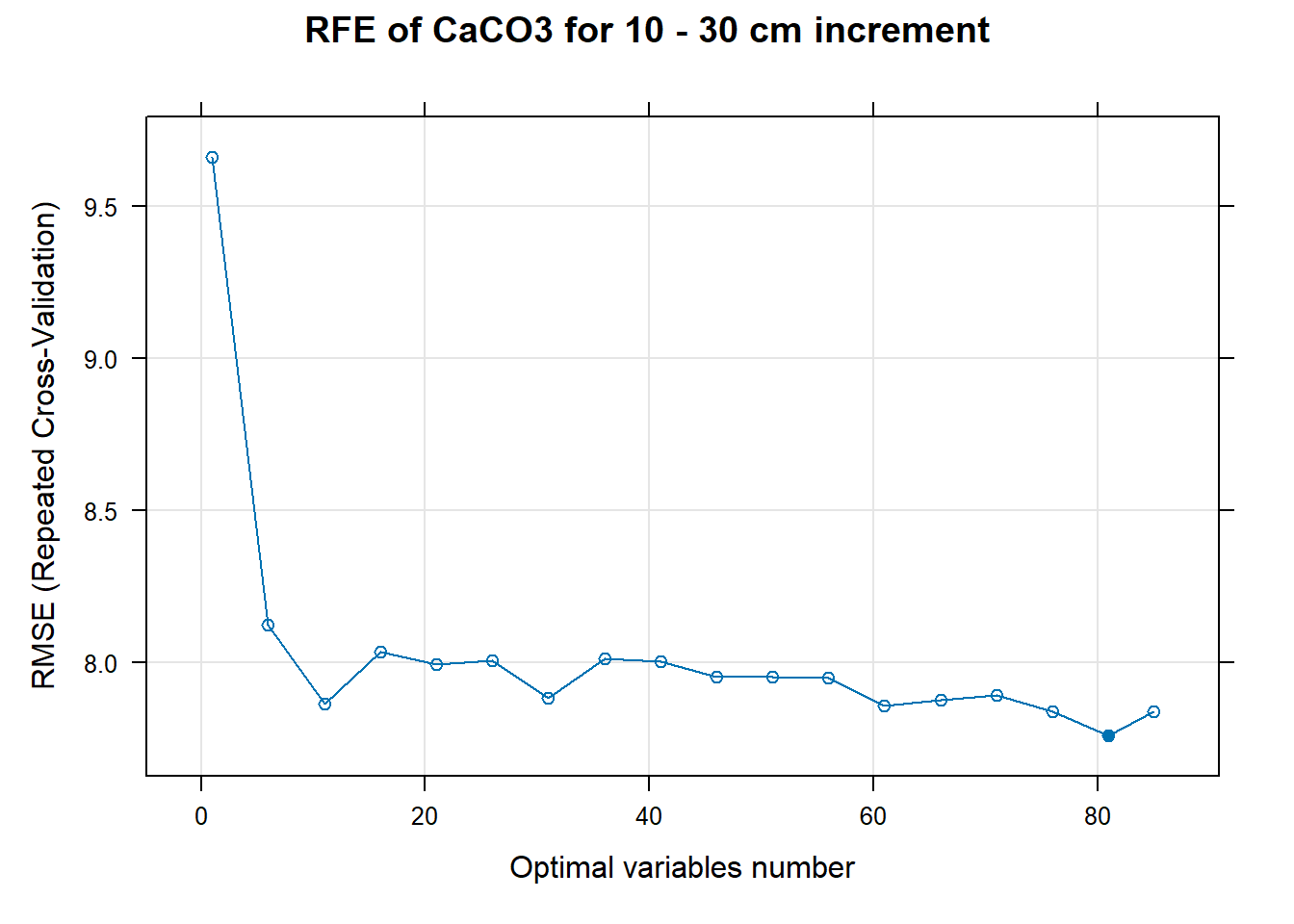

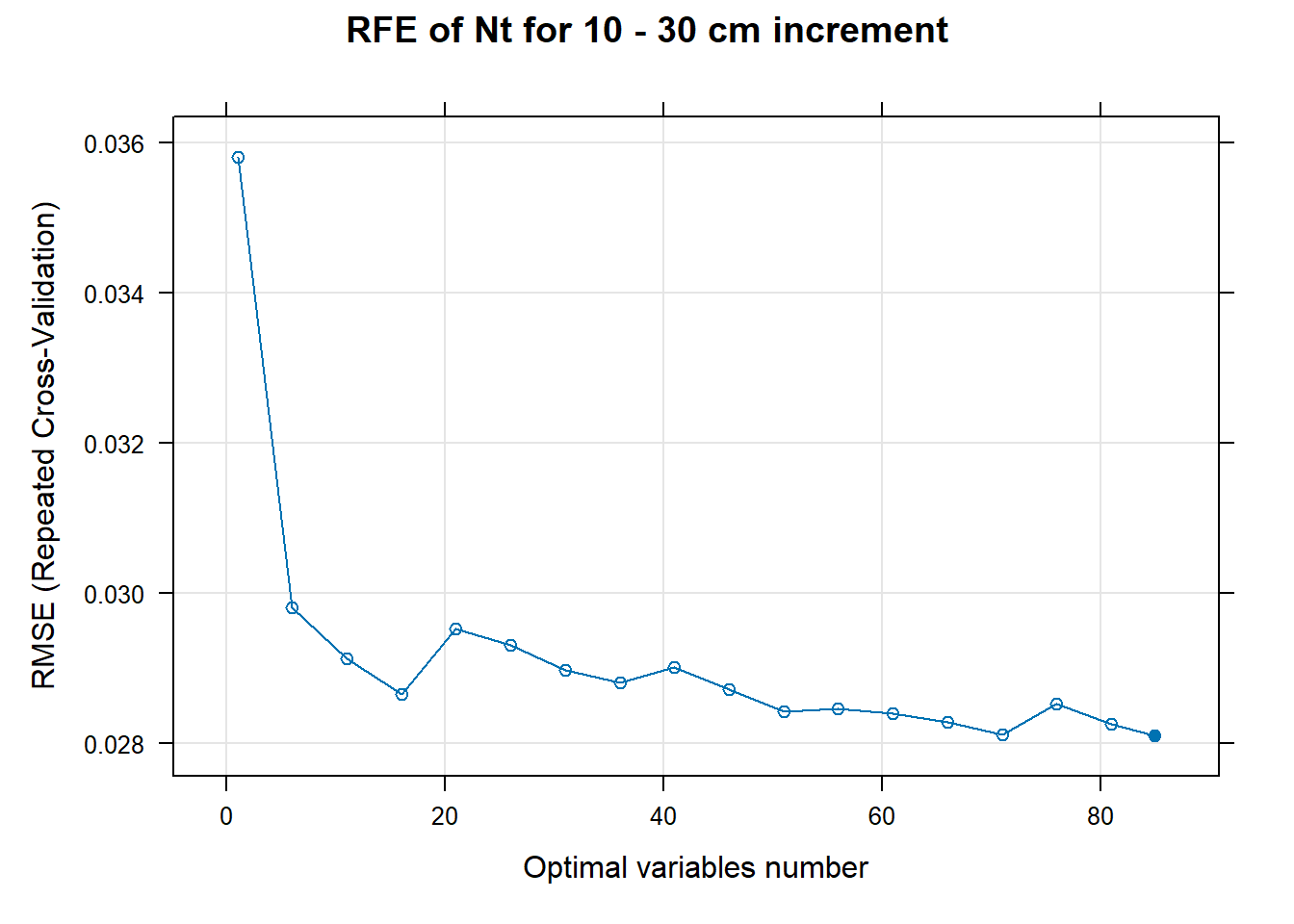

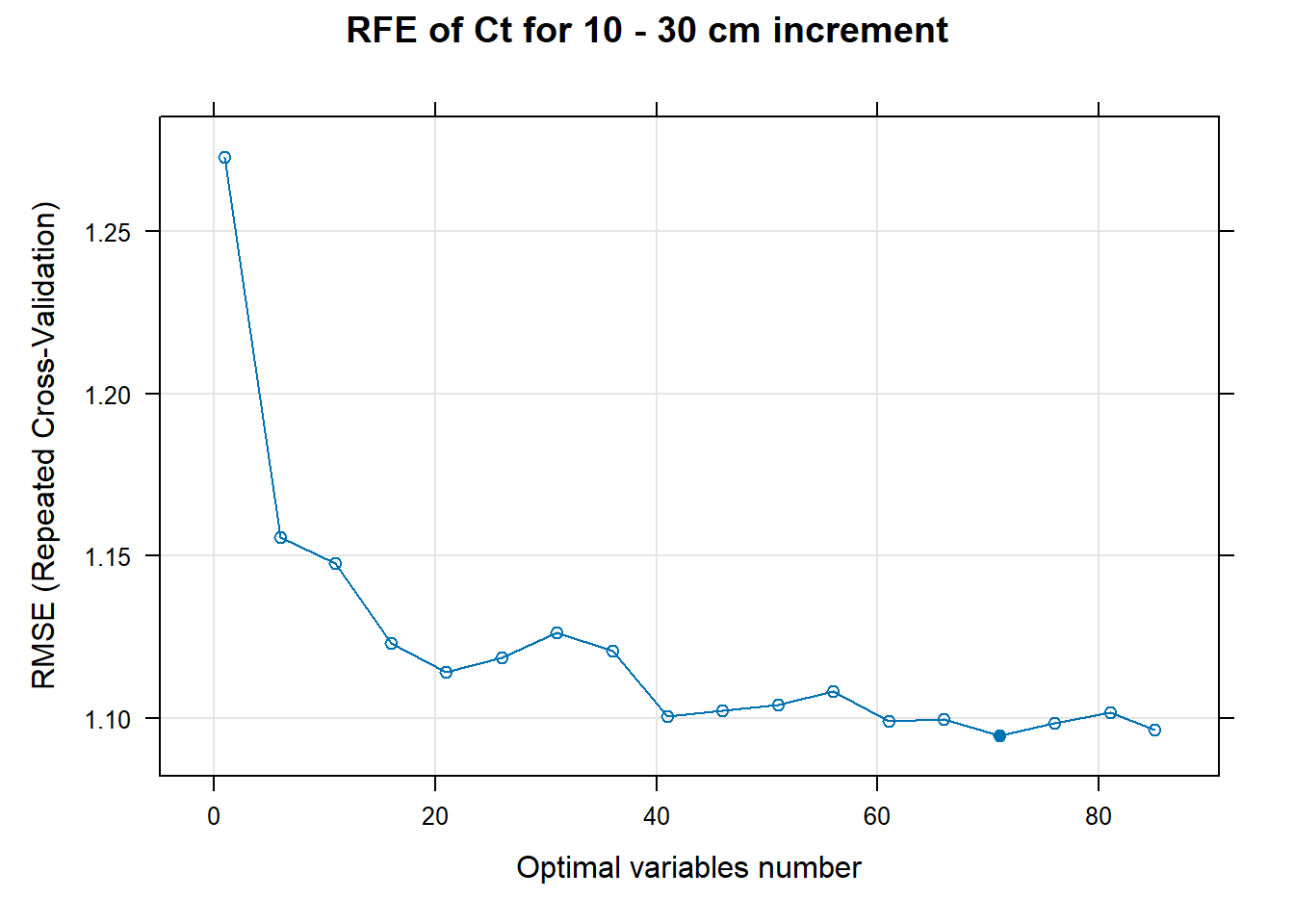

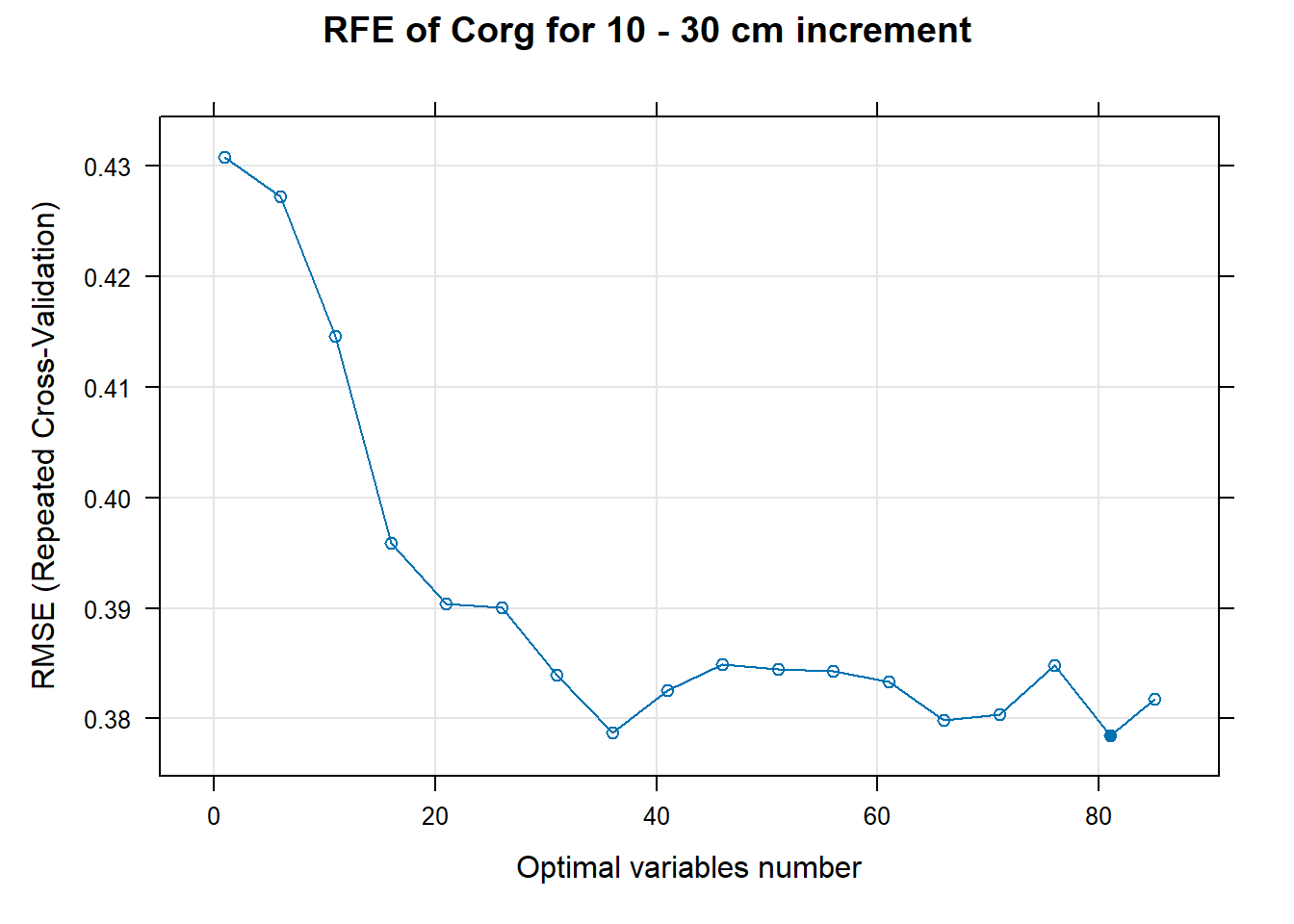

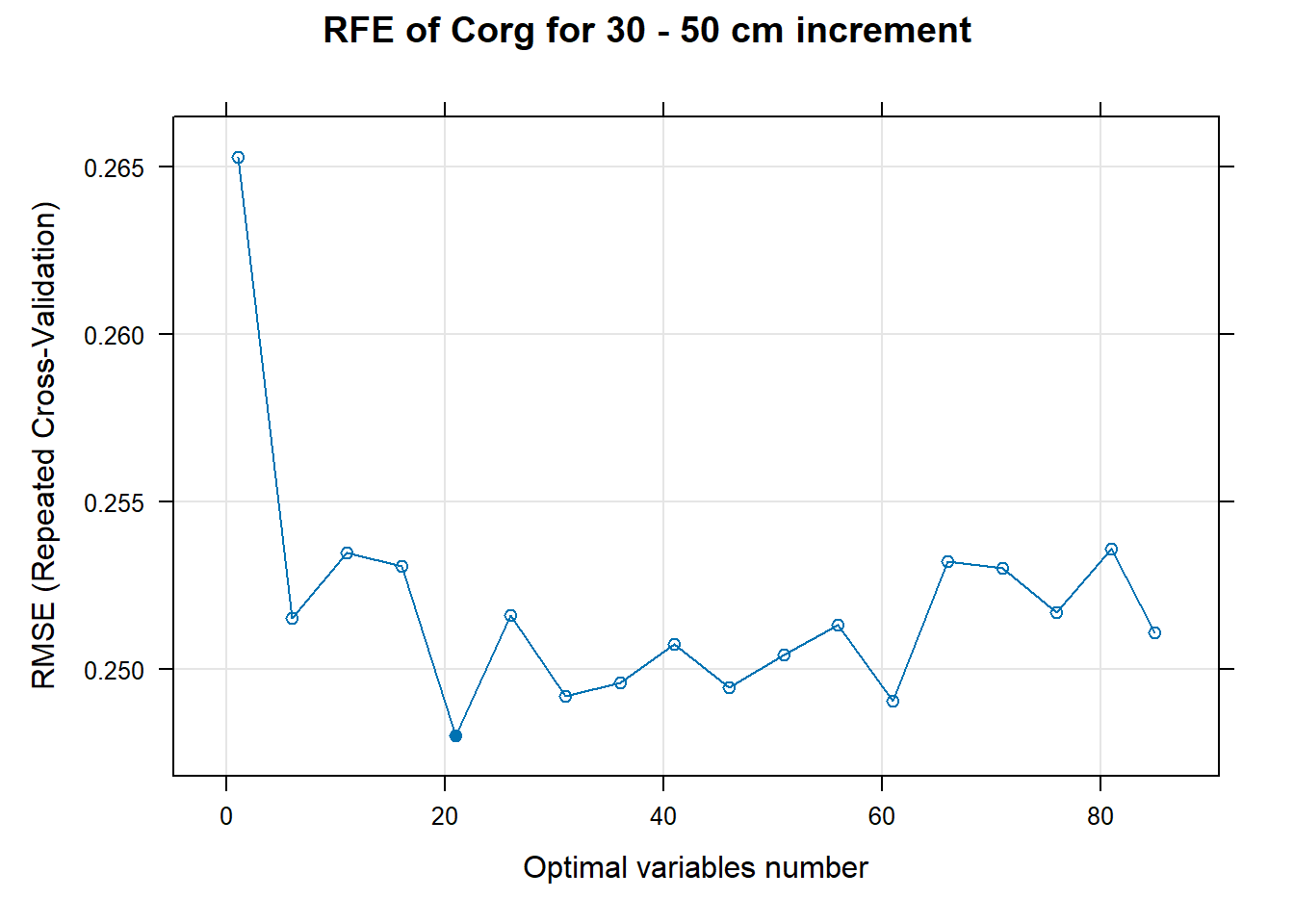

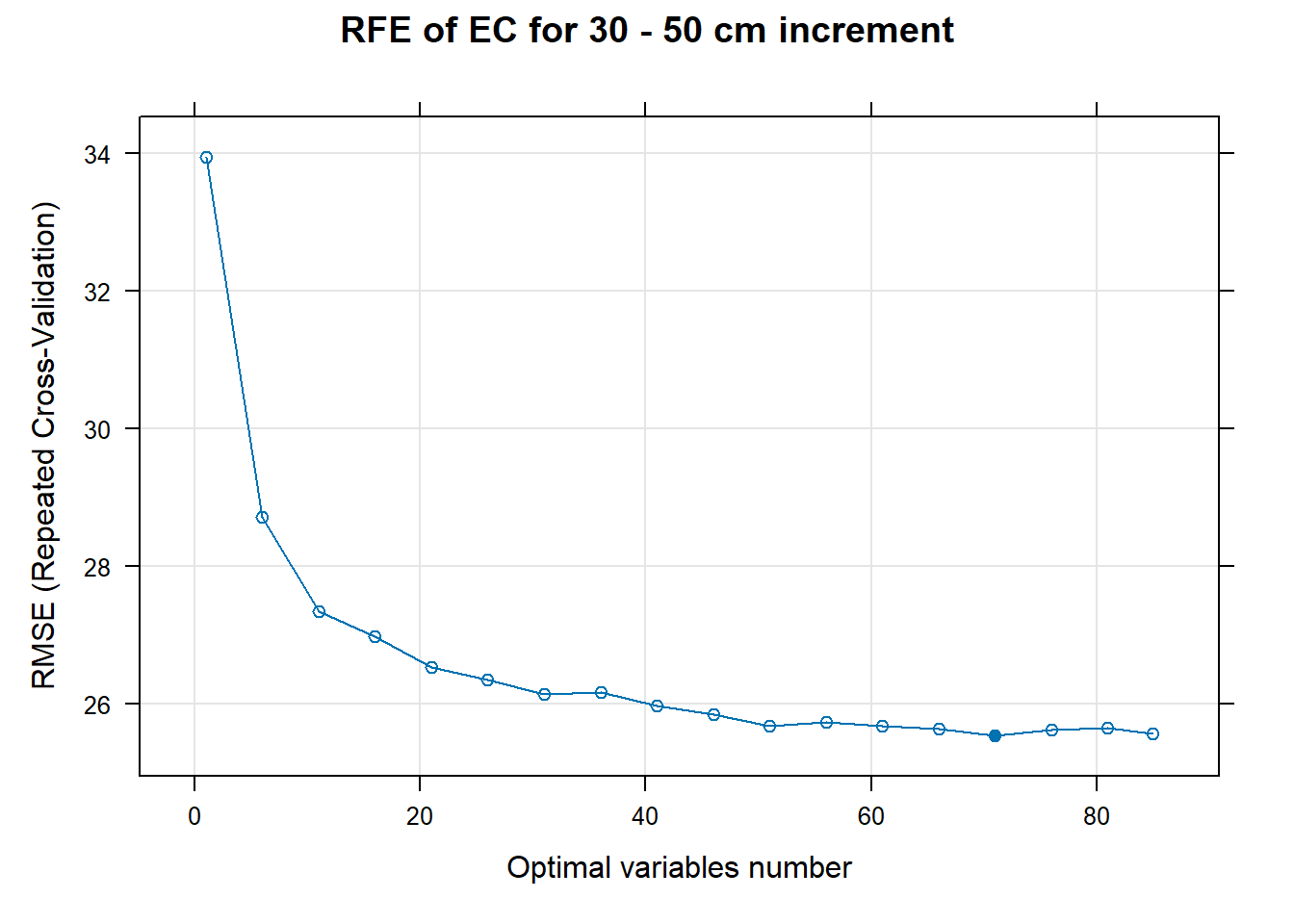

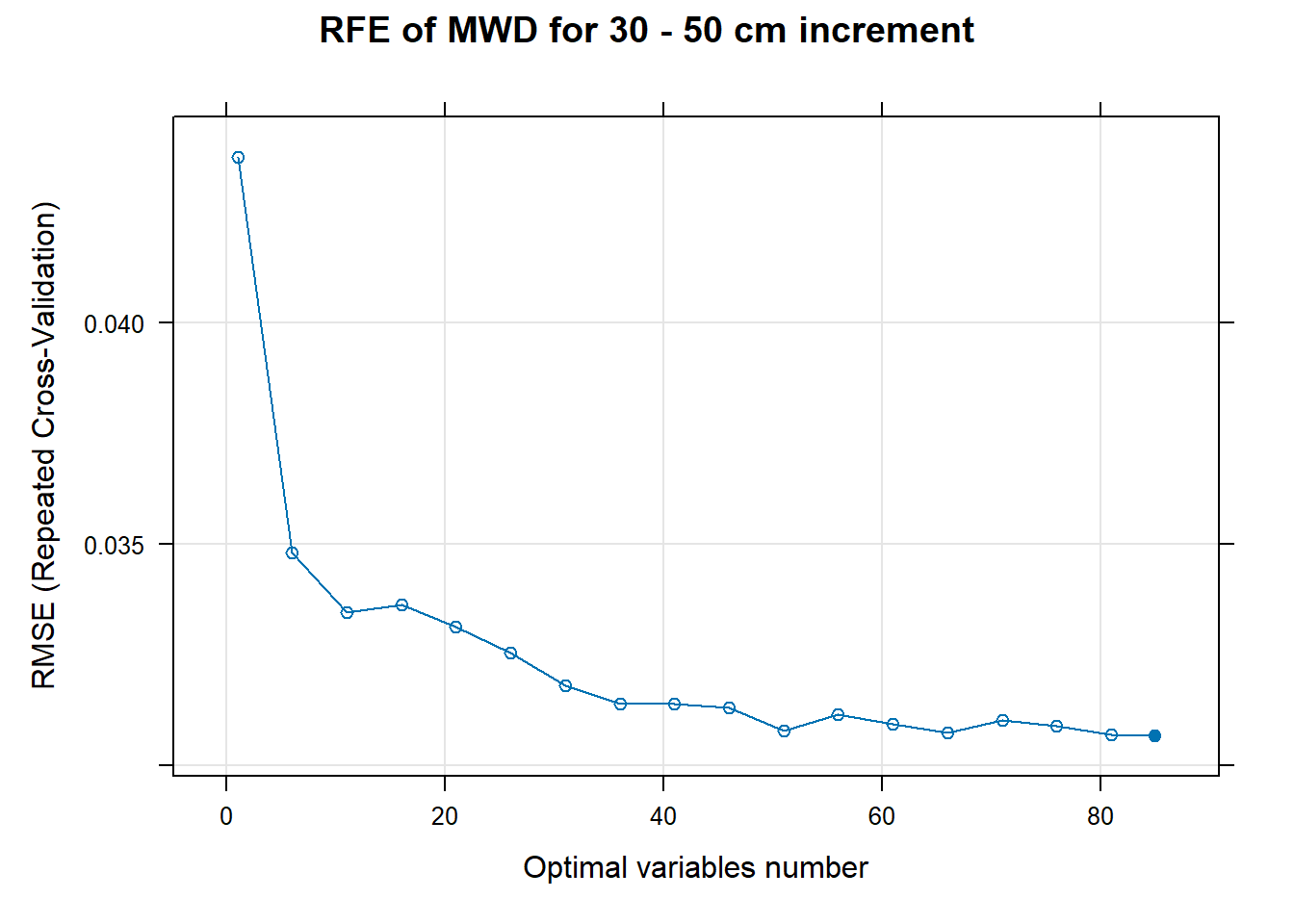

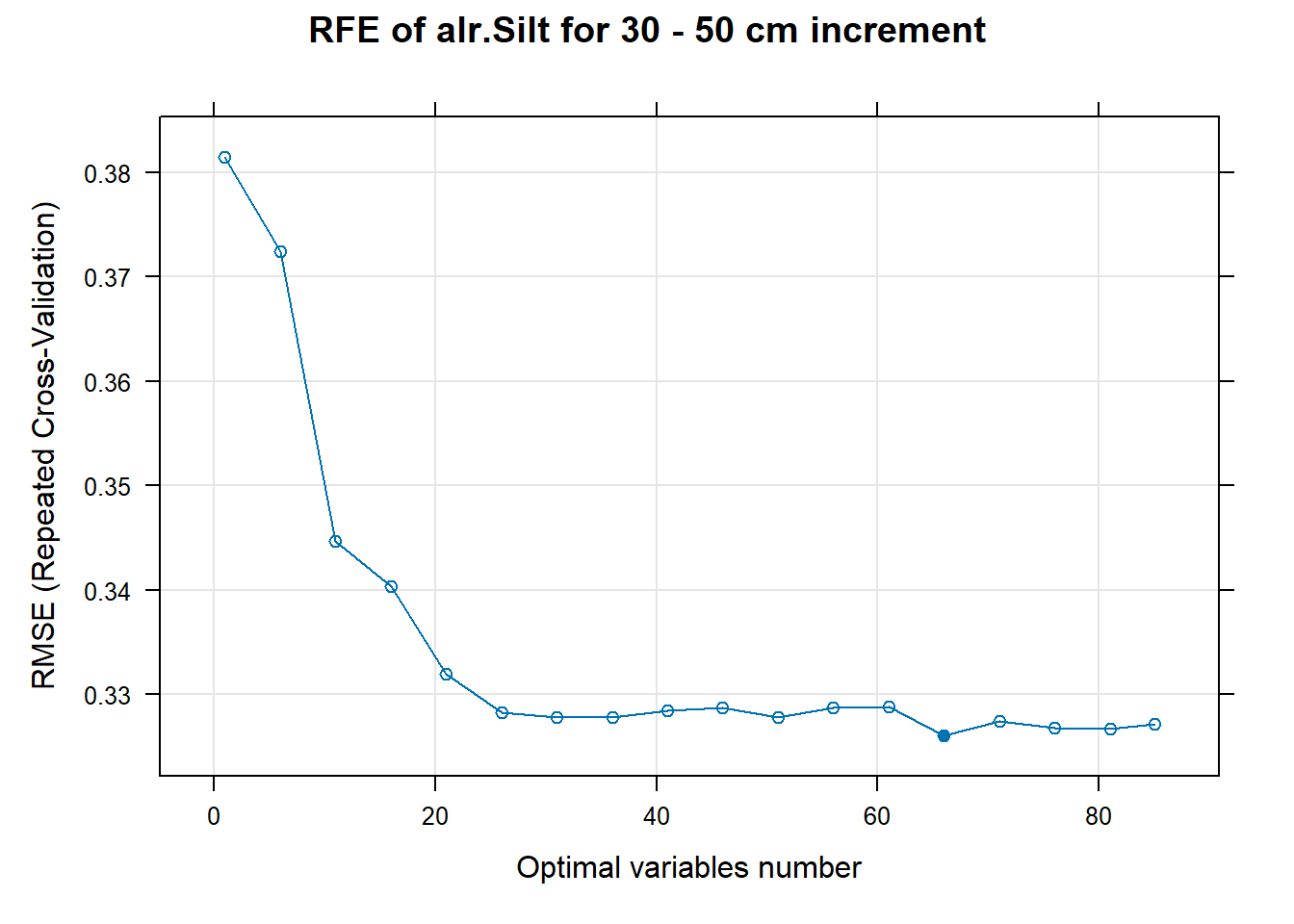

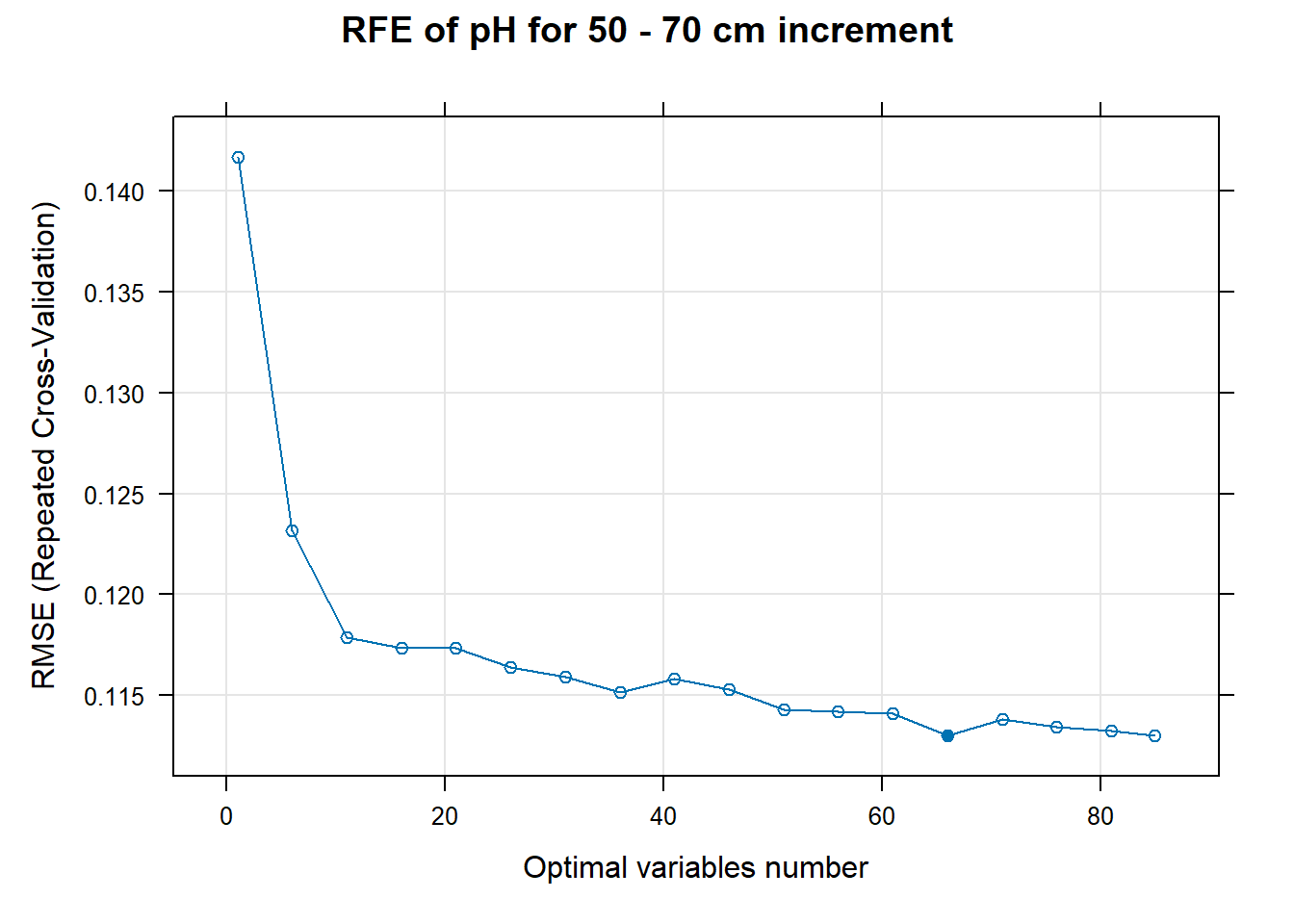

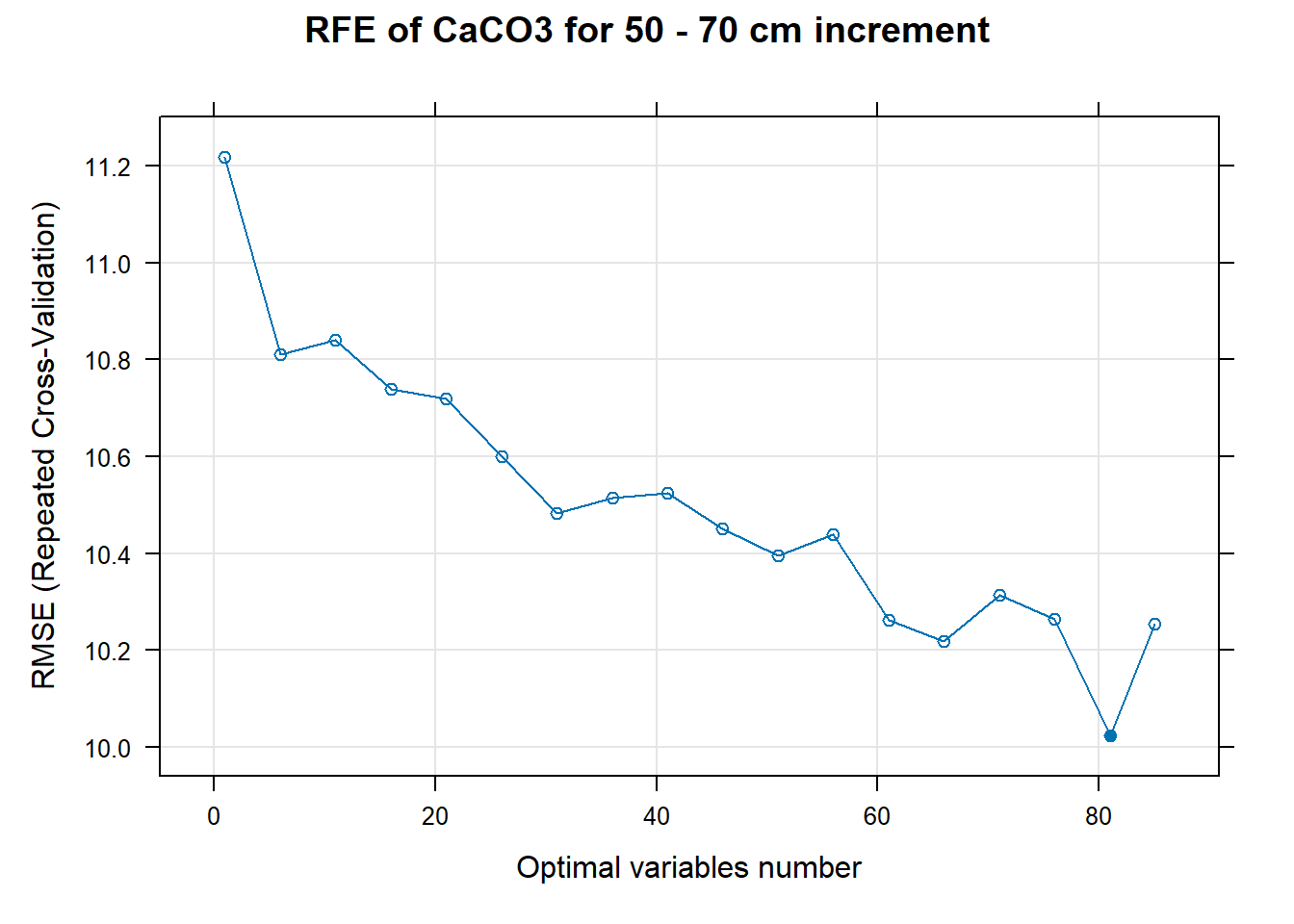

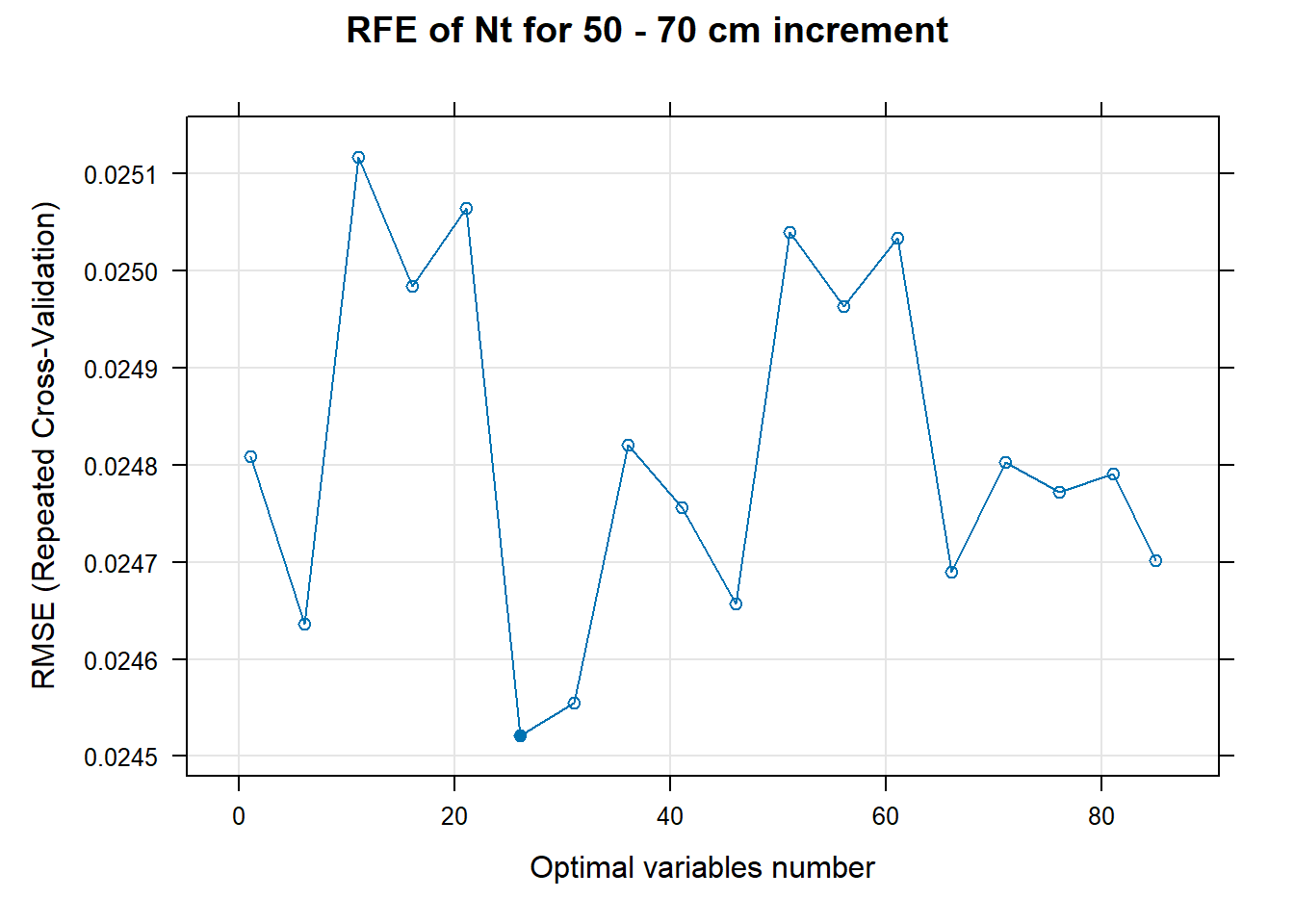

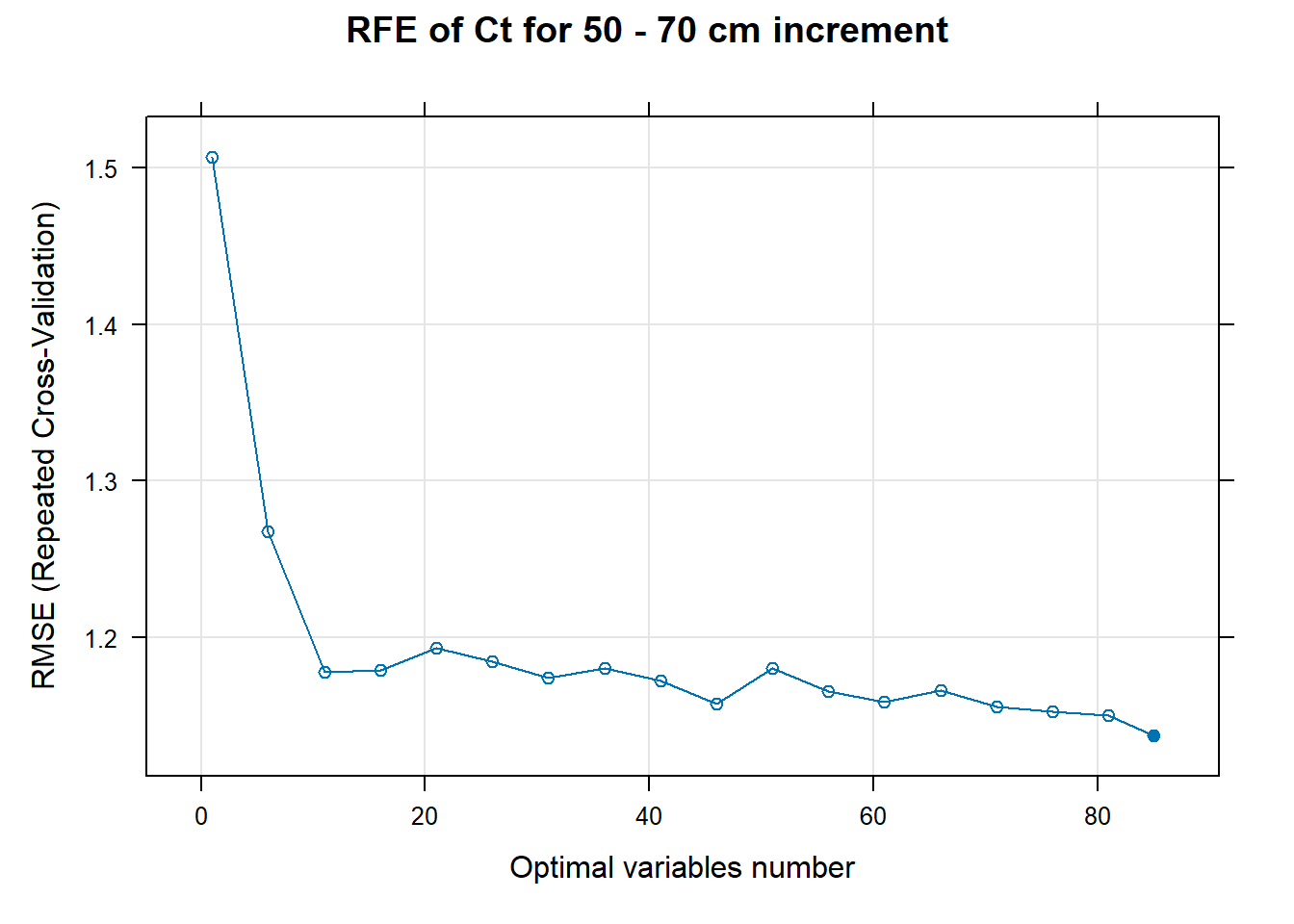

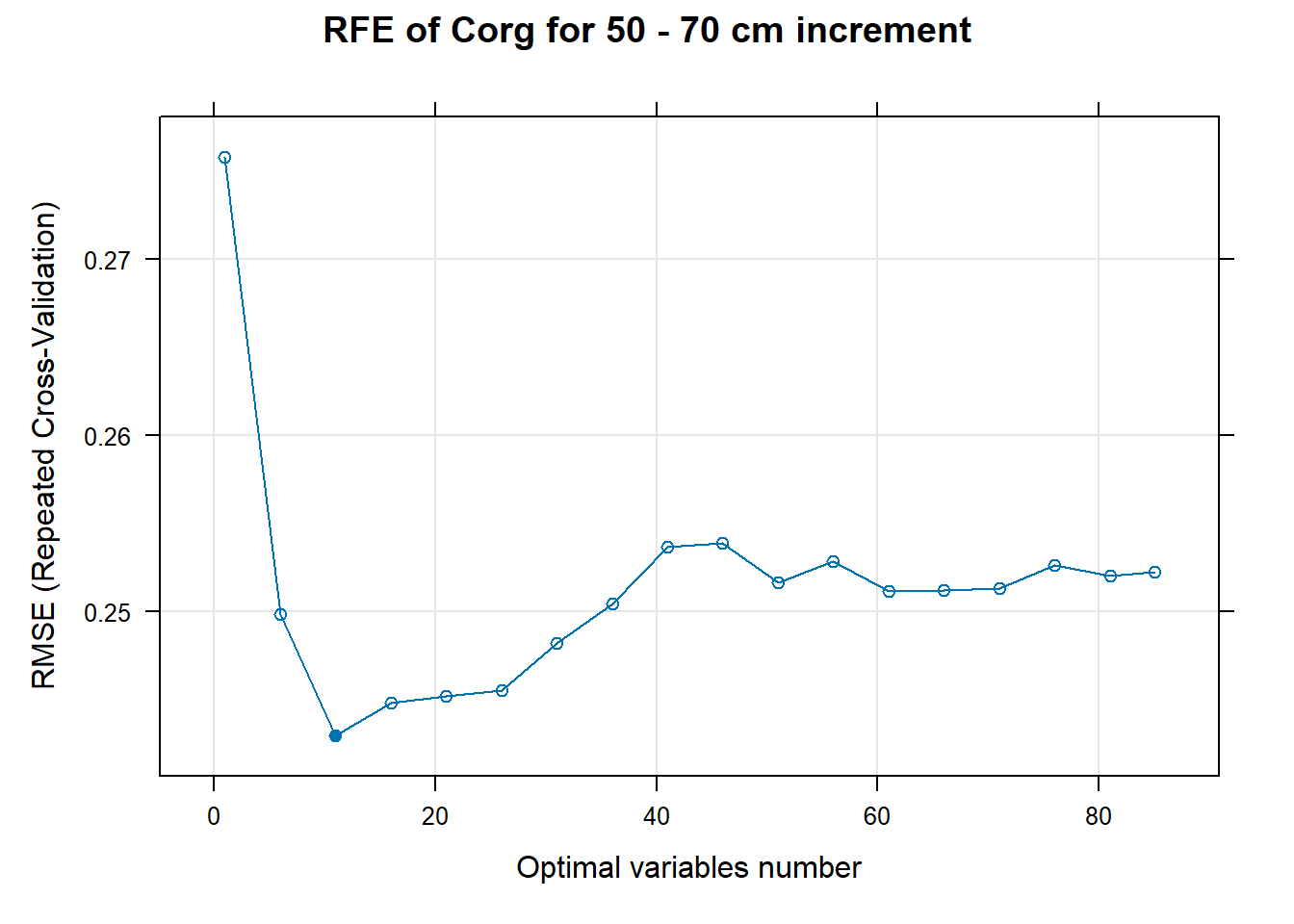

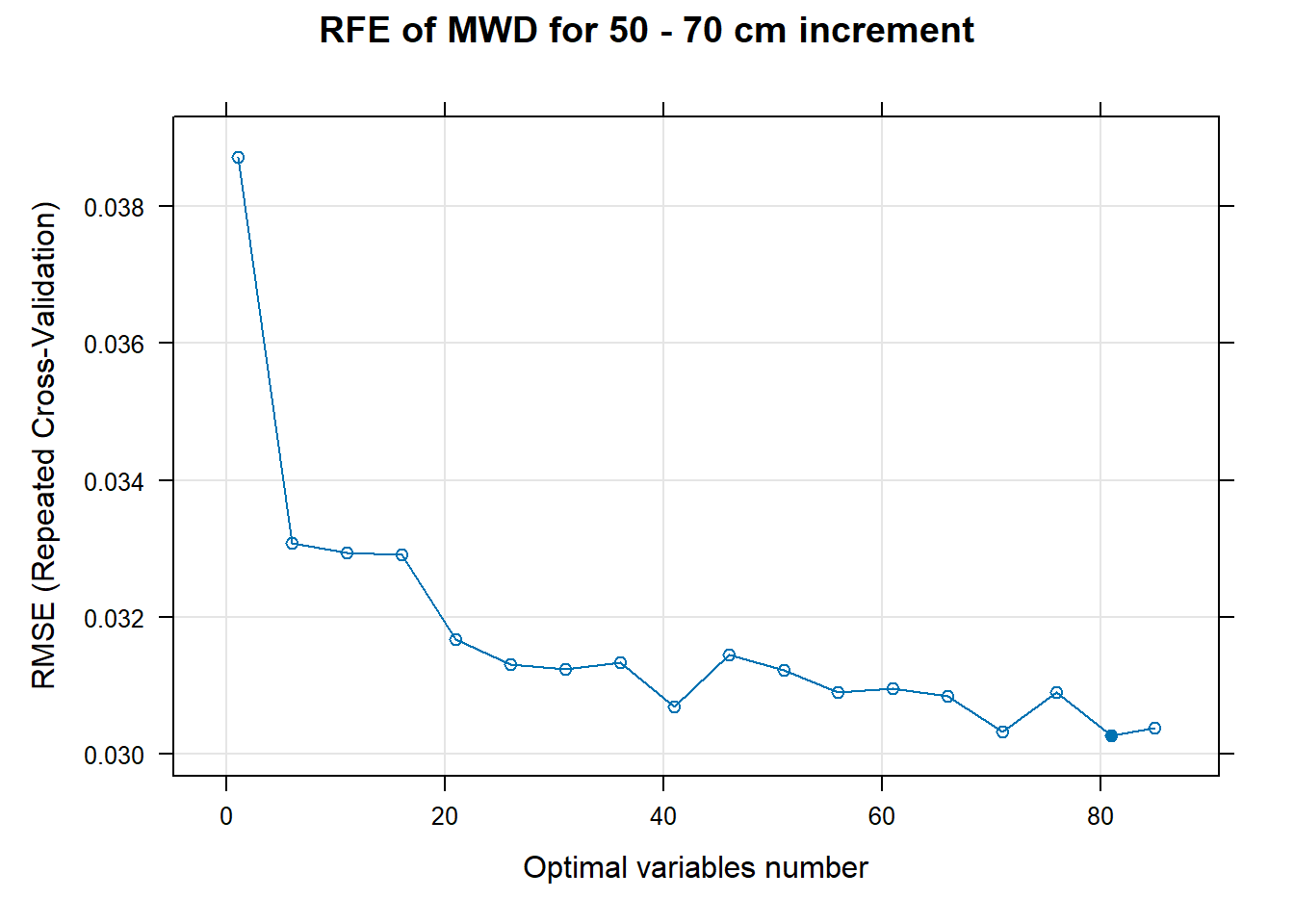

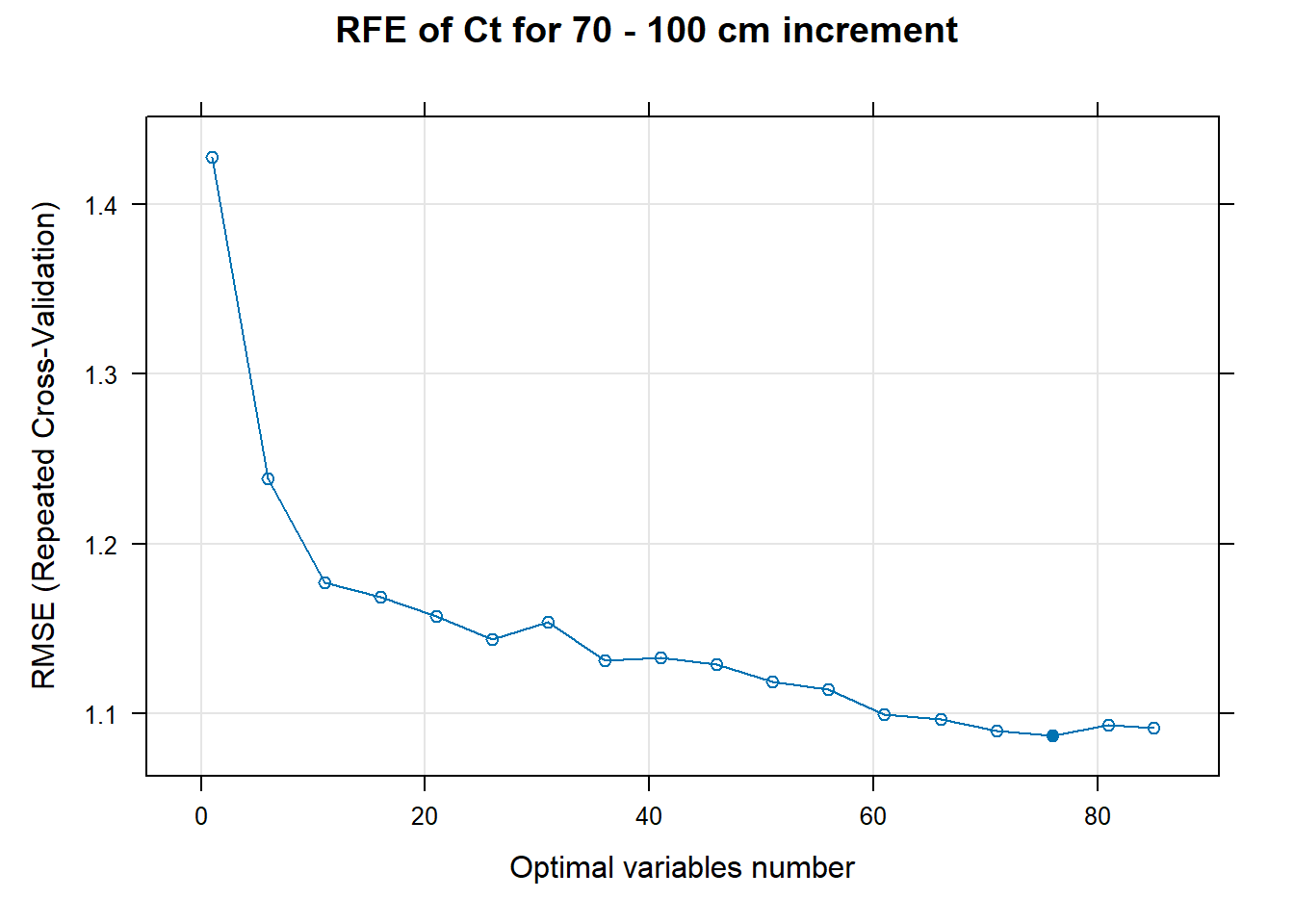

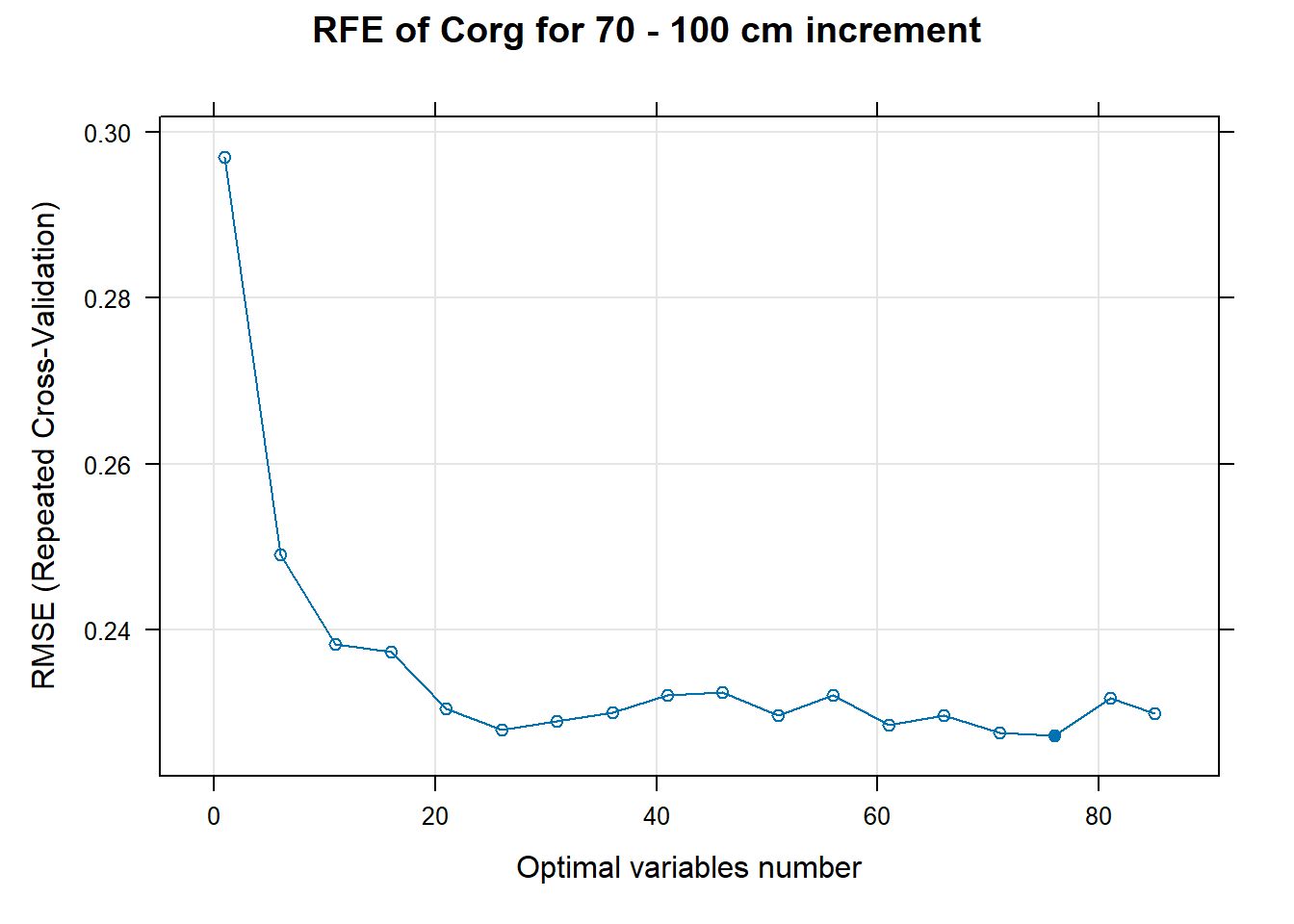

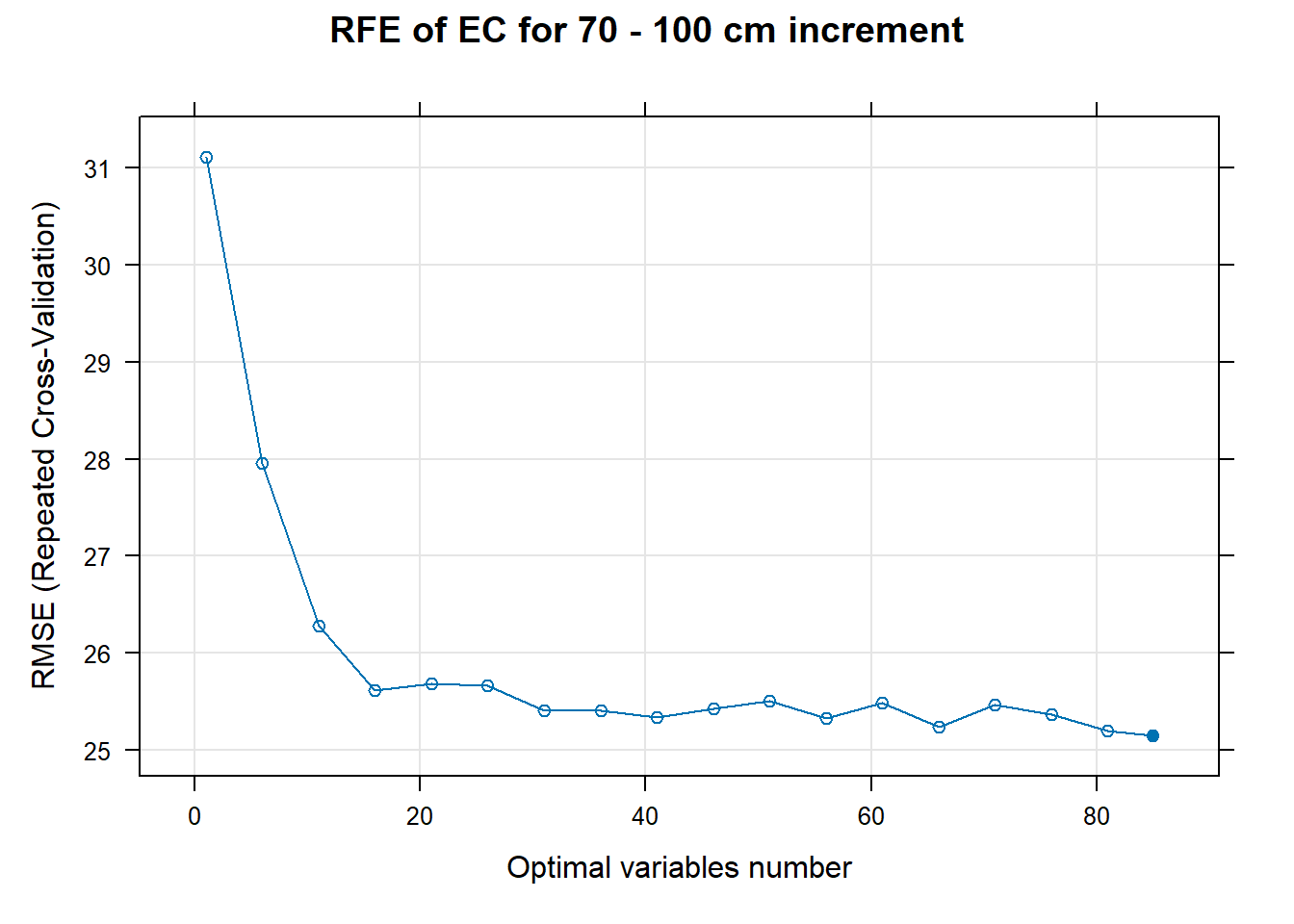

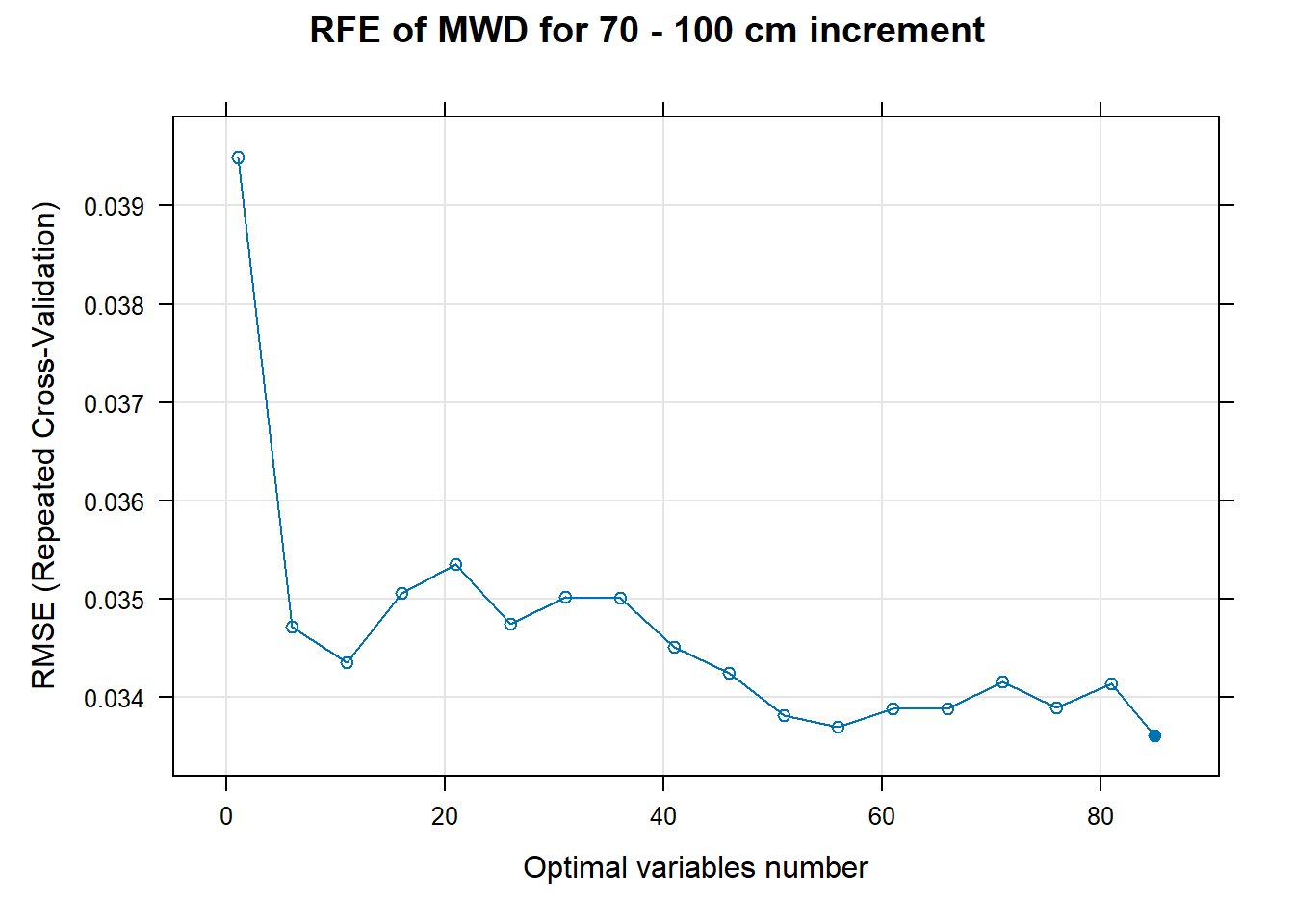

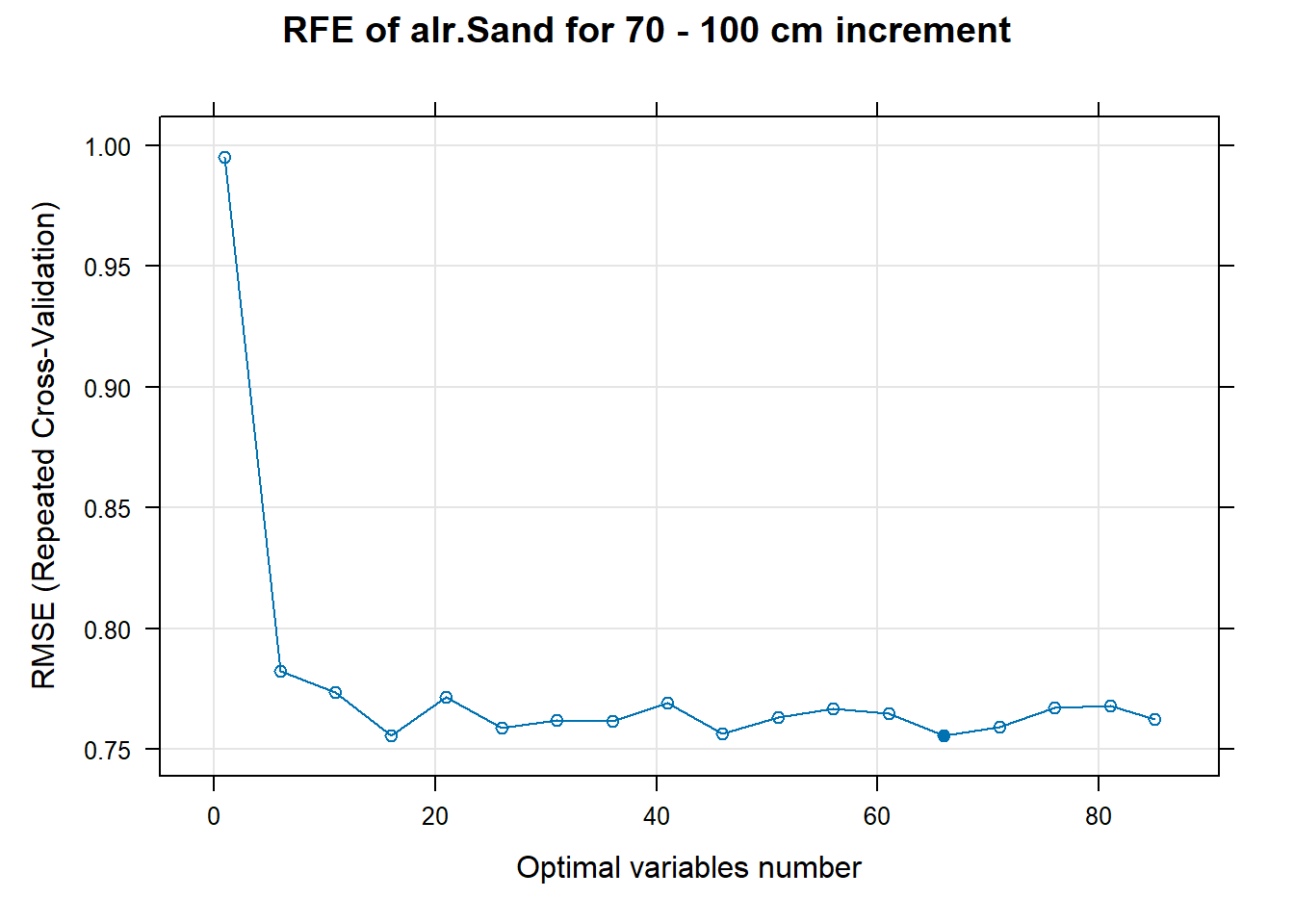

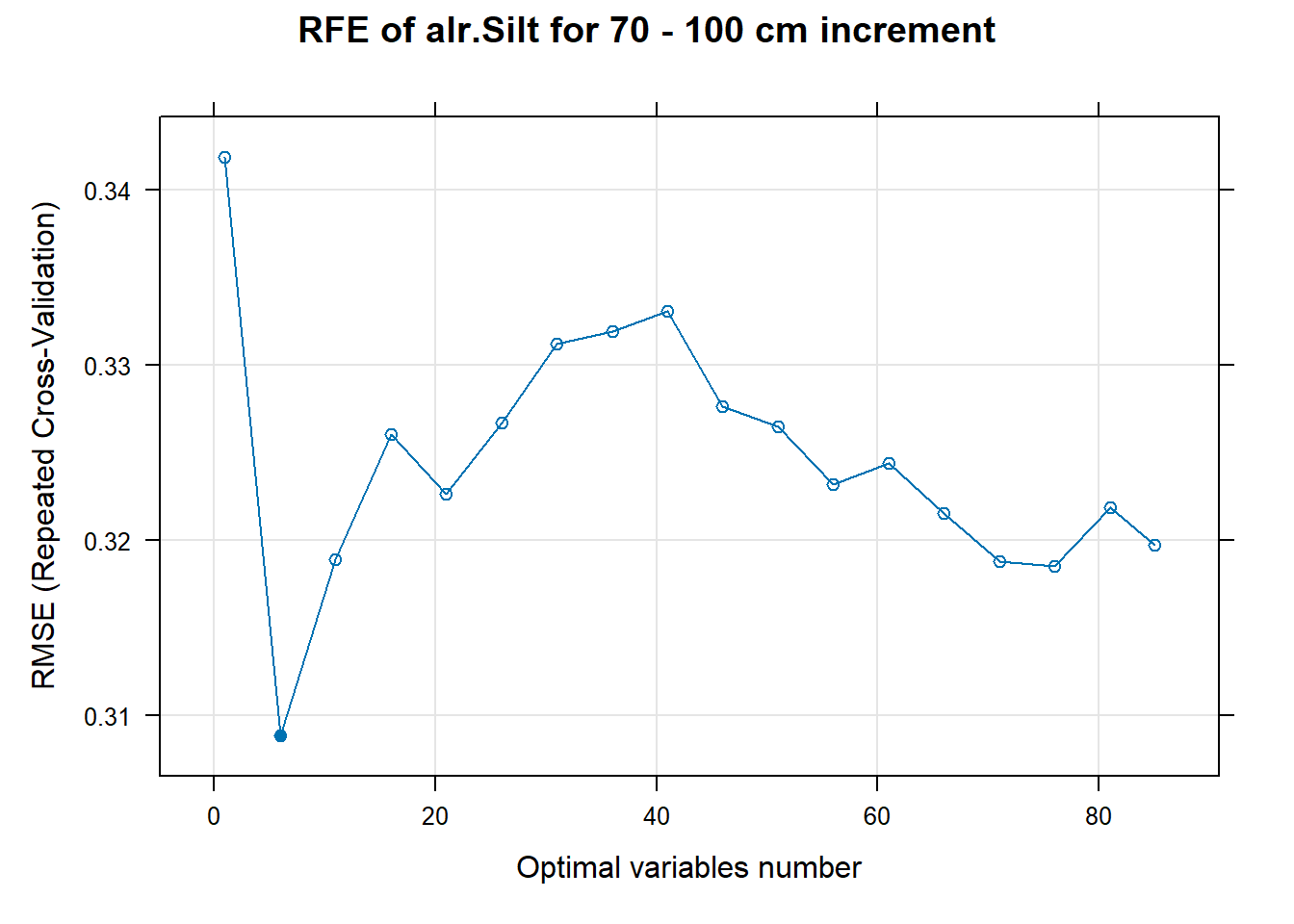

plot(FigCovImpoBr) # 03.4 RFE covariate influence =================================================

cli_progress_bar(

format = "RFE {.val {depth}} {.val {var}} {cli::pb_bar} {cli::pb_percent} [{cli::pb_current}/{cli::pb_total}] | \ ETA: {cli::pb_eta} - Time elapsed: {cli::pb_elapsed_clock}",

total = length(FormulaMLCon),

clear = FALSE)

ResultRFECon <- list()

subsets= c(seq(1,NumCovLayer,5))

for (i in 1:length(FormulaMLCon)) {

var <- names(SoilCovMLCon)[NumCovLayer+i]

set.seed(seed)

ResultRFECon[[i]] <- rfe(FormulaMLCon[[i]], data=SoilCovMLCon,

sizes = subsets, rfeControl = TrainControlRF)

cli_progress_update()

}

cli_progress_done()

RFE_covariates <- list()

for (i in 1:length(ResultRFECon)) {

RFEpredictors <- predictors(ResultRFECon[[i]])

RFE_covariates[[i]] <- cbind(SoilCovMLCon[, RFEpredictors], SoilCovMLCon[, NumCovLayer + i])

colnames(RFE_covariates[[i]]) <- c(RFEpredictors, colnames(SoilCovMLCon[NumCovLayer+i]))

write.table(data.frame(RFEpredictors), paste0("./export/RFE/", depth,"/RFE_results_",names(SoilCovMLCon)[NumCovLayer+i], "_for_",depth,"_soil.txt"))

}

PlotResultRFE =list()

for (i in 1:length(ResultRFECon)) {

trellis.par.set(caretTheme())

PlotResultRFE[[i]] <- plot(ResultRFECon[[i]],

type = c("g", "o"),

main=paste0("RFE of ",names(SoilCovMLCon)[NumCovLayer+i]," for ",depth_name, " cm increment"),

xlab="Optimal variables number")

pdf(paste0("./export/RFE/", depth,"/RFE_",names(SoilCovMLCon)[NumCovLayer+i], "_for_",depth,"_soil.pdf"), # File name

width = 12, height = 12, # Width and height in inches

bg = "white", # Background color

colormodel = "cmyk") # Color model

plot(PlotResultRFE[[i]])

dev.off()

}

# 03.5 Export results ==========================================================

Preprocess[[depth]] <- list(

NZV = nzv_vars,

Cov = Cov,

Selected_cov_boruta = Boruta_covariates,

Boruta_full_fig = FigCovImpoBr,

Boruta = Boruta,

Selected_cov_RFE = RFE_covariates,

RFE_fig = PlotResultRFE,

RFE = ResultRFECon

)

}

save(Preprocess, file = paste0("./export/save/Preprocess.RData"))

# 03.6 Covariates selection table ==============================================

cov_names <- read.table("./data/Covariates_names_DSM.txt")

conv <- data.frame(source = cov_names[,1],

target = paste(cov_names[,1], cov_names[,2], sep = "_"),

stringsAsFactors = FALSE)

conversion <- setNames(conv$target, conv$source)

cov_list <- list()

# For Boruta

for (i in 1:length(Preprocess[[1]]$Selected_cov_boruta)) {

cov <- list()

for (depth in increments) {

cov[[depth]] <- colnames(Preprocess[[depth]]$Selected_cov_boruta[[i]][1:length(Preprocess[[depth]]$Selected_cov_boruta[[i]])-1])

}

cov_combinned <- do.call(c, cov)

cov_table <- conversion[cov_combinned]

cov_table <- table(cov_table)

df_top5 <- as.data.frame(

head(sort(cov_table, decreasing = TRUE), 5)

)

colnames(df_top5) <- c("value", "occurrence")

cov_list[[i]] <- data.frame(variable = colnames(Preprocess[[depth]]$Selected_cov_boruta[[i]][length(Preprocess[[depth]]$Selected_cov_boruta[[i]])]),

num.cov = c(paste0(length(Preprocess[["0_10"]]$Selected_cov_boruta[[i]])-1, "; ", length(Preprocess[["10_30"]]$Selected_cov_boruta[[i]])-1, "; ",

length(Preprocess[["30_50"]]$Selected_cov_boruta[[i]])-1, "; ", length(Preprocess[["50_70"]]$Selected_cov_boruta[[i]])-1, "; ",

length(Preprocess[["70_100"]]$Selected_cov_boruta[[i]])-1)),

top.cov = c(paste0(df_top5[1,1], "; ", df_top5[2,1], "; ", df_top5[3,1], "; ", df_top5[4,1], "; ", df_top5[5,1], "; "))

)

}

cov_combinned <- do.call(rbind, cov_list)

write.table(cov_combinned, "./export/boruta/Factors_selection.txt")

# For RFE

for (i in 1:length(Preprocess[[1]]$Selected_cov_RFE)) {

cov <- list()

for (depth in increments) {

cov[[depth]] <- colnames(Preprocess[[depth]]$Selected_cov_RFE[[i]][1:length(Preprocess[[depth]]$Selected_cov_RFE[[i]])-1])

}

cov_combinned <- do.call(c, cov)

cov_table <- conversion[cov_combinned]

cov_table <- table(cov_table)

df_top5 <- as.data.frame(

head(sort(cov_table, decreasing = TRUE), 5)

)

colnames(df_top5) <- c("value", "occurrence")

cov_list[[i]] <- data.frame(variable = colnames(Preprocess[[depth]]$Selected_cov_RFE[[i]][length(Preprocess[[depth]]$Selected_cov_RFE[[i]])]),

num.cov = c(paste0(length(Preprocess[["0_10"]]$Selected_cov_RFE[[i]])-1, "; ", length(Preprocess[["10_30"]]$Selected_cov_RFE[[i]])-1, "; ",

length(Preprocess[["30_50"]]$Selected_cov_RFE[[i]])-1, "; ", length(Preprocess[["50_70"]]$Selected_cov_RFE[[i]])-1, "; ",

length(Preprocess[["70_100"]]$Selected_cov_RFE[[i]])-1)),

top.cov = c(paste0(df_top5[1,1], "; ", df_top5[2,1], "; ", df_top5[3,1], "; ", df_top5[4,1], "; ", df_top5[5,1], "; "))

)

}

cov_combinned <- do.call(rbind, cov_list)

write.table(cov_combinned, "./export/RFE/Factors_selection.txt")7.3 Models developpement

7.3.1 Preparation of the environment

# 0 Environment setup ##########################################################

# 0.1 Prepare environment ======================================================

# Folder check

getwd()

# Set folder direction

setwd()

# Clean up workspace

rm(list = ls(all.names = TRUE))

# 0.2 Install packages =========================================================

install.packages("pacman")

#Install and load the "pacman" package (allow easier download of packages)

library(pacman)

pacman::p_load(ggplot2, caret, patchwork, quantregForest, readr, grid, gridExtra,

dplyr, reshape2, cli, doParallel, compositions)

# 0.3 Show session infos =======================================================R version 4.5.1 (2025-06-13 ucrt)

Platform: x86_64-w64-mingw32/x64

Running under: Windows 11 x64 (build 26200)

Matrix products: default

LAPACK version 3.12.1

locale:

[1] LC_COLLATE=French_France.utf8 LC_CTYPE=French_France.utf8 LC_MONETARY=French_France.utf8 LC_NUMERIC=C

[5] LC_TIME=French_France.utf8

time zone: Europe/Paris

tzcode source: internal

attached base packages:

[1] grid parallel stats graphics grDevices utils datasets methods base

other attached packages:

[1] compositions_2.0-9 doParallel_1.0.17 iterators_1.0.14 foreach_1.5.2 cli_3.6.5 reshape2_1.4.5

[7] dplyr_1.1.4 gridExtra_2.3 readr_2.1.6 quantregForest_1.3-7.1 RColorBrewer_1.1-3 randomForest_4.7-1.2

[13] patchwork_1.3.2 caret_7.0-1 lattice_0.22-7 ggplot2_4.0.1

loaded via a namespace (and not attached):

[1] DBI_1.2.3 pROC_1.19.0.1 tcltk_4.5.1 rlang_1.1.7 magrittr_2.0.4 otel_0.2.0

[7] e1071_1.7-17 compiler_4.5.1 png_0.1-8 vctrs_0.7.0 stringr_1.6.0 pkgconfig_2.0.3

[13] fastmap_1.2.0 leafem_0.2.5 rmarkdown_2.30 tzdb_0.5.0 prodlim_2025.04.28 purrr_1.2.1

[19] xfun_0.56 satellite_1.0.6 recipes_1.3.1 terra_1.8-93 R6_2.6.1 stringi_1.8.7

[25] parallelly_1.46.1 rpart_4.1.24 lubridate_1.9.4 Rcpp_1.1.1 bookdown_0.46 knitr_1.51

[31] future.apply_1.20.1 base64enc_0.1-3 pacman_0.5.1 Matrix_1.7-4 splines_4.5.1 nnet_7.3-20

[37] timechange_0.3.0 tidyselect_1.2.1 rstudioapi_0.18.0 dichromat_2.0-0.1 yaml_2.3.12 timeDate_4051.111

[43] codetools_0.2-20 listenv_0.10.0 tibble_3.3.1 plyr_1.8.9 withr_3.0.2 S7_0.2.1

[49] evaluate_1.0.5 future_1.69.0 survival_3.8-6 sf_1.0-24 bayesm_3.1-7 units_1.0-0

[55] proxy_0.4-29 pillar_1.11.1 tensorA_0.36.2.1 rsconnect_1.7.0 KernSmooth_2.23-26 stats4_4.5.1

[61] generics_0.1.4 sp_2.2-0 hms_1.1.4 scales_1.4.0 globals_0.18.0 class_7.3-23

[67] glue_1.8.0 tools_4.5.1 robustbase_0.99-6 data.table_1.18.0 ModelMetrics_1.2.2.2 gower_1.0.2

[73] crosstalk_1.2.2 ipred_0.9-15 nlme_3.1-168 raster_3.6-32 lava_1.8.2 DEoptimR_1.1-4

[79] gtable_0.3.6 digest_0.6.39 classInt_0.4-11 htmlwidgets_1.6.4 farver_2.1.2 htmltools_0.5.9

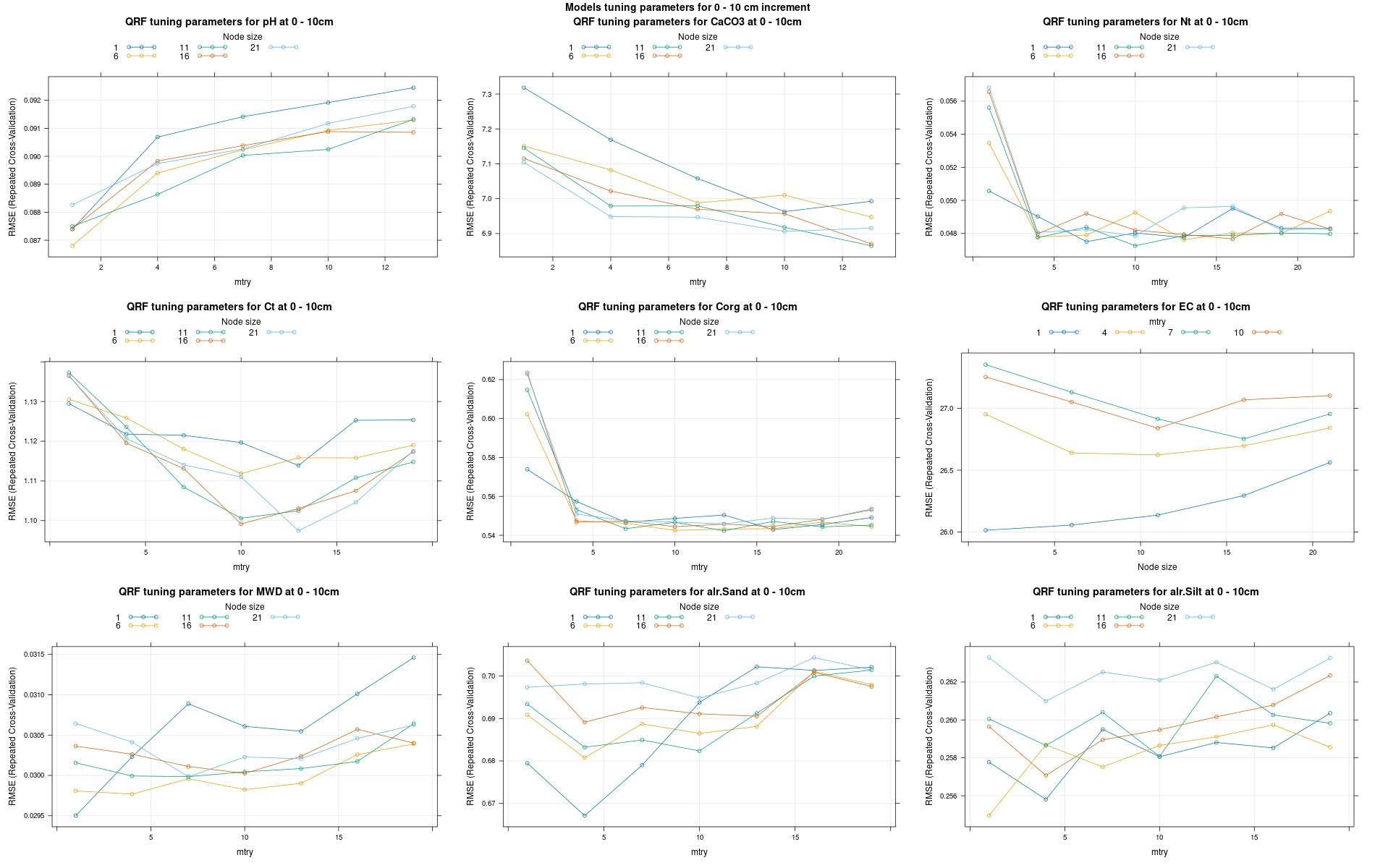

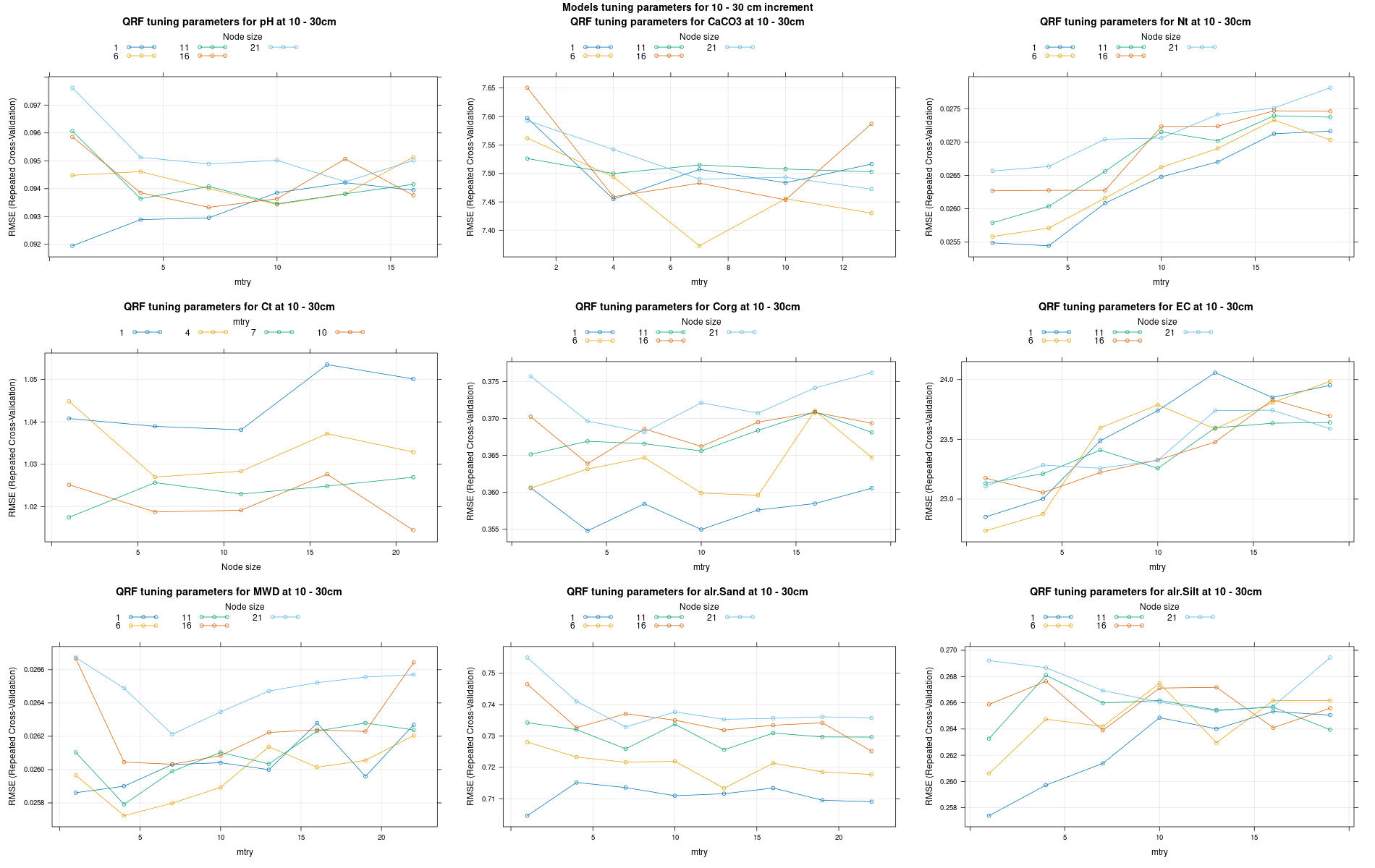

[85] lifecycle_1.0.5 leaflet_2.2.3 hardhat_1.4.2 MASS_7.3-65 7.3.2 Custom QRF model

library(caret)

library(quantregForest)

qrf_caret <- list(

type = "Regression",

library = "quantregForest",

parameters = data.frame(

parameter = c("mtry", "nodesize"),

class = c("numeric", "numeric"),

label = c("mtry", "Node size")

),

grid = function(x, y, len = NULL, search = "grid") {

expand.grid(

mtry = unique(pmax(1, floor(seq(1, ncol(x), length.out = len)))),

nodesize = c(5, 10, 15)

)

},

fit = function(x, y, wts, param, lev, last, classProbs, ...) {

quantregForest(

x = x,

y = y,

mtry = param$mtry,

nodesize = param$nodesize,

keep.inbag = TRUE,

...

)

},

predict = function(modelFit, newdata, submodels = NULL) {

predict(modelFit, newdata, what = c(0.5, 0.05, 0.95))

},

prob = NULL,

sort = function(x) x[order(x$mtry), ],

levels = function(x) NULL

)7.3.3 Run the models

# 04 Model tuning and run #####################################################

# Here we decided to split every run by soil depth to have a better vision

# on the running process.

# 04.1 Load the data ===========================================================

make_subdir <- function(parent_dir, subdir_name) {

path <- file.path(parent_dir, subdir_name)

if (!dir.exists(path)) {

dir.create(path, recursive = TRUE)

message("✅ Folder created", path)

} else {

message("ℹ️ Existing folder : ", path)

}

return(path)

}

make_subdir("./export", "models")

increments <- c("0_10", "10_30", "30_50", "50_70", "70_100")

load(file = "./export/save/Preprocess.RData")

# 04.2 Prepare the data ========================================================

seed <- 1070

Models <- list()

cl <- makeCluster(6)

registerDoParallel(cl)

source("./script/QRF_models.R")

for (depth in increments) {

SoilCovML <- Preprocess[[depth]]$Selected_cov_boruta

depth_name <- gsub("_", " - ", depth)

make_subdir("./export/models", depth)

FormulaML <- list()

for (i in 1:length(SoilCovML)) {

SoilCovMLBoruta <- SoilCovML[[i]]

StartTargetCov = ncol(SoilCovMLBoruta)

NumCovLayer = StartTargetCov - 1

FormulaML[[i]] <- as.formula(paste(names(SoilCovMLBoruta)[StartTargetCov]," ~ ",paste(names(SoilCovMLBoruta)[1:NumCovLayer],collapse="+")))

}

# 04.3 Run the models ========================================================

cli_progress_bar(

format = "Models {.val {depth}} {.val {variable}} {cli::pb_bar} {cli::pb_percent} [{cli::pb_current}/{cli::pb_total}] | \ ETA: {cli::pb_eta} - Time elapsed: {cli::pb_elapsed_clock}",

total = length(SoilCovML),

clear = FALSE

)

# Train control

set.seed(seed)